Recent advances in AI and machine learning have propelled computer vision, the ability of machines to process and classify objects in images using trained algorithms. Significant improvements in computing power, cost, and the size of peripheral equipment have made these technologies more accessible and sped up progress.

As a result, the computer vision market is growing steadily. It was estimated at $27.3 billion in 2019 and is projected to reach $53 billion by 2025. This growth is driven by the high demand for wearables and smartphones, drones (consumer and military), autonomous vehicles, and the introduction of Industry 4.0 and automation in various spheres.

Given the potential of computer vision, organizations are actively investing in image recognition to discern and analyze data coming from visual sources. Typical purposes include medical image analysis, face detection for security, and object recognition in autonomous vehicles.

This article explains what image recognition is and how it relates to computer vision. You will learn how neural networks work with images. Finally, we will look at how this technology is used across industries.

What is Meant by Image Recognition?

We live in a world full of things that we must recognize, classify, and understand. And the human brain does it subconsciously and automatically. This ability is remarkable, given that a dynamically changing environment constantly provides visual information that we associate with our internal representations of objects, categories, and concepts.

Despite all the technological innovations, computers still cannot boast the same recognition abilities as humans. AI can identify patterns in data that are relevant to the task at hand. And unlike humans, AI never gets physically tired: as long as it receives data, it will continue to work. But human capabilities are more extensive and do not require a constant stream of external data, as artificial intelligence does.

However, as far as image recognition is concerned, the forecasts are quite optimistic. Computer engineers are trying to teach AI to interpret images in the same way that the human brain does. And in some tasks, AI can already analyze images more thoroughly than we can.

What is Image Recognition and How Does it Work?

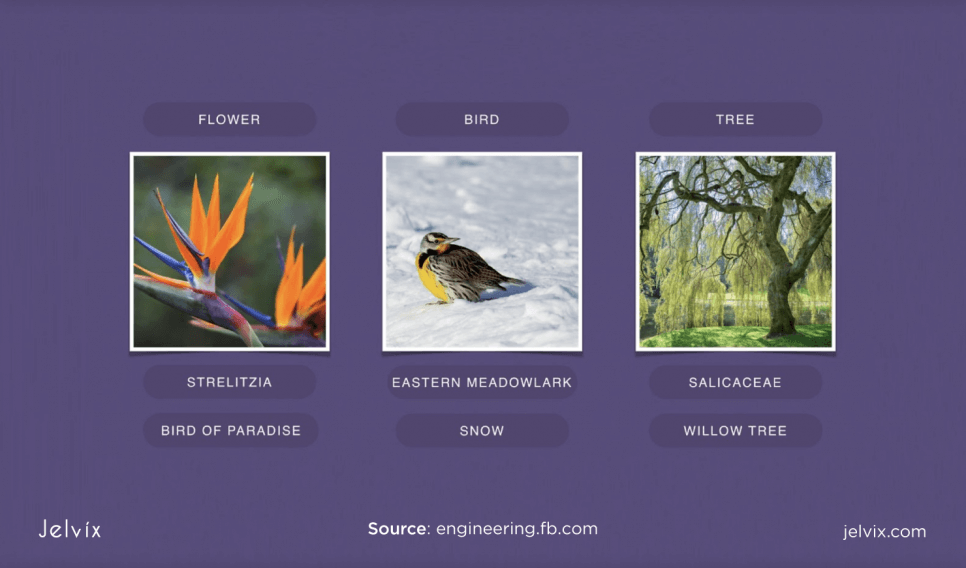

As a part of computer vision technology, image recognition is a set of algorithms and methods that analyze images and find features specific to them. It can use these learned features to solve various tasks, such as automatically classifying images into multiple categories and understanding what objects are present in the picture.

To detect certain objects, a model must first be trained on labeled data: images containing the objects of interest, along with their locations and class labels. How many images do you need for this? The more, the better.

Traditional Computer Vision

The traditional approach to image recognition consists of image filtering, segmentation, feature extraction, and rule-based classification. But this method requires substantial domain expertise and a lot of engineering time. Many parameters must be set manually, and the resulting system is hard to port to other tasks.

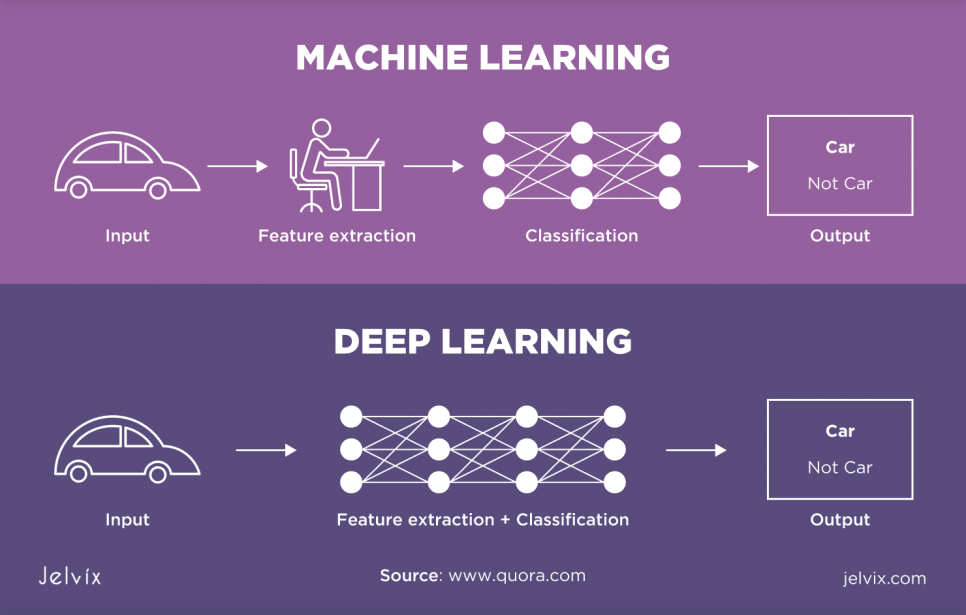

Machine Learning and Deep Learning

The introduction of deep learning, which uses multiple hidden layers in the model, has provided a big breakthrough in image recognition. Thanks to deep learning, image classification and face recognition algorithms have achieved above-human-level performance on some benchmarks and can detect objects in real time.

With enough training data and time, AI algorithms for image recognition can make fairly accurate predictions. This accuracy comes primarily from the effort invested in training machine learning models for image recognition.

Image Recognition Task Categories

Image recognition covers several distinct tasks. Let’s take a more detailed look at them.

Object Detection

People often confuse object detection with image classification, but the difference is simple. If you need to know what an image contains, use classification. If you also need to know where each object is, use object detection.

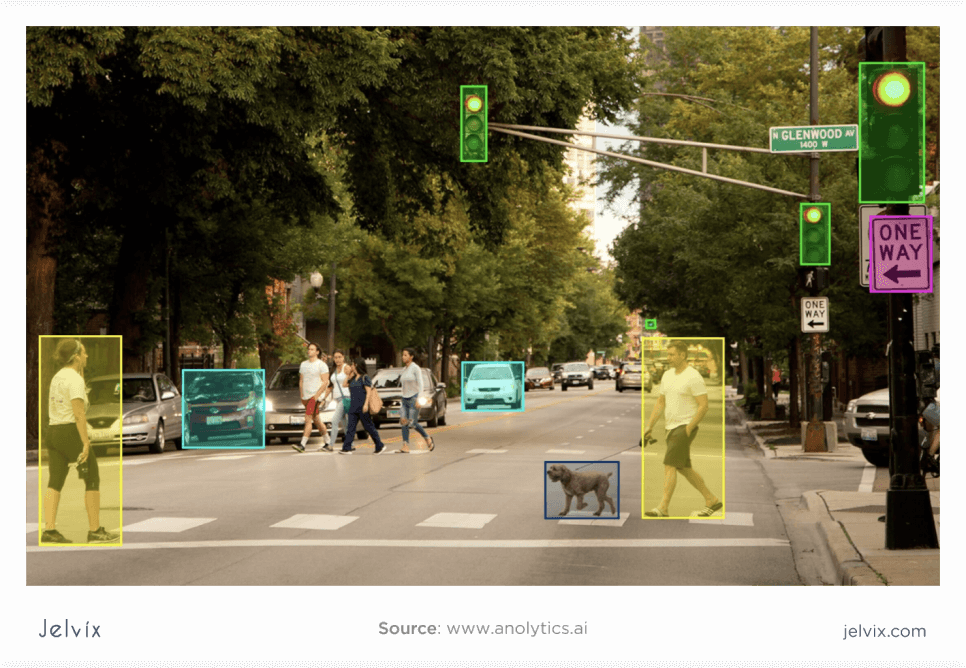

While an object classification network can tell whether an image contains a particular object, it will not tell you where that object is in the image. Object detection networks provide both the class of each object in a picture and the bounding box that gives the object’s coordinates. Object detection is the first task performed in many computer vision systems because it yields additional information about each detected object and its location.

People use object detection methods in real projects, such as face and pedestrian detection, vehicle and traffic sign detection, video surveillance, etc. For example, the detector will find pedestrians, cars, road signs, and traffic lights in one image. But it will not tell you which road sign it is (there are hundreds of them), which light is on at the traffic lights, which brand or color of a car is detected, etc.
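To make the difference concrete, here is a minimal sketch of what a detector’s output might look like. The `Detection` class, the labels, the scores, and the pixel coordinates are all hypothetical; real detection libraries use their own output formats.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str    # predicted class of the object
    score: float  # model confidence between 0 and 1
    box: tuple    # (x_min, y_min, x_max, y_max) in pixels

def box_area(box):
    """Area of a bounding box in pixels; zero if the box is degenerate."""
    x_min, y_min, x_max, y_max = box
    return max(0, x_max - x_min) * max(0, y_max - y_min)

def filter_detections(detections, min_score=0.5):
    """Keep only detections the model is reasonably confident about."""
    return [d for d in detections if d.score >= min_score]

# Hypothetical detector output for one street image.
detections = [
    Detection("pedestrian", 0.91, (34, 50, 80, 190)),
    Detection("car", 0.88, (120, 90, 320, 210)),
    Detection("traffic_sign", 0.32, (400, 20, 430, 55)),
]

confident = filter_detections(detections)
for d in confident:
    print(d.label, d.box, box_area(d.box))
```

A plain classifier would return only the labels. The bounding boxes are what lets a later stage crop, measure, or follow each object.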

Image Classification

In such a pipeline, the classifier takes the detector’s output as its input. If the object detection network detects a road sign, the detected region is passed to a classifier trained to classify road signs. And if the detector finds a car, the result is given to another classifier trained to classify cars. Next, the classifier associates one or more labels with the given image.

- Single-label classification is the most common task in supervised image classification. As the name suggests, there is one label or annotation for each image in single-label classification. So the model outputs one value or prediction for every image it sees.
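The hand-off from detector to classifier can be sketched as a simple lookup. Everything here is illustrative: the classifier functions are hypothetical stand-ins that return fixed labels, and strings stand in for cropped image regions.

```python
def classify_road_sign(region):
    """Hypothetical stand-in for a classifier trained on road signs."""
    return "speed_limit_50"

def classify_car(region):
    """Hypothetical stand-in for a classifier trained on car models."""
    return "sedan"

# Map each detector label to the specialized classifier that handles it.
CLASSIFIERS = {
    "road_sign": classify_road_sign,
    "car": classify_car,
}

def refine(detections):
    """Attach a fine-grained label to each (label, region) detection."""
    results = []
    for label, region in detections:
        classifier = CLASSIFIERS.get(label)
        # Labels without a dedicated classifier keep only the coarse label.
        fine_label = classifier(region) if classifier else None
        results.append((label, fine_label))
    return results

# Regions would normally be image crops; strings stand in for them here.
detected = [("road_sign", "crop_0"), ("car", "crop_1"), ("pedestrian", "crop_2")]
refined = refine(detected)
print(refined)
```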

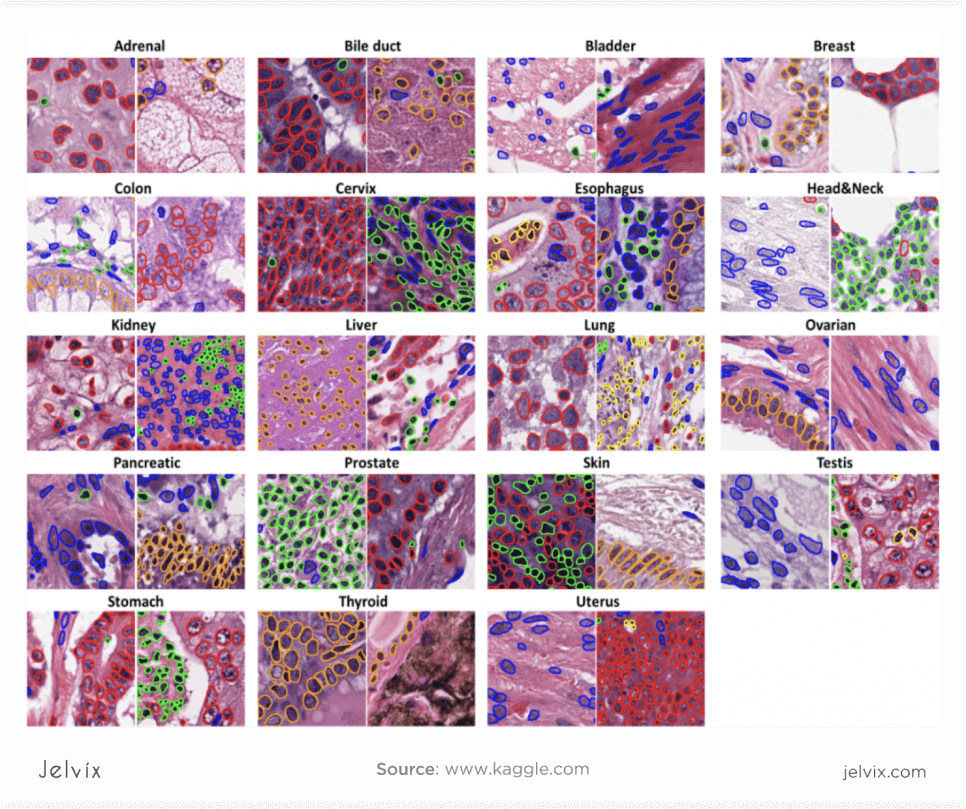

- Multi-label classification is a task where each image can carry more than one label, and some images can carry all labels simultaneously. Although similar to single-label classification, the problem is considerably more complex. Multi-label classification problems are common in medical imaging, where a patient may have more than one disease that must be diagnosed from imaging data such as X-rays.
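One common way to implement the two variants, shown here as a sketch and not as the only option, is to pick the highest softmax probability for single-label output and to threshold an independent sigmoid per label for multi-label output. The label names, raw scores, and the 0.5 threshold below are assumptions made for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(score):
    """Turn one raw score into an independent probability."""
    return 1.0 / (1.0 + math.exp(-score))

def single_label(scores, labels):
    """Return exactly one label: the one with the highest probability."""
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best]

def multi_label(scores, labels, threshold=0.5):
    """Return every label whose own probability clears the threshold."""
    return [lab for s, lab in zip(scores, labels) if sigmoid(s) >= threshold]

labels = ["pneumonia", "fracture", "nodule"]
raw_scores = [2.1, -1.3, 0.4]  # hypothetical model outputs for one X-ray

print(single_label(raw_scores, labels))
print(multi_label(raw_scores, labels))
```

With these scores, the single-label variant returns only `pneumonia`, while the multi-label variant returns both `pneumonia` and `nodule`, because each label is judged on its own.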

Segmentation

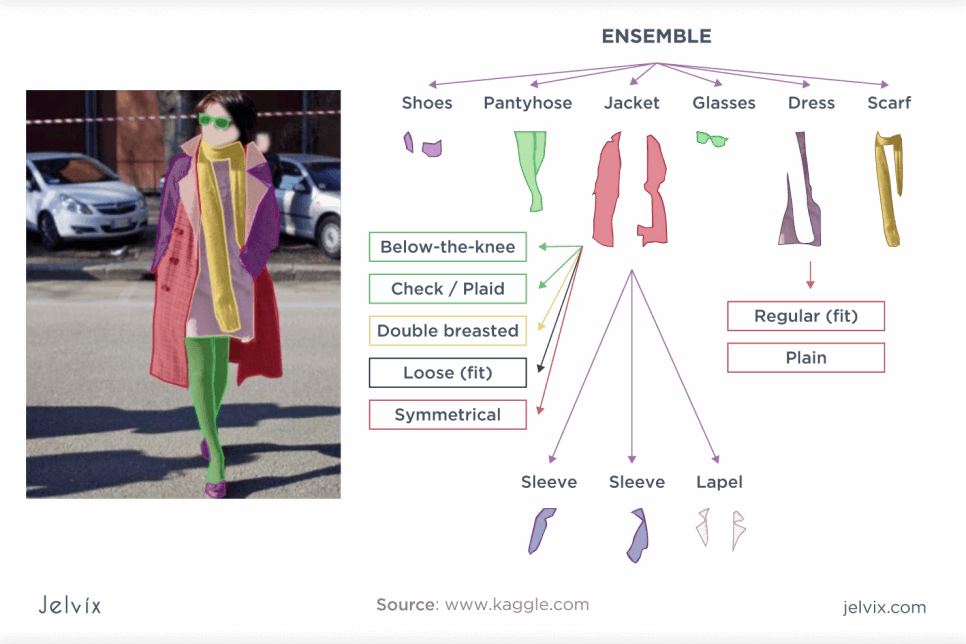

Image segmentation is a method of processing and analyzing a digital image by dividing it into multiple parts or regions. By dividing the image into segments, you can process only the important elements instead of processing the entire picture.

Image segmentation may include separating foreground from background or clustering regions of pixels based on color or shape similarity. For example, a common application of image segmentation in medical imaging is detecting and labeling image pixels or 3D volumetric voxels that represent a tumor in a patient’s brain or other organs.
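The simplest form of foreground and background separation is thresholding, sketched below on a tiny grayscale image. The pixel values and the threshold of 128 are made up for illustration, and production systems typically use far more capable methods.

```python
def segment_foreground(image, threshold):
    """Return a binary mask: 1 where a pixel is brighter than the threshold."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]

def foreground_fraction(mask):
    """Share of pixels that were assigned to the foreground."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total

# A 4x4 grayscale image with a bright 2x2 object in the middle.
image = [
    [10,  12,  11,  9],
    [13, 200, 210, 10],
    [12, 205, 220, 11],
    [ 9,  10,  12, 10],
]

mask = segment_foreground(image, threshold=128)
for row in mask:
    print(row)
print(foreground_fraction(mask))
```

The mask marks the four bright pixels as foreground, so later processing can focus on a quarter of the image instead of all of it.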

The following tasks are also worth mentioning:

Object tracking means following an object after it has been detected. This task applies to images taken in sequence or to live video streams. Autonomous vehicles, for example, must not only classify and detect objects such as other vehicles, pedestrians, and road infrastructure but also be able to do so while moving to avoid collisions.

Content-based image search, in turn, uses computer vision to search, view, and retrieve images from data stores based on content rather than their associated metadata tags. This task may include automatic image annotation, which replaces manual image labeling and is used for digital asset management systems to improve search and retrieval accuracy.

What does “annotating an image” mean?

Image annotation is the process of labeling images, performed by a human annotator, often with the help of an ML-based annotation program that speeds up the work. Labels are needed to provide the computer vision model with information about what is shown in the image. The image labeling process also helps improve the overall accuracy and validity of the model.

There are companies that annotate data for customers (Annotell, Scale) and services (Amazon’s MTurk) that offer a platform so that the customer and the annotator can find each other.

Image Recognition System Processes

Typically, an image recognition task involves building a neural network (NN) that processes the individual pixels of an image. These networks are trained on as many pre-labeled images as possible to “teach” them to identify similar images.

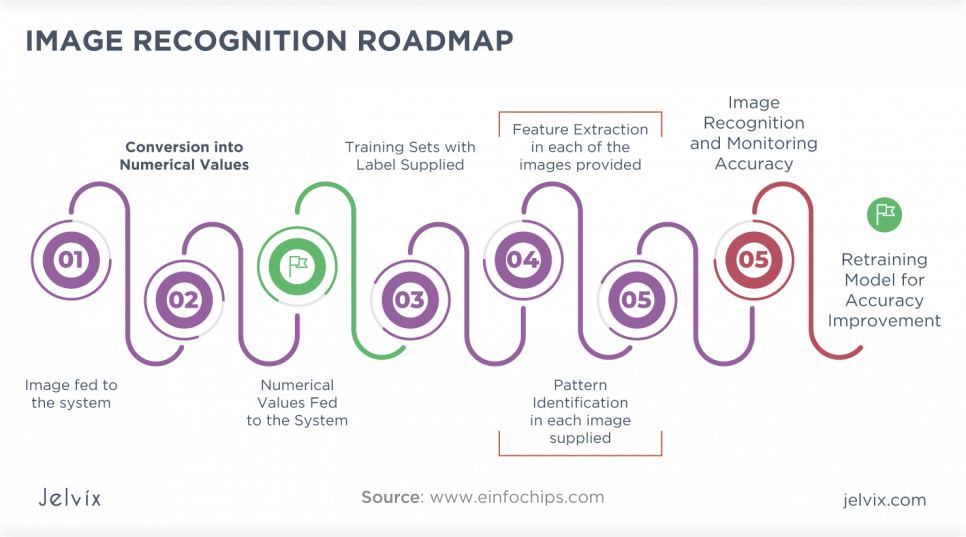

How Do Neural Networks Work With Images?

A digital picture is a matrix of numbers. Each number represents the intensity of a pixel. The intensities of a pixel’s color channels can also be averaged into a single value, and the result is again expressed as a matrix. So the data fed into the recognition system is the location and intensity of each pixel in the image. Computers examine these arrays of numerical values, searching for patterns that help them recognize and distinguish the image’s key features.
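As a rough illustration of an image as a matrix, the sketch below stores a 2x2 color image as nested lists of (R, G, B) intensities and averages each pixel’s channels into one value. Plain averaging is only one assumed way to collapse the channels, and real pipelines often use weighted formulas instead.

```python
def to_grayscale(rgb_image):
    """Average the R, G, B intensities of every pixel into one value."""
    return [[sum(pixel) / 3 for pixel in row] for row in rgb_image]

# A 2x2 image: red, green, blue, and white pixels (intensities 0 to 255).
rgb_image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

gray = to_grayscale(rgb_image)
for row in gray:
    print(row)
```

The position of each number in the matrix encodes where the pixel is, and the number itself encodes how bright it is.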

Prepare Data

The process starts with collecting images and annotating them. It may also include pre-processing steps to make photos more consistent for a more accurate model.
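One typical pre-processing step is scaling pixel intensities to a common range so that every photo reaches the model in the same numeric form. The sketch below assumes 8-bit grayscale input (values from 0 to 255); the function name is illustrative.

```python
def normalize(image, max_value=255):
    """Scale pixel intensities from [0, max_value] to [0.0, 1.0]."""
    return [[pixel / max_value for pixel in row] for row in image]

image = [
    [0, 51],
    [102, 255],
]

normalized = normalize(image)
for row in normalized:
    print(row)
```

Other steps, such as resizing or cropping all photos to the same dimensions, follow the same idea of making the inputs consistent.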

Create and Train a DL Model

While you can build a deep learning model from scratch, it is often better to start with a pre-trained model for your application. Photos from the prepared dataset are fed into the neural network. As the data passes through the network layer by layer, the NN begins to recognize patterns and thus objects in images. The model iterates over the data multiple times and automatically learns the most important features relevant to the pictures. As the training continues, the model learns more sophisticated features until it can accurately distinguish between the classes of images in the training set.

Test the AI Model

The model is tested with images that are not part of the training dataset. Typically, about 80% of the complete image dataset is used for model training, and the rest is reserved for model testing. Testing is necessary to determine the model’s usability, performance, and accuracy.
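The 80/20 split can be sketched in a few lines. The file names are hypothetical placeholders, and the fixed seed is only there to make the shuffle repeatable.

```python
import random

def train_test_split(items, train_fraction=0.8, seed=0):
    """Shuffle the items and cut them into training and test subsets."""
    shuffled = list(items)                 # copy so the input is not modified
    random.Random(seed).shuffle(shuffled)  # seeded for repeatable splits
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical dataset of ten image files.
image_paths = [f"img_{i:03d}.png" for i in range(10)]

train, test = train_test_split(image_paths)
print(len(train), "training images,", len(test), "test images")
```

Shuffling before the cut matters: if the files are ordered by class or by capture date, an unshuffled split would test the model on a different kind of image than it was trained on.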

Image Recognition Problems

However, not everything is as smooth as we would like it to be in image recognition. Here are some challenges the models face:

Occlusion

If anything blocks a full view of the object, incomplete information enters the system. The algorithm must be robust to such occlusions, which requires training on a wide range of sample data.

Intraclass Variation

Specific objects within a class may vary in size and shape yet still represent the same class. For example, tables, bottles, and buttons each come in many different shapes and sizes.

Deformation

An object remains the same object even when it is deformed. When the system learns and analyzes images, it remembers the specific shape of a particular object. But if the object’s form changes, the system may produce erroneous results.

Viewpoint Variation

In real cases, the objects in an image can be oriented in various directions. When such photos are fed as input to an image recognition system, the system may predict incorrect values. A system that cannot account for changes in orientation therefore faces a major image recognition problem.

Image Recognition Use Cases

There is a reason why image recognition has become an important technology for modern AI: it has the potential to be used in a wide variety of industries.

Agriculture

The use of CV technologies in conjunction with global positioning systems allows for precision farming, which can significantly increase the yield and efficiency of agriculture. Companies can analyze images of crops taken from drones, satellites, or aircraft to collect yield data, detect weed growth, or identify nutrient deficiencies.

Healthcare

Since 90% of all medical data is based on images, computer vision is also used in medicine. Its applications range from new diagnostic methods that analyze X-rays, mammograms, and other scans to patient monitoring for early detection of problems and surgical care.

Medical images often contain fine details that CV systems can recognize with a high degree of certainty. In addition, AI systems can compare the image with thousands of similar images in the database of the medical system, and a medical specialist can use the result of the comparison to make a more accurate diagnosis.

Autonomous Vehicles

Computer vision is one of the essential components of autonomous driving technology, including improved safety features.

Self-driving cars from Volvo, Audi, Tesla, and BMW use cameras, lidar, radar, and ultrasonic sensors to capture images of the environment. They can detect markings, signs, and traffic lights for safe driving. In addition, AI is already being used to identify objects on the road, including other vehicles, sharp curves, people, footpaths, and moving objects in general. But the technology must be improved, as there have been several reported incidents involving autonomous vehicle crashes.

Augmented Reality Gaming and Applications

The gaming industry has begun to use image recognition technology in combination with augmented reality as it helps to provide gamers with a realistic experience. Developers can now use image recognition to create realistic game environments and characters. Various non-gaming augmented reality applications also support image recognition. Examples include Blippar and CrowdOptics, augmented reality advertising and crowd monitoring apps.

Production

Computer vision has significantly expanded the possibilities of flaw detection in industry. The technology now makes it possible to control quality not only after a product is manufactured but also directly during the production process.

Photo, Video, and Entertainment

The entertainment and media industry works with thousands of images and hours of video. Image recognition can greatly simplify the cataloging of stock images and automate content moderation to prevent the publication of prohibited content on social networks. Deep learning algorithms also help detect fake content created using other algorithms.

Security

This technology plays a vital role in the security industry. Whether it’s an office, smartphone, bank, or home, recognition functionality is built into many software products. It also powers various security devices, including drones, CCTV cameras, biometric facial recognition devices, etc.

None of these projects would be possible without image recognition technology. And we are sure that if you are interested in AI, you will find a great use case in image recognition for your business.

Conclusion

Today, vast amounts of visual data have been accumulated as digital images, videos, and 3D data. The goal is to capitalize on this data efficiently and cost-effectively.

At Jelvix, we develop complete, modular image recognition solutions for organizations seeking to extract useful information and value from their visual data. They are flexible in deployment and use existing on-premises infrastructure or cloud interfaces to automatically discover, identify, analyze, and visually interpret data.

We can also incorporate image recognition into existing solutions or use it to create a specific feature for your business. Don’t wait until your competitors are the first to use this technology! Contact us to get more out of your visual data and improve your business with AI and image recognition.
