The healthcare sector has entered an era of accelerated AI adoption, one that’s evolving far faster than previous transformations like the move to electronic health records (EHRs). Spurred by the accessibility of large language models (LLMs) and the competitive mandate to modernize care delivery, decision-makers across Payers, Providers, and Pharma are now prioritizing AI development with board-level visibility and budgetary backing.

In 2024, AI-focused digital health startups got 42% of all funding around the world, up from only 7% in 2015. The Healthcare AI Adoption Index, which was created by Bessemer Venture Partners, AWS, and Bain & Company, reinforces these numbers.

A survey of 400 healthcare leaders found that 95% think GenAI will change how clinical decisions are made in three to five years. But only 30% of AI pilots make it to production right now. Why? There are a number of technical, cultural, and infrastructural problems that are getting in the way, including data readiness.

McKinsey & Company’s research shows that more than 60% of healthcare executives say their AI budgets are growing faster than their general IT budgets, and more than half expect to see a significant return on investment within the first year of use. Even so, only 14% of healthcare organizations in the U.S. are using more than one GenAI model in production.

However, a significantly bigger group, almost 35%, is currently experimenting with or just starting to use GenAI models. Clinicians around the world employ AI in different ways, but the Asia-Pacific region is the most advanced in terms of real-world use.

Even though everyone is excited, enthusiasm alone doesn’t make a difference. Healthcare AI adoption is stalling in all areas, not because there isn’t enough money or interest from leaders, but because of basic issues, especially the difficulty of preparing AI-ready data.

Security concerns, high integration costs, and a lack of in-house expertise are all important. Yet, the biggest technical challenge is still having data that is incomplete, not standardized, or even missing. Nearly half of the Pharma executives who took the Index survey said that data quality and availability were the main problems that kept them from scaling AI solutions.

Providers and payers say they have similar worries, though the level of fragmentation and complexity differs from case to case. That’s what we’re going to review in this article: data in healthcare, its AI preparation, and everything it takes to get it right.

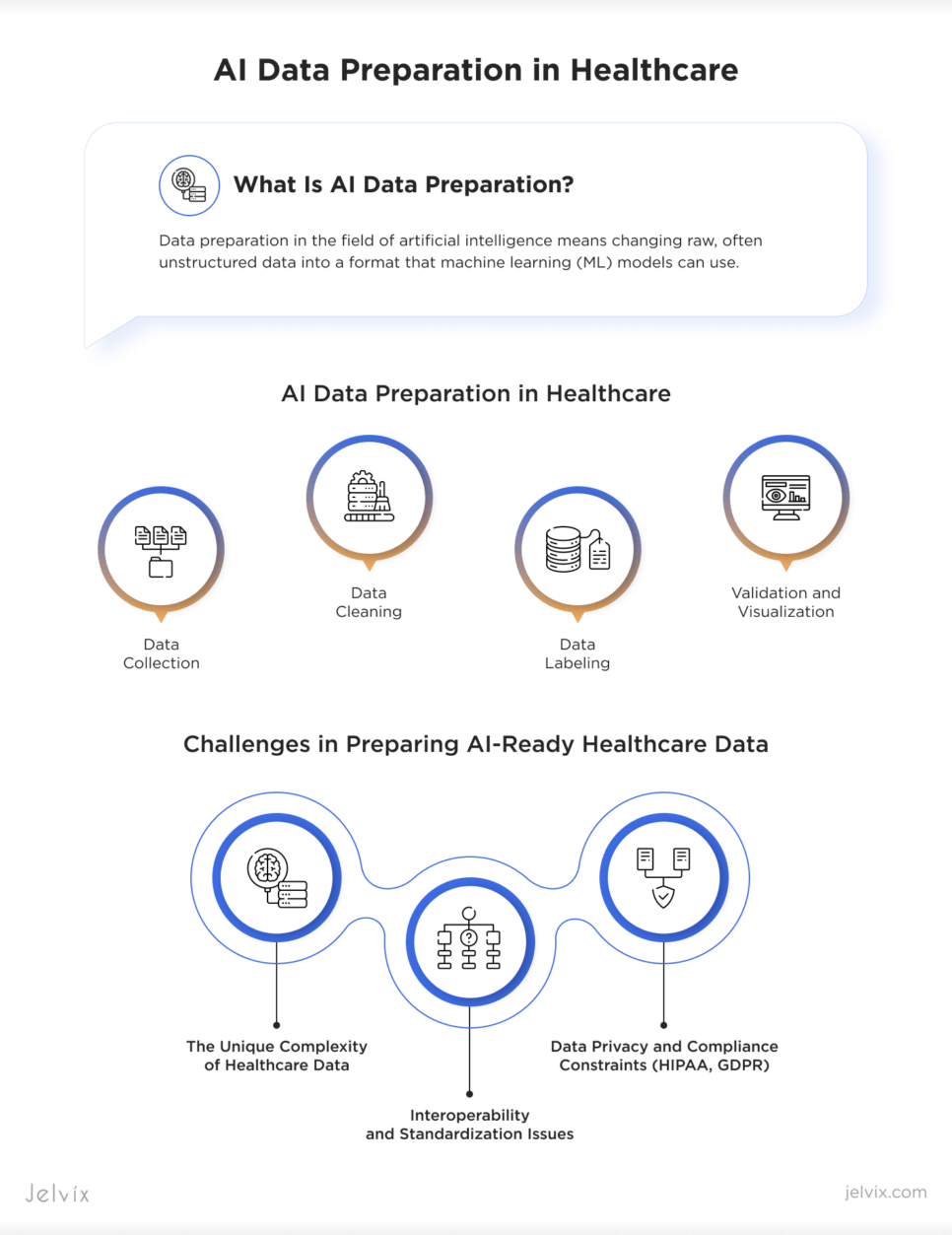

What Is AI Data Preparation?

Data preparation in the field of artificial intelligence means changing raw, often unstructured data into a format that machine learning (ML) models can use. This stage is very important in healthcare because data comes in from many different systems, such as electronic health records, imaging platforms, lab tests, patient wearables, and more.

Key Stages of the Data Preparation Process

The healthcare sector brings unique demands to every stage of the AI data pipeline.

- Data Collection

Data needs to be collected from a number of places, such as legacy EHR systems, imaging archives, cloud-based lab tools, IoT medical devices, and billing applications for administrative purposes. There are issues with data that is stored in silos, formats that don’t match, and standards that don’t operate together, such as HL7, FHIR, and DICOM.

Knowing where the data is and whether it can be accessed securely and ethically is the first step in understanding how to prepare data for machine learning.


- Data Cleaning

Errors in clinical data are hard to spot and are typically deeply embedded. At this point, you need to fix duplicate entries, missing values, wrong timestamps, coding standards that don’t match (like ICD-10 vs. SNOMED), and outliers. Cleaning ensures that the data being fed into models won’t skew results, trigger bias, or generate medically unsafe recommendations.

- Data Labeling

Labeling in healthcare is particularly nuanced. It could mean tagging pictures for signs of pneumonia, finding mentions of medications in unstructured doctor notes, or annotating EEG signals for potential seizures. For training supervised models, you need high-quality annotated datasets. However, labeling them requires a significant amount of time and money, as it necessitates input from clinical experts.

- Validation and Visualization

Before training can start, datasets need to be checked for accuracy both statistically and clinically. This means looking at distributions, finding biases, and making sure that the sample is diverse across different groups of people. Visualizations help teams understand how data changes over time and if variables are related in a meaningful way.

Histograms can show that some age groups of patients are not well represented, and scatter plots can show that there are gaps in the documentation of outcomes.
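The cleaning and validation stages above can be illustrated with a minimal sketch. It assumes records arrive as plain dictionaries with hypothetical field names (`patient_id`, `code`, `recorded_at`, `age`); the sample rows are invented for illustration, and a production pipeline would apply many more checks.

```python
from collections import Counter
from datetime import datetime


def clean_records(records):
    """Drop duplicate entries and rows whose timestamp is missing or malformed."""
    seen = set()
    cleaned = []
    for rec in records:
        key = (rec.get("patient_id"), rec.get("code"), rec.get("recorded_at"))
        if key in seen:
            continue  # duplicate entry
        seen.add(key)
        try:
            datetime.fromisoformat(rec["recorded_at"])
        except (KeyError, TypeError, ValueError):
            continue  # missing or unparseable timestamp
        cleaned.append(rec)
    return cleaned


def age_band_counts(records, band_width=20):
    """Count patients per age band to expose under-represented groups."""
    counts = Counter()
    for rec in records:
        low = (rec["age"] // band_width) * band_width
        counts[f"{low}-{low + band_width - 1}"] += 1
    return counts


def text_histogram(counts):
    """Render the age-band counts as a simple text histogram."""
    bands = sorted(counts, key=lambda band: int(band.split("-")[0]))
    return "\n".join(f"{band:>7} | {'#' * counts[band]}" for band in bands)


# Invented sample rows (hypothetical field names).
records = [
    {"patient_id": "p1", "code": "I10", "recorded_at": "2024-03-01T09:30:00", "age": 34},
    {"patient_id": "p1", "code": "I10", "recorded_at": "2024-03-01T09:30:00", "age": 34},
    {"patient_id": "p2", "code": "E11.9", "recorded_at": "not-a-date", "age": 51},
    {"patient_id": "p3", "code": "E11.9", "recorded_at": "2024-03-02T14:00:00", "age": 47},
    {"patient_id": "p4", "code": "J18.9", "recorded_at": "2024-03-03T08:15:00", "age": 82},
    {"patient_id": "p5", "code": "I10", "recorded_at": None, "age": 29},
]

cleaned = clean_records(records)
print(text_histogram(age_band_counts(cleaned)))
```

In this toy sample, three of the six rows survive cleaning, and the histogram makes it obvious that no patients between 60 and 79 are present at all.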

How It Differs from Traditional Data Handling

Healthcare institutions have been managing data for decades. However, traditional data handling was not intended for predictive analytics, but rather for billing, compliance, and recordkeeping.

Data systems operate as passive repositories in conventional contexts. AI models, on the other hand, need data that has been carefully selected to be representative, labeled, structured, and pertinent. For instance, a standard EHR may store thousands of lab results, but an AI model requires that those results be normalized, timestamp-aligned, and mapped to patient outcomes.

Similarly, a clinical note is only useful if it is properly parsed; NLP pipelines are required to transform free text into a structured form.
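To make the lab-result example concrete, here is a minimal sketch of normalizing units, aligning timestamps to UTC, and attaching an outcome label. The field names, the `readmitted_30d` outcome, and the sample values are assumptions made for illustration; the glucose factor follows from its molar mass (1 mmol/L is about 18.016 mg/dL).

```python
from datetime import datetime, timezone

# Conversion factors to mg/dL, for glucose only (illustrative scope).
GLUCOSE_TO_MG_DL = {"mg/dL": 1.0, "mmol/L": 18.016}


def normalize_glucose(value, unit):
    """Convert a glucose reading to mg/dL."""
    return round(value * GLUCOSE_TO_MG_DL[unit], 1)


def to_utc(timestamp):
    """Parse an ISO 8601 timestamp with a UTC offset and convert it to UTC."""
    return datetime.fromisoformat(timestamp).astimezone(timezone.utc)


def build_training_rows(labs, outcomes):
    """Normalize units, align timestamps, and map each lab to a patient outcome."""
    rows = []
    for lab in labs:
        rows.append({
            "patient_id": lab["patient_id"],
            "glucose_mg_dl": normalize_glucose(lab["value"], lab["unit"]),
            "measured_at_utc": to_utc(lab["measured_at"]),
            "readmitted_30d": outcomes.get(lab["patient_id"]),
        })
    rows.sort(key=lambda row: row["measured_at_utc"])
    return rows


# Invented sample data.
labs = [
    {"patient_id": "p2", "value": 110, "unit": "mg/dL",
     "measured_at": "2024-05-01T01:30:00-05:00"},
    {"patient_id": "p1", "value": 5.5, "unit": "mmol/L",
     "measured_at": "2024-05-01T08:00:00+02:00"},
]
outcomes = {"p1": False, "p2": True}

for row in build_training_rows(labs, outcomes):
    print(row)
```

Once both readings share a unit and a time zone, the row that looked later in local time (08:00 versus 01:30) turns out to be the earlier measurement.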

AI also necessitates constant data readiness. ML models retrain, adapt, and evolve in contrast to static reporting systems. Data preparation is now a continuous, version-controlled pipeline integrated into clinical workflows rather than a one-time event. It requires a cooperative approach from domain specialists, IT specialists, compliance officers, and healthcare data experts.

The Untapped Potential of Healthcare Data

In healthcare, there is a lot of data, but relevant, high-quality data is still hard to get. AI has to figure out how to solve that problem. Every day, clinical systems create terabytes of data, yet a lot of it is still stuck in silos, kept in old formats, or doesn’t have the right context.

Before we can fully use AI in healthcare, we need to grasp what sorts of data are out there and why processing this data correctly is the actual competitive edge.

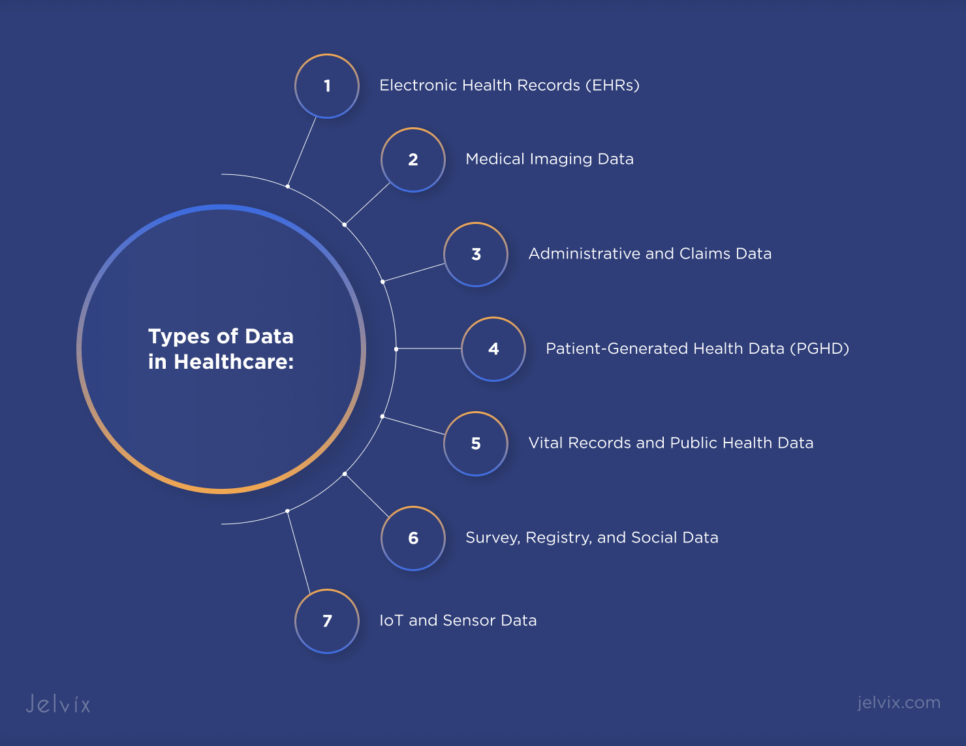

Types of Data in Healthcare

The healthcare ecosystem produces an extraordinarily diverse array of data types. Each source brings its own characteristics. Together, they form the raw material for healthcare data for machine learning:

Electronic Health Records (EHRs)

EHRs are longitudinal records of clinical encounters, including diagnoses, medications, procedures, lab results, and physician notes.

Medical Imaging Data

MRI scans, X-rays, and CT images are central to diagnostics. Imaging data is large in volume and stored in DICOM format, often lacking standardized labeling.

Administrative and Claims Data

These records track billing codes, procedures, and patient interactions across payers and providers. They are valuable for identifying patterns in utilization, fraud detection, and chronic disease management at scale.

Patient-Generated Health Data (PGHD)

Sourced from wearables, mobile apps, and connected devices, PGHD reflects real-world behavior, including heart rate variability, sleep cycles, glucose levels, and more.

IoT and Sensor Data

ICU monitors, infusion pumps, and telemetry devices all send out signals at high frequencies. To combine this data in real-time, it needs to be preprocessed and synchronized across systems.

Survey, Registry, and Social Data

Disease registries, patient satisfaction surveys, and even social media sites can give us indirect information about how patients feel and how their health is doing.

Vital Records and Public Health Data

Structured government information, such as reports on births, deaths, and the causes of death, gives us a big-picture view of population health trends and the spread of rare diseases.

Understanding these sources is the first step in building AI-ready infrastructure. Yet the real transformation begins when we apply artificial intelligence data processing techniques to extract clinical meaning from this complexity.

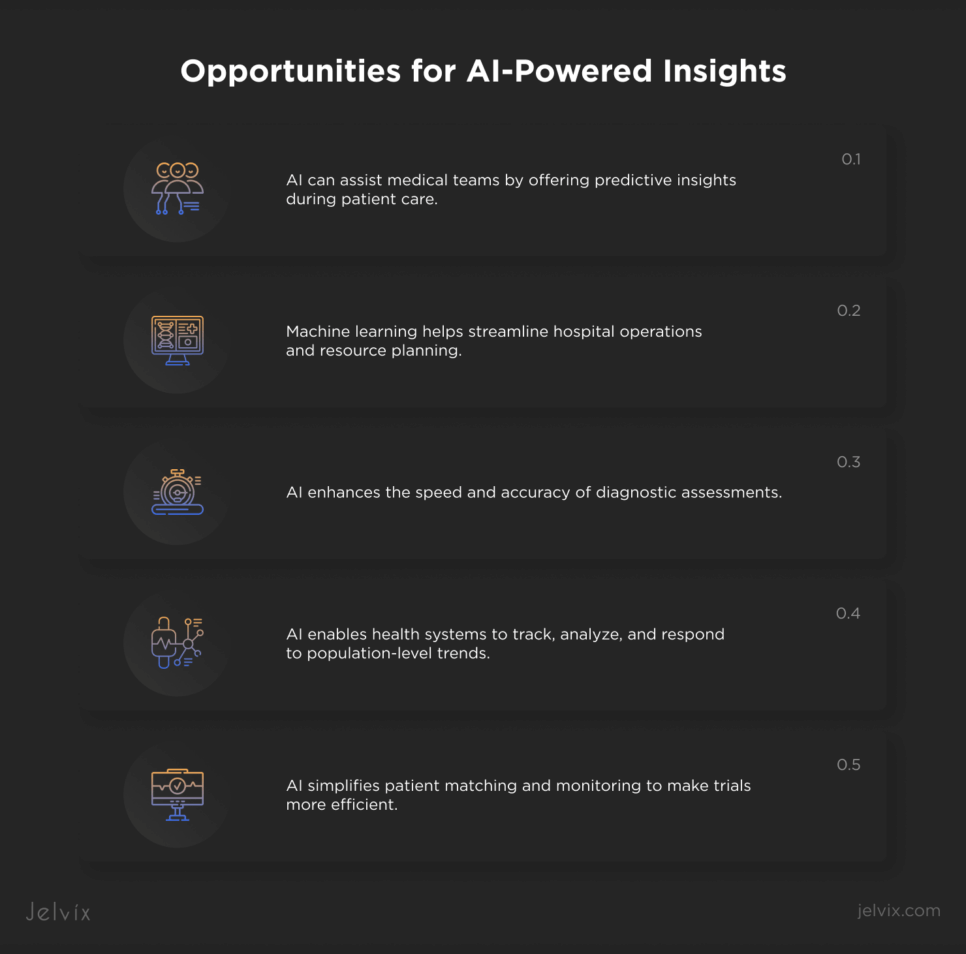

Opportunities for AI-Powered Insights

Properly harnessed, data has the potential to shift the industry from reactive care to predictive, personalized medicine. However, that shift depends entirely on data precision in healthcare, specifically how well it is prepared, curated, and contextualized before it reaches a model.

Here’s where AI, supported by rigorous preparation, starts to deliver:

- AI can assist medical teams by offering predictive insights during patient care.

- Machine learning helps streamline hospital operations and resource planning.

- AI enhances the speed and accuracy of diagnostic assessments.

- AI enables health systems to track, analyze, and respond to population-level trends.

- AI simplifies patient matching and monitoring to make trials more efficient.

Across all these areas, the value of AI is tightly coupled to the quality of the underlying data. AI doesn’t fix flawed information—it amplifies it. That’s why data preparation is a core competency that defines whether a healthcare organization will lead or lag in the AI era.

Challenges in Preparing AI-Ready Healthcare Data

Deploying an effective data preparation system in healthcare means working within a landscape shaped by clinical nuance, fragmented infrastructure, and regulatory rigidity.

The Unique Complexity of Healthcare Data

Healthcare data naturally resists uniformity. Thousands of people from different fields create it, often in real time, and the pressures of providing care often shape it more than any analytic design does. This means that even simple data attributes like time, context, and certainty may be missing or may be coded in ways that AI models can easily misinterpret.

Strategy: To overcome this, organizations need to include clinical context in the data preparation process itself, not just as a last step to check the data, but as an ongoing collaboration between domain experts and data engineers. Clinical insight should guide how raw inputs are labeled, structured, and understood before modeling starts.

Interoperability and Standardization Issues

Even though systems have gone digital, there are still no common standards shared across EHR vendors, imaging platforms, and data registries. This makes it challenging to put data together in a way that makes sense. When AI models are trained on data from isolated systems, they are less portable and less useful.

This fragmentation makes things less consistent, creates blind spots, and makes data teams spend a lot of time and money translating semantic intent across structures that don’t work together.

Strategy: The best answer is to create translation layers at the architectural level. This means using middleware to actively combine incoming data into a consistent internal representation that AI models can use, no matter what format or system it came from.
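A translation layer of this kind can be sketched as a set of small adapters that all emit one internal record type. The `Observation` dataclass and the adapter names below are hypothetical. The FHIR fields used (`subject.reference`, `code.coding`, `valueQuantity`) and the HL7 v2 OBX positions (OBX-3 identifier, OBX-5 value, OBX-6 units) follow the published standards, but real messages need far more validation and escaping rules than this simplified parser handles.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """Hypothetical internal representation shared by all downstream models."""
    patient_id: str
    code: str
    value: float
    unit: str
    source: str


def from_fhir(resource):
    """Map a (simplified) FHIR Observation resource to the internal form."""
    coding = resource["code"]["coding"][0]
    quantity = resource["valueQuantity"]
    return Observation(
        patient_id=resource["subject"]["reference"].split("/")[-1],
        code=coding["code"],
        value=float(quantity["value"]),
        unit=quantity["unit"],
        source="fhir",
    )


def from_hl7v2_obx(segment, patient_id):
    """Map a (simplified) HL7 v2 OBX segment to the internal form.

    The patient ID lives in the PID segment of a real message, so it is
    passed in separately here.
    """
    fields = segment.split("|")
    return Observation(
        patient_id=patient_id,
        code=fields[3].split("^")[0],  # OBX-3: identifier^text^coding system
        value=float(fields[5]),        # OBX-5: observation value
        unit=fields[6],                # OBX-6: units
        source="hl7v2",
    )


fhir_resource = {
    "resourceType": "Observation",
    "subject": {"reference": "Patient/123"},
    "code": {"coding": [{"system": "http://loinc.org", "code": "2345-7"}]},
    "valueQuantity": {"value": 95, "unit": "mg/dL"},
}
obx_segment = "OBX|1|NM|2345-7^Glucose^LN||95|mg/dL"

a = from_fhir(fhir_resource)
b = from_hl7v2_obx(obx_segment, patient_id="123")
print(a)
print(b)
```

Both inputs end up as the same shape of record, differing only in the `source` field, so models and quality checks downstream never need to know which system the data came from.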

Data Privacy and Compliance Constraints (HIPAA, GDPR)

Every piece of healthcare data is connected to real-life outcomes for people, so privacy is an ethical requirement as well as a technical one. HIPAA and GDPR are two laws that make it very clear how data can be accessed, shared, and stored. The real problem is making these standards work in AI workflows that move quickly and weren’t built with compliance in mind.

Strategy: Forward-looking systems build privacy into their designs by organizing pipelines to include automated de-identification, fine-grained permission control, and traceability that is easy to audit. So, compliance isn’t enforced by gatekeeping; instead, it comes from a system that makes ethical handling a routine part of the data lifecycle.
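As a rough illustration of an automated de-identification step with an audit trail, the sketch below drops direct identifiers, replaces the patient ID with a keyed hash, and generalizes the birth date to a year. The identifier list, field names, and key handling are assumptions, and this is not a complete HIPAA or GDPR de-identification procedure.

```python
import hashlib
import hmac

# Hypothetical list of direct identifiers to strip; real lists are longer.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "email", "ssn"}


def pseudonymize(patient_id, secret_key):
    """Replace an ID with a keyed hash so records stay linkable but not readable."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def deidentify(record, secret_key, audit_log):
    """Strip direct identifiers, pseudonymize the ID, and log what was done."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    out["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    if "birth_date" in out:
        # Generalize the full date to a year to reduce re-identification risk.
        out["birth_year"] = int(out.pop("birth_date")[:4])
    audit_log.append({
        "action": "deidentify",
        "dropped": sorted(DIRECT_IDENTIFIERS & set(record)),
    })
    return out


audit_log = []
record = {
    "patient_id": "MRN-001",
    "name": "Jane Doe",
    "phone": "555-0100",
    "birth_date": "1980-04-12",
    "diagnosis_code": "E11.9",
}
safe = deidentify(record, secret_key=b"demo-key-not-for-production", audit_log=audit_log)
print(safe)
print(audit_log)
```

In practice the key would come from a secrets manager rather than source code, and the audit log would be written to tamper-evident storage.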

The Risks of Poor-Quality Data in AI Training

In healthcare, poor data quality negatively impacts model performance and can compromise patient safety, clinical trust, and ethical standards. AI systems trained on flawed datasets tend to perpetuate the very inequalities the technology seeks to address. In medicine, where accuracy is very important, AI outputs that are based on weak data may do well in testing but not so well in real life.

Real-World Impacts of Inaccurate AI Predictions

AI based on datasets that are not balanced or that only include certain demographics might have disproportionately adverse impacts on groups that are poorly represented. This is not because the algorithms are biased, but because they are only as good as the data they are trained on.

A striking example is found in melanoma detection models, many of which are trained predominantly on light-skinned patient images from the U.S., Europe, and Australia. Because melanoma presents differently across skin tones, these models consistently underperform when evaluating lesions on darker skin.

This makes it harder to find cancer early and may make existing racial differences in cancer outcomes even worse.

In another study involving MIMIC-III ICU data, researchers discovered that a model trained to predict in-hospital mortality exhibited critically low recall for underrepresented racial groups. In practice, this means that the algorithm consistently failed to find high-risk patients from certain backgrounds. If this happened on a large scale, it could lead to unequal access to treatment or inferior professional oversight for already marginalized groups.
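Gaps like this only become visible when recall is measured per subgroup rather than overall. The sketch below shows one simple way to do that; the labels, predictions, and group names are synthetic and are not taken from MIMIC-III or any real study.

```python
from collections import defaultdict


def recall_by_group(y_true, y_pred, groups):
    """Compute recall (true positives / actual positives) for each subgroup."""
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            if pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    return {
        group: true_pos[group] / (true_pos[group] + false_neg[group])
        for group in set(true_pos) | set(false_neg)
    }


# Synthetic example: 1 = high-risk patient, 0 = not high-risk.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0]
groups = ["A"] * 5 + ["B"] * 5

per_group = recall_by_group(y_true, y_pred, groups)
for group in sorted(per_group):
    print(group, per_group[group])
```

Here the overall recall is 0.5, which hides the fact that group A sits at 0.75 while group B sits at 0.25.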

Hidden Costs of Data Errors in Clinical Settings

The risks posed by inaccurate predictions are not limited to diagnosis. In clinical settings, even tiny variations in data can spread across decision-support systems, modifying care plans, postponing interventions, or issuing false signals that overwhelm clinicians.

People might not recognize these mistakes until they start to show up in patterns of harm. By that time, the systems in question are frequently so thoroughly ingrained in the process that they are difficult to undo.

The financial and operational costs are equally significant. Alerts that go to the wrong place and false predictions can cause resources to be used incorrectly, make things harder for administrators, and put the company at risk of legal action.

Also, once a model has failed in a high-stakes situation, it is much harder to get providers and patients to trust it again. In this case, high AI data quality and strong preparation are not a luxury; they are necessary for safety, fairness, and long-term success.

Strategies for Successful AI Data Preparation

A strong healthcare data model needs more than just raw data. It needs smart pipelines, workflows that run reliably, and the flexibility to manage more data without losing quality. Here are several basic, reliable, scalable, and ethical techniques for AI data processing.

Core Principles of Data Quality

High-performing healthcare data intelligence systems are grounded in four essential attributes:

- Accuracy: Ensures that recorded values reflect real-world observations without transcription or entry bias.

- Completeness: Means that critical data points aren’t missing due to system fragmentation or faulty extraction.

- Consistency: Requires that formats and meanings remain stable across time and systems.

- Timeliness: Reflects the ability of data to be captured and updated quickly enough to influence decision-making in real time.

These principles are measurable and must be embedded into every data preparation tool and process used in healthcare AI.
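As a rough sketch of what "measurable" can mean here, the functions below score completeness, consistency, and timeliness for a small batch of records. Field names, the allowed unit vocabulary, and the 24-hour freshness window are assumptions for illustration. Accuracy is left out because it needs a trusted reference source to compare against.

```python
from datetime import datetime, timedelta


def completeness(records, required_fields):
    """Share of required cells that are actually filled in."""
    filled = sum(
        1
        for rec in records
        for field in required_fields
        if rec.get(field) not in (None, "")
    )
    return filled / (len(records) * len(required_fields))


def consistency(records, field, allowed_values):
    """Share of non-empty values that use the agreed vocabulary."""
    values = [rec[field] for rec in records if rec.get(field) not in (None, "")]
    return sum(value in allowed_values for value in values) / len(values)


def timeliness(records, now, max_age):
    """Share of records updated within the allowed time window."""
    fresh = sum(1 for rec in records if now - rec["updated_at"] <= max_age)
    return fresh / len(records)


now = datetime(2024, 6, 1, 12, 0)
records = [
    {"patient_id": "p1", "value": 5.4, "unit": "mmol/L", "updated_at": now - timedelta(hours=1)},
    {"patient_id": "p2", "value": None, "unit": "mmol/L", "updated_at": now - timedelta(hours=2)},
    {"patient_id": "p3", "value": 101, "unit": "mg/dl", "updated_at": now - timedelta(days=3)},
    {"patient_id": "p4", "value": 6.1, "unit": "mmol/L", "updated_at": now - timedelta(minutes=30)},
]

print("completeness:", completeness(records, ["patient_id", "value", "unit"]))
print("consistency:", consistency(records, "unit", {"mmol/L", "mg/dL"}))
print("timeliness:", timeliness(records, now, timedelta(hours=24)))
```

Scores like these can be tracked per source system over time, so that a drop in any one of them is noticed before it reaches a model.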

Building a Scalable Data Pipeline

Modern healthcare institutions face intense data storage requirements for AI, as multi-modal datasets grow in different directions. Scalability here is about engineering flexibility into how data moves.

Healthcare-specific ETL tools support automated pipelines that allow data from different sources to be continuously ingested, transformed, and routed. These pipelines must be able to normalize legacy EHR formats. They need to decode medical imaging standards. They also have to handle streaming telemetry data from devices.

Scalability needs more than just volume; it also needs resilience, which means being able to keep performance and integrity even when new data kinds, regulatory updates, and analytic models are added over time. A well-architected pipeline is a strategic asset, serving as the foundation for sustainable AI data processing.

Data Labeling and Annotation for AI Training

Labeling is the interface between raw healthcare data and functional AI insight. Whether tagging CT scans for lesion detection or assigning ICD codes to unstructured notes, annotation transforms noise into signal. Both manual and automated techniques have roles to play.

The best data preparation software platforms incorporate hybrid workflows, audit trails, and user feedback loops, ensuring that annotations evolve alongside the models they support.

Handling Unstructured Data in Healthcare

Most healthcare data is unstructured by nature. These kinds of data often have important diagnostic, contextual, or behavioral information that regular analytical tools can’t see. To get them ready for AI, you need to use methods that are specific to the field.

For example, natural language processing (NLP) can be utilized to pick out and organize clinical concepts from free text, computer vision techniques can be employed for reading radiographic images, and audio processing pipelines can be used to write down and analyze speech inputs.

Each of these domains has its own difficulties, such as inconsistent terminology, noisy or low-resolution inputs, and ambiguous language, but each also has transformative potential. The healthcare data model is full of useful information, but you can only get to it if you know how to handle unstructured data well. When you do, AI models in healthcare can get insights that structured inputs alone could never give them.
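To show the basic idea of turning free text into structured fields, here is a deliberately simple, rule-based sketch that pulls medication mentions out of a note. The three-drug vocabulary and the sample note are invented, and production systems typically rely on trained clinical NLP models and full drug terminologies rather than a regular expression.

```python
import re

# Hypothetical, tiny drug vocabulary used only for this illustration.
DRUG_NAMES = ["metformin", "lisinopril", "atorvastatin"]

MED_PATTERN = re.compile(
    r"\b(" + "|".join(DRUG_NAMES) + r")\s+(\d+(?:\.\d+)?)\s*(mg|mcg|g)\b",
    re.IGNORECASE,
)


def extract_medications(note):
    """Return structured (drug, dose, unit) mentions found in free text."""
    return [
        {
            "drug": match.group(1).lower(),
            "dose": float(match.group(2)),
            "unit": match.group(3).lower(),
        }
        for match in MED_PATTERN.finditer(note)
    ]


note = "Patient started on Metformin 500 mg twice daily; continue lisinopril 10mg."
for mention in extract_medications(note):
    print(mention)
```

Even this toy version has to cope with inconsistent casing and spacing, which hints at why unstructured clinical text needs field-specific tooling.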

The Role of Data Governance in AI Success

Without governance, even the most technically advanced AI pipelines in healthcare risk collapsing under legal exposure, data leakage, or ethical failure. Governance is the control layer that ensures trust and security across the entire data lifecycle.

Defining Ownership, Access, and Stewardship

AI efforts in intricate healthcare systems typically fail not because of technical challenges, but because no one can answer the fundamental question, “Who owns the data?” It is crucial to be clear about who owns what for both legal and operational reasons, especially when data transfers between departments or institutions. Access must be tiered and tracked so that only persons who are entitled to see sensitive information may do so.

Stewardship means giving people or groups the job of keeping data quality high, keeping an eye on lineage, and enforcing retention rules. When these roles aren’t clear, data quality goes down, privacy violations go unnoticed, and AI models slowly get worse. Strong governance frameworks make these duties official and enforceable in the processes of preparing and modeling data.
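Tiered, tracked access can be sketched in a few lines. The roles, tiers, and field classifications below are hypothetical; a real deployment would take them from the organization's identity and policy systems rather than hard-coded dictionaries.

```python
from datetime import datetime, timezone

# Hypothetical clearance levels and field sensitivity tiers.
ROLE_CLEARANCE = {"analyst": 1, "clinician": 2, "privacy_officer": 3}
FIELD_TIER = {"age_band": 1, "diagnosis_code": 2, "clinical_note": 2, "patient_name": 3}
MAX_TIER = 3


def read_fields(user, role, record, fields, audit_log):
    """Return only the fields the role may see, and record every request."""
    clearance = ROLE_CLEARANCE.get(role, 0)
    granted, denied = {}, []
    for field in fields:
        # Fields without a declared tier default to the most restrictive one.
        if field in record and FIELD_TIER.get(field, MAX_TIER) <= clearance:
            granted[field] = record[field]
        else:
            denied.append(field)
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "granted": sorted(granted),
        "denied": denied,
    })
    return granted


audit_log = []
record = {"age_band": "40-59", "diagnosis_code": "E11.9", "patient_name": "Jane Doe"}

print(read_fields("u17", "analyst", record, ["age_band", "patient_name"], audit_log))
print(read_fields("u42", "clinician", record, ["age_band", "diagnosis_code"], audit_log))
print(audit_log)
```

Because every request is logged whether or not it succeeds, stewards can review both who saw sensitive fields and who tried to.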

Compliance Frameworks and Auditing Practices

You need more than encryption and consent forms to comply with HIPAA, GDPR, and other sector-specific regulations. AI systems need to support active compliance, which entails automatically enforcing retention policies, detecting re-identification risks before they materialize, and keeping dynamic control over how data is used downstream.

These requirements extend to third-party data preparation companies, cloud providers, and model-training platforms.

Modern Data Quality Management Approaches

Modern platforms use both rule-based validation and anomaly detection to find missing values, outliers, or changes in data distribution in real time. These checks connect quality metrics directly to model performance, making the link between input integrity and clinical reliability clear and measurable.
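A minimal sketch of combining the two kinds of checks is shown below: fixed plausibility rules catch missing and impossible values, and a median-based outlier test flags values that are allowed by the rules but unusual for the batch. The heart-rate range, the sample readings, and the use of a modified z-score with a 3.5 cutoff are illustrative choices, not clinical guidance.

```python
import statistics

# Hypothetical plausibility range; real limits come from clinical experts.
RULES = {"heart_rate": (20, 250)}


def rule_violations(records, rules):
    """Flag missing values and values outside the allowed range."""
    issues = []
    for index, rec in enumerate(records):
        for field, (low, high) in rules.items():
            value = rec.get(field)
            if value is None:
                issues.append((index, field, "missing"))
            elif not low <= value <= high:
                issues.append((index, field, "out_of_range"))
    return issues


def robust_outliers(values, threshold=3.5):
    """Return positions whose modified z-score (median/MAD based) exceeds threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) > threshold
    ]


readings = [72, 75, 71, 74, 73, 190, 70, None, 400]
records = [{"heart_rate": value} for value in readings]

issues = rule_violations(records, RULES)
flagged = {index for index, _, _ in issues}
valid = [(i, r["heart_rate"]) for i, r in enumerate(records) if i not in flagged]
outlier_rows = [valid[pos][0] for pos in robust_outliers([v for _, v in valid])]

print("rule violations:", issues)
print("statistical outliers at rows:", outlier_rows)
```

The reading of 190 passes the range rule but stands out statistically, which is why the two approaches are usually run together.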

Advanced data preparation software also lets you keep track of different versions of datasets, which makes it easier to follow the changes made to the pipeline and make sure that audits or retraining can be done again. Some systems have built-in data profiling tools that keep track of changes over time or across demographics. This is very important for stopping bias from getting worse in AI models in healthcare.
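One lightweight way to approximate dataset versioning is to fingerprint the data together with the pipeline version that produced it. The sketch below is an assumption about how this could be done with standard hashing, not a description of any particular product; dedicated data versioning tools offer much more, such as storage and diffing.

```python
import hashlib
import json


def dataset_fingerprint(records, pipeline_version):
    """Hash the records (order-independent) together with the pipeline version."""
    canonical_rows = sorted(
        json.dumps(rec, sort_keys=True, separators=(",", ":")) for rec in records
    )
    payload = json.dumps({"pipeline": pipeline_version, "rows": canonical_rows})
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


records = [
    {"patient_id": "a1", "glucose_mg_dl": 99.1},
    {"patient_id": "b2", "glucose_mg_dl": 110.0},
]

v1 = dataset_fingerprint(records, pipeline_version="1.0.0")
same_data_reordered = dataset_fingerprint(list(reversed(records)), pipeline_version="1.0.0")
changed_value = dataset_fingerprint(
    [records[0], {"patient_id": "b2", "glucose_mg_dl": 111.0}], pipeline_version="1.0.0"
)

print(v1 == same_data_reordered)  # identical content, identical fingerprint
print(v1 == changed_value)        # any changed value produces a new fingerprint
```

Storing the fingerprint next to each trained model makes it possible to say exactly which data and which pipeline version a given model was built from when an audit or retraining is needed.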

Most importantly, quality management is no longer confined to healthcare software development teams. It is embedded into workflows, made visible to both clinical and operational teams, and managed by everyone who is responsible for it. In this paradigm, quality is not a checkpoint; it is a permanent component of the infrastructure.


Conclusion

AI readiness is rarely derailed by a lack of innovation; more often, it stalls in the space between fragmented systems and overburdened teams. Companies that try to address these problems on their own typically find that having a lot of technical knowledge isn’t enough to make up for not knowing the rules or the subtleties of the field.

Experience is what sets successful teams apart, and it is measured not by credentials but by how well a team deals with uncertainty, handles edge cases, and puts good governance into practice without slowing delivery.

When data becomes too complicated and starts to affect deadlines or trust, it’s important to find a partner who sees healthcare limits as design factors instead of hurdles. We don’t give templates at Jelvix. Instead, we develop infrastructure that suits how your organization really works, making sure that AI strategy matches clinical and business goals.

We can help you get to the next step of your AI roadmap if it depends on data you can trust. We’ll do it with accuracy, speed, and responsibility.

Get in touch with us today for a free consultation and start turning your data into useful AI.

FAQ

What types of healthcare data are most relevant for AI training?

Commonly used are electronic health records (EHR), diagnostic imaging, medical notes, wearable/IoT data, insurance claims, and genomics. To get AI-ready, each type of data needs to go through certain steps, like de-identification, labeling, and normalization.

How long does it take to prepare healthcare data for AI?

It all relies on the size of the project, the amount of data, and the quality of the data. Getting data ready for an organization might take anywhere from a few weeks to a few months. Automation and professional data engineering teams can help speed up this process without decreasing quality or security.

What’s the risk of skipping or rushing through data preparation?

If you don’t prepare your data, your AI results may be biased, incomplete, or unreliable. In healthcare, this can lead to erroneous diagnoses, FDA approvals that don’t go through, or a breach in trust between doctors and patients. Clean data is not optional; it is essential for the safety of patients and the smooth running of operations.

Can Jelvix help with both clinical and operational data preparation?

Yes. Jelvix’s main job is to get clinical data (like EHR, lab results, and imaging) and operational data (like RCM, scheduling, and claims) ready for AI integration. We make sure that our solutions meet the technical and regulatory needs of both healthcare enterprises and healthtech startups.

Do I need internal data scientists to prepare data for AI?

Not always. It’s helpful to have internal data science teams, but a lot of healthcare providers and startups hire outside professionals to do the hard, technical, and time-consuming elements of data preparation. This saves time and money while ensuring the quality is high.

How do I get started with Jelvix for healthcare AI data preparation?

Contact us for a free consultation. We’ll look at how ready your data is right now, set the scope, and give you a custom plan to turn your raw healthcare data into AI-ready assets in a safe, efficient, and large-scale way.
