Most hypertension platforms don’t stall because the algorithm is weak. They stall because the data is untrustworthy: device streams arrive in different formats and units, timestamps drift, and EHR connections vary by site. By the time you need payer‑grade outcomes and reliable clinician workflows, your product is negotiating with five systems instead of serving patients.

Translation: if data can’t move predictably, your AI won’t matter and your enterprise deals won’t close.

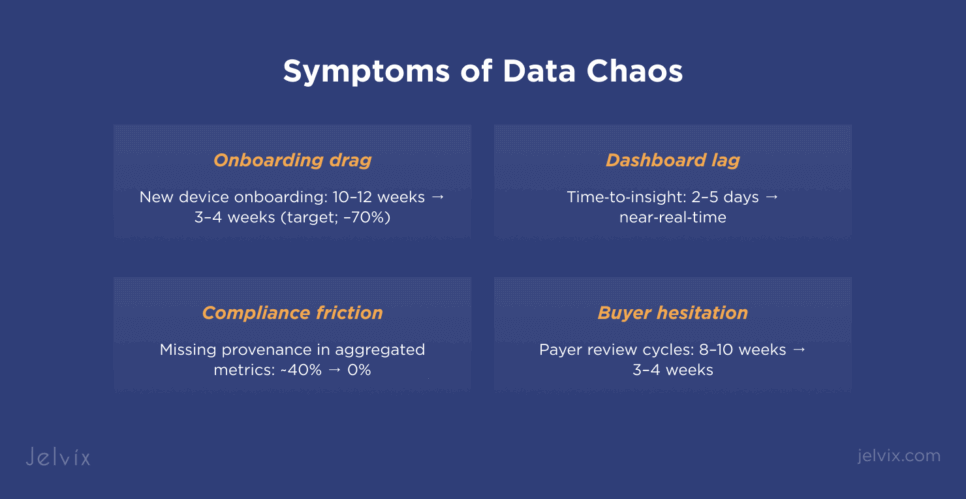

Symptoms you can feel today

- Onboarding drags every time you add a new BP cuff, wearable, or facility.

- Dashboards lag by days; risk scores are stale when clinicians check them.

- Compliance friction: different services handle PHI and consent differently; audit questions multiply.

- Enterprise buyers hesitate because outcomes aren’t standardized or reproducible.

Root causes (and why they persist)

- Fragmented device & EHR feeds — vendor APIs, CSV drops, and site‑specific FHIR capabilities.

- No canonical model — units, timestamps, and event taxonomies differ; you debug conversions instead of improving outcomes.

- Integration bottlenecks — read‑only at one site, limited write‑back at another; legacy HL7 still present.

- Analytics not wired for operations — batch jobs designed for BI, not near‑real‑time care.

- Compliance bolted on — controls vary by service; provenance is incomplete; consent is scattered.

The fix: build a Digital Health Bridge

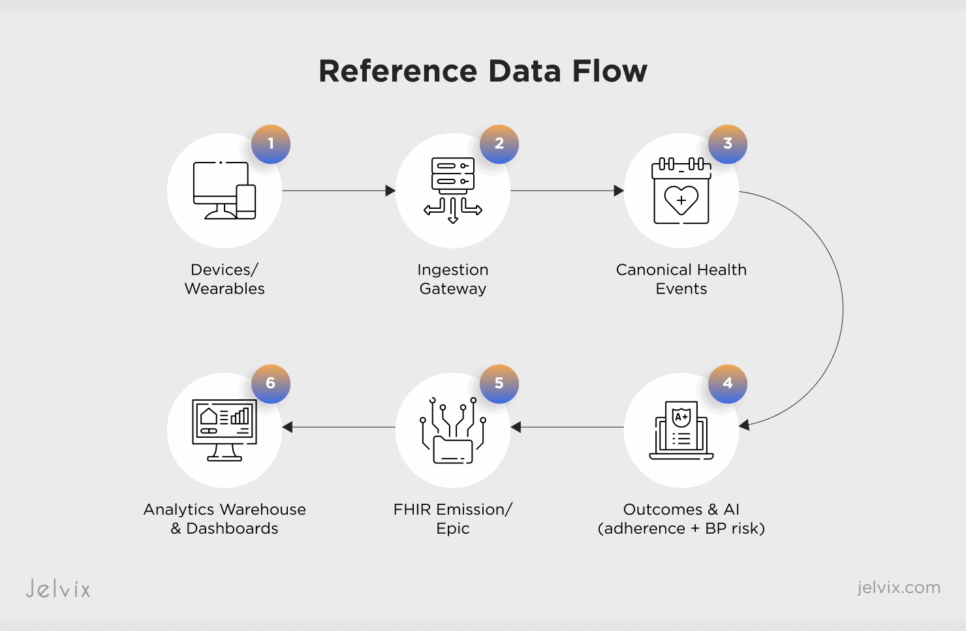

A digital health bridge is a HIPAA‑ready, AI‑augmented integration layer that unifies ingestion, normalizes data, and emits standards‑based events to EHRs and analytics — with compliance built into the pipeline.

Core capabilities:

- Unified ingestion (FHIR + IoT): connectors for BP cuffs/wearables; webhooks and scheduled polling; buffering and de‑duplication.

- Canonical model: unit and timestamp normalization; event taxonomy for readings, adherence, exceptions.

- Outcomes & AI services: adherence scoring and BP‑risk prediction with feature store and retraining hooks.

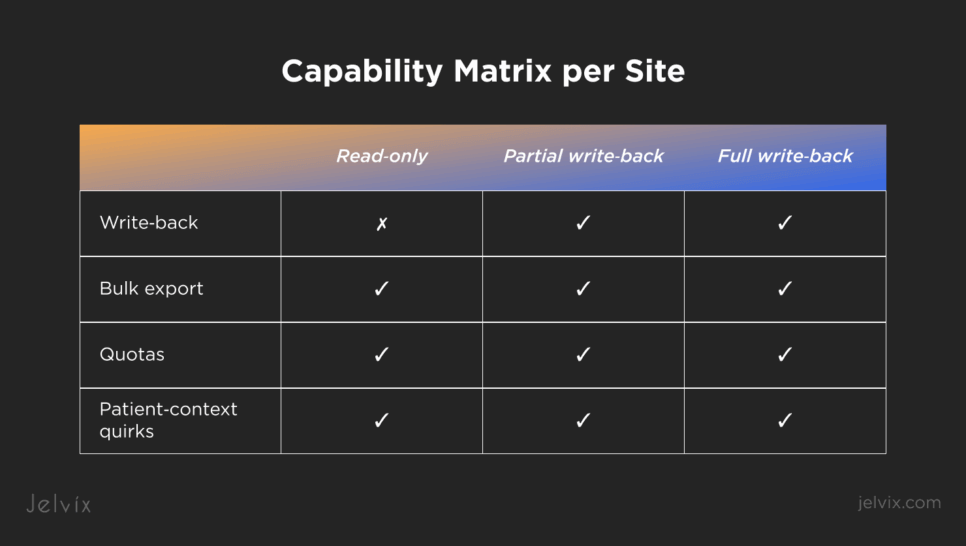

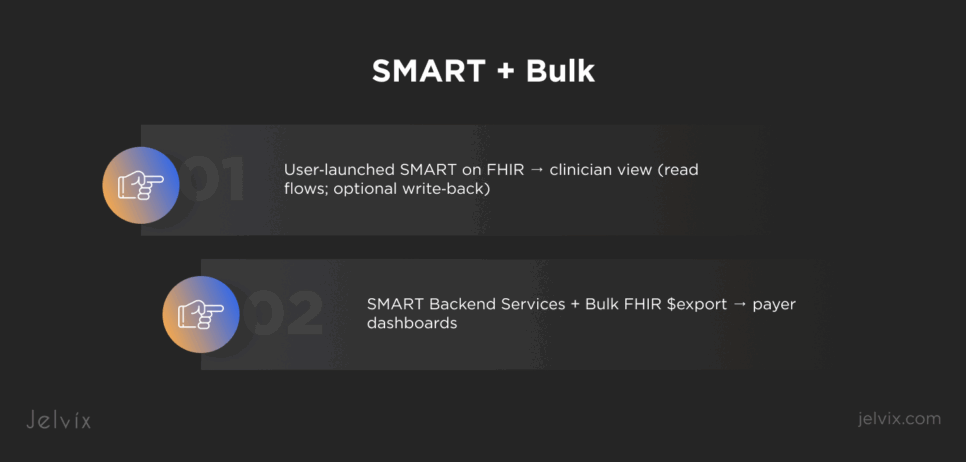

- EHR integration: SMART on FHIR (user & backend); site capability matrix (read‑only / partial / full write‑back).

- Analytics path: near‑real‑time denormalized facts for cohorts, payer dashboards, and ROI tracking.

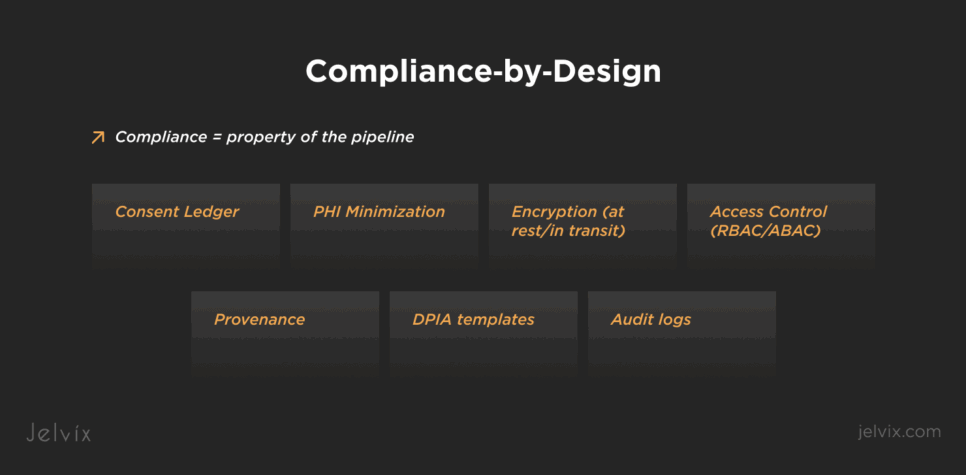

- Compliance‑by‑design: consent ledger, PHI boundaries, encryption, audit logs, and Provenance on every transformation.
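As an illustration of the canonical‑model capability, here is a minimal sketch of unit and timestamp normalization. The field names (`patient_id`, `systolic`, `unit`, `taken_at`) and the `CanonicalReading` type are hypothetical, and the policy of rejecting timestamps without an offset is an assumption rather than a requirement stated above.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

KPA_TO_MMHG = 7.50062  # 1 kPa is approximately 7.50062 mmHg


@dataclass(frozen=True)
class CanonicalReading:
    """Hypothetical canonical event: pressures in mmHg, time in UTC."""
    patient_id: str
    systolic_mmhg: float
    diastolic_mmhg: float
    taken_at_utc: datetime


def to_mmhg(value: float, unit: str) -> float:
    """Convert a pressure to mmHg; reject units we do not recognise."""
    unit = unit.strip().lower()
    if unit in ("mmhg", "mm[hg]"):
        return float(value)
    if unit == "kpa":
        return round(value * KPA_TO_MMHG, 1)
    raise ValueError(f"unsupported pressure unit: {unit!r}")


def to_utc(timestamp: str) -> datetime:
    """Parse an ISO 8601 timestamp that carries an offset and convert it to UTC."""
    parsed = datetime.fromisoformat(timestamp)
    if parsed.tzinfo is None:
        # Assumed policy: never guess the zone of a naive timestamp.
        raise ValueError("timestamp has no UTC offset")
    return parsed.astimezone(timezone.utc)


def normalize(raw: dict) -> CanonicalReading:
    """Map one raw device payload onto the canonical reading."""
    return CanonicalReading(
        patient_id=raw["patient_id"],
        systolic_mmhg=to_mmhg(raw["systolic"], raw["unit"]),
        diastolic_mmhg=to_mmhg(raw["diastolic"], raw["unit"]),
        taken_at_utc=to_utc(raw["taken_at"]),
    )
```

Failing loudly on unknown units or missing offsets keeps conversion bugs at the ingestion boundary instead of letting them surface later in risk scores.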

Reference architecture (at a glance)

Devices & Wearables → Ingestion Gateway → Canonical Health Events → AI Services (adherence + BP risk) → FHIR Emission / Epic → Analytics Warehouse & Dashboards
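To make the "FHIR Emission" stage concrete, the sketch below builds a blood pressure Observation as a plain dictionary. The LOINC codes (85354-9 for the panel, 8480-6 systolic, 8462-4 diastolic) and the UCUM unit `mm[Hg]` follow the FHIR vital signs conventions; the function name and its parameters are hypothetical, and a real site may require additional profile elements.

```python
def bp_observation(patient_id: str, systolic_mmhg: float,
                   diastolic_mmhg: float, effective_utc: str) -> dict:
    """Build a FHIR Observation (as a dict) for one blood pressure reading."""

    def component(loinc: str, display: str, value: float) -> dict:
        return {
            "code": {"coding": [{"system": "http://loinc.org",
                                 "code": loinc, "display": display}]},
            "valueQuantity": {"value": value, "unit": "mmHg",
                              "system": "http://unitsofmeasure.org",
                              "code": "mm[Hg]"},
        }

    return {
        "resourceType": "Observation",
        "status": "final",
        "category": [{"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/observation-category",
            "code": "vital-signs"}]}],
        "code": {"coding": [{"system": "http://loinc.org", "code": "85354-9",
                             "display": "Blood pressure panel with all children optional"}]},
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": effective_utc,
        "component": [
            component("8480-6", "Systolic blood pressure", systolic_mmhg),
            component("8462-4", "Diastolic blood pressure", diastolic_mmhg),
        ],
    }
```

Because the function only accepts already-normalized values, every unit and time zone decision stays in the canonical layer upstream.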

Design notes

- Treat idempotency as a first‑class constraint across retries and backfills.

- Detect and log each site's capability at runtime; degrade to read‑only cleanly and queue deferred write‑back.

- Keep a two‑lane flow: near‑real‑time for clinician UX; batch (FHIR Bulk Data export via SMART Backend Services) for cohorts and payers.

- Attach Provenance (what unit, what exclusion, what algorithm version) to every aggregated metric.
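One way to treat idempotency as a first‑class constraint is to derive a deterministic key from the fields that identify a reading, so retries and backfills cannot create duplicates. This is a minimal in‑memory sketch; the identity fields and the `EventStore` class are assumptions, and a production store would enforce the same key with a unique constraint.

```python
import hashlib
import json

# Assumed identity of a reading: same device, patient, time, and values.
IDENTITY_FIELDS = ("device_id", "patient_id", "taken_at_utc",
                   "systolic_mmhg", "diastolic_mmhg")


def idempotency_key(event: dict) -> str:
    """Hash only the identity fields, ignoring transport metadata."""
    identity = {name: event[name] for name in IDENTITY_FIELDS}
    canonical = json.dumps(identity, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class EventStore:
    """In-memory stand-in for a store with a unique key constraint."""

    def __init__(self) -> None:
        self._events: dict[str, dict] = {}

    def ingest(self, event: dict) -> bool:
        """Return True if the event was new, False if it was a duplicate."""
        key = idempotency_key(event)
        if key in self._events:
            return False
        self._events[key] = event
        return True

    def __len__(self) -> int:
        return len(self._events)
```

A retried delivery that differs only in fields such as a receive time or request id hashes to the same key and is dropped.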

What to implement first (90‑day plan)

Days 0–15 — Blueprint & guardrails

- Freeze target devices and sites; document write/read expectations.

- Define canonical units (mmHg), timestamp policy (time zone handling, clock‑drift tolerance), and event taxonomy.

- Introduce a capability matrix per site; enumerate API rate limits and FHIR Bulk Data availability.

- Prepare data protection impact assessment (DPIA) templates and a consent ledger stub.

Days 16–45 — Bridge MVP

- Build ingestion adapters and buffering; normalize units/timestamps; emit canonical events.

- Ship initial adherence score and a lightweight BP‑risk flag; store features and model versions.

- Integrate with Epic via SMART on FHIR in user context; validate read flows.

- Stand up near‑real‑time analytics tiles (adherence, elevated BP flags).
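An initial adherence score can be very simple. The sketch below assumes one possible definition, the share of days in a trailing window with at least one reading; the definition, the seven‑day default, and the version label are illustrative assumptions, not clinical guidance.

```python
from datetime import date, timedelta

ADHERENCE_VERSION = "adherence-v0"  # hypothetical version label stored with each score


def adherence_score(reading_dates: list[date], as_of: date,
                    window_days: int = 7) -> dict:
    """Share of days in the trailing window that have at least one reading."""
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    window_start = as_of - timedelta(days=window_days - 1)
    # A set collapses multiple readings on the same day into one adherent day.
    adherent_days = {d for d in reading_dates if window_start <= d <= as_of}
    return {
        "score": len(adherent_days) / window_days,
        "window_days": window_days,
        "model_version": ADHERENCE_VERSION,
    }
```

Returning the version alongside the score means a later change to the definition does not silently rewrite history.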

Days 46–90 — Scale & harden

- Add Backend Services for cohorts; start Bulk exports for payer dashboards.

- Implement deferred write‑back where permitted; test negative paths at read‑only sites.

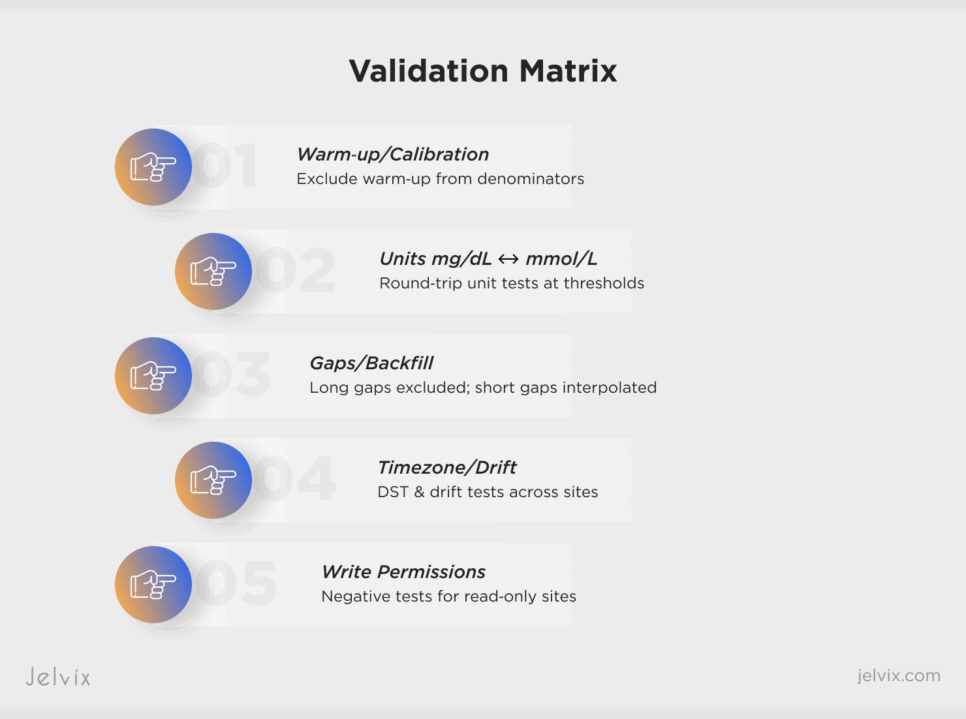

- Expand QA: synthetic datasets for unit switches, gaps, DST crossover; provenance checks.

- Add model monitoring (drift, calibration) and rollback playbooks.
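The DST crossover case in the QA item can be exercised with a small synthetic dataset. This sketch assumes readings arrive as ISO 8601 strings with offsets and uses the US spring‑forward on 2024‑03‑10 as the example; `find_gaps` is a hypothetical helper that measures gaps in UTC so that the skipped local hour is not reported as missing data.

```python
from datetime import datetime, timedelta, timezone


def find_gaps(timestamps: list[str], max_gap: timedelta) -> list[tuple]:
    """Return consecutive UTC pairs whose spacing exceeds max_gap."""
    utc_times = []
    for text in timestamps:
        parsed = datetime.fromisoformat(text)
        if parsed.tzinfo is None:
            raise ValueError(f"timestamp without offset: {text!r}")
        utc_times.append(parsed.astimezone(timezone.utc))
    utc_times.sort()
    return [(a, b) for a, b in zip(utc_times, utc_times[1:]) if b - a > max_gap]


# Synthetic hourly readings across the spring-forward: local clocks jump
# from 01:59 (-05:00) to 03:00 (-04:00), yet readings stay one hour apart.
SPRING_FORWARD = [
    "2024-03-10T00:30:00-05:00",
    "2024-03-10T01:30:00-05:00",
    "2024-03-10T03:30:00-04:00",
    "2024-03-10T04:30:00-04:00",
]

no_gaps = find_gaps(SPRING_FORWARD, timedelta(hours=1))
one_gap = find_gaps(SPRING_FORWARD[:2] + SPRING_FORWARD[3:], timedelta(hours=1))
```

Here `no_gaps` is empty, while dropping the third reading produces exactly one two‑hour gap in `one_gap`. A pipeline that compared local wall‑clock times instead would flag a false gap at the crossover.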

Short examples (what good looks like)

Epic FHIR adoption, pragmatically: Register a patient‑context SMART app for clinician workflows and a system client for cohorts. Expect different behavior by site; toggle features with the capability matrix.
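A capability matrix can be as small as a mapping from site to the resource types it accepts for write‑back. The sketch below is illustrative only: the site ids, the matrix contents, and the `WriteBackRouter` class are hypothetical, and unknown sites are assumed to be read‑only.

```python
from collections import deque
from typing import Callable

# Hypothetical matrix: site id -> FHIR resource types accepted for write-back.
CAPABILITY_MATRIX = {
    "site-full": {"Observation", "Communication"},
    "site-partial": {"Observation"},
    "site-read-only": set(),
}


class WriteBackRouter:
    """Send a resource when the site allows it; otherwise queue it for later."""

    def __init__(self, matrix: dict[str, set[str]],
                 send: Callable[[str, dict], None]) -> None:
        self._matrix = matrix
        self._send = send
        self.deferred: deque = deque()

    def submit(self, site_id: str, resource: dict) -> str:
        # Unknown sites default to read-only, the safest assumption.
        writable = self._matrix.get(site_id, set())
        if resource["resourceType"] in writable:
            self._send(site_id, resource)
            return "sent"
        self.deferred.append((site_id, resource))
        return "deferred"
```

Keeping the decision in one place lets the clinician UI show a consistent "queued" state at read‑only sites instead of surfacing a failed write.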

FDA SaMD AI expectations, operationalized: Version your models, record training data lineage, and describe planned model updates in a predetermined change control plan (PCCP) so they can ship safely. Use shadow deployments before promoting.

Payer ROI expectations, answered: Standardize outcomes (adherence streaks, BP control rates) and make cohort exports reproducible; document definitions in the bridge itself so sales and compliance speak the same language.
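A standardized outcome is easiest to reproduce when its definition lives next to the code. The sketch below assumes one common definition of BP control, the most recent reading in the measurement period being below 140/90 mmHg; the threshold, the field names, and the output shape are assumptions to be replaced with whatever definition your payer contracts specify.

```python
from datetime import date

CONTROL_DEFINITION = "latest reading in period with systolic < 140 and diastolic < 90 mmHg"


def bp_control_rate(readings: list[dict], period_start: date,
                    period_end: date) -> dict:
    """Share of patients whose latest in-period reading is below 140/90."""
    latest: dict[str, dict] = {}
    for reading in readings:
        if not (period_start <= reading["taken_on"] <= period_end):
            continue  # readings outside the period do not count
        current = latest.get(reading["patient_id"])
        if current is None or reading["taken_on"] > current["taken_on"]:
            latest[reading["patient_id"]] = reading
    controlled = sum(
        1 for r in latest.values()
        if r["systolic_mmhg"] < 140 and r["diastolic_mmhg"] < 90
    )
    return {
        "rate": controlled / len(latest) if latest else None,
        "denominator": len(latest),
        "definition": CONTROL_DEFINITION,
    }
```

Shipping the denominator and the definition with every export gives sales and compliance the same answer when a payer asks how the number was computed.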

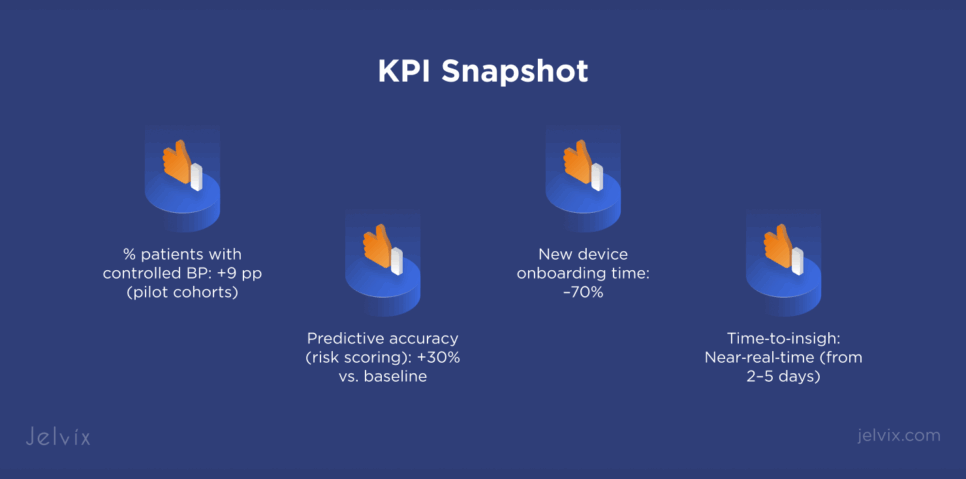

What to measure (and show buyers)

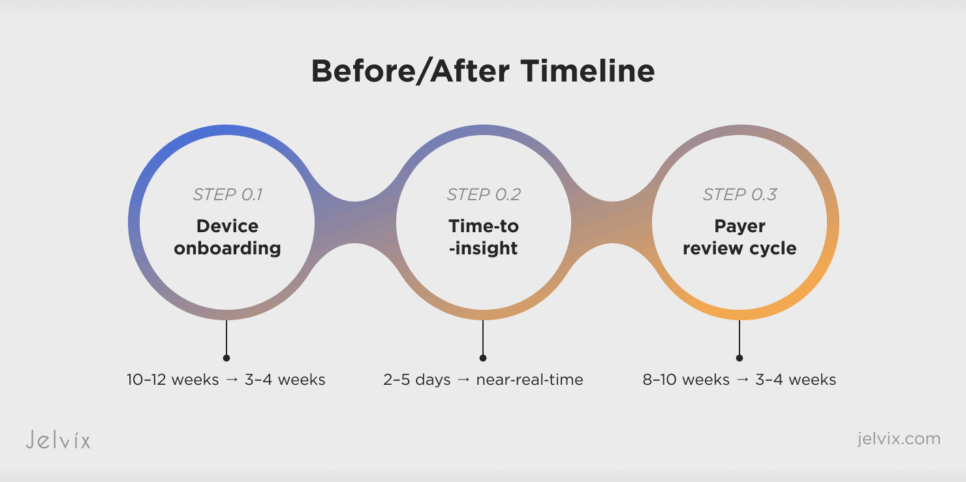

- Device onboarding time (target: –70% vs. baseline).

- Risk‑score accuracy (target: +30% vs. baseline).

- Time‑to‑insight (target: near‑real‑time).

- Write‑back success (where allowed) and read‑only fallback reliability.

- Audit completeness (provenance coverage, access reviews passed).

Compliance, for real

Compliance is easiest when it’s a property of the pipeline, not a post‑hoc document. Enforce PHI minimization at the schema boundary, keep consent as a first‑class entity, and attach transformation Provenance to every aggregated reading and risk score. This preserves the traceability FDA reviewers expect and speeds up payer due diligence.
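Attaching Provenance to an aggregated metric can look like the following sketch. The `Provenance` fields mirror the unit, exclusion, and algorithm version mentioned earlier; the plausibility range of 60 to 260 mmHg, the version label, and the input digest are illustrative assumptions.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from statistics import mean


@dataclass(frozen=True)
class Provenance:
    unit: str
    exclusion_rule: str
    excluded_count: int
    algorithm_version: str
    input_digest: str  # hash of the raw inputs, so the result can be re-derived


def mean_systolic_with_provenance(values_mmhg: list[float]) -> dict:
    """Average systolic pressure, carrying a record of how it was computed."""
    # Assumed plausibility filter; tune the range to your clinical policy.
    kept = [v for v in values_mmhg if 60 <= v <= 260]
    if not kept:
        raise ValueError("no plausible readings to aggregate")
    digest = hashlib.sha256(json.dumps(values_mmhg).encode("utf-8")).hexdigest()
    provenance = Provenance(
        unit="mm[Hg]",
        exclusion_rule="systolic outside 60-260 mmHg",
        excluded_count=len(values_mmhg) - len(kept),
        algorithm_version="mean-systolic-v1",  # hypothetical label
        input_digest=digest,
    )
    return {"value": round(mean(kept), 1), "provenance": asdict(provenance)}
```

Because the provenance travels with the value, an auditor can see which readings were excluded and which algorithm version produced the number without consulting a separate document.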

Executive checklist (copy/paste)

- Do we have a canonical model and capability matrix per site?

- Can we run Bulk cohort exports and reproduce results for payers?

- Are adherence and BP risk computed with versioned models and clear lineage?

- Will the app degrade to read‑only gracefully and queue deferred writes?

- Is Provenance attached to every computed metric?

Why this playbook works

It recognizes that data chaos, not algorithms, kills scale. A digital health bridge makes devices, EHRs, analytics, and AI play nicely — and proves it with measurable onboarding speed, predictive lift, and audit‑ready outcomes.

Ready to evaluate your current pipeline? Let’s run a 2‑week readiness review across ingestion, normalization, EHR, analytics, and compliance.