Catastrophic forgetting poses a dire challenge to developing safe and useful AI. We humans tend to forget things over time for different reasons: the information may be too old, not valuable enough, or rarely used. Interestingly enough, AI systems can forget too, although for different reasons and often far more abruptly. When an AI system learns a new task, it can forget what it had already mastered, which leads to serious issues. Imagine the AI in an autonomous car suddenly forgetting how to recognize stop signs. It’s a risk no one can afford.

This problem isn’t just theoretical; it shows up in real-world applications. If AI systems can’t retain past knowledge, their performance and reliability suffer, so developers must tackle the problem head-on to keep AI sharp and dependable as it evolves. Let’s dive deeper into this topic with real-life examples, strategies for overcoming catastrophic forgetting in neural networks, and best practices.

What is Catastrophic Forgetting?

Catastrophic interference, also referred to as catastrophic forgetting, is the tendency of an artificial neural network to abruptly and drastically forget previously learned information when it learns new information. Imagine you have a chalkboard where you write down everything you learn. Catastrophic forgetting is like wiping the chalkboard every time you want to add something new: instead of writing the new information in an empty corner, you erase the board and lose your old notes in the process. Annoying, isn’t it? That’s what happens to a neural network that experiences catastrophic forgetting: much of what it previously learned is lost as soon as it learns something new.

McCloskey and Cohen (1989) were among the first to notice a major problem in how neural networks learn, which they called catastrophic interference. In their experiments, a network was first trained on simple addition facts such as 1+1 and 1+2 until it solved them accurately. However, when the network was then trained on new facts such as 2+1 and 2+2, it forgot how to solve the problems it had previously learned. Even a single round of training on the new facts could disrupt the old ones, which posed a big problem.

In another experiment, McCloskey and Cohen simulated a classic test of human memory. They trained a network on two lists of word pairs, one after the other. After learning the second list, the network had almost entirely forgotten the first. This showed that new learning was essentially wiping out old knowledge, unlike in humans, for whom learning something new does not normally erase what they already know.

When Ratcliff built on these investigations in 1990, he found similar problems. Networks forgot even thoroughly learned material as soon as new data arrived, and forgetting persisted regardless of network size or how the training items were grouped. Ratcliff also found that, as learning progressed, networks struggled to distinguish new information from familiar knowledge, which contradicts how human memory normally works. Attempted fixes, such as adding extra response nodes, did not eliminate the issue, underlining how difficult it is to mimic the way humans learn.

Why Does Catastrophic Forgetting Occur?

Catastrophic forgetting occurs because of how artificial neural networks, particularly those trained with backpropagation, learn. To understand this, it helps to start with a definition. An artificial neural network is a computing system loosely inspired by the way the human brain processes information. It consists of layers of interconnected nodes, or “neurons,” that work together to recognize patterns and make decisions. The network learns by adjusting the connections, or “weights,” between these neurons based on the data it receives.

The problem starts when the network learns new information. During training, it adjusts its weights to improve performance on the new task. However, the same weights are shared by everything the network has learned, so these adjustments can overwrite values that were crucial for earlier tasks or facts. This happens because standard training has no built-in mechanism for protecting prior knowledge while learning new things.
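The weight-overwriting effect can be reproduced with a toy example. The sketch below is a hypothetical illustration, not taken from any study: a linear model with three weights is trained by plain gradient descent on task A, then on task B alone. Both made-up tasks use the third input feature, so fitting B drags that shared weight away from the value A needs, even though a weight vector such as (1, -1, 0) fits both tasks at once.

```python
# Toy linear model y = w . x trained with full-batch gradient descent.
# Feature 3 is used by both hypothetical tasks, so its weight is shared.

def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def train(w, data, lr=0.1, steps=500):
    """Minimize mean squared error on `data` only; returns new weights."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

task_a = [([1.0, 0.0, 1.0], 1.0)]   # hypothetical task A
task_b = [([0.0, 1.0, 1.0], -1.0)]  # hypothetical task B

w = train([0.0, 0.0, 0.0], task_a)
loss_a_before = mse(w, task_a)      # task A learned
w = train(w, task_b)                # sequential training on task B only
loss_a_after = mse(w, task_a)       # task A error comes back

print(f"task A loss: {loss_a_before:.4f} -> {loss_a_after:.4f}")
print(f"task B loss: {mse(w, task_b):.4f}")
```

Task A’s loss rises from essentially zero to about 0.56 while task B is fit almost perfectly: the update for B moved the shared third weight from 0.5 to -0.25.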

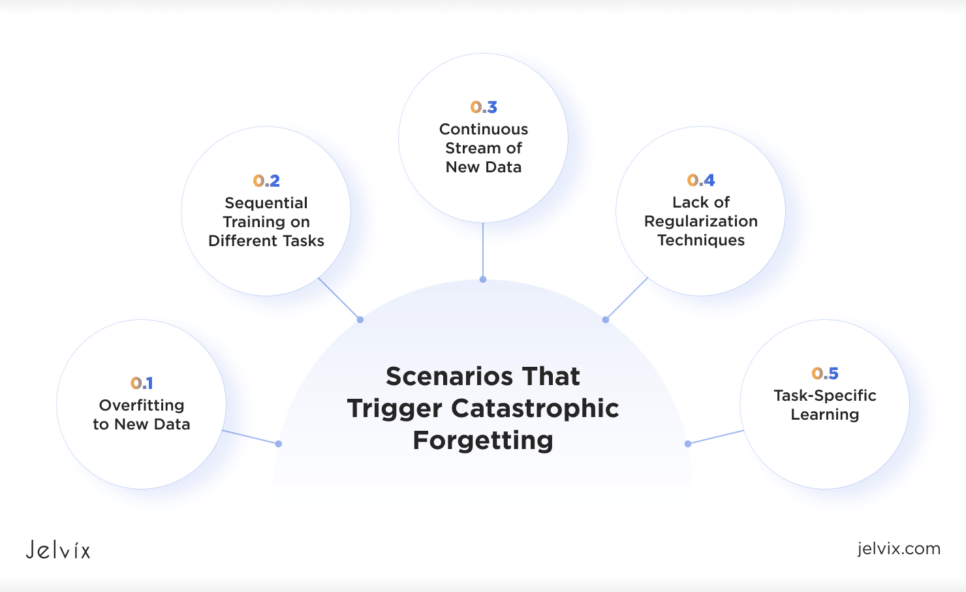

Certain scenarios can trigger catastrophic forgetting more easily:

- Sequential Training on Different Tasks: When the network learns new tasks in a row without practicing the old ones, it might forget them. Practice makes perfect… Right?

- Continuous Stream of New Data: If an AI keeps receiving new information without reviewing what it already knows, it can lose the old knowledge.

- Overfitting to New Data: When the network becomes too focused on the new task, it can forget how to do the old tasks.

- Lack of Regularization Techniques: Without techniques that help preserve older knowledge, such as elastic weight consolidation (and, to a lesser degree, dropout), the network is more prone to catastrophic forgetting.

- Task-Specific Learning: If the network learns tasks that are very different from each other without a way to connect them, it might forget how to do the earlier ones.

Consequently, when the network focuses on new information, it often loses the ability to perform tasks it had previously mastered. In technical terms, catastrophic forgetting arises when the network’s structure and training procedure are not designed to accommodate old and new knowledge simultaneously.

Impacts of Catastrophic Forgetting

Catastrophic forgetting poses a serious threat to the dependability of AI systems. When a neural network forgets previously learned tasks while trying to master new ones, its overall accuracy and reliability can drop sharply. This makes AI systems inconsistent and therefore less trustworthy for applications where stable performance is crucial. For example, a model that forgets how to identify certain objects while learning to identify others may become untrustworthy in critical settings like self-driving cars or medical diagnosis, where lives hang in the balance.

Catastrophic forgetting can have real-world consequences. Take a customer service chatbot, for example. Initially, it answers a wide range of queries well. But after retraining to handle more specific questions, it might start messing up basic ones. This frustrates users and damages the company’s reputation.

From a business standpoint, this issue can drive up costs. Companies may need to spend extra time and money retraining AI models to recover lost knowledge. This slows down AI deployment and creates operational risks, especially where accuracy is crucial. To avoid these problems, businesses need strategies that help AI learn new things without forgetting the old, keeping performance steady and reliable.

Examples in Real-World Applications

AI is being implemented in many industries. That’s no wonder, since it helps automate tasks that require repetition, consistency, and high precision, which humans can find hard to maintain all the time. Now imagine AI systems involved in so many crucial processes forgetting even a few details.

In healthcare, AI models commonly help diagnose diseases and recommend treatments, so the consequences of catastrophic forgetting can be devastating. For instance, a model might first be trained to detect many types of medical conditions and later be retrained to specialize in one specific disease. If catastrophic forgetting occurs, the model may lose the ability to diagnose conditions other than the one it learned most recently and produce incorrect diagnoses. For LLMs that process medical text, this is especially problematic: catastrophic forgetting can lead to outdated or inaccurate information being applied in patient care, with potentially serious consequences for clinicians and patients.

In the financial sector, AI is used for tasks like fraud detection and risk assessment. Catastrophic forgetting can cause a model that was effective at detecting certain types of fraud to lose that ability after being updated with data on different types of fraud. This leaves the financial institution exposed to undetected fraud and undermines trust in the system’s effectiveness. Similarly, an LLM applied to time-series forecasting could “forget” older economic patterns that still hold when it is re-fitted on new data, and then make incorrect predictions that lead to substantial financial losses. The stakes for AI in finance are high, and failures caused by catastrophic forgetting can be costly both financially and reputationally.

Security and surveillance systems use AI-powered video analytics to recognize unusual activities or objects. Catastrophic forgetting is particularly harmful here: a system that could accurately detect certain behaviors or objects on camera may lose that capability after being trained on new data. For example, a system trained to spot anomalous behavior in a public place might start overlooking those same activities after being retrained to detect only a new kind of behavior. If left unaddressed, this failure can result in security breaches and erode trust in AI-driven surveillance systems.

The key lesson from these examples is the importance of balancing new learning with the retention of existing knowledge. Much research exists on catastrophic forgetting, so let’s explore the existing and proven solutions.


Strategies To Mitigate Catastrophic Forgetting

Catastrophic forgetting is a significant challenge in AI development and deployment. Fortunately, various strategies have been developed to mitigate this issue. These strategies focus on helping models retain previously learned information while acquiring new knowledge.

Rehearsal

Rehearsal is the simplest and one of the most powerful weapons against catastrophic forgetting. The idea is to periodically retrain the model on a mix of new data and a sample of old data, much as people review older material to keep it fresh in memory.

When using rehearsal, it’s important to carefully select a representative subset of the old data to ensure that the model continues to perform well on all previously learned tasks.
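As a minimal sketch of rehearsal, assuming a hypothetical two-task toy problem (a three-weight linear model where both tasks share the third input feature), the block below trains on task A and then trains on task B together with a replay buffer of task A examples. Because task A here has a single example, the buffer holds all of it; with real data it would hold a representative subset.

```python
# Rehearsal on a toy linear model y = w . x (hypothetical data).

def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def train(w, data, lr=0.1, steps=500):
    """Full-batch gradient descent on mean squared error over `data`."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

task_a = [([1.0, 0.0, 1.0], 1.0)]   # old task
task_b = [([0.0, 1.0, 1.0], -1.0)]  # new task

w = train([0.0, 0.0, 0.0], task_a)
replay_buffer = list(task_a)            # stored sample of old data
w = train(w, task_b + replay_buffer)    # new data mixed with replayed old data

print(f"task A loss: {mse(w, task_a):.4f}, task B loss: {mse(w, task_b):.4f}")
```

Because the replayed examples stay in the loss, gradient descent settles on weights that satisfy both tasks instead of sacrificing task A.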

The benefits are:

- simple to implement;

- effectively preserves old knowledge.

Unfortunately, this approach can quickly become expensive for very large datasets, because it requires storing and managing old data and retraining on it alongside the new data.
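One common way to bound the storage cost is a fixed-size replay buffer filled by reservoir sampling, which keeps a uniform random sample of everything seen so far without storing the full stream. The class below is a hypothetical sketch (the name `ReservoirBuffer` and its methods are ours, not from a library); integers stand in for real training examples.

```python
import random

class ReservoirBuffer:
    """Fixed-capacity replay buffer using reservoir sampling (Algorithm R).

    After n examples have been offered, each one is in the buffer with
    probability capacity / n, so memory stays constant as data streams in.
    """

    def __init__(self, capacity, seed=None):
        self.capacity = capacity
        self.items = []
        self.n_seen = 0
        self.rng = random.Random(seed)

    def add(self, item):
        self.n_seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            # Keep the new item with probability capacity / n_seen,
            # overwriting a uniformly chosen slot.
            j = self.rng.randrange(self.n_seen)
            if j < self.capacity:
                self.items[j] = item

    def sample(self, k):
        """Draw up to k stored examples to mix into a training batch."""
        return self.rng.sample(self.items, min(k, len(self.items)))

buffer = ReservoirBuffer(capacity=100, seed=0)
for example in range(10_000):   # stand-in for a stream of old examples
    buffer.add(example)
replay = buffer.sample(32)
print(len(buffer.items), "stored out of", buffer.n_seen, "seen")
```

The trade-off is that a uniform sample may under-represent rare but important cases, so some teams add per-task or per-class quotas on top of it.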

Regularization

Regularization methods, such as Elastic Weight Consolidation (EWC), aim to prevent catastrophic forgetting by penalizing large changes to weights that are important for previously learned tasks. EWC estimates how important each parameter is for past tasks (using the Fisher information) and then penalizes changes to the important parameters while the model learns a new task. This keeps old knowledge largely intact while the less important weights remain free to learn the new task.
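For reference, in the original EWC formulation, a model that has finished task A and is now trained on task B minimizes the following loss (the notation is standard, but the symbol names are our labels):

$$
\mathcal{L}(\theta) = \mathcal{L}_B(\theta) + \sum_i \frac{\lambda}{2} F_i \left(\theta_i - \theta^{*}_{A,i}\right)^2
$$

Here $\mathcal{L}_B$ is the loss on task B, $\theta^{*}_{A}$ are the weights learned for task A, $F_i$ is the diagonal of the Fisher information matrix evaluated at $\theta^{*}_{A}$ (an estimate of how much weight $i$ matters for task A), and $\lambda$ sets the strength of the penalty. Weights with large $F_i$ are expensive to move; weights with $F_i \approx 0$ can change freely.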

EWC works well for sequential learning tasks. It lets the model consolidate the weights associated with old tasks, making it less likely to overwrite them with new information.

To effectively use EWC, it’s important to properly balance the strength of regularization. Too much regularization can hinder the learning of new tasks, while too little can lead to forgetting. Additionally, EWC works best when tasks are similar to each other.
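The sketch below applies the EWC penalty to a hypothetical toy problem: a three-weight linear model with squared error, which corresponds to a Gaussian likelihood with unit variance. For that model the diagonal Fisher is simply the mean of each squared input feature, so it can be computed exactly. The tasks, `lam=10.0`, and helper names are illustrative assumptions, not tuned values.

```python
# EWC on a toy linear model y = w . x (hypothetical tasks and settings).

def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def diag_fisher(data):
    """Diagonal Fisher of a unit-variance Gaussian linear model:
    F_i = mean over inputs of x_i ** 2 (independent of w)."""
    n_features = len(data[0][0])
    return [sum(x[i] ** 2 for x, _ in data) / len(data) for i in range(n_features)]

def train(w, data, lr=0.05, steps=2000, anchor=None, fisher=None, lam=0.0):
    """Gradient descent on MSE plus, if `anchor` is given, the EWC penalty
    (lam / 2) * sum_i fisher[i] * (w[i] - anchor[i]) ** 2."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(data)
        if anchor is not None:
            for i in range(len(w)):
                grad[i] += lam * fisher[i] * (w[i] - anchor[i])
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

task_a = [([1.0, 0.0, 1.0], 1.0)]
task_b = [([0.0, 1.0, 1.0], -1.0)]

w_a = train([0.0, 0.0, 0.0], task_a)
fisher_a = diag_fisher(task_a)   # weights 1 and 3 matter for task A
w_plain = train(w_a, task_b)     # plain fine-tuning, no penalty
w_ewc = train(w_a, task_b, anchor=w_a, fisher=fisher_a, lam=10.0)

print("plain fine-tuning, task A loss:", round(mse(w_plain, task_a), 4))
print("EWC,              task A loss:", round(mse(w_ewc, task_a), 4))
print("EWC,              task B loss:", round(mse(w_ewc, task_b), 4))
```

In this toy case EWC keeps both tasks because the second weight, which task A never uses (its Fisher value is 0), is free to absorb the update. When tasks compete for the same important weights, no such free weight exists, and a larger `lam` protects task A at the expense of task B, which is the balancing act described above.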

This technique is favored because it:

- does not require storing old data;

- effectively reduces the risk of forgetting related tasks.

However, it is not suitable for cases where the new tasks are significantly different from the old tasks. It also requires careful tuning of regularization parameters.

Architectural Changes

Another approach to tackling catastrophic forgetting is to change the network’s architecture. Techniques like Progressive Neural Networks (PNNs) or dynamically expandable networks allocate new resources, such as new neurons or layers, for learning new tasks while keeping the original structure and preserving the existing weights, so the network does not forget how to perform old tasks. This way, new learning does not overwrite previously learned knowledge.

Be aware, however, that architectural changes can be hard to implement and may require a lot of computational power. Nonetheless, they can be very powerful when the model has to learn a broad range of tasks over time.

Therefore, the advantages of this strategy include:

- being highly effective at preserving old knowledge;

- handling a broad range of tasks.

And the weaknesses are:

- complex to implement;

- requires more computational resources.
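A heavily simplified sketch of the progressive-network idea follows. Assumptions, all ours: each “column” has one tanh hidden layer with random, fixed weights, and only the active column’s linear output head is trained; that head also reads the hidden units of earlier, frozen columns (the lateral connections). In the published method the whole new column, including lateral adapters at every layer, is trained by backpropagation. The class names and tasks are hypothetical.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

class Column:
    """One column: a tanh hidden layer plus a linear output head that reads
    this column's hidden units and those of all earlier columns."""

    def __init__(self, n_in, n_hidden, n_lateral, rng):
        self.W = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
        self.v = [0.0] * (n_hidden + n_lateral)
        self.frozen = False

    def hidden(self, x):
        return [math.tanh(dot(row, x)) for row in self.W]

class ProgressiveNet:
    def __init__(self, n_in, n_hidden, seed=0):
        self.n_in, self.n_hidden = n_in, n_hidden
        self.rng = random.Random(seed)
        self.columns = []

    def add_column(self):
        """Freeze all existing columns and add a new one; returns its task id."""
        for col in self.columns:
            col.frozen = True
        n_lateral = self.n_hidden * len(self.columns)
        self.columns.append(Column(self.n_in, self.n_hidden, n_lateral, self.rng))
        return len(self.columns) - 1

    def features(self, task, x):
        feats = self.columns[task].hidden(x)
        for col in self.columns[:task]:   # lateral inputs from frozen columns
            feats += col.hidden(x)
        return feats

    def predict(self, task, x):
        return dot(self.columns[task].v, self.features(task, x))

    def train(self, task, data, lr=0.05, epochs=200):
        """SGD on squared error, updating only this column's output head."""
        col = self.columns[task]
        if col.frozen:
            raise ValueError("column is frozen; add a new column instead")
        for _ in range(epochs):
            for x, y in data:
                f = self.features(task, x)
                err = dot(col.v, f) - y
                col.v = [vi - lr * 2 * err * fi for vi, fi in zip(col.v, f)]

net = ProgressiveNet(n_in=2, n_hidden=8)
task1 = [([0.0, 1.0], 1.0), ([1.0, 0.0], -1.0)]   # hypothetical task 1
task2 = [([0.0, 1.0], -1.0), ([1.0, 0.0], 1.0)]   # hypothetical task 2, opposite targets

t1 = net.add_column()
net.train(t1, task1)
before = [net.predict(t1, x) for x, _ in task1]

t2 = net.add_column()          # freezes column t1
net.train(t2, task2)
after = [net.predict(t1, x) for x, _ in task1]
print("task 1 predictions unchanged:", before == after)
```

The guarantee comes from the structure, not from training: no update ever touches a frozen column, so task 1 outputs are bit-for-bit identical even though task 2 has contradictory targets. The cost is that the network grows with every task.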

To summarize, when to use each strategy:

- Rehearsal: Resources are available to handle both old and new data together.

- Regularization Techniques: Tasks are related, and there’s a need to balance old and new learning.

- Architectural Changes: The AI needs to handle many different tasks over time.

By understanding and applying these strategies, developers can significantly reduce the impact of catastrophic forgetting, ensuring that AI models remain robust, reliable, and capable of learning over time.

Best Practices in AI Training to Prevent Forgetting

Preventing catastrophic forgetting in AI systems requires a combination of well-planned training strategies, continuous learning, and the right tools. Artificial neural networks, inspired by the human brain, learn by adjusting connections between their “neurons” based on data. To avoid catastrophic forgetting, it’s crucial to plan training carefully.

- Sequential Learning with Rehearsal: When training on multiple tasks, regularly retrain the model on previous tasks alongside new ones. This keeps old information alive and lets the model keep performing previously learned tasks.

- Balanced Data Representation: Use a mix of old and new data during training to prevent the model from becoming biased towards recent data.
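A minimal sketch of balanced data representation: a generator that builds each training batch from shuffled new examples plus a fixed share of randomly drawn old ones. The function name and the `replay_fraction` parameter are hypothetical; the right fraction is a tuning choice, not a known constant.

```python
import random

def mixed_batches(new_data, old_buffer, batch_size=32, replay_fraction=0.5, seed=0):
    """Yield batches mixing new examples with replayed old examples.

    `replay_fraction` of each batch (rounded down) comes from `old_buffer`;
    the rest are new examples, each used once per pass, in shuffled order.
    """
    if not 0 <= replay_fraction < 1:
        raise ValueError("replay_fraction must be in [0, 1)")
    rng = random.Random(seed)
    n_old = int(batch_size * replay_fraction) if old_buffer else 0
    n_new = batch_size - n_old
    order = list(new_data)
    rng.shuffle(order)
    for start in range(0, len(order), n_new):
        batch = order[start:start + n_new]
        batch += rng.sample(old_buffer, min(n_old, len(old_buffer)))
        rng.shuffle(batch)
        yield batch

new = [("new", i) for i in range(100)]
old = [("old", i) for i in range(1000)]
batches = list(mixed_batches(new, old, batch_size=20, replay_fraction=0.25))
print(len(batches), "batches; sizes:", [len(b) for b in batches])
```

Keeping the ratio fixed per batch, rather than appending old data at the end of an epoch, means every gradient step sees some old data, which is what stops the model from drifting toward the recent distribution.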

Continuous learning helps models stay effective by adapting to new information without losing old knowledge.

- Incremental Learning: Update your models incrementally as new data comes in (important for dynamic environments) instead of retraining from scratch. Combined with rehearsal or regularization, this keeps the model up to date without wiping its memory.

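- Elastic Weight Consolidation: Penalize changes to the weights that were most important for earlier tasks, so that new training does not overwrite them.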

Tools to Support Best Practices in AI Training for Preventing Catastrophic Forgetting

- TensorFlow and PyTorch: TensorFlow and PyTorch are popular frameworks for training neural networks. They provide the building blocks, such as custom loss terms, parameter freezing, and flexible data pipelines, for implementing continual learning, regularization techniques like EWC, and other strategies to mitigate forgetting.

- OpenAI’s Gym and RLlib: For reinforcement learning scenarios, where AI models learn by interacting with environments, tools like OpenAI’s Gym and RLlib provide robust platforms for training and testing models with continual learning and other advanced techniques.

- Transfer Learning Frameworks: Transfer learning, which adapts a pre-trained model to a new task, can also help limit forgetting. Pre-trained models from libraries such as Keras and Hugging Face Transformers let you fine-tune existing architectures for specific tasks without retraining the entire network; keeping most of the pre-trained layers frozen limits how much previously learned knowledge can be overwritten.

By combining careful training plans, regular retraining, and the right tools, developers can keep their models reliable even in a fast-evolving environment.

Future Trends and Research Directions

The field of AI training is rapidly evolving, with new solutions and innovations emerging to address challenges like catastrophic forgetting and to improve the overall efficiency and adaptability of AI models.

Continual learning is one of the most exciting areas. Unlike traditional training, which happens in isolated stages, continual learning lets models learn from fresh streams of data without forgetting previously acquired knowledge. This is critical for AI systems that operate in the real world, where they must constantly adapt to new information as it arrives. Methods like Elastic Weight Consolidation (EWC) and memory-based approaches such as experience replay are at the forefront of this work, balancing the retention of old knowledge with the acquisition of new knowledge.

Another promising direction is meta-learning, which people in the field of AI sometimes call “learning to learn.” Meta-learning algorithms are designed to adapt quickly to new tasks by drawing on experience from previous ones. This could significantly reduce the time and data needed to train AI models on novel tasks, which is especially valuable when large datasets are difficult or expensive to obtain. Meta-learning also offers hope for reducing catastrophic forgetting, since meta-learners are trained to generalize across tasks rather than to a single one.

Several research areas are gaining significant attention as the AI community seeks to overcome the limitations of current training methods.

- Neurosymbolic AI: The combination of neural networks and symbolic reasoning, where the learning from data is supplemented with logical rules. Integrating structured knowledge may be able to help reduce forgetting.

- Self-Supervised Learning: Involves training models on unlabeled data, helping them learn useful representations without needing extensive labeled datasets. Such representations may also make continual updates less prone to forgetting.

- Robustness and Explainability: As AI is used in critical areas, there’s a growing focus on making models more robust and easy to understand. This ensures AI can handle unexpected inputs and that its decisions can be clearly explained.

Looking ahead, the landscape of AI training is expected to be shaped by several key trends:

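- Integrated Continual Learning: Continual learning will increasingly be built into training pipelines from the start rather than added afterward.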

- Focus on Generalization: AI training will shift towards improving how models generalize across different tasks, using techniques like meta-learning and neurosymbolic AI.

- AI-Human Collaboration: AI development will increasingly involve collaboration with human experts, ensuring models are both effective and ethically sound.

- Scalable Training: As models grow more complex, there will be a push for more efficient training methods that scale well with large datasets and complex tasks.

Conclusion

As AI continues to evolve, addressing challenges like catastrophic forgetting is crucial for building robust, adaptable systems. Researchers and practitioners are encouraged to explore and implement strategies such as continual learning, meta-learning, and architectural innovations to ensure AI models remain reliable and effective over time. By staying informed on emerging trends and actively contributing to ongoing research, the AI community can drive forward the development of smarter, more resilient systems. This is the time to expand on what AI can do—it’s our opportunity to take part in shaping intelligent technology for years down the line.

Reach out to us to develop cutting-edge AI solutions that leverage continual learning and meta-learning, ensuring your systems remain adaptive, resilient, and ahead of the curve.

FAQ

What is the difference between catastrophic forgetting and regular forgetting in AI?

Catastrophic forgetting is when an AI model abruptly loses much of its ability to perform previously learned tasks after being trained on new ones. Regular forgetting is a gradual decline in performance over time, which is neither as extreme nor as sudden.

Can catastrophic forgetting occur in all types of machine learning models?

Catastrophic forgetting mostly happens in neural networks and deep learning models, because they store knowledge in weights that are shared across tasks and updated together during training. It can also occur in other types of models, such as decision trees or support vector machines, when they are updated on new data, but it is less common.

How can you identify if an AI system is experiencing catastrophic forgetting?

You can spot catastrophic forgetting if there’s a sudden drop in performance on tasks the model used to handle well, or if it gives inconsistent results. Regularly testing the model on old tasks can help detect this issue.
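One way to make regular testing concrete is to record an accuracy matrix, where entry `acc[t][j]` is the accuracy on task `j` measured after training stage `t`, and compute a forgetting measure commonly used in continual-learning research: for each old task, its best earlier accuracy minus its current accuracy. The function names, the 0.05 threshold, and the accuracy values are hypothetical.

```python
def forgetting_per_task(acc):
    """acc[t][j]: accuracy on task j after training stage t (defined for j <= t).

    For every task except the last, return the drop from its best accuracy
    before the final stage to its accuracy after the final stage.
    """
    final = acc[-1]
    last = len(acc) - 1
    return [max(acc[t][j] for t in range(j, last)) - final[j] for j in range(last)]

def average_forgetting(acc):
    drops = forgetting_per_task(acc)
    return sum(drops) / len(drops) if drops else 0.0

def forgotten_tasks(acc, threshold=0.05):
    """Indices of old tasks whose accuracy dropped by more than `threshold`."""
    return [j for j, d in enumerate(forgetting_per_task(acc)) if d > threshold]

# Hypothetical accuracies from re-testing old tasks after each training stage.
acc = [
    [0.95],
    [0.90, 0.93],
    [0.60, 0.85, 0.94],
]
print(f"average forgetting: {average_forgetting(acc):.3f}")
print("tasks to investigate:", forgotten_tasks(acc))
```

Tracking the drop from the best earlier score, rather than from the most recent one, catches forgetting that accumulates gradually over several updates.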

Are there specific industries more affected by catastrophic forgetting?

Industries like healthcare, finance, and autonomous driving, which need continuous learning and adaptation, are more affected by catastrophic forgetting. These sectors require AI models to update often without losing past knowledge.

What role do hyperparameters play in preventing catastrophic forgetting?

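Hyperparameters like the learning rate, batch size, and regularization strength help balance learning new information against retaining old knowledge. For example, a smaller learning rate when training on new data limits how far the weights move from their previous values. Proper tuning of these settings can help the model keep old knowledge while learning new information.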
