By April 2025, the FDA had approved over 340 AI tools in radiology, covering the detection of cancers, strokes, and other critical conditions. Approximately two‑thirds of U.S. radiology departments now use AI.

However, when it comes to artificial intelligence in oncology, the promise of AI often collapses in practice. Models trained on world-class datasets break down when faced with dirty lab results, disconnected EHR flows, and untraceable registry inputs. Instead of empowering clinicians, AI modules end up delayed due to compliance gaps and integration failures.

If you want to prevent your cancer treatment technology from becoming another cautionary tale, read this article. You’ll find out why AI fails to scale in cancer tech, which hidden integration problems require immediate action, and how to ensure that your AI software development efforts deliver usable and trustworthy AI models.

Evidence of Success: How Artificial Intelligence in Oncology Delivers Results

AI in oncology has already shown measurable success across several domains. These breakthroughs prove that the potential of AI in healthcare software development is real, even if scaling it into everyday clinical workflows remains a challenge.

FDA-Cleared AI Pathology Tools

The U.S. Food and Drug Administration has cleared multiple AI-powered pathology solutions that assist clinicians in detecting malignancies. These cancer technologies help flag high-risk samples, reduce oversight errors, and support pathologists in handling rising diagnostic volumes. Their regulatory clearance signals that AI can meet strict safety and reliability standards in oncology.

For instance, the FDA granted Breakthrough Device designation to Paige PanCancer Detect in April 2025. Notably, this marks the first time such a designation was granted to an AI tool capable of flagging both common and rare cancers across a range of anatomical sites.

Another significant milestone was the Ibex Prostate Detect oncology software, which received FDA 510(k) clearance in early 2025. This AI-powered digital pathology solution analyzes whole-slide images from prostate core needle biopsies, generating heatmaps to highlight areas of concern. In validation studies cited by Urology Times, the system achieved a 99.6% positive predictive value (PPV) for heatmap accuracy and helped identify 13% of cancers initially missed by pathologists.

Improved Accuracy in Clinical Imaging and Screening

AI-powered cancer technologies have demonstrated accuracy equal to or even surpassing that of human radiologists in specific detection tasks, from mammography to lung cancer CT scans. By reducing false positives and false negatives, AI-driven imaging supports earlier interventions and decreases the workload of already stretched oncology teams.

For example, a national screening trial in Germany revealed that AI‑augmented mammography increased cancer detection by 17.6% without increasing false positives, which highlights AI’s precision and efficiency. Achieving these results at scale, however, requires that care coordination software use clean data pipelines and interoperability frameworks such as a proven FHIR integration solution.

Accelerating Early Detection

Medical researchers confirm that AI powers radiomics, deep learning, and multimodal fusion across CT, MRI, and PET to detect tumors earlier, classify stages more precisely, and guide personalized therapy.

Genomic sequencing, biomarker discovery, and liquid biopsy analysis have benefited from machine learning models that spot cancer signatures earlier than traditional methods. Detecting cancer at earlier stages not only improves patient survival rates but also reduces treatment costs, making AI a critical enabler of value-based oncology care.

AI in Oncology: Big Hype, Low Usage

Despite the investment and media attention, artificial intelligence in oncology rarely makes it past pilot projects or demo videos. What holds AI back isn’t the science, but the fact that its outputs often lack clarity, context, and credibility for clinicians.

Why Most AI Cancer Treatment Tools Remain Unused in Real Workflows

Studies show that while radiology and imaging AI adoption is growing, oncology SaaS platforms lag far behind, often because leaders don’t have a complete understanding of how to improve interoperability in healthcare.

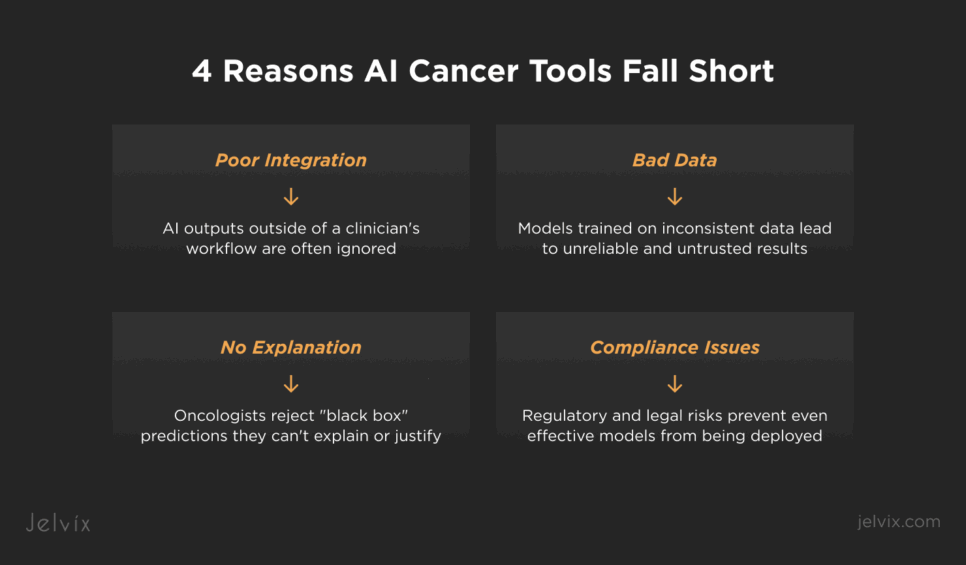

Lack of integration with EHRs, registries, and lab systems

If the outputs of AI chronic care management software live in a separate dashboard, clinicians ignore them. Without direct embedding into EHR interfaces, pathology viewers, or cancer registry workflows, even accurate models remain disconnected from daily decision-making.

Inconsistent data pipelines

Genomic, pathology, and lab results often arrive as PDFs, faxes, or unstructured text. Without preprocessing and normalization, AI models produce biased or incomplete results that clinicians quickly learn not to trust.
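
To make the normalization step concrete, here is a minimal Python sketch of a gate that converts lab values to one canonical unit and refuses to guess when an input cannot be parsed. The analyte table, the conversion factors, and the `normalize_lab` helper are illustrative assumptions, not part of any specific lab system or standard.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical table: canonical unit per analyte plus conversion factors into it.
CANONICAL_UNITS = {
    "hemoglobin": ("g/dL", {"g/dL": 1.0, "g/L": 0.1}),
}


@dataclass
class LabResult:
    analyte: str
    value: float
    unit: str


def normalize_lab(analyte: str, raw_value: str, unit: str) -> Optional[LabResult]:
    """Return the result in its canonical unit, or None if it cannot be trusted."""
    name = analyte.strip().lower()
    spec = CANONICAL_UNITS.get(name)
    if spec is None:
        return None  # unknown analyte: send to manual review instead of guessing
    canonical_unit, factors = spec
    factor = factors.get(unit.strip())
    if factor is None:
        return None  # unit we do not know how to convert
    try:
        # Accept a decimal comma, which is common in free-text reports.
        value = float(raw_value.strip().replace(",", "."))
    except ValueError:
        return None  # e.g. "n/a" or OCR debris
    return LabResult(name, round(value * factor, 2), canonical_unit)


converted = normalize_lab("Hemoglobin", "135", "g/L")
rejected = normalize_lab("hemoglobin", "n/a", "g/dL")
```

Returning `None` rather than a best-effort number is a deliberate choice in this sketch: a missing value is visible to the clinician, while a silently wrong one is not.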

Black box predictions without clinical context

Oncologists reject outputs they can’t explain to patients or justify in tumor board meetings. Predictions without confidence intervals, traceable inputs, or visual explanations are sidelined regardless of technical accuracy. This is why explainable AI in healthcare is essential for adoption in oncology workflows.

Compliance uncertainty stalls rollouts

Even when models perform well in pilots, deployment halts if data lineage, consent management, or audit trails don’t meet HIPAA, GDPR, or FDA requirements. Legal risk outweighs experimental benefit.

The Real Needs of Oncologists Compared to Current AI Tools

Oncologists are often opposed to chronic care management apps that create extra work without adding clinical value. To make innovation usable at the point of care, it’s critical to understand what oncologists truly expect from AI solutions versus what most vendors actually deliver.

AI that supports decisions rather than replacing judgment

Clinicians want tools that surface anomalies, highlight cases at risk, or reduce manual workload, while leaving final calls to the physician. Assistive AI earns adoption, while prescriptive AI often fails.

Insights embedded into existing clinical workflows

Outputs must appear in the same system that an oncology data specialist already uses for charting, ordering tests, or reviewing images. Every additional click reduces adoption.

Transparent and auditable predictions

Clinicians want to know why a case was flagged. That means audit trails, version control, and clear visual or statistical explanations that make AI recommendations defensible in front of peers and regulators.

Relevance to immediate oncology outcomes

Oncologists aren’t looking for abstract metrics or theoretical forecasts. Instead, they want concrete support in diagnosis, treatment planning, patient stratification, and long-term follow-up. Anything outside of these core areas is unlikely to gain traction.

The Hidden Threat in Chronic Care Management Solutions: Dirty Data and Broken Pipelines

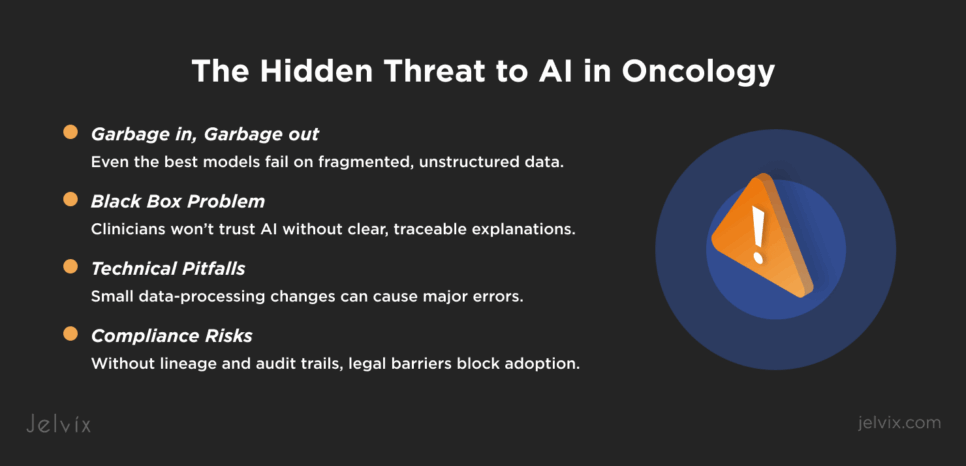

Artificial intelligence in oncology is only as strong as the data and pipelines feeding it. While research papers showcase state-of-the-art accuracy, production environments tell a different story. Here, fragmented inputs, unstructured lab files, and broken integrations destroy even the most advanced models.

Real-World Examples of AI Misfunction

AI failures in oncology are often subtle but dangerous: the model outputs confident results that are completely wrong. These breakdowns usually trace back to messy inputs or broken pipelines rather than the AI itself.

According to a 2025 study, the method used for identifying tissue in digital histopathology had downstream effects on cancer grading AI. When classical thresholding was used instead of more robust AI-based segmentation, it missed portions of the tissue entirely, resulting in clinically significant grading errors in about 3.5% of malignant slides.

At the same time, a European Radiology study demonstrated that AI’s ability to classify nodules in lung CT was heavily influenced by the choice of reconstruction kernel. While nodule detection rates remained consistent, type classification accuracy varied dramatically depending on image processing parameters, highlighting how subtle technical variations can mislead AI.

Finally, AI can fail to flag its own errors in virtual pathology. In virtual staining, a UCLA-led team developed a secondary AI model (AQuA) that detects critical hallucinations in AI-generated images. This control model achieved 99.8% accuracy in detecting errors that even expert pathologists missed, underscoring how primary AI models can confidently produce misleading artifacts without oversight.

Why “Garbage In, Garbage Out” Is More True Than Ever

In oncology, flawed inputs don’t just reduce accuracy; they compromise clinical confidence. Even a single high-confidence mistake can make clinicians stop trusting the system.

Small lapses in segmentation or data partitioning can lead to serious misclassifications that only become visible in real-world use. Imaging AI that looks reliable in validation may fail when confronted with small differences in scanning protocols or reconstruction methods. Virtual staining research has shown that even highly advanced AI can create convincing but false images, and without additional oversight, these errors go unnoticed by pathologists.

The core lesson for oncology SaaS leaders is that building trustworthy AI is less about algorithmic sophistication and more about ensuring data quality, clinical context alignment, and robust validation checkpoints across the entire pipeline.
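
One possible form of such a validation checkpoint is a guard that compares a study's acquisition metadata with the settings the model was validated on before any inference runs. The Python sketch below is a simplified illustration; the metadata keys, the allowed values, and the `check_acquisition` helper are assumptions and do not correspond to a particular scanner or DICOM toolkit.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class ValidatedEnvelope:
    """Acquisition settings the model was validated on (hypothetical values)."""
    allowed: Dict[str, FrozenSet[str]]


def check_acquisition(metadata: Dict[str, str], envelope: ValidatedEnvelope) -> List[str]:
    """Return the reasons a study falls outside the validated envelope (empty if none)."""
    problems = []
    for key, allowed_values in envelope.allowed.items():
        actual = metadata.get(key)
        if actual is None:
            problems.append(f"missing {key}")
        elif actual not in allowed_values:
            problems.append(f"{key}={actual} not in validated set")
    return problems


envelope = ValidatedEnvelope({
    "reconstruction_kernel": frozenset({"soft", "standard"}),
    "slice_thickness_mm": frozenset({"1.0", "1.25"}),
})
study = {"reconstruction_kernel": "sharp", "slice_thickness_mm": "1.0"}
problems = check_acquisition(study, envelope)
```

A non-empty `problems` list can route the study to a human reader, or attach a warning to the model output, instead of letting the model answer confidently on data it was never validated against.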

Addressing the Traceability Trap

Black-box models may win benchmarks, but they rarely survive in oncology workflows. Clinicians need to explain every decision they make, whether in front of a patient, a tumor board, or a regulatory body. When an AI tool produces a risk score or prediction without clear reasoning, it is usually ignored. In oncology, trust depends not just on accuracy but on the ability to trace how an output was generated and which data sources were used.

This is where data lineage becomes critical. Oncology data flows through multiple layers, from cancer registry management systems to hospital EHR systems, then into machine learning pipelines. Each transition introduces risks of data loss, mislabeling, or contextual gaps. Without a transparent chain of custody, platforms cannot prove where a particular input came from or how it was transformed before reaching the model.

For example, a risk stratification model may base its predictions on genomic results, but if those results were uploaded as scanned PDFs into an EHR and then parsed with imperfect OCR, the downstream AI is already working with compromised data. If an oncologist later asks why a patient was flagged as high risk, the platform must be able to show exactly which data fields contributed and how they were processed. Without this traceability, the model becomes impossible to defend clinically and legally, underscoring that clinical interoperability is a must for AI adoption.
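
As a rough illustration of what a chain of custody can look like in code, the Python sketch below attaches a history of hashed transformation steps to a value as it moves through the pipeline. The class names, step names, and source identifier are hypothetical, and a production system would persist this history in an audit store rather than in memory.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, List


def fingerprint(payload: str) -> str:
    """SHA-256 of the payload, used to link one step's output to the next step's input."""
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class LineageStep:
    step: str          # e.g. "trim", "collapse_whitespace"
    tool_version: str
    input_hash: str
    output_hash: str


@dataclass(frozen=True)
class TracedValue:
    payload: str
    source: str  # where the value entered the pipeline
    history: List[LineageStep] = field(default_factory=list)

    def apply(self, step: str, tool_version: str,
              transform: Callable[[str], str]) -> "TracedValue":
        """Run a transformation and record what went in and what came out."""
        new_payload = transform(self.payload)
        entry = LineageStep(step, tool_version,
                            fingerprint(self.payload), fingerprint(new_payload))
        return TracedValue(new_payload, self.source, self.history + [entry])


def chain_is_intact(value: TracedValue) -> bool:
    """Each step must consume what the previous one produced, ending at the payload."""
    steps = value.history
    links_ok = all(a.output_hash == b.input_hash for a, b in zip(steps, steps[1:]))
    tail_ok = not steps or steps[-1].output_hash == fingerprint(value.payload)
    return links_ok and tail_ok


raw = TracedValue("  BRCA1   c.68_69delAG ", source="ehr:scanned_pdf/example")
clean = (raw.apply("trim", "0.1", str.strip)
            .apply("collapse_whitespace", "0.1", lambda s: " ".join(s.split())))
```

With a record like this, the question "why was this patient flagged?" can be answered by walking `clean.history` back to `clean.source`, and `chain_is_intact` exposes any value that was altered outside a recorded step.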

Oncology SaaS leaders who want artificial intelligence in oncology to be trusted must therefore build audit trails, version control, and lineage mapping directly into their integration architecture.

The Importance of Compliance in AI Cancer Treatment Technology

In oncology, compliance cannot be treated as paperwork done after development. Regulations such as GDPR in Europe, HIPAA and ONC rules in the U.S., and the EU MDR for medical devices govern not only how patient data is stored but also how it flows through AI pipelines. Every input and every output must meet these standards, or the platform risks regulatory delays, financial penalties, and loss of market access.

For example, GDPR requires explicit consent management and the right to data erasure. HIPAA mandates strict safeguards on protected health information.

The ONC interoperability rules enforce audit trails and accessible APIs for patient data exchange, while the FHIR interoperability standard defines the structured resources and APIs through which data can flow consistently across oncology systems.

MDR demands proof of clinical safety and post-market surveillance for AI classified as medical devices. These are not isolated checkboxes. They directly shape how data can be collected, transformed, and used by AI models.
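
To show how such rules can shape the pipeline itself, the following Python sketch places a consent and erasure gate in front of any AI workload. It is a deliberately simplified model: the purpose labels, the `PatientRecord` shape, and the `admit_for_purpose` helper are assumptions, and real consent management is considerably richer than a set of strings.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Set


@dataclass(frozen=True)
class PatientRecord:
    patient_id: str
    consented_purposes: FrozenSet[str]  # e.g. {"treatment", "model_training"}
    payload: str


def admit_for_purpose(records: Iterable[PatientRecord], purpose: str,
                      erased_ids: Set[str]) -> List[PatientRecord]:
    """Keep records whose patient consented to `purpose` and has not requested erasure."""
    return [
        r for r in records
        if purpose in r.consented_purposes and r.patient_id not in erased_ids
    ]


records = [
    PatientRecord("p1", frozenset({"treatment", "model_training"}), "..."),
    PatientRecord("p2", frozenset({"treatment"}), "..."),
    PatientRecord("p3", frozenset({"model_training"}), "..."),
]
# p3 consented earlier but has since requested erasure.
admitted = admit_for_purpose(records, "model_training", erased_ids={"p3"})
```

Running the gate at the pipeline entrance, rather than inside each model, means every downstream component inherits the same consent decision.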

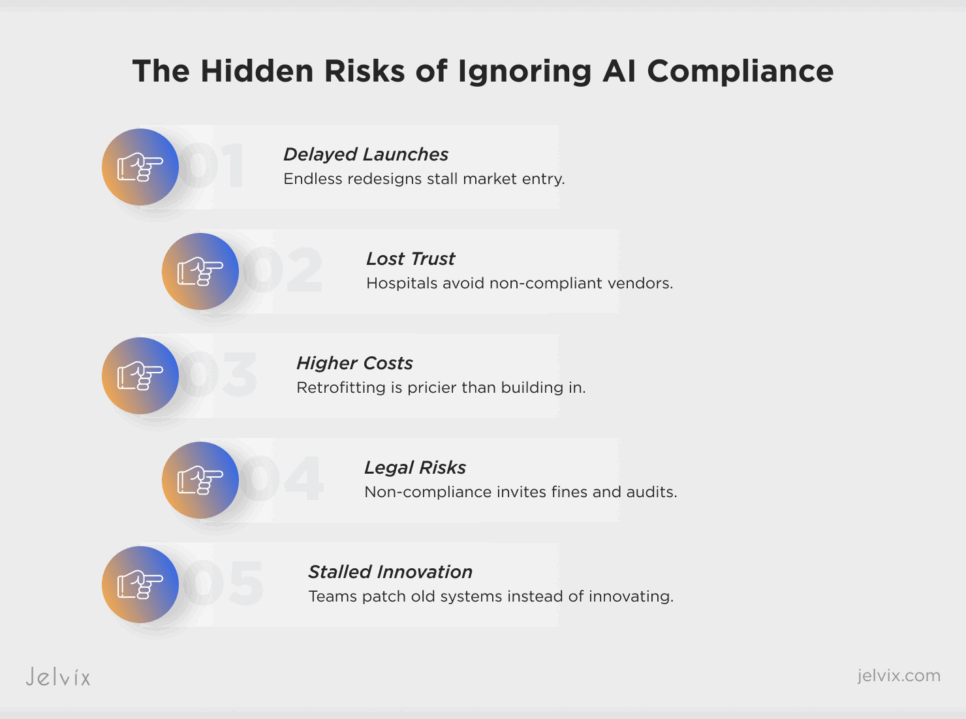

How the Lack of Integrated Compliance Stalls Feature Deployment

When compliance is bolted on at the end of development, features stall. A new risk prediction tool may technically work, but if the pipeline cannot demonstrate where the data originated, how consent was obtained, and whether outputs can be explained, it will never reach production. The delay does not come from regulators alone. Clinical buyers and hospital compliance officers routinely block the adoption of cancer technology advancements when traceability and auditability are missing.

Delayed Market Entry

Features that fail compliance reviews are forced into repeated cycles of redesign and revalidation. Instead of launching on schedule, platforms lose months or even years of potential market traction.

Lost Customer Trust

Hospitals and cancer centers are reluctant to partner with vendors who cannot prove compliance readiness. One failed rollout is enough to damage credibility and slow down future deals.

Increased Cost of Remediation

Retrofitting compliance into a product after launch costs significantly more than building it into the pipeline from the start. Engineering teams are forced to rebuild data flows, redesign audit trails, and re-certify models—burning time and budget.

Higher Regulatory Risk

Deploying without compliance certainty opens the door to penalties, audits, or even product withdrawals. In oncology, where regulations are strict and patient safety is paramount, this risk is magnified.

Slower Innovation Cycle

When compliance fixes dominate the roadmap, innovation stalls. Teams that should be developing new AI modules or trial-matching engines spend their time patching old pipelines to pass regulatory checks.

What Clinicians Really Need From AI in Oncology

For oncology SaaS platforms, success does not come from adding more algorithms. It comes from designing AI that fits seamlessly into the workflows clinics already rely on. The mistake many vendors make is creating impressive dashboards that live outside the systems oncologists use every day. Even if the model is accurate, an extra login or disconnected view ensures low adoption.

Embedding into Existing EHR Workflows

The first priority is embedding AI outputs directly into existing EHR workflows. If a clinician is reviewing pathology results in the EHR, the AI-generated insights must appear in the same view, in real time, without switching applications. The less friction, the more likely the tool is to be trusted and used.

Supporting Decision-Making with Comprehensive UX

Equally important is designing a user experience that supports decision-making rather than adding to dashboard fatigue. Oncology professionals do not want to sift through endless visualizations or irrelevant predictions. They need clear, context-aware prompts: which patient requires urgent follow-up, which genomic result changes a treatment plan, which registry data indicates a compliance risk. A well-designed UX reduces noise and surfaces the exact information needed at the right moment.

For SaaS leaders, the challenge is not building more models but building the right delivery mechanisms. Artificial intelligence in oncology that is invisible in the workflow yet indispensable in decision-making is the kind that survives in production.

The Jelvix Blueprint: Integration That Makes AI Work

At Jelvix, we see the same pattern across oncology SaaS platforms: the AI models are rarely the weak link. The real challenge lies in connecting disparate systems, cleaning fragmented data, and creating pipelines that can scale without losing compliance or clinician trust. The platforms that succeed are the ones that treat integration as their core product strategy.

ETL Connectors and Cancer Registry Management Pipelines

The foundation starts with ETL connectors and cancer registry pipelines. Every oncology dataset—whether it comes from pathology labs, imaging centers, or registries—must be extracted, normalized, and transformed into a structure that AI can process. Without reliable ETL pipelines, even the best models will deliver inconsistent results.
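
The extract, normalize, and transform flow described above could be sketched in Python as follows. The source names, field mappings, and required fields are hypothetical placeholders chosen for illustration; a real connector would also handle dates, code systems, and deduplication.

```python
from typing import Dict, List, Tuple

# Hypothetical per-source field mappings onto one common schema.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "pathology_lab": {"pt_id": "patient_id", "dx_code": "diagnosis_code",
                      "collected": "observed_on"},
    "registry": {"PatientID": "patient_id", "ICDO3": "diagnosis_code",
                 "DateOfDx": "observed_on"},
}
REQUIRED = ("patient_id", "diagnosis_code", "observed_on")


def to_common_schema(source: str, row: Dict[str, str]) -> Dict[str, str]:
    """Rename source-specific fields and trim whitespace; empty fields are dropped."""
    mapping = FIELD_MAPS[source]
    record = {target: row[src].strip() for src, target in mapping.items() if row.get(src)}
    record["source_system"] = source  # keep provenance on every record
    return record


def run_etl(batches: Dict[str, List[Dict[str, str]]]) -> Tuple[List[dict], List[dict]]:
    """Return (loaded, quarantined); incomplete records never reach the model."""
    loaded, quarantined = [], []
    for source, rows in batches.items():
        for row in rows:
            record = to_common_schema(source, row)
            missing = [name for name in REQUIRED if not record.get(name)]
            if missing:
                quarantined.append({"record": record, "missing": missing})
            else:
                loaded.append(record)
    return loaded, quarantined


batches = {
    "pathology_lab": [{"pt_id": " 1001 ", "dx_code": "C50.9", "collected": "2025-03-02"}],
    "registry": [{"PatientID": "1002", "ICDO3": "", "DateOfDx": "2025-01-15"}],
}
loaded, quarantined = run_etl(batches)
```

Quarantining incomplete rows with an explicit reason, instead of dropping or imputing them, keeps the gap visible to the data team and out of the model's inputs.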

Real-Time ML Data Preparation, Explainability Layer, and Audit-Ready AI

On top of the foundation layer comes real-time machine learning data preparation, explainability, and audit readiness. AI predictions should never be delivered as black-box outputs. Each score or recommendation must carry metadata that explains which data points were used, which model version produced it, and what confidence thresholds apply. This explainability layer not only builds trust with clinicians but also ensures that every prediction is defensible in front of regulators.
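
A minimal Python sketch of such a metadata-carrying prediction is shown below. The field names, the version string, and the contribution values are illustrative assumptions; in practice the contributions would come from whatever attribution method the model team has validated.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ExplainedPrediction:
    patient_id: str
    score: float
    model_version: str
    flag_threshold: float
    contributions: Dict[str, float]  # input field -> signed contribution to the score

    @property
    def flagged(self) -> bool:
        """A case is flagged when the score reaches the documented threshold."""
        return self.score >= self.flag_threshold

    def top_factors(self, n: int = 3) -> List[Tuple[str, float]]:
        """The n inputs with the largest absolute contribution, strongest first."""
        ranked = sorted(self.contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
        return ranked[:n]


prediction = ExplainedPrediction(
    patient_id="p1",
    score=0.82,
    model_version="risk-model 1.4.0",  # hypothetical version label
    flag_threshold=0.7,
    contributions={"tumor_grade": 0.4, "age": 0.05, "ki67": -0.1},
)
```

Because the object is immutable and self-describing, it can be written to an audit log as-is and rendered next to the score in the clinician's view.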

Clinical Trial AI Modules and Patient-Matching Engines

Finally, integration must extend beyond treatment workflows into clinical research and patient engagement. AI modules for trial matching, eligibility screening, and longitudinal patient tracking can only function when data streams flow seamlessly across registries, EHRs, and genomic datasets. Platforms that enable this kind of patient-matching intelligence create value not just for clinicians but also for pharma sponsors and research networks.
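
As a simplified illustration of eligibility screening, the Python sketch below evaluates a patient against labeled criteria and reports which ones are unmet. The trial identifier, the criteria, and the patient fields are invented for the example, and missing data is treated as an unmet criterion by assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Criterion = Tuple[str, Callable[[dict], bool]]


@dataclass
class Trial:
    trial_id: str
    criteria: List[Criterion]


def screen(patient: dict, trial: Trial) -> Tuple[bool, List[str]]:
    """Return (eligible, unmet criterion labels) for one patient and one trial."""
    unmet = []
    for label, test in trial.criteria:
        try:
            ok = test(patient)
        except KeyError:
            ok = False  # the data needed to evaluate this criterion is missing
        if not ok:
            unmet.append(label)
    return (not unmet, unmet)


trial = Trial("TRIAL-EXAMPLE", [
    ("age >= 18", lambda p: p["age"] >= 18),
    ("HER2 positive", lambda p: p["her2"] == "positive"),
    ("ECOG <= 1", lambda p: p["ecog"] <= 1),
])
patient = {"age": 54, "her2": "positive"}  # ECOG status never reached the platform
eligible, unmet = screen(patient, trial)
```

Returning the unmet labels alongside the decision shows whether a patient was excluded on clinical grounds or simply because a data stream from the registry, EHR, or genomic system never arrived.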

This blueprint is about building a data and compliance infrastructure that allows artificial intelligence in oncology to be deployed at scale, trusted by clinicians, and validated by regulators. That is what separates oncology SaaS platforms that remain in pilot mode from those that define the next decade of cancer tech.

If you’re looking to develop a chronic care management platform that performs well in production or improves data quality and integration in your existing systems, contact us. Jelvix integration experts will help you design the data pipelines, compliance layers, and interoperability solutions that transform fragmented healthcare data into clean and actionable insights for AI-driven oncology care.
