With the increasing number of applications and processes, the volume of big data has been growing exponentially. According to IDC, it will reach 163 zettabytes in 2025. Such growth will result in the high complexity of big data, which will reduce the efficiency of organizations that rely heavily on their information assets.

Traditional storage systems are not suitable for keeping and processing raw data. Consequently, companies need solutions that help manage data at a diverse yet integrated data tier. The data lake concept can resolve this issue.

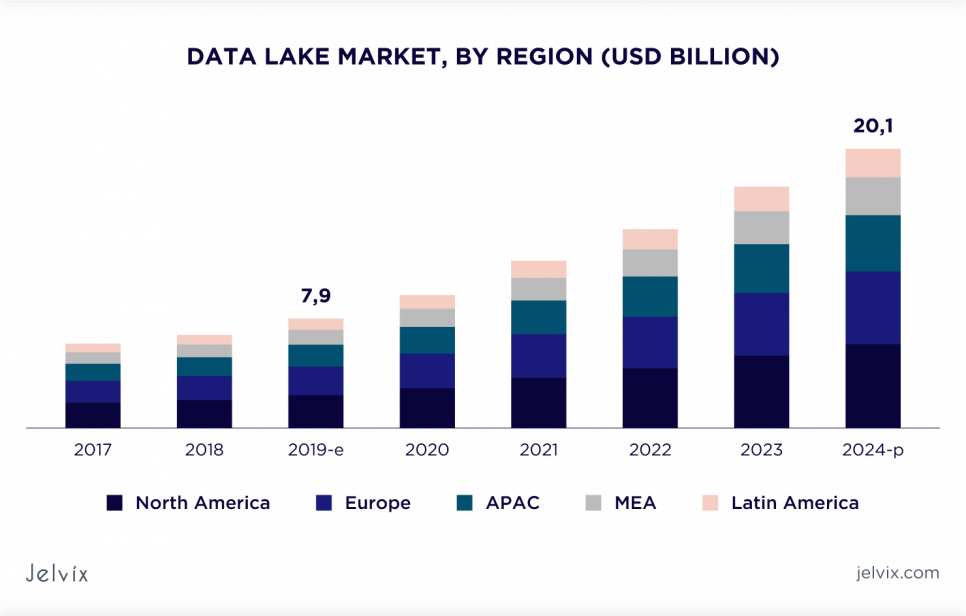

Data lakes are becoming a necessary part of any corporate data ecosystem. The global data lake market is projected to grow from $8.81 billion in 2021 to $20.1 billion by 2024. Research predicts nearly 10% revenue growth for companies using this technology.

Introduction to Contemporary Data Architecture Principles

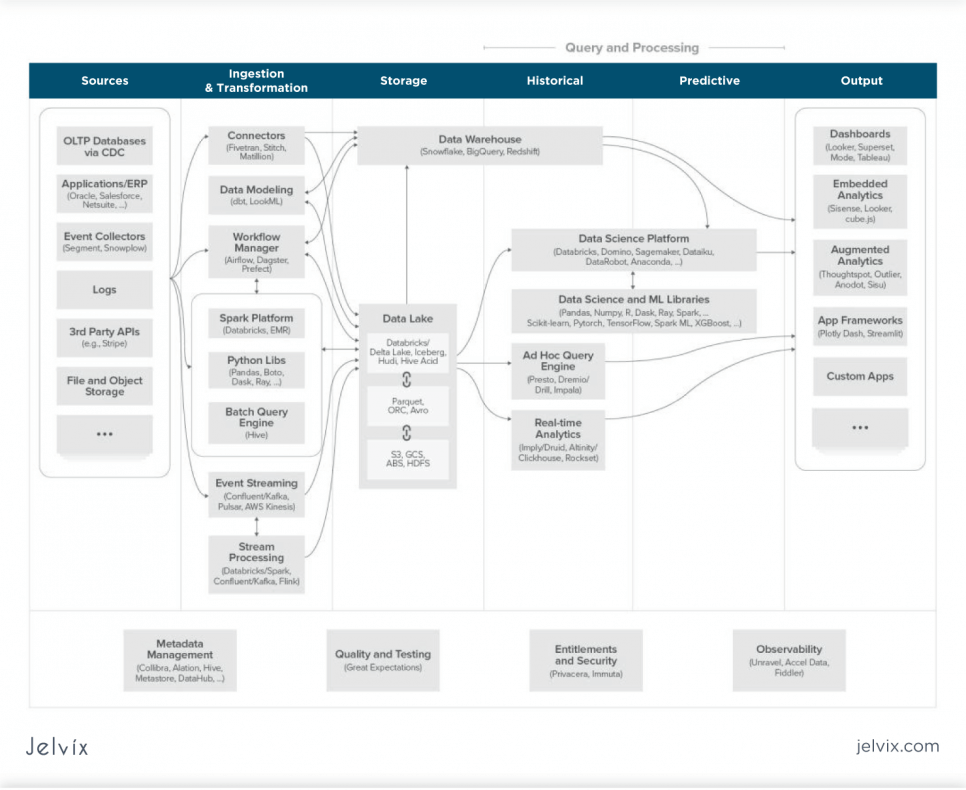

Data is of immense business value. Therefore, every company that accumulates data for decision-making has experienced the need to rethink its data architecture. Practices, methods, and data infrastructure tools are continually evolving, so getting a holistic view of how all elements fit together can be difficult.

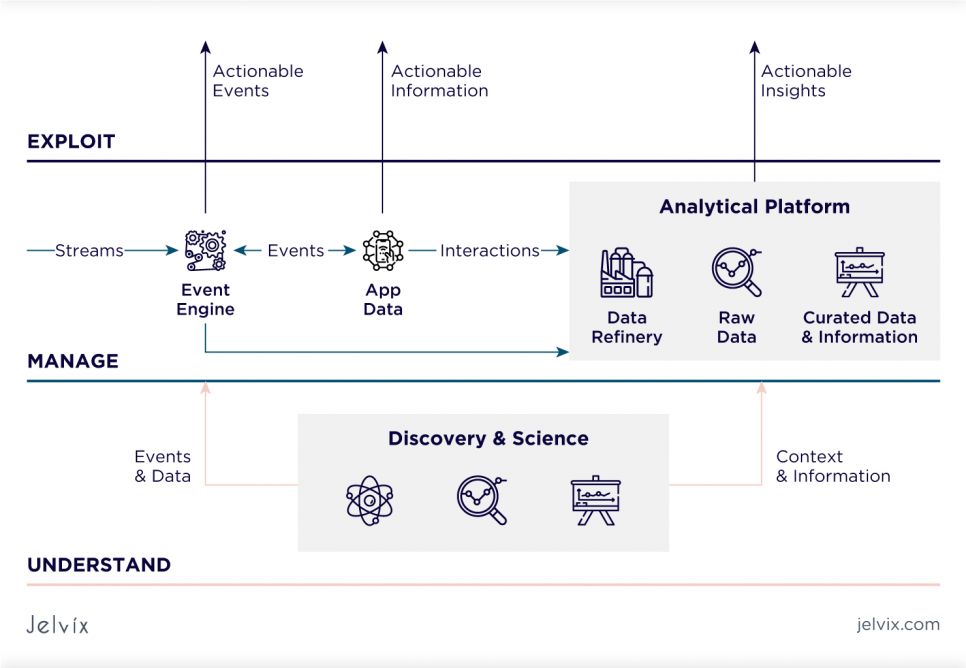

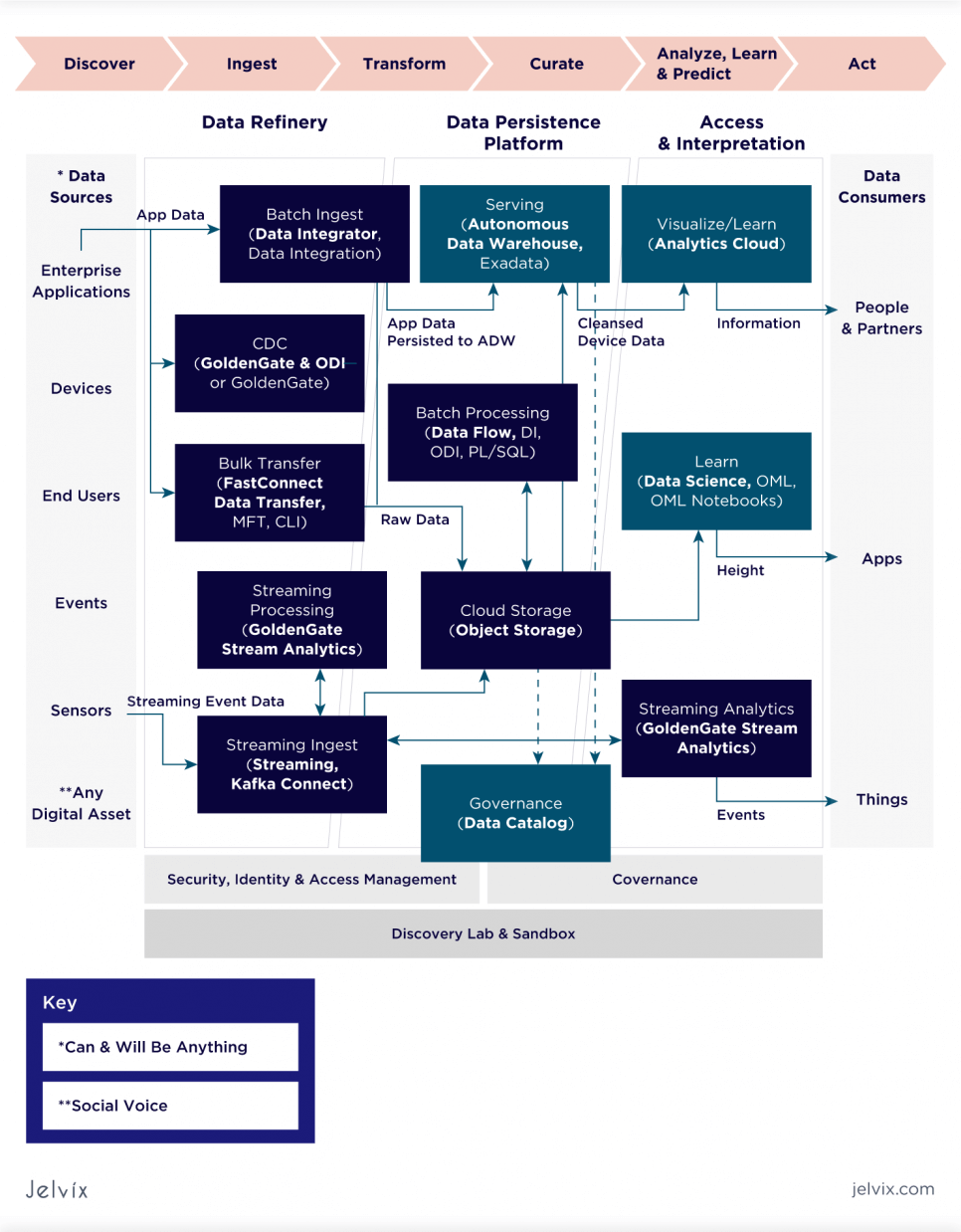

The unified data architecture looks like this:

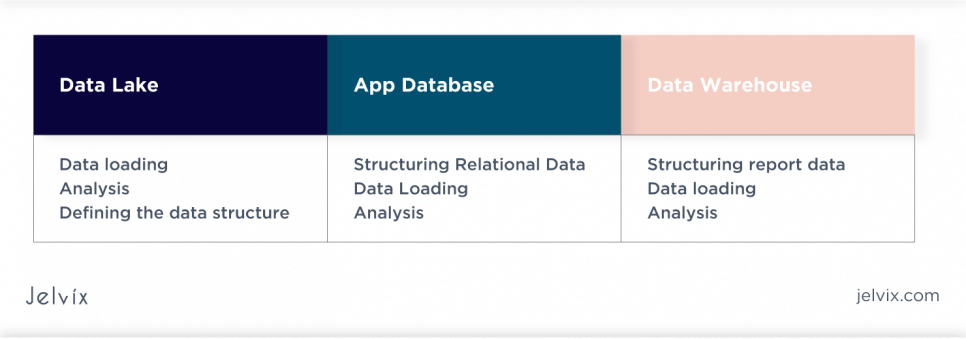

The data warehouse is the centrepiece of the analytics context. It stores data exclusively in a structured format and lets you extract information based on key business metrics using SQL or Python. Unfortunately, conventional data warehouses cannot scale to support modern workloads and high-end user concurrency requirements.

That is why separate data marts were needed at first. The concept of the data lake developed later.

The data lake is the backbone of today’s big data environment. This technology delivers the flexibility and performance needed for more complex data processing and specialized applications by providing scalable storage to handle growing data volumes and operate in various languages.

Data Warehouse vs Data Lake

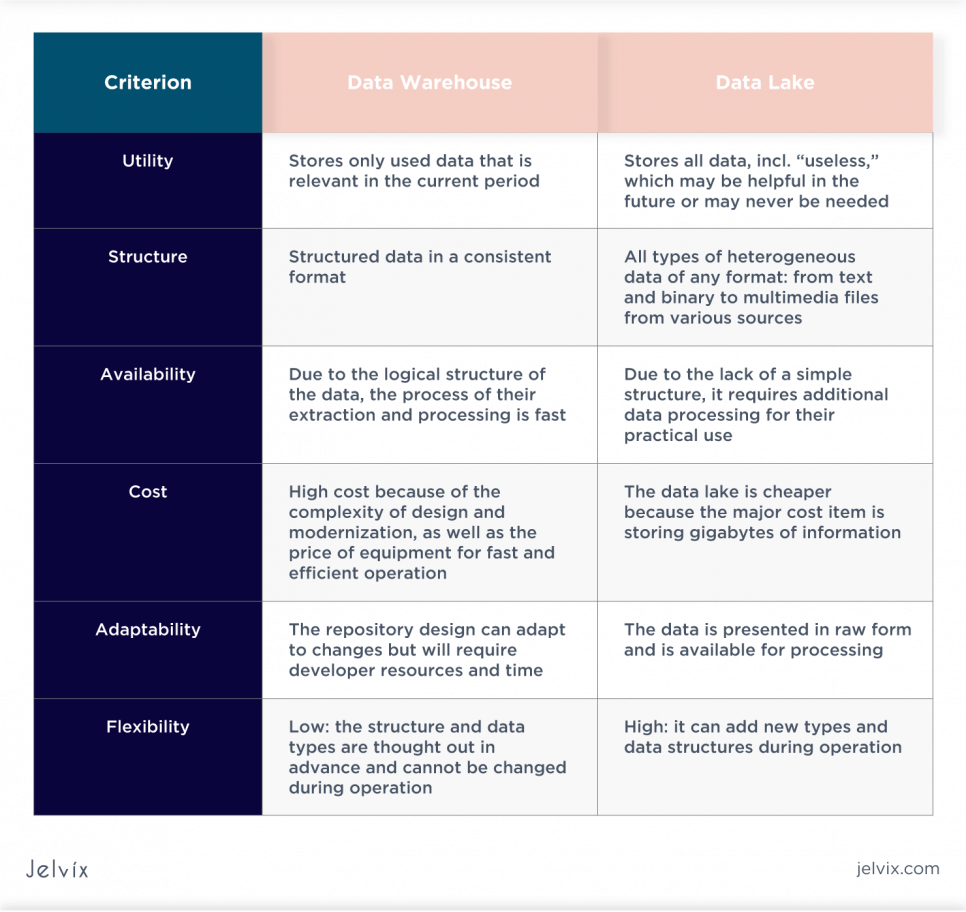

Although they are often confused, these two solutions serve different purposes. Both store big data, but that’s where the similarities end. What is the difference between a data warehouse and a data lake? Take a look at the table:

A vital difference is that the lake can scale to extreme amounts of data while still keeping costs low. Of course, the lake will not replace the organization’s standard data storage. But it delivers new analytical capabilities and insights while reducing processing and storage costs.

What is a Data Lake Concept?

The distinctive properties of big data are its heterogeneity and lack of structure. Usually, it is a wide range of data from CRM or ERP systems, product catalogs, banking programs, social networks, smart devices, and sensors: any systems that a business uses. Before such data can be loaded into conventional databases, it has to go through lengthy processing, during which parts of it may be lost.

A data lake, as an element of big data infrastructure, is centralized storage that accepts, organizes, and protects large volumes of structured data (rows and columns of relational databases), unstructured data (PDF files, documents, emails), and semi-structured data (XML, logs, JSON, CSV) in their initial format.

Data lakes provide practically unlimited storage space, with no restrictions on file size or on the way data is accessed (REST calls, SQL-like queries, or programmatic access). They support metadata extraction, augmentation, formatting, indexing, transformation, segregation, aggregation, and cross-referencing.

The term “data lake” was coined in 2010 by James Dixon. The then CTO of the Pentaho Corporation rightly believed that the lack of access to raw data slows down the development of new use cases and prevents the birth of new ideas.

The primary purpose of creating a data lake is to offer data analysts a raw view of the data. You then start working with it, either extracting it according to a specific template into classic databases or processing it right inside the “lake.”

Raw data assets remain intact while they are used for machine learning (ML), data exploration, reporting, visualization, analytics, and tweaking. This means that you can reuse raw data with little hassle.

At the conceptual level, a technological solution solves the following problem:

How Does Data Get into the Data Lake?

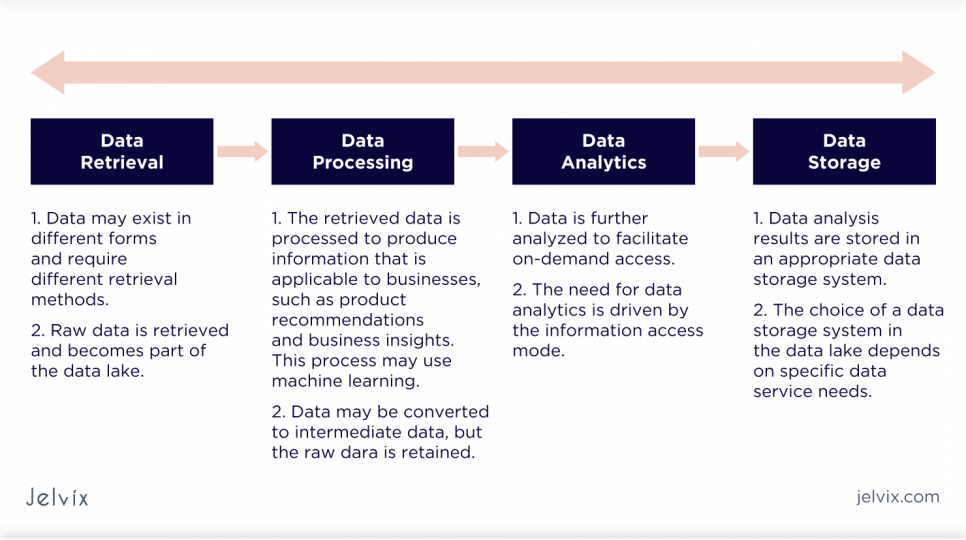

Business analysts or data scientists start by identifying significant or interesting data sources. They then replicate data from selected sources into a data lake with little or no structural, organizational, or formatting changes.

Data arrives in two ways: batching (loading at intervals) and streaming (a continuous data flow). Replicating raw data enables companies to simplify data ingestion while creating an integrated source of trusted information for ML and data analysis purposes.
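The two arrival modes can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names `ingest_batch` and `ingest_stream`, the JSON Lines file layout, and the `sensor_events` generator that stands in for a real message queue. A temporary local directory plays the role of the lake.

```python
import json
import tempfile
from pathlib import Path
from typing import Iterable, Iterator


def ingest_batch(records: list[dict], lake_dir: Path, batch_name: str) -> Path:
    """Batch ingestion: write a whole interval's records as one raw file."""
    target = lake_dir / f"{batch_name}.jsonl"
    with target.open("w", encoding="utf-8") as fh:
        for record in records:
            fh.write(json.dumps(record) + "\n")
    return target


def ingest_stream(events: Iterable[dict], lake_dir: Path, stream_name: str) -> Path:
    """Streaming ingestion: append each event as soon as it arrives."""
    target = lake_dir / f"{stream_name}.jsonl"
    for event in events:
        with target.open("a", encoding="utf-8") as fh:
            fh.write(json.dumps(event) + "\n")
    return target


def sensor_events() -> Iterator[dict]:
    """Hypothetical event source standing in for a queue or socket."""
    for i in range(3):
        yield {"sensor": "s1", "reading": 20 + i}


def count_lines(path: Path) -> int:
    """Count the records stored in a JSON Lines file."""
    return len(path.read_text(encoding="utf-8").splitlines())


with tempfile.TemporaryDirectory() as tmp:
    lake = Path(tmp)
    batch_file = ingest_batch([{"order": 1}, {"order": 2}], lake, "orders_nightly")
    stream_file = ingest_stream(sensor_events(), lake, "sensor_feed")
    batch_count = count_lines(batch_file)
    stream_count = count_lines(stream_file)

print(batch_count, stream_count)
```

In both modes the records are written unchanged, which matches the idea of replicating sources with little or no formatting changes.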

The multi-step process used to receive raw data and prepare it for later use is abbreviated as ELT: extract, load, transform. The extraction phase identifies the data sources and pulls the data into the ELT pipeline. The data is then loaded as-is into the storage resource or data lake. Finally, it is transformed into the format required for analysis.

Transformation usually includes:

- Converting data types or schemas

- Combining data from varied sources

- Data anonymization

- Aggregate calculations
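The four transformation types listed above can be sketched in plain Python. This is only an illustration under assumptions: the sample records, the field names, and the choice of a truncated SHA-256 digest for anonymization are invented for the example and are not prescribed by any particular ELT tool.

```python
import hashlib
from collections import defaultdict

# Raw records exactly as they were loaded: every value is still a string.
raw_orders = [
    {"order_id": "1", "customer_id": "c1", "amount": "19.90"},
    {"order_id": "2", "customer_id": "c2", "amount": "5.10"},
    {"order_id": "3", "customer_id": "c1", "amount": "10.00"},
]
raw_customers = [
    {"customer_id": "c1", "email": "ann@example.com", "country": "DE"},
    {"customer_id": "c2", "email": "bob@example.com", "country": "FR"},
]


def convert_types(order: dict) -> dict:
    """Convert data types: string fields become int and float."""
    return {
        "order_id": int(order["order_id"]),
        "customer_id": order["customer_id"],
        "amount": float(order["amount"]),
    }


def anonymize(email: str) -> str:
    """Replace an email with a truncated SHA-256 digest (a one-way pseudonym)."""
    return hashlib.sha256(email.encode("utf-8")).hexdigest()[:12]


def transform(orders: list[dict], customers: list[dict]):
    """Return anonymized, combined rows plus revenue aggregated by country."""
    customers_by_id = {c["customer_id"]: c for c in customers}
    rows = []
    totals: dict[str, float] = defaultdict(float)
    for order in map(convert_types, orders):
        customer = customers_by_id[order["customer_id"]]  # combine two sources
        rows.append({
            "order_id": order["order_id"],
            "amount": order["amount"],
            "country": customer["country"],
            "customer_hash": anonymize(customer["email"]),  # no raw email kept
        })
        totals[customer["country"]] += order["amount"]  # aggregate calculation
    return rows, {country: round(total, 2) for country, total in totals.items()}


rows, revenue_by_country = transform(raw_orders, raw_customers)
print(revenue_by_country)
```

Because the raw records are never modified, the same transformation can be rerun later with different rules.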

Who Needs Data Lakes and Why?

Any business that accumulates large amounts of data requires data lakes. Manufacturing, IT, marketing, logistics, retail – in all these areas, you collect big data and upload it to the data lake for further work or analysis. The average company with over 1000 employees extracts data from over 400 sources, and 20% of the largest corporations store data from over 1000 sources.

Companies require data lakes because a vast amount of data must be collected, stored, and indexed before being analyzed. Lakes are also used to retain important information to meet legal requirements and in case of audits. They also gather data that seems useless now but may be helpful to the company in the future. People in the following roles can work with data lakes:

- Business analysts looking to track business performance and create dashboards;

- Researchers analyzing network data;

- Data scientists using prediction algorithms for massive datasets.

An efficient data pipeline ensures that each of these categories of users has access to the data with their own tools, without the intervention of data providers (data engineering, DevOps) for each additional request.

Data Lake Architecture Compared to Traditional Databases and Stores

The actual architecture of a data lake varies because the concept applies to multiple technologies. For instance, a Hadoop data lake architecture differs from one built on Amazon S3.

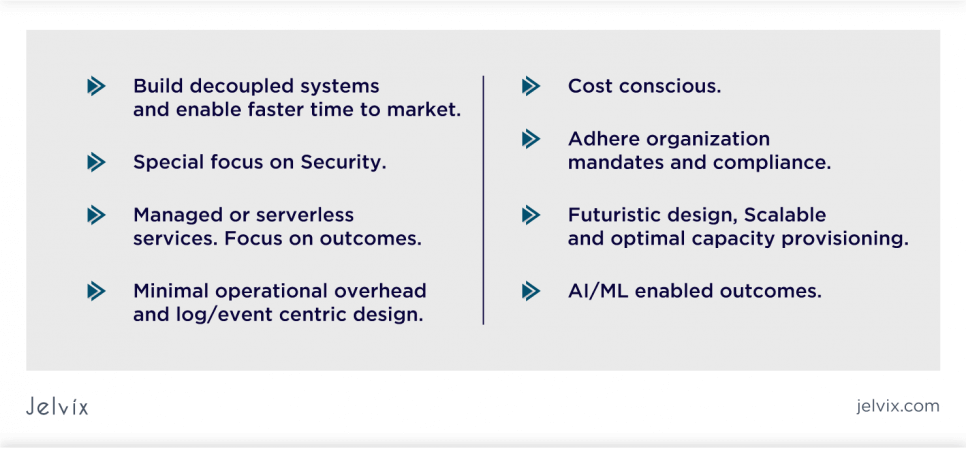

While the data is unstructured and not intended to answer any specific question, it still needs to be organized in a way that will be convenient in the future. Therefore, whichever technology is ultimately used to deploy a company’s data lake, several features need to be planned to ensure that the data lake is up and running and that an enormous repository of unstructured data will be useful later.

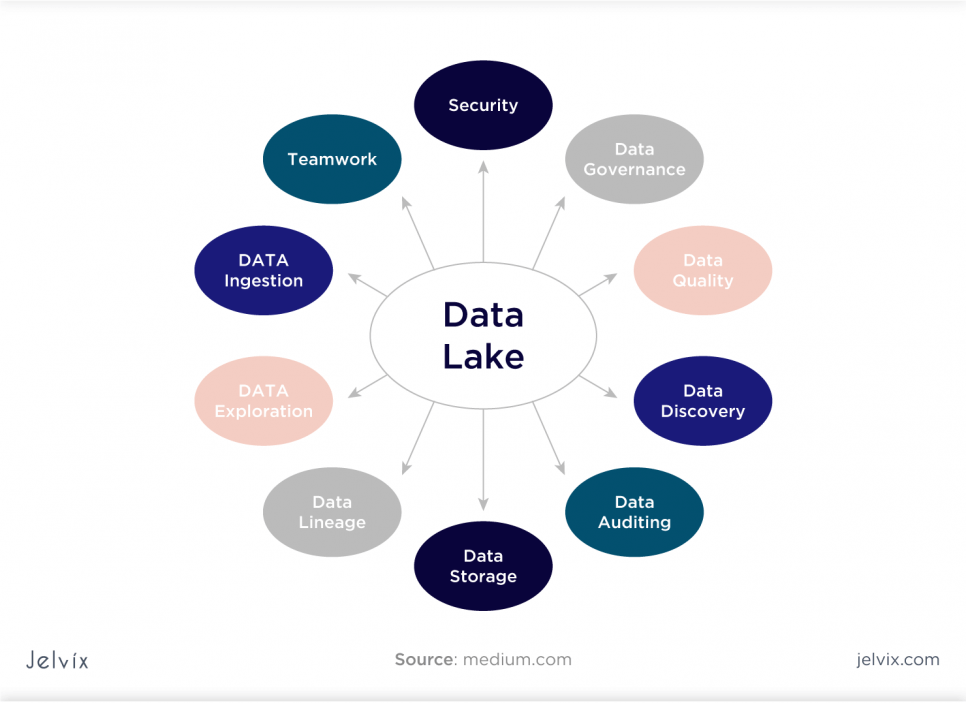

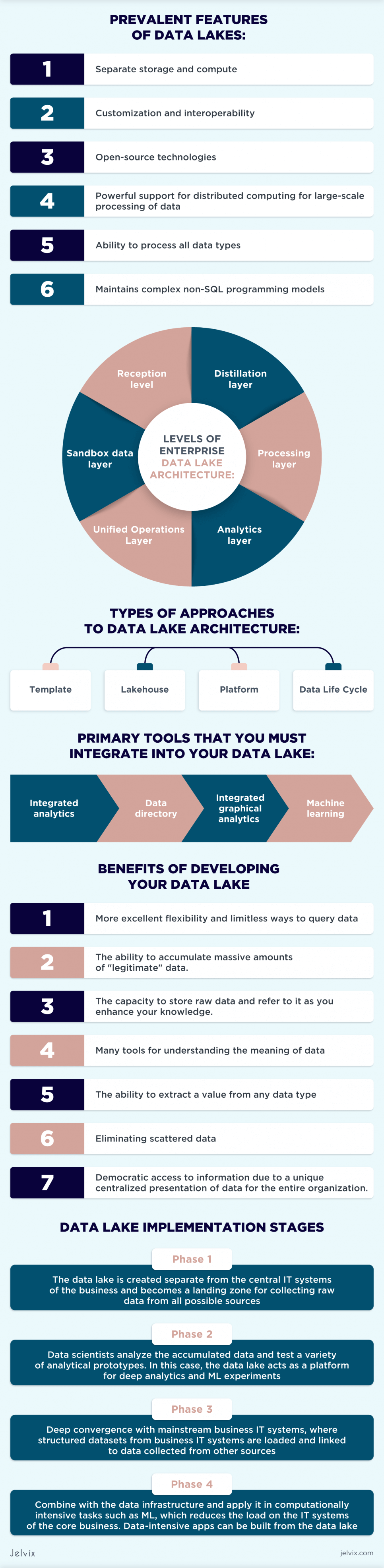

Prevalent Features of Data Lakes

Separate storage and compute. This feature makes it easy to parse and enrich data for streaming and querying.

Customization and interoperability. Data lakes support the scalability of the data platform by making it easy to swap different components of the stack as your needs evolve.

Open-source technologies. This helps reduce dependency on vendors and ensures excellent customization, which is well-suited for companies with large teams of data engineers.

Powerful support for distributed computing for large-scale processing of data. This gives excellent concurrency, better-sharded query performance, and a more fault-tolerant design.

Ability to process all data types. Data lakes support raw data, which means you have more flexibility when working with your data and more control over calculations and aggregates.

Support for complex non-SQL programming models. Many “lakes” support PySpark, Hadoop, Apache Spark, and other ML and advanced data science platforms.

Levels of Enterprise Data Lake Architecture

We can think of each data lake as a separate repository that has discrete layers. Data processing can be conditionally organized in such a conceptual model:

Reception Layer

Data lake solutions accept structured and unstructured data from a mix of sources. The raw data layer holds data in its native format, and the data is not transformed or redefined at this stage. This preserves the option to go back to the original data whenever it is needed. However, raw data needs to be organized into folders, for example: subject area / source / object / month / day of receipt / raw data. End users do not have access to this level because the data is not ready for use.
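A folder convention like the one above is easy to encode in a helper. The sketch below is an assumption about how such a helper might look: the function name `raw_layer_path`, the leading `raw` folder, and the choice to include the year in the month folder are not taken from any specific product.

```python
from datetime import date
from pathlib import PurePosixPath


def raw_layer_path(subject_area: str, source: str, obj: str,
                   received: date, filename: str) -> PurePosixPath:
    """Build subject area / source / object / month / day of receipt / file."""
    return PurePosixPath(
        "raw",
        subject_area,
        source,
        obj,
        received.strftime("%Y-%m"),  # month of receipt (year added: assumption)
        received.strftime("%d"),     # day of receipt
        filename,
    )


path = raw_layer_path("sales", "crm", "orders", date(2024, 3, 15), "orders.json")
print(path)  # raw/sales/crm/orders/2024-03/15/orders.json
```

Keeping the date in the path lets a later job pick up a single day of raw files without scanning the whole layer.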

Distillation Layer

The distillation layer converts and interprets the data stored at the reception level into structured data for further analysis and saves it as files or tables. The data is cleaned, denormalized, and derived, so that it becomes consistent in encoding, format, and data type.

The standardized data layer holds data in the format best suited for cleaning. The structure is the same as in the previous layer, but it can be partitioned at a finer grain if necessary.

Processing Layer

This level runs custom queries and analytics for structured data. This happens online, in batch, or in real-time. At this level, business logic is applied, and analytical apps use the data. This layer is also known as reliable, gold, or production-ready.

The cleaned data is converted into consumable datasets for storage in files or tables. The purpose of the data, like its structure, is already known at this stage. For example: Target / Type / Files. Typically, end users are given access only to this level.

Analytics Layer

Analytics is one of the most important levels of enterprise data lake architecture and includes several sub-processes. The insights layer is the data lake query interface or, as it is also called, the inference interface. This layer uses SQL or NoSQL to query data and output it to dashboards or reports.
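As a rough illustration of an insights-layer query, the sketch below uses Python's built-in `sqlite3` module as a stand-in for a real lake query engine. The `sales` table and its rows are invented for the example.

```python
import sqlite3

# An in-memory database stands in for curated tables exposed by the lake.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("EU", "laptop", 1200.0),
        ("EU", "phone", 800.0),
        ("US", "laptop", 1500.0),
    ],
)

# The kind of aggregate a dashboard or report would request.
report = conn.execute(
    "SELECT region, SUM(amount) AS revenue "
    "FROM sales GROUP BY region ORDER BY region"
).fetchall()
conn.close()

for region, revenue in report:
    print(f"{region}: {revenue:.2f}")
```

A production setup would point the same kind of SQL at a distributed engine, but the shape of the query stays the same.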

The application data layer includes surrogate keys, row-level security, and anything else specific to the app using the layer. If any of your apps utilize ML models computed in your data lake, you will take them from here. The data structure remains the same as in the cleaned data.
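Surrogate keys and row-level security can be shown with a small sketch. The helper names `add_surrogate_keys` and `visible_rows`, the `customer_sk` column, and the region-based access rule are all hypothetical; real platforms usually enforce row-level security inside the query engine rather than in application code.

```python
from itertools import count


def add_surrogate_keys(rows: list[dict], start: int = 1) -> list[dict]:
    """Attach a meaningless sequential integer key to every row."""
    next_key = count(start)
    return [{"customer_sk": next(next_key), **row} for row in rows]


def visible_rows(rows: list[dict], allowed_regions: set[str]) -> list[dict]:
    """Row-level security: return only the rows the caller may see."""
    return [row for row in rows if row["region"] in allowed_regions]


cleaned = [
    {"customer": "Acme", "region": "EU"},
    {"customer": "Globex", "region": "US"},
    {"customer": "Initech", "region": "EU"},
]

app_rows = add_surrogate_keys(cleaned)
eu_view = visible_rows(app_rows, {"EU"})
print([row["customer_sk"] for row in eu_view])  # [1, 3]
```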

Unified Operations Layer

At this level, the system is administered through workflow management, auditing, and skill management.

Sandbox Data Layer

Certain Data Lake implementations comprise a sandbox layer. This layer is the place for data scientists and analysts to explore data – a temporary data layer – which can store temporary files or folders.

Additional Components

While the bulk of the data lake is made up of layers, several additional components are also important:

Security – “lakes” contain huge amounts of valuable data and are designed for high availability. To ensure that your data is only available to users and applications the way you want it, you must implement security tools. Data Lake Security should include tools for managing authorization, authentication, network security perimeter, backup, replication and recovery, secure protocols, SSL encryption, and access restriction policies.

Governance includes tools that allow you to control the data lake’s performance, capacity, and usage. All transactions must be recorded so that they can be tracked.
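The requirement that every transaction be recorded can be sketched with a toy in-memory store. The class `AuditedLake` and its methods are hypothetical names used only for this illustration; a real lake would rely on the audit facilities of its storage platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditedLake:
    """Toy in-memory store that records every read and write."""
    objects: dict[str, bytes] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def _record(self, user: str, action: str, key: str) -> None:
        # Append one audit entry per transaction.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "key": key,
        })

    def put(self, user: str, key: str, data: bytes) -> None:
        self.objects[key] = data
        self._record(user, "write", key)

    def get(self, user: str, key: str) -> bytes:
        self._record(user, "read", key)
        return self.objects[key]

    def usage_by_user(self) -> dict[str, int]:
        """Usage: number of recorded transactions per user."""
        counts: dict[str, int] = {}
        for entry in self.audit_log:
            counts[entry["user"]] = counts.get(entry["user"], 0) + 1
        return counts

    def capacity_bytes(self) -> int:
        """Capacity: total size of all stored objects."""
        return sum(len(data) for data in self.objects.values())


lake = AuditedLake()
lake.put("etl_job", "raw/orders.json", b'{"id": 1}')
lake.get("analyst", "raw/orders.json")
lake.get("analyst", "raw/orders.json")
print(lake.usage_by_user(), lake.capacity_bytes())
```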

Orchestration tools enable you to manage ELT processes, control job scheduling, and make data ready for users and apps. This is indispensable if your distributed data lake consists of multiple resources or cloud services.
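At its core, job orchestration means running steps in dependency order. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9+). The job names and the `run` function are placeholders, and real orchestration tools add scheduling, retries, and monitoring on top of this idea.

```python
from graphlib import TopologicalSorter

# Each job maps to the set of jobs that must finish before it can start.
dependencies = {
    "extract_crm": set(),
    "extract_erp": set(),
    "load_raw": {"extract_crm", "extract_erp"},
    "transform": {"load_raw"},
    "publish_dashboard": {"transform"},
}

executed: list[str] = []


def run(job: str) -> None:
    """Placeholder for real work such as a copy job or a query."""
    executed.append(job)


# static_order() yields every job only after all of its prerequisites.
for job in TopologicalSorter(dependencies).static_order():
    run(job)

print(executed)
```

The two extract jobs do not depend on each other, so their relative order is not fixed; an orchestrator could run them in parallel.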

Types of Approaches to Data Lake Architecture

Most vendors take one of three approaches:

Template

This solution utilizes a pattern-based data lake architecture method. It automatically configures existing services to maintain data lake features such as tagging, transforming, sharing, accessing, and managing data in a centralized repository.

Lakehouse

Another method combines warehouse and data lake functionality into a new lakehouse architecture. The concept reduces the cost of raw data storage in the cloud while supporting specific analytics concepts such as SQL access to carefully curated data tables or large-scale data processing.

Platform

In this architecture, the core of the data lake is located on top of a cloud-based data repository and provides features that help businesses achieve the benefits of the data lake and realize the full power of their data.

All of these architectures give you the capabilities you need, but only the data lake platform has a thoroughly optimized architecture that decreases management complexity and technical overhead.

Data Life Cycle

In a well-administered “lake”, raw data is stored permanently, while processed data is constantly evolving and adapting to meet growing business needs.

What Are Data Lake Tools?

Placing data in the repository is only a part of the task. While data can be retrieved from there for further analysis, without integrated tools, the data lake would be functional but cumbersome and even awkward.

By integrating the right tools into the lake, the entire user interface opens up. The result is simplified data access with minimized errors during export and ingestion. Integrated tools don’t just make things faster and easier.

Speeding up automation opens doors to exciting new ideas, revealing new perspectives that can maximize the potential of your business. Here are four primary tools that you must integrate into your data lake:

- Integrated analytics;

- Data catalog;

- Integrated graphical analytics;

- Machine learning.

Locally or in the Cloud?

Data lakes are typically configured on a cluster of low-cost and scalable commodity hardware. This lets you dump data into the lake without worrying about storage capacity.

It is important to remember that a data lake has two components: storage and compute. These resources can be located both locally and in the cloud. Therefore, a wide variety of combinations are possible when creating a data lake architecture.

Generally, lakes are implemented locally, with HDFS for storage and YARN for processing in clusters. A Hadoop data lake is scalable and relatively inexpensive, offering powerful capabilities and the undeniable advantage of data locality.

In the process of creating a local infrastructure, there might be problems with:

- Space – bulky servers take up space, resulting in higher costs.

- Resources – purchasing equipment and setting up a data center will require additional resources and time.

- Scalability – enlarging storage capacity takes effort due to space requirements and cost alignment.

- Requirements Assessment – it is essential to correctly assess the hardware requirements at the beginning of the project as data arrives every day.

- Cost – it is higher than cloud alternatives.

Cloud platforms are a modern alternative for companies looking for centralized online storage. This helps save on local hardware and internal resources. A cloud data lake enables you to:

- Get started easier and faster;

- Pay as you go;

- Scale as your needs grow, without the stress of assessing requirements and getting approvals.

Top-Rate Data Lake Solutions

Out-of-the-box Data Lakes with a user-friendly interface instead of code-based management can make the technology easier to implement and use. There are already several solutions on the market that can meet the needs of any business.

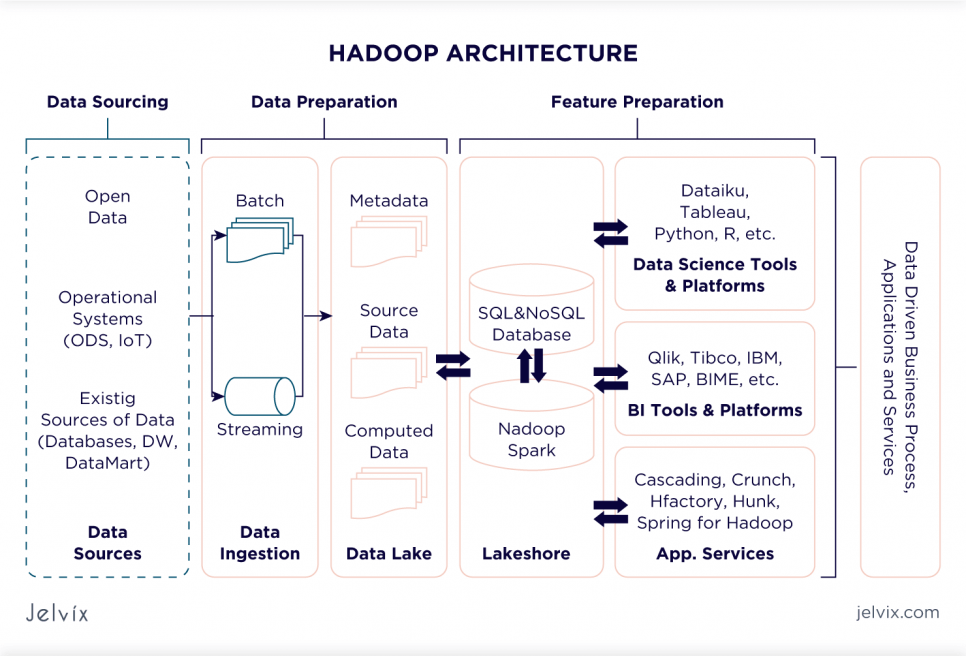

Hadoop Data Lake Architecture

This type is built on a platform of Hadoop clusters, and Hadoop is an essential piece of the architecture used to build data lakes. In addition, Hadoop is open-source, which reduces the cost of building large-scale data stores.

Basic features:

- The data and information saved in Hadoop clusters are not relational and include log files, JSON objects, images, and web messages. This architecture supports analytical applications, not transaction processing.

- The data is stored in HDFS, which allows it to be processed concurrently. When received, the data is split into blocks and distributed among the various nodes in the cluster. Hadoop can also accept data of any type, making it more suitable for routine operations than conventional data warehouses.
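The split-and-distribute behavior described above can be mimicked with a toy model. This is not how HDFS is implemented: real HDFS uses much larger blocks, replicates them, and applies its own placement policy. The function names, the 32-byte block size, and the round-robin placement are assumptions chosen to keep the sketch short.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut the incoming data into fixed-size blocks (the last may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def distribute(blocks: list[bytes], nodes: list[str]) -> dict[str, list[bytes]]:
    """Assign blocks to nodes round-robin (a simplification)."""
    placement: dict[str, list[bytes]] = {node: [] for node in nodes}
    for index, block in enumerate(blocks):
        placement[nodes[index % len(nodes)]].append(block)
    return placement


def count_newlines(node_blocks: list[bytes]) -> int:
    """Work done on one node: count line endings in its local blocks."""
    return sum(block.count(b"\n") for block in node_blocks)


data = b"log line\n" * 10                        # 90 bytes of sample input
blocks = split_into_blocks(data, block_size=32)  # blocks of 32, 32, 26 bytes
placement = distribute(blocks, ["node-a", "node-b"])

# Each node processes its own blocks concurrently; results are then combined.
with ThreadPoolExecutor() as pool:
    partial_counts = list(pool.map(count_newlines, placement.values()))

total_lines = sum(partial_counts)
print(len(blocks), total_lines)
```

Counting newline bytes works across block boundaries because each newline belongs to exactly one block, which is why the partial results can simply be added.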

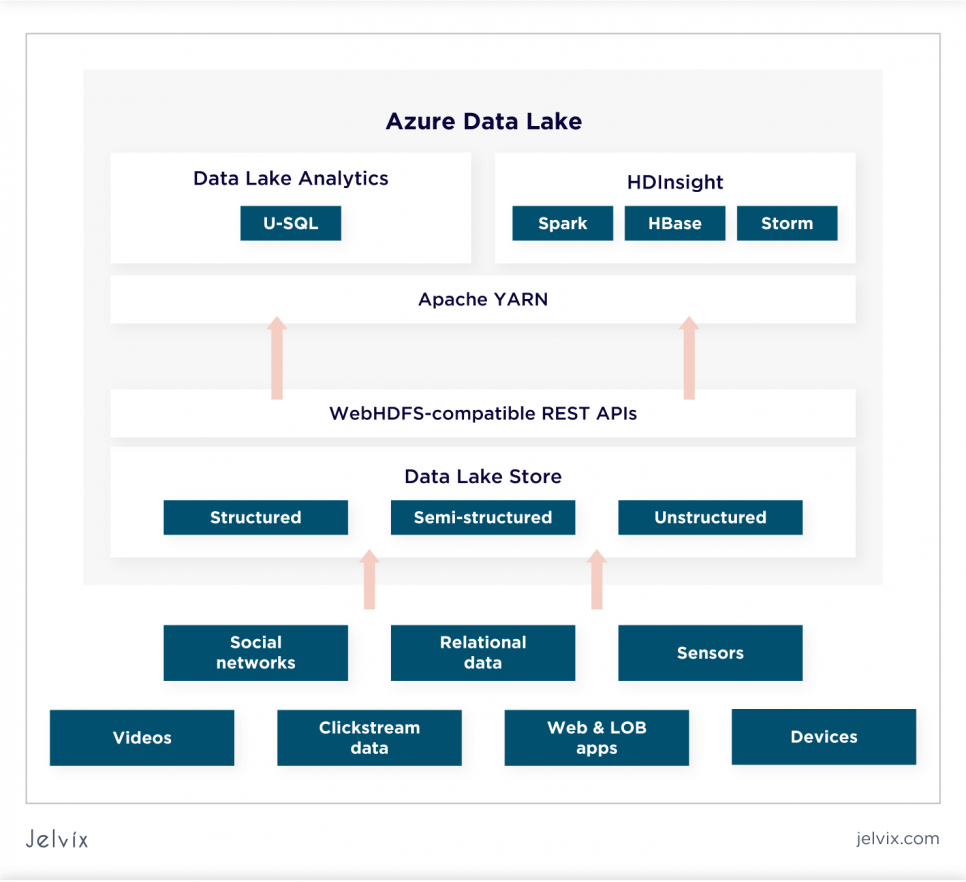

Azure Data Lake Architecture

This type of data lake is built on top of Apache Hadoop and the Apache YARN cluster resource manager. It is Microsoft’s cloud-based, HDFS-compatible implementation.

It is an entirely cloud-based solution that does not demand installing any hardware or server on the user side. It can be scaled as needed. A data lake in Azure stores and interprets massive amounts of data at varying speeds. It is not concerned about the source and purpose of the data. It just gives a common repository for doing deep analytics.

Basic features:

- The Azure Data Lake system is suitable for handling demanding workloads. It provides very low latency along with real-time analytics and sensor data processing.

- Storage costs can be optimized because they depend on your usage. The Azure platform as a whole holds a large number of compliance certifications.

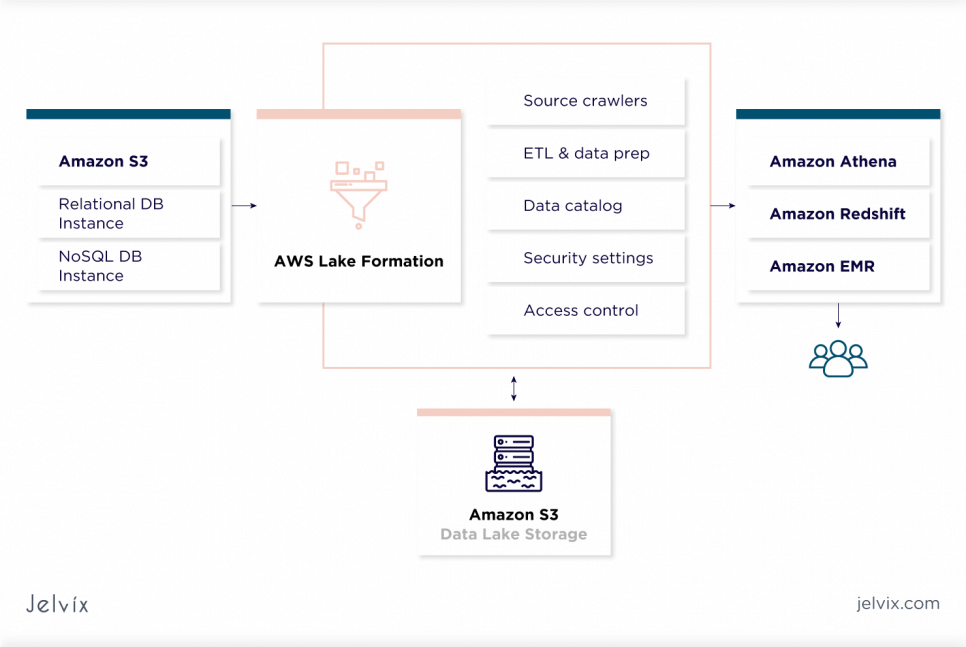

AWS Lake Formation

It is one of the simplest toolkits for creating a data lake. AWS Lake Formation is part of the broader AWS ecosystem and is easy to integrate with AWS-based analytics and ML services.

Basic features:

- Lake Formation generates a detailed, searchable catalog of data and supports custom labels for user convenience.

- The audit trail helps identify the history of data access across various services.

- Another critical feature is integration with other analytics services, such as Athena for SQL queries, Redshift for data warehousing, and EMR for big data processing.

The Data Lake Issues

Despite the benefits of having low-cost, unstructured data lakes at your organization’s disposal, there are critical issues to consider.

A serious problem with data lakes is that they are so unstructured and overloaded with heterogeneous data that it is difficult to extract valuable information from them. Such lakes need to be “cleaned” and structured so that the storage does not turn into a dump of dead information.

While they are supposed to be accessible to everyone in theory, data lakes can be challenging to work with in practice. Poor practical accessibility can hinder the proper maintenance of data and contribute to turning a lake into a data graveyard. Here, it is crucial to invest in data lake governance and reduce the risks of a failed deployment.

Benefits of Developing Your Data Lake

Your own data lake offers benefits such as:

- Excellent flexibility and limitless ways to query data;

- The ability to accumulate massive amounts of “legitimate” data;

- The capacity to store raw data and refer to it as you enhance your knowledge;

- Many tools for understanding the meaning of data;

- The ability to extract value from any data type;

- Eliminating scattered data;

- Democratic access to information due to a unique centralized presentation of data for the entire organization.

How Do You Build a Data Lake?

Most vendors that develop and manage data lakes offer storage capacity and tools for structuring the lakes and manipulating the data. If your company is just about to start its lake, entrust this task to professionals. Without proper management and a logical strategy for managing your data, it cannot be found, cleaned, or used.

- Decide what the data lake is for, which specialists and departments will use it, and how often they will turn to it for information. How will certain types of data be used, and what should be the result? All these issues need to be resolved before filling your information reservoir and releasing fish into it.

- Make a storage plan. The most important part of a clean data lake is metadata. This is essential service information that contains the date and time when files were created and changed, the names of the most recent users, and other information. In addition, metadata shows the structural identity of the data, its kind, and type. Based on this information, any data set can be easily fished out of the lake and applied to the company’s benefit. All of this requires a clear storage plan.

- Decide how many lakes you need. A single lake, where data from all departments and production processes will be dumped, may not satisfy your company’s needs. Sometimes, it is advisable to organize a separate lake for each department and direction. This can be convenient both for the employees and those who will manage the repositories and clean them up.

- Entrust the task to specialists. While a data lake’s versatility and potential benefits are high, setting up a clean data lake that delivers real business value is challenging. As in any data science project, it is first necessary to assess its prospects, considering implementation costs.
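The metadata mentioned in the storage-plan step above can be pictured as a small catalog. The sketch below is an assumption about what such records might contain: the `FileMetadata` fields mirror the items named in the text (creation and modification times, most recent user, kind and type of data), while the class name, the `find` helper, and the sample entries are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class FileMetadata:
    """Service information kept for every file in the lake."""
    path: str
    created: datetime
    modified: datetime
    last_user: str
    data_kind: str    # "structured", "semi-structured", or "unstructured"
    file_format: str  # for example "csv", "json", "pdf"


catalog = [
    FileMetadata("raw/sales/orders.json", datetime(2024, 3, 1), datetime(2024, 3, 2),
                 "etl_job", "semi-structured", "json"),
    FileMetadata("raw/legal/contract.pdf", datetime(2024, 1, 10), datetime(2024, 1, 10),
                 "legal_team", "unstructured", "pdf"),
    FileMetadata("raw/crm/events.json", datetime(2024, 3, 5), datetime(2024, 3, 6),
                 "etl_job", "semi-structured", "json"),
]


def find(entries: list[FileMetadata], **criteria: str) -> list[FileMetadata]:
    """Return the entries whose fields match every given criterion."""
    return [
        entry for entry in entries
        if all(getattr(entry, name) == value for name, value in criteria.items())
    ]


json_files = find(catalog, file_format="json", last_user="etl_job")
print([entry.path for entry in json_files])
```

With records like these, a dataset can be "fished out" by its kind, format, or owner instead of by guessing folder names.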

Data Lake Implementation Stages

The creation and integration of data lakes into existing business processes consists of several important phases:

Phase 1. A data lake is created separate from the central IT systems of the business and becomes a landing zone for collecting raw data from all possible sources.

Phase 2. Data scientists analyze the accumulated data and test a variety of analytical prototypes. In this case, the data lake acts as a platform for deep analytics and ML experiments.

Phase 3. Deep convergence with mainstream business IT systems, where structured datasets from business IT systems are loaded and linked to data collected from other sources.

Phase 4. Combining with the data infrastructure and applying it in computationally intensive tasks such as ML, which reduces the load on the IT systems of the core business. Data-intensive apps can be built from the data lake.

Conclusion

Now is the time to think about what data you have and how you can use it to achieve your goals while minimizing the risks associated with the increased cost of data.

Jelvix brings you not only its expertise but a wide kit of innovative technologies for developing, managing, and maintaining a data lake. Our enterprise-class solutions will help you replace disparate data stores with a flexible, scalable platform that accumulates, stores, manages, and protects your business’s raw data.

With our help, all stakeholders in your organization will make smart decisions based on completely analyzed data from essential sources.
