In 2023, more and more businesses are becoming data-driven. Companies rely on personalization to deliver better user experience, increase sales, and promote their brands. Big data helps to get to know the clients, their interests, problems, needs, and values better. The technology detects patterns and trends that people might miss easily.

To manage big data, developers use frameworks for processing large datasets. They are equipped to handle large amounts of information and structure it properly. The most popular tools on the market nowadays are Apache Hadoop and Spark. We have tested and analyzed both frameworks and determined their differences and similarities.

What’s Hadoop?

Hadoop is a big data framework that stores and processes big data in clusters, similar to Spark. The architecture is based on nodes, just like in Spark. The more data the system stores, the more nodes it needs. Instead of growing the size of a single node, Hadoop scales out by adding more nodes to the cluster.

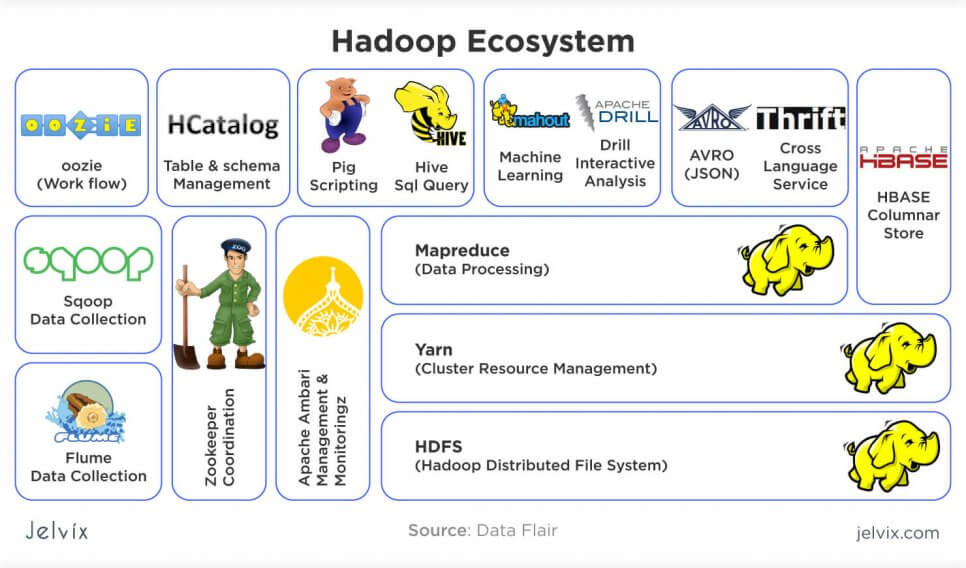

Hadoop is based on MapReduce, a programming model that processes data on multiple nodes simultaneously. The Hadoop Distributed File System (HDFS) stores the data, and the MapReduce programming model processes it.
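
To make the MapReduce model more concrete, here is a minimal single-machine sketch in plain Python. It is not Hadoop's API: the function names (`map_phase`, `shuffle`, `reduce_phase`) are our own, and a real job would run the map and reduce steps on many nodes in parallel.

```python
from collections import defaultdict

def map_phase(line):
    """Emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Group emitted values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Combine all values collected for one key into a single result."""
    return key, sum(values)

def word_count(lines):
    mapped = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())

counts = word_count(["big data", "big clusters", "data nodes"])
```

Each input line could be mapped on a different node, because `map_phase` looks at one line at a time; only the shuffle step needs to bring matching keys together.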

The key features

- Fault tolerance: Hadoop automatically replicates each data block across several nodes. Even if one node is down, the entire structure remains unaffected: the tool simply reads a replica from another node.

- NameNodes: Hadoop can run a standby NameNode that steps in if the active one becomes unavailable due to a technical error. This failover secures the high availability of Hadoop.

- Built-in modules: Hadoop offers YARN, a framework for cluster resource management; the Hadoop Distributed File System (HDFS) for distributed storage; and Hadoop Ozone for storing objects.
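
The fault-tolerance idea from the list above can be pictured with a small simulation. This is a simplified sketch: the round-robin placement and the names `place_replicas` and `read_block` are assumptions for illustration, while real HDFS placement also takes racks into account. The replication factor of 3 matches the HDFS default.

```python
def place_replicas(block_ids, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    placement = {}
    for offset, block in enumerate(block_ids):
        placement[block] = [nodes[(offset + i) % len(nodes)] for i in range(replication)]
    return placement

def read_block(block, placement, live_nodes):
    """Return the first live node holding a replica, or None if all are down."""
    for node in placement[block]:
        if node in live_nodes:
            return node
    return None

nodes = ["node-1", "node-2", "node-3", "node-4"]
placement = place_replicas(["blk-0", "blk-1"], nodes)
live = set(nodes) - {"node-1"}   # simulate one node failing
source = read_block("blk-0", placement, live)
```

With `node-1` down, the read of `blk-0` falls through to the next replica, so the client never notices the failure.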

What’s Spark?

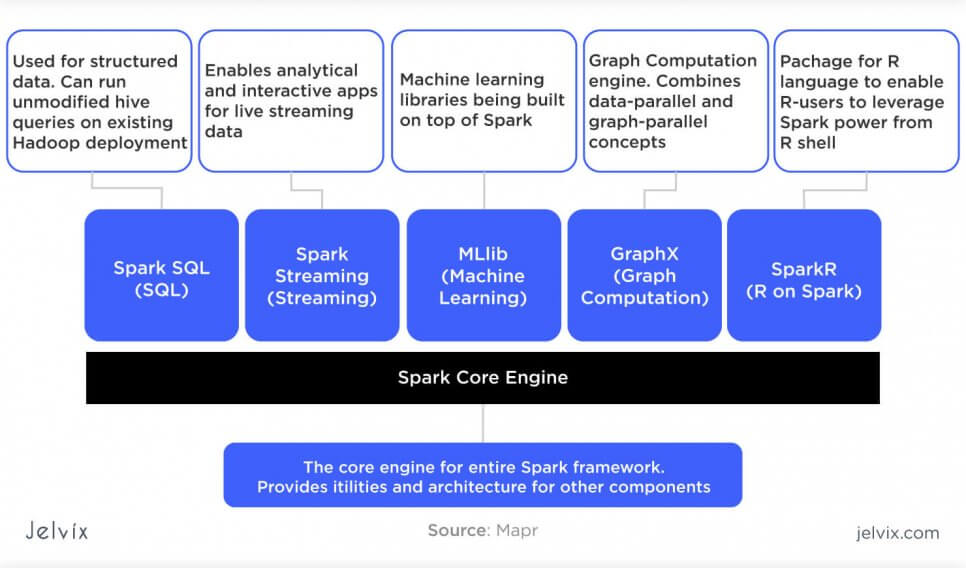

The framework was started in 2009 and moved to the Apache Software Foundation in 2013. It is written in Scala and distributes processing across clusters. The system consists of core functionality and extensions: Spark SQL for structured data, Spark Streaming for real-time data, MLlib for machine learning, and others.

Apache Spark has a reputation for being one of the fastest Hadoop alternatives. It offers more than 80 high-level operators. These additional levels of abstraction reduce the number of code lines: functionality that would take about 50 lines of Java can be written in four lines.

For a big data application, this conciseness is especially important. When you are handling a large amount of information, shorter code is quicker to write, review, and debug. Runtime speed, however, comes mainly from processing data in memory rather than from the size of the code file. So high-level operators give Apache Spark an advantage in developer productivity on top of its processing speed.

Key features

- Spark supports Java, Scala, Python, and R, and it can be queried with SQL;

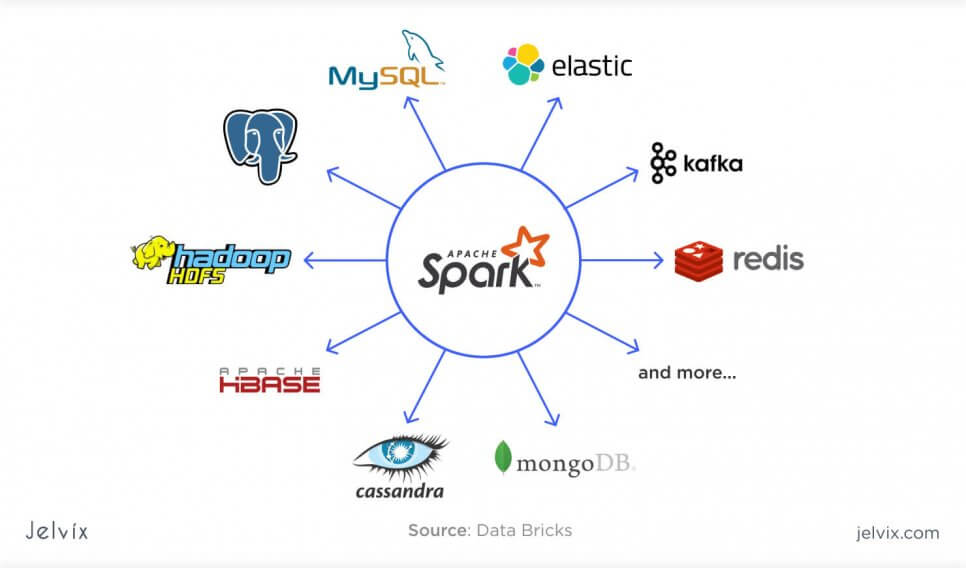

- The system can be integrated with many popular computing systems and databases (Amazon S3, Cassandra, Hive, etc.);

- Spark runs on other cluster managers, such as Hadoop YARN, or works as a standalone application.

Both Hadoop and Spark shift the responsibility for data processing from hardware to the application level. The library handles technical issues and failures in the software and distributes data across the nodes of a cluster. Even if hardware fails, the information is stored on different nodes, so the data remains available.

Underlining the difference between Spark and Hadoop

In the big data community, Hadoop and Spark are thought of either as competing tools or as complementary software. Let’s take a look at the scopes and benefits of Hadoop and Spark and compare them.

Architecture

Hadoop and Spark approach data processing in slightly different ways. In Hadoop, files are first stored in the Hadoop Distributed File System (HDFS), where the data is split into blocks. Each block is replicated in case the original copy fails or is mistakenly deleted. Dedicated nodes track the state of the cluster and all related operations.
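
As a rough worked example of block splitting, the sketch below computes how many blocks a file occupies and how much raw disk space its replicas take. The 128 MB block size and replication factor of 3 are the usual HDFS defaults; the 1,000 MB file and the function name `hdfs_footprint` are assumptions for illustration.

```python
MB = 1024 * 1024

def hdfs_footprint(file_bytes, block_bytes=128 * MB, replication=3):
    """Return (blocks, block replicas, raw bytes stored) for one file."""
    blocks = (file_bytes + block_bytes - 1) // block_bytes   # ceiling division
    raw_bytes = file_bytes * replication                     # last block is not padded
    return blocks, blocks * replication, raw_bytes

blocks, replicas, raw_bytes = hdfs_footprint(1000 * MB)
```

A 1,000 MB file therefore becomes 8 blocks and 24 block replicas, and takes about 3,000 MB of raw storage across the cluster.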

Hadoop’s architecture uses YARN to allocate computing resources and MapReduce to process the data. YARN tracks the resources and schedules jobs, while MapReduce splits each job into map and reduce tasks that run in parallel. The results are written back to HDFS as new data blocks.

In Spark architecture, computations are carried out in memory wherever possible. Data loading can also start from HDFS, but from there the data goes into a Resilient Distributed Dataset (RDD). The data is split into partitions that are processed in parallel, which contributes to better performance.
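
The parallel processing described above can be approximated on one machine. The sketch below is not Spark code: it uses Python threads as stand-ins for executors, and the helper names are our own. It only illustrates the pattern of splitting data into partitions, computing partial results in parallel, and combining them.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_partitions(data, count):
    """Deal the items into `count` partitions."""
    return [data[i::count] for i in range(count)]

def sum_of_squares(partition):
    """Work done independently on a single partition."""
    return sum(x * x for x in partition)

def parallel_sum_of_squares(data, partitions=4):
    parts = split_into_partitions(data, partitions)
    with ThreadPoolExecutor(max_workers=partitions) as pool:
        partial_results = list(pool.map(sum_of_squares, parts))
    return sum(partial_results)   # combine the per-partition results

total = parallel_sum_of_squares(list(range(10)))
```

Because each partition is processed without looking at the others, adding more workers (or, in Spark, more nodes) does not change the result, only how the work is spread.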

Execution is planned with a Directed Acyclic Graph (DAG), a graph of the relationships between datasets and the operations applied to them. Spark builds the DAG from the transformations in the code and runs nothing until a result is requested, which lets the scheduler optimize the whole job. Users can inspect the resulting stages to tune the process.
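
One way to picture a DAG-based plan is a dataset that only records its operations and executes them when a result is requested. The `LazyDataset` class below is a toy stand-in we made up for illustration, not Spark's RDD implementation; it handles only a linear chain of `map` and `filter` steps.

```python
class LazyDataset:
    """Toy stand-in for an RDD: records transformations, runs them on an action."""

    def __init__(self, source, plan=()):
        self._source = source
        self._plan = plan   # tuple of (operation name, function) steps

    def map(self, fn):
        return LazyDataset(self._source, self._plan + (("map", fn),))

    def filter(self, fn):
        return LazyDataset(self._source, self._plan + (("filter", fn),))

    def explain(self):
        """Describe the recorded plan without executing it."""
        return " -> ".join(["source"] + [name for name, _ in self._plan])

    def collect(self):
        """Action: run every recorded step and return the results."""
        data = iter(self._source)
        for name, fn in self._plan:
            data = map(fn, data) if name == "map" else filter(fn, data)
        return list(data)

calls = []

def double(x):
    calls.append(x)   # lets us observe when the work really happens
    return x * 2

pipeline = LazyDataset(range(5)).map(double).filter(lambda x: x > 4)
plan = pipeline.explain()
calls_before_action = len(calls)   # still 0: nothing has run yet
result = pipeline.collect()
```

Since the whole plan is known before anything runs, an engine is free to reorder or combine steps, which is the kind of optimization a DAG scheduler performs.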

Performance

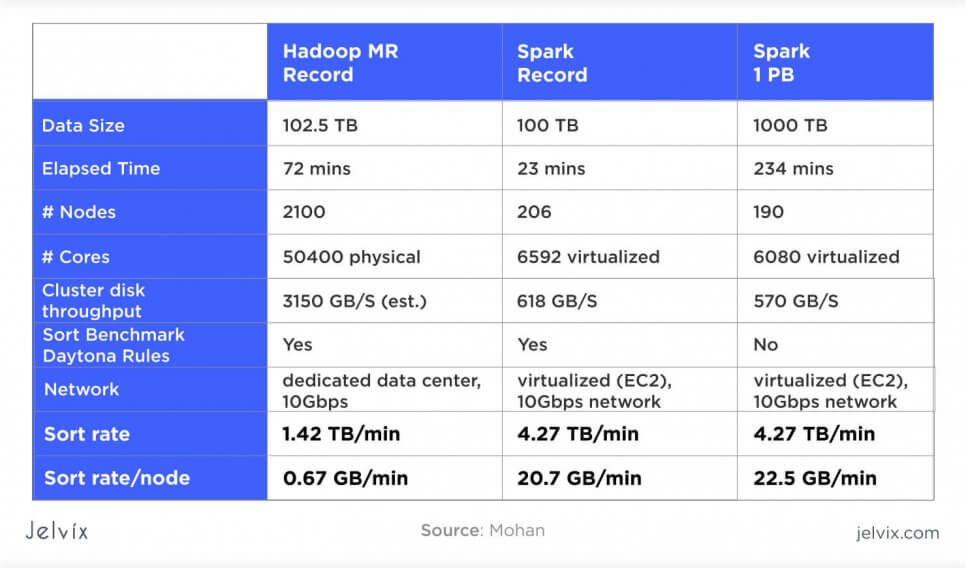

Spark rightfully holds a reputation for being one of the fastest data processing tools. Commonly cited figures put Spark at up to 100 times faster than Hadoop MapReduce when running in memory and up to ten times faster on disk.

Spark processes everything in memory, which allows handling the newly inputted data quickly and provides a stable data stream. This makes Spark perfect for analytics, IoT, machine learning, and community-based sites.

Hadoop is a slower framework, but it has its strong suits. During large batch jobs, an in-memory engine can run out of RAM, which slows the entire system down. If you need to process a large number of requests, Hadoop, even being slower, is a more reliable option.

So, Spark is better for smaller but faster apps, whereas Hadoop is chosen for projects where stability and reliability are the key requirements (like healthcare platforms or transportation software).

Languages

Hadoop is written in Java, and other languages such as Python can be used through Hadoop Streaming. Spark is written in Scala and also offers a Java API. Additionally, the team integrated support for Python, SQL, and R. So, in terms of the supported tech stack, Spark is a lot more versatile. However, if you are considering a Java-based project, Hadoop might be a better fit, because it’s the tool’s native language.

Cost

Both tools are open source, so they are technically free. Still, there are associated expenses to consider: we compared the cost-efficiency of Hadoop and Spark by looking at their RAM expenses. Hadoop requires less RAM since processing isn’t memory-based. On the other hand, Spark needs fewer machines: in a widely cited sorting benchmark, it processed 100 TB of information with 10x fewer machines and still did it three times faster.
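
To see what "10x fewer machines and three times faster" means for cost, it helps to compare machine-hours. The machine counts and run times below are hypothetical round numbers chosen only to match those two ratios; they are not measured results.

```python
def machine_hours(machines, hours):
    """Total compute consumed by a job."""
    return machines * hours

hadoop_run = machine_hours(machines=1000, hours=3.0)   # hypothetical baseline
spark_run = machine_hours(machines=100, hours=1.0)     # 10x fewer machines, 3x faster
ratio = hadoop_run / spark_run
```

Under these assumptions the Spark job uses 30 times fewer machine-hours, so a memory-heavy Spark machine could cost considerably more per hour and the job would still come out cheaper.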

Overall, Hadoop is cheaper in the long run. Spark, on the other hand, has a better quality/price ratio.

Security

Spark protects processed data with a shared secret – a piece of data that acts as a key to the system. Passwords and verification systems can be set up for all users who have access to data storage. The system automatically logs all accesses and performed events.

Hadoop supports the Lightweight Directory Access Protocol (LDAP), a directory protocol used to authenticate users, and Access Control Lists, which allow assigning different levels of access to various user roles.
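
The idea behind an Access Control List is easy to sketch. The structure below is a hypothetical illustration, not HDFS's actual ACL format or API: the paths, role names, and the `is_allowed` helper are all made up.

```python
# Hypothetical ACL: path -> role -> set of permitted actions
ACL = {
    "/data/reports": {"analyst": {"read"}, "admin": {"read", "write"}},
}

def is_allowed(acl, path, role, action):
    """Deny by default; allow only actions explicitly granted to the role."""
    return action in acl.get(path, {}).get(role, set())

analyst_can_write = is_allowed(ACL, "/data/reports", "analyst", "write")
admin_can_write = is_allowed(ACL, "/data/reports", "admin", "write")
```

The deny-by-default lookup means an unknown path or role never gets access by accident.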

Compatibility

Both tools are compatible with Java, and Spark can also be used with Scala, Python, and R. Additionally, they are compatible with each other. Spark integrates with Hadoop core components like YARN and HDFS.

Usability

Both Hadoop and Spark are among the more straightforward big data tools on the market. Spark is generally considered more user-friendly because it comes with multiple APIs that make development easier. Developers can install native extensions in the language of their project to manage code, organize data, work with SQL databases, etc.

Hadoop also supports add-ons, but the choice is more limited, and APIs are less intuitive.

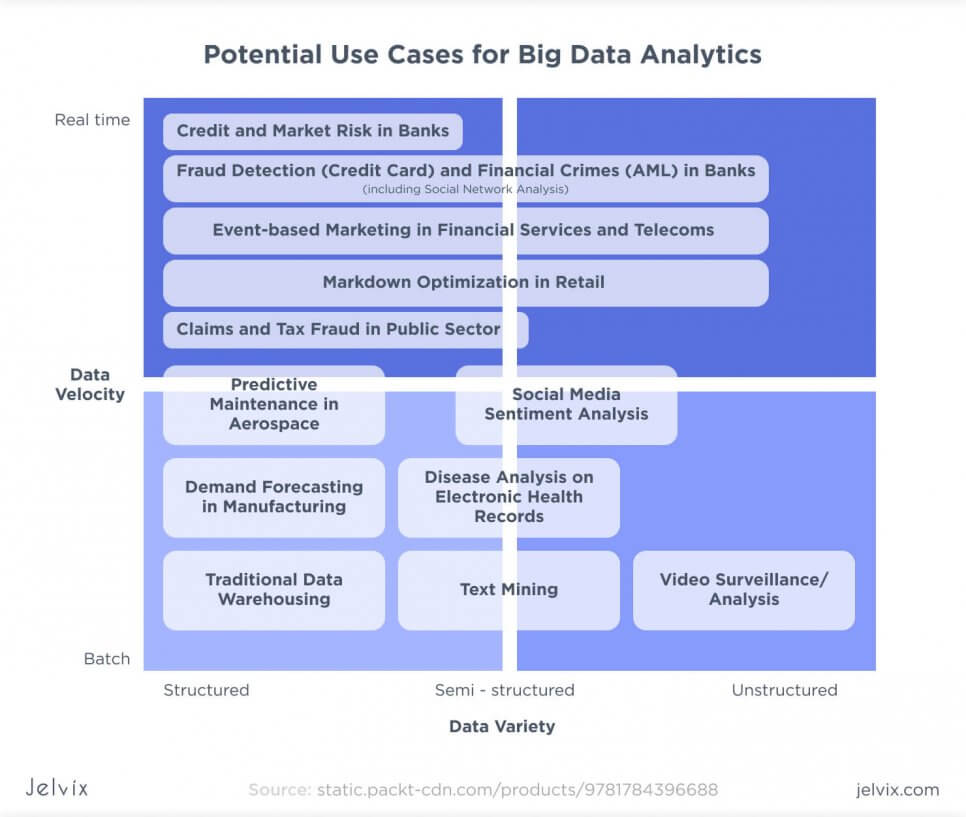

Use cases for Hadoop

Hadoop is resistant to technical errors. The tool automatically replicates each data block to disk on several nodes, so you will always have a reserve copy. Inevitably, such an approach slows the processing down but provides many possibilities. Due to its reliability, Hadoop is used for predictive tools, healthcare tech, fraud management, financial and stock market analysis, etc.

Risk forecasting

Hadoop is actively adopted by banks to predict threats, detect customer patterns, and protect institutions from money laundering. Enterprises use Hadoop big data tech stack to collect client data from their websites and apps, detect suspicious behavior, and learn more about user habits.

Banks can collect terabytes of client data, send it over to multiple devices, and share the insights with the entire banking network all over the country, or even worldwide. In case there’s a computing error or a power outage, Hadoop saves a copy of a report on a hard drive.

Industrial planning and predictive maintenance

Maintenance and automation of industrial systems incorporate servers, PCs, sensors, Logic Controllers, and others. Such infrastructures should process a lot of information, derive insights about risks, and help make data-based decisions about industrial optimization.

Hadoop usually integrates with automation and maintenance systems at the level of ERP and MES. The software processes modeling datasets and information obtained from data mining, and it manages statistical models.

Fraud identification

To identify fraudulent behavior, you need to have a powerful data mining, storage, and processing tool. The bigger your datasets are, the better the precision of automated decisions will be. Hadoop helps companies create large-view fraud-detection models.

Its appeal lies in the volume of requests it handles (Hadoop quickly processes terabytes of data), the variety of supported data formats, and its flexibility. In Hadoop, you can choose APIs for many types of analysis, set up the storage location, and work with flexible backup settings.

Speed of processing is important in fraud detection, but it isn’t as essential as reliability is. You need to be sure that all previously detected fraud patterns will be safely stored in the database – and Hadoop offers a lot of fallback mechanisms to make sure it happens.

Stock Market Analysis

This is one of the most common applications of Hadoop. The software, with its reliability and multi-device support, appeals to financial institutions and investors. The tool is used to store large data sets on stock market changes, make backup copies, structure the data, and ensure fast processing.

Companies using Hadoop

Hadoop is used by enterprises as well as financial and healthcare institutions. It’s a go-to choice for organizations that prioritize safety and reliability in the project. Hadoop is sufficiently fast – not as much as Spark, but enough to accommodate the data processing needs of an average organization. Let’s take a look at how enterprises apply Hadoop in their projects.

Amazon Web Services

Amazon Web Services uses Hadoop to power its Elastic MapReduce service. Its platform for data analysis and processing is based on the Hadoop ecosystem. The software allows using AWS Cloud infrastructure to store and process big data, set up models, and deploy infrastructures.

Cloudera

The enterprise builds software for big data development and processing. Cloudera uses Hadoop to power its analytics tools and distribute data in the cloud. The company enables access to the biggest datasets in the world, helping businesses learn more about a particular industry or market, train machine learning tools, etc.

IBM

IBM uses Hadoop to allow people to handle enterprise data and management operations. The InfoSphere Insights platform is designed to help managers make educated decisions and oversee development, discovery, testing, and security.

With automated IBM Research analytics, the InfoSphere also converts information from datasets into actionable insights. Hadoop is used to organize and process the big data for this entire infrastructure.

Microsoft

The company integrated Hadoop into its Azure PowerShell and command-line interface. Using Azure, developers all over the world can quickly build Hadoop clusters, set up the network, edit the settings, and delete the clusters at any time.

Azure calculates costs and potential workload for each cluster, making big data development more sustainable. It’s a good example of how companies can integrate big data tools to allow their clients to handle big data more efficiently.

CERN

The Switzerland-based Large Hadron Collider is one of the most powerful infrastructures in the world. Currently, the system handles more than 150 million sensors, creating about a petabyte of data per second. Scaling to process and store such an amount of information is a challenge.

This is why CERN decided to adopt Hadoop to distribute this information into different clusters. It improves performance speed and makes management easier. The usage of Hadoop allows cutting down the usage of hardware and accessing crucial data for CERN projects anytime.

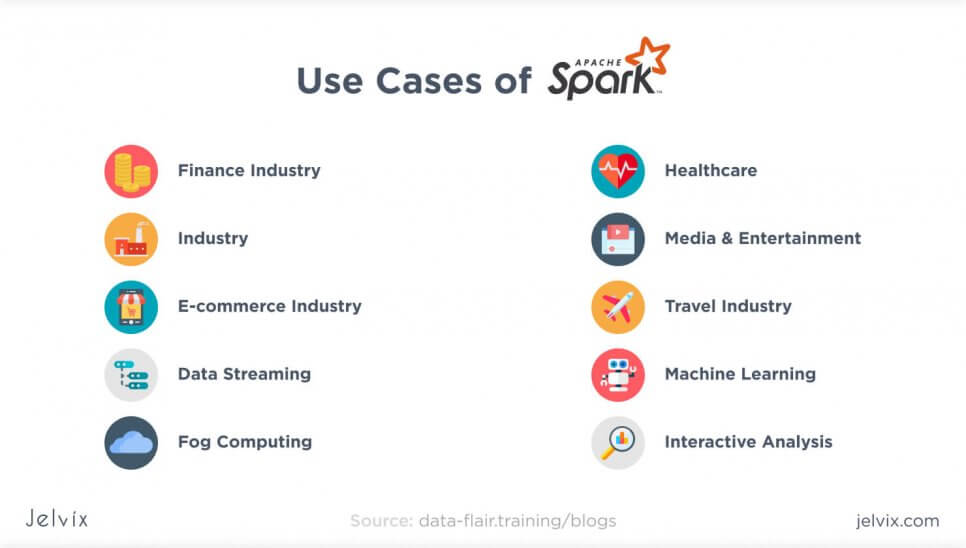

Use cases for Spark

Spark is mainly used for real-time data processing and time-consuming big data operations. Since it’s known for its high speed, the tool is in demand for projects that work with many data requests simultaneously. Let’s take a look at the most common applications of the tool to see where Spark stands out the most.

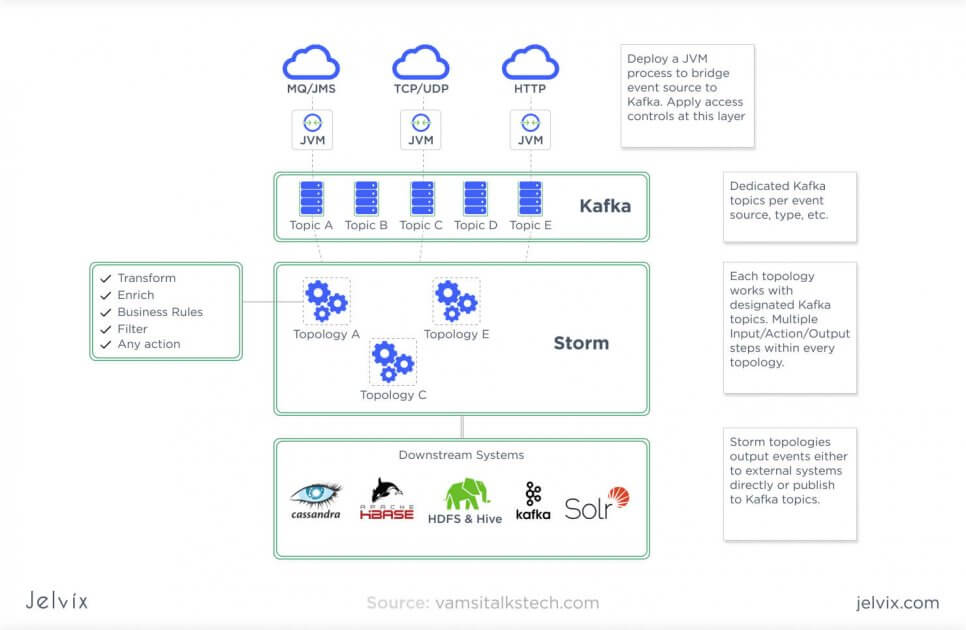

Real-time Big Data processing

Spark’s main advantage is the superior processing speed. It’s essential for companies that are handling huge amounts of big data in real-time. Spark Streaming allows setting up the workflow for stream-computing apps.

Spark Streaming processes incoming data in small batches (micro-batches): you can process multiple requests simultaneously, automatically clean the unstructured data, and aggregate it by categories and common patterns. Data enrichment features allow combining real-time data with static files.

This allows for rich real-time data analysis – for instance, marketing specialists use it to store customers’ personal info (static data) and live actions on a website or social media (dynamic data).
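
The combination of batching and enrichment described above can be sketched in plain Python. This is not the Spark Streaming API: the helper names and sample records are invented, and batches here are cut by event count for simplicity, whereas Spark Streaming cuts them by time interval.

```python
from itertools import islice

def micro_batches(events, batch_size):
    """Yield successive lists of at most `batch_size` events from a stream."""
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

def enrich(batch, profiles):
    """Attach static profile data to each live event."""
    return [{**event, "segment": profiles.get(event["user"], "unknown")} for event in batch]

profiles = {"u1": "premium", "u2": "trial"}   # static data
clicks = [                                     # dynamic data
    {"user": "u1", "page": "/home"},
    {"user": "u3", "page": "/pricing"},
    {"user": "u2", "page": "/cart"},
]
enriched = [enrich(batch, profiles) for batch in micro_batches(clicks, batch_size=2)]
```

Each batch is joined against the same static lookup table, which is how a marketing team would see both who the customer is and what they are doing right now.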

Machine learning software

Spark supports analytical frameworks and a machine learning library (MLlib). It performs data classification, clustering, dimensionality reduction, and other tasks. The software is equipped to do much more than only structure datasets: it also derives intelligent insights. This makes Spark a top choice for customer segmentation, marketing research, recommendation engines, etc.
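
To show what clustering for customer segmentation looks like in principle, here is a tiny one-dimensional k-means sketch in plain Python. It does not use MLlib or mirror its API; the spending figures and starting centroids are made-up sample values.

```python
def kmeans_1d(values, centroids, iterations=10):
    """Lloyd's algorithm on 1-D data with caller-supplied starting centroids."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for value in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))
            clusters[nearest].append(value)
        # Move each centroid to the mean of its cluster; keep it if the cluster is empty.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

monthly_spend = [10, 12, 11, 95, 100, 105]
centroids, clusters = kmeans_1d(monthly_spend, centroids=[0, 50])
```

The algorithm separates low spenders from high spenders without being told where the boundary is; a distributed library applies the same idea to datasets that do not fit on one machine.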

Another application of Spark’s superior machine learning capacities is network security. Spark Streaming allows setting up a continuous real-time stream of security checks. The tool always collects threats and checks for suspicious patterns.

Interactive requests

Spark is capable of processing exploratory queries, letting users work with poorly defined requests. Even if developers don’t know what information or feature they are looking for, Spark will help them narrow down options based on vague explanations.

Hadoop relies on batch-oriented processing, with SQL engines such as Hive on top, which is why it’s better at handling structured data. However, compared to alternatives to Hadoop, it falls significantly behind in its ability to process exploratory queries.

Newer versions of Spark also support Structured Streaming. This feature is a synthesis of Spark’s two main selling points: the ability to work with real-time data and the ability to perform exploratory queries. This way, developers can access real-time data the same way they work with static files.

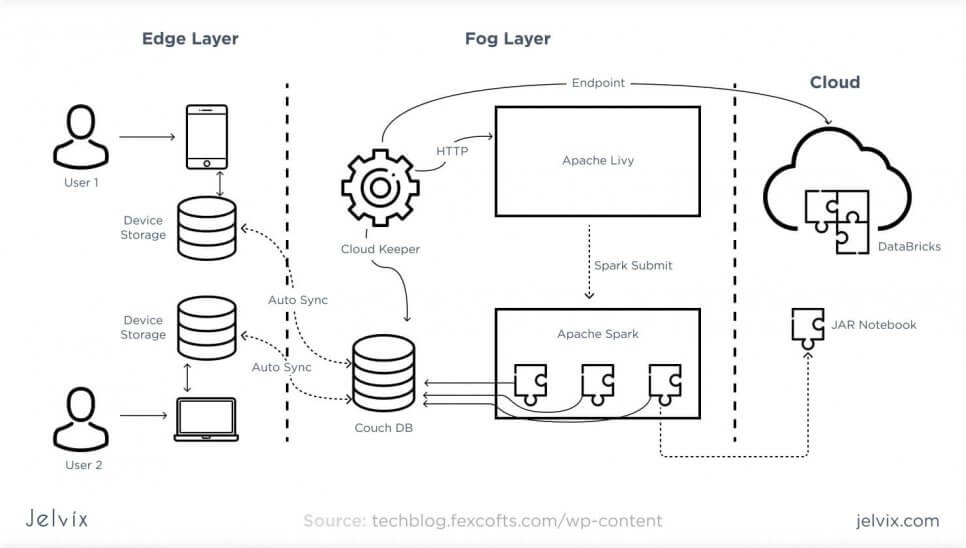

Fog and edge computing

The Internet of Things is the key application of big data. Connected devices need a real-time data stream to always stay connected and update users about state changes quickly. However, Cloud storage might no longer be an optimal option for IoT data storage. Cutting off local devices entirely creates precedents for compromising security and deprives organizations of freedom.

This is where fog and edge computing come in. It’s a combined form of data processing where the information is processed both in the cloud and on local devices. Developers and network administrators can decide which types of data to store and compute in the cloud, and which to keep on a local network.
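
The decision of where to process each piece of data can be expressed as a simple routing rule. The policy below is purely hypothetical: the field names, the 100 ms threshold, and the `choose_tier` function are assumptions for illustration, and a real deployment would define its own criteria.

```python
def choose_tier(reading, max_latency_ms, private_keys=("patient_id", "location")):
    """Return 'edge' or 'cloud' for one sensor reading (hypothetical policy)."""
    if any(key in reading for key in private_keys):
        return "edge"    # sensitive fields stay on the local network
    if max_latency_ms < 100:
        return "edge"    # tight deadlines cannot afford a round trip to the cloud
    return "cloud"       # everything else can be aggregated centrally

tier_for_telemetry = choose_tier({"temperature": 21.5}, max_latency_ms=500)
tier_for_alarm = choose_tier({"temperature": 90.0}, max_latency_ms=20)
tier_for_private = choose_tier({"location": "ward-3"}, max_latency_ms=500)
```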

Apache Spark has the potential to solve the main challenges of fog computing. Fog computing is based on complex analysis and parallel data processing, which, in turn, calls for powerful big data processing and organization tools. Developers can use Spark Streaming to process simultaneous requests, GraphX to work with graph data, and Spark SQL to process interactive queries.

Companies using Spark

Spark is used for machine learning, complex scientific computation, marketing campaigns, and recommendation engines: anything that requires fast processing of structured data. Let’s see how the use cases that we have reviewed are applied by companies.

UC Berkeley AMPLab

The University of California, Berkeley, uses Spark to power its big data research lab and build open-source software. The institution even encourages students to work on big data with Spark. The technical stack offered by the tool allows them to quickly handle demanding scientific computation, build machine learning tools, and implement technical innovations.

Baidu

Baidu uses Spark to improve its real-time big data processing and increase the personalization of the platform. The company creates clusters to set up a complex big data infrastructure for its Baidu Browser. Spark allows the company to analyze user interactions with the browser, run interactive queries over unstructured data, and support its search engine.

TripAdvisor

TripAdvisor has been struggling for a while with the problem of undefined search queries. When users are looking for hotels, restaurants, or some places to have fun in, they don’t necessarily have a clear idea of what exactly they are looking for. The platform needs to provide a lot of content – in other words, the user should be able to find a restaurant from vague queries like “Italian food”.

To collect such a detailed profile of a tourist attraction, the platform needs to analyze a lot of reviews in real-time. They have an algorithm that technically makes it possible, but the problem was to find a big-data processing tool that would quickly handle millions of tags and reviews.

Spark, with its parallel data processing engine, allows processing real-time inputs quickly and organizing the data among different clusters. TripAdvisor team members remark that they were impressed with Spark’s efficiency and flexibility. All data is structured with readable Java code, so there is no need to struggle with SQL or Map/Reduce files.

Alibaba Taobao

Taobao is one of the biggest e-commerce platforms in the world. The website works in multiple fields, providing clothes, accessories, technology, both new and pre-owned. The system should offer a lot of personalization and provide powerful real-time tracking features to make the navigation of such a big website efficient. Users see only relevant offers that respond to their interests and buying behaviors.

Alibaba uses Spark to provide this high-level personalization. The company built YARN clusters to store real-time and static client data. Such an approach allows creating comprehensive client profiles for further personalization and interface optimization. Spark is, in fact, one of the most popular tools in e-commerce big data.

Combining Hadoop and Spark

You don’t have to choose between the two tools if you want to benefit from the advantages of both. Apache Spark is known for enhancing the Hadoop ecosystem. As it is, it wasn’t intended to replace Hadoop – it just has a different purpose.

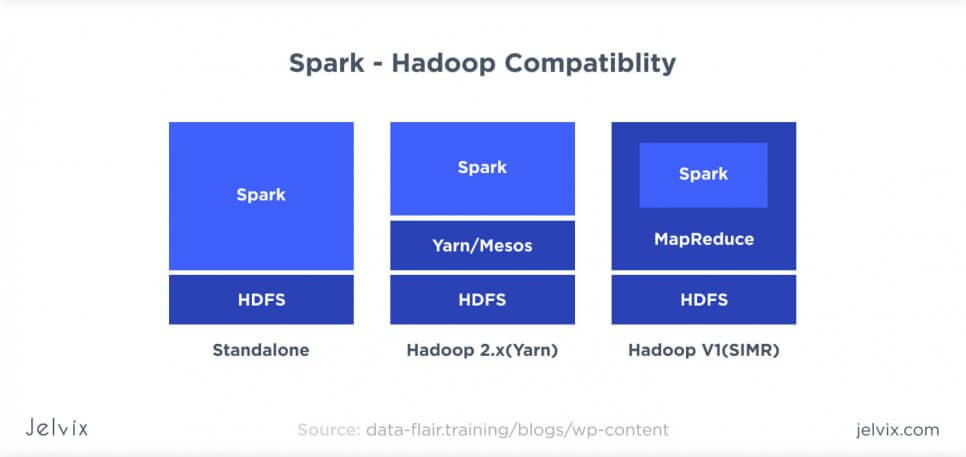

For every Hadoop version, there’s a possibility to integrate Spark into the tech stack. Here’s a brief Hadoop Spark tutorial on integrating the two.

- Standalone deployment: you can allocate all or a subset of the machines in a Hadoop cluster to Spark and run both tools side by side. You’ll have access to clusters of both tools, and while Spark quickly analyzes real-time information, Hadoop can process security-sensitive data.

- YARN-based deployment: if you are working with Hadoop YARN, you can run Spark directly on the existing YARN cluster manager.

- Launching Spark in MapReduce: you can download the Spark in MapReduce (SIMR) integration to use Spark together with MapReduce. You can use all the advantages of Spark data processing, including real-time processing and interactive queries, while still using the overall MapReduce tech stack.
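
For the first two options in the list above, the practical difference shows up in the `--master` argument passed to `spark-submit`. The sketch below only builds the command line and does not run anything. The host name and `job.py` are placeholders, 7077 is the default port of a standalone Spark master, and on YARN Spark additionally expects `HADOOP_CONF_DIR` to point at the cluster configuration. We have left the SIMR option out of the sketch.

```python
def spark_submit_args(app, mode, master_host="master.example.internal"):
    """Build a spark-submit command line for a standalone or YARN cluster."""
    if mode == "standalone":
        master = f"spark://{master_host}:7077"   # Spark's own cluster manager
    elif mode == "yarn":
        master = "yarn"                          # reuse Hadoop's resource manager
    else:
        raise ValueError(f"unsupported mode: {mode}")
    return ["spark-submit", "--master", master, app]

standalone_cmd = spark_submit_args("job.py", "standalone")
yarn_cmd = spark_submit_args("job.py", "yarn")
```

The application itself stays the same in both cases; only the cluster manager that hands out resources changes.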

Conclusion

When you are choosing between Spark and Hadoop for your development project, keep in mind that these tools are created for different scopes. Even though both are technically big data processing frameworks, they are tailored to achieving different goals. The scope is the main difference between Hadoop and Spark.

- Hadoop forms a distributed data structure: companies using Hadoop choose it for the possibility to store information on many nodes and multiple devices. Just as described in CERN’s case, it’s a good way to handle large computations while saving on hardware costs.

- Hadoop is created to index and track data state as well as to update all users in the network on changes. It’s perfect for large networks of enterprises, scientific computations, and predictive platforms.

- Spark focuses on data processing rather than distribution. It doesn’t ensure the distributed storage of big data, but in return, the tool is capable of processing many additional types of requests (including real-time data and interactive queries).

- Spark is faster, but it doesn’t always mean better. Companies that work with static data and don’t need real-time processing will be satisfied with Map/Reduce performance. Spark is used for machine learning, personalization, and real-time marketing campaigns: projects where multiple data streams have to be processed fast and simultaneously.

When we choose big data tools for our tech projects, we always make a list of requirements first. It’s important to understand the scope of the software and to have a clear idea of what big data will help accomplish. Once we understand our objectives, coming up with a balanced tech stack is much easier.

If you’d like our experienced big data team to take a look at your project, you can send us a description. We’ll show you our similar cases and explain the reasoning behind a particular tech stack choice.
