Do you ever wonder what the difference between Docker and Kubernetes is, or which one is the better technology? The Docker vs Kubernetes debate is quite popular among people who work with software containers.

But to get the best out of cloud-native development, software developers and data scientists need a clearer picture of what Kubernetes and Docker are, how they differ, and whether these two technologies can actually complement each other.

Kubernetes is a container orchestration technology that deploys and manages containers across a cluster of machines. Docker, on the other hand, is the technology responsible for building and running containers.

Today, containers are the de facto standard, with their use in production reaching 68%. However, it’s important to note that Kubernetes does not make containers. Instead, it relies on a container runtime, such as the one Docker provides, to create them. In other words, Kubernetes and Docker complement each other rather than compete.

According to Pavan Belagatti, “When talking about cloud-native, it is hard to ignore names like Docker and Kubernetes, which have revolutionized the way we create, develop, deploy and ship software at scale.”

In this article, we will explore the following:

- What Docker is and why you should use it;

- What Kubernetes is and why you should use it;

- The difference between Kubernetes and Docker;

- Whether Kubernetes and Docker can work well together.

But first, let’s dig a little deeper into the primary technology that brings these two technologies together – containers.

What are Containers?

A container is a logical subdivision where you can run applications isolated from the rest of the system. By default, a container cannot see the processes of other containers, it gets its own network stack, and its virtual filesystem is not shared with other containers or the host unless you configure it to be. Connections from outside the host reach a container only through ports you explicitly publish.

A container is what you run and host in Docker, and it packages everything the application needs: runtimes, environment variables, files, standard input and output, operating system files (the kernel itself is shared with the host), and packages. Although many people use “Docker” as another word for containers, containers existed long before Docker came into the limelight.

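For instance, the idea goes back to chroot, introduced in Unix in 1979, and Linux had container technologies such as LXC (Linux Containers) before Docker appeared. Early versions of Docker were built on LXC, which Docker later replaced with its own runtime components, known today as runc and containerd.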

Running containerized applications is often more convenient than installing and configuring software directly on a server. This is because containers are portable: an image built on one server can run on any other server that has a compatible container runtime and CPU architecture.

Conveniently, you can also run several copies of the same program simultaneously without any overlap or conflict. But for all this to go according to plan, you need a container runtime, the software that actually runs containers.

What is Docker?

Docker is an open-source containerization framework that automates the deployment of applications in lightweight, portable containers. In cloud computing and application packaging, it has clearly won the hearts of many.

Although Docker had many competitors, it made the concept of containers widely popular and accepted, which paved the way for platforms like Kubernetes. Docker relies on several Linux kernel features, such as namespaces, cgroups (control groups), and AppArmor profiles, to isolate processes into configurable virtual environments.

Docker has also been embraced by notable companies such as HP, Microsoft, Red Hat, SaltStack, VMware, and IBM, among others.

Understanding Docker Orchestration

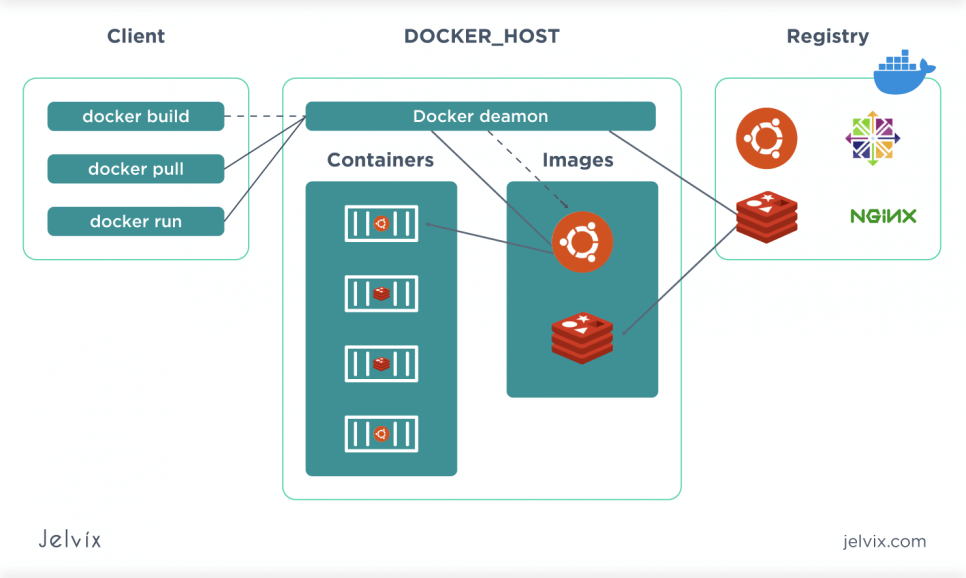

The technology behind Docker comprises two major components: the client command-line interface (CLI) tool and the container runtime. The CLI tool sends commands to the Docker runtime, while the runtime creates containers and runs them on the OS. Other tools, such as Gradle (through plugins) and Packer, can also drive Docker to build images.

Docker uses two main artifacts that are essential to container technology: the container itself and the container image, a template from which a container is created at runtime.

Because a container cannot exist outside of an operating system, a physical or virtual machine with an OS must be present for Docker to work in an automated continuous integration and continuous deployment (CI/CD) process. The machine must also have the Docker runtime and daemon installed. In an automated CI/CD environment, such a VM is typically provisioned with a DevOps tool like Ansible or Vagrant.

Running containers was hard work before Docker existed. Docker has made the process much easier because it is a full tech stack that handles many of the tasks involved.

The list below answers a frequently asked question: what is Docker used for?

- Proxy requests to and from the containers;

- Manage container lifecycle;

- Monitor and log all container activity;

- Mount shared directories;

- Put resource limits on containers;

- Build images using the Dockerfile format;

- Push and pull images from registries.
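To make the resource-limit, shared-directory, and "several copies" points concrete, here is a minimal Python sketch that assembles (but does not execute) a `docker run` command. The flags `-d`, `--name`, `--memory`, `--cpus`, `-v`, and `-p` are standard `docker run` options; the helper `build_run_command`, the image `my-app:1.0`, and the paths and ports are hypothetical placeholders.

```python
import shlex
from typing import Dict, List, Optional


def build_run_command(
    image: str,
    name: str,
    memory: Optional[str] = None,
    cpus: Optional[float] = None,
    volumes: Optional[Dict[str, str]] = None,
    ports: Optional[Dict[int, int]] = None,
) -> List[str]:
    """Return the argv for a detached `docker run` call (hypothetical helper)."""
    cmd = ["docker", "run", "-d", "--name", name]
    if memory is not None:
        cmd += ["--memory", memory]       # hard memory limit, e.g. "512m"
    if cpus is not None:
        cmd += ["--cpus", str(cpus)]      # CPU limit, e.g. half a core
    for host_path, container_path in (volumes or {}).items():
        cmd += ["-v", f"{host_path}:{container_path}"]  # bind-mount a shared directory
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]  # publish a container port
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    argv = build_run_command(
        "my-app:1.0", "web-1", memory="512m", cpus=0.5,
        volumes={"/srv/data": "/data"}, ports={8080: 80},
    )
    print(shlex.join(argv))
    # To actually start the container: subprocess.run(argv, check=True)
```

Calling the helper a second time with a different `name` and host port (for example `"web-2"` and `{8081: 80}`) describes a second copy of the same image that does not conflict with the first.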

Build and Deploy Containers Using Docker

Docker can help you build and deploy software within containers; with it, a developer can create, ship, and run applications. You define the build process in a file called a Dockerfile, and passing that file to the ‘docker build’ command produces an immutable image.

Think of a Docker image as a snapshot of the application and all its dependencies. To start it, a developer can use the ‘docker run’ command on any machine with Docker installed. You can also use Docker Hub, a cloud-based registry, to store and distribute the images you build.
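Why an image is "immutable" can be illustrated with a simplified, hypothetical model in Python: each build step produces a layer identified by the SHA-256 digest of its content, and an image is an ordered, read-only tuple of those digests. Real Docker images add manifests and configuration objects on top of this, so treat the `Image` class and its `image_id` scheme as a conceptual sketch, not Docker's actual format.

```python
import hashlib
from dataclasses import dataclass
from typing import Tuple


def layer_digest(content: bytes) -> str:
    """Content-address a layer: identical content always yields the same digest."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


@dataclass(frozen=True)
class Image:
    """A simplified image: an ordered, immutable tuple of layer digests."""
    layers: Tuple[str, ...]

    def add_layer(self, content: bytes) -> "Image":
        # Images are never modified in place; adding a layer returns a new image.
        return Image(self.layers + (layer_digest(content),))

    @property
    def image_id(self) -> str:
        # Derive an ID from the ordered layer digests (a simplification).
        return layer_digest("\n".join(self.layers).encode())


if __name__ == "__main__":
    base = Image(()).add_layer(b"base OS files")
    v1 = base.add_layer(b"app.py version 1")
    v2 = base.add_layer(b"app.py version 2")
    print(v1.image_id != v2.image_id)    # different content -> different image
    print(v1.layers[0] == v2.layers[0])  # the shared base layer is reused
```

Because nothing is ever changed in place, rebuilding with modified application code yields a new image while the old one, and any containers started from it, stay exactly as they were.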

What is Kubernetes?

Kubernetes is a powerful container management tool that groups the containers supporting a single application or microservice into pods. It is an open-source platform for automating the deployment, scaling, and management of containerized applications in a fault-tolerant, scalable way.

Rather than running containerized applications on a single server, Kubernetes distributes them across a group of machines. Applications running in Kubernetes act as a single unit, even though they may consist of several loosely coupled containers.

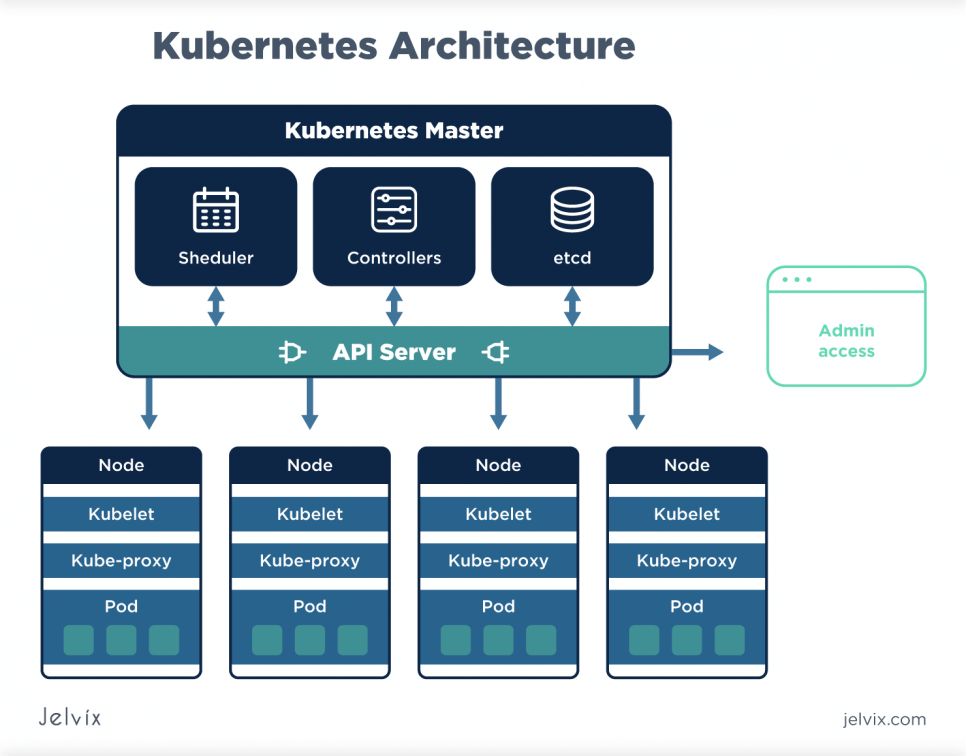

Pods, containers, and services (a Kubernetes abstraction that exposes a set of pods to the network) are hosted within a cluster of one or more computers, either virtual or physical. In Kubernetes terminology, these computers are called nodes.

The collection of nodes is known as a Kubernetes cluster. You can also define custom resources to extend a Kubernetes cluster to meet specific requirements.
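A Service finds the pods it exposes through a label selector rather than by pod name. The Python sketch below models only the simplest, equality-based form of that matching; the pod names and labels are hypothetical, and real selectors also support set-based expressions.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """Equality-based selection: every key/value in the selector must appear in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Labels, pods: Dict[str, Labels]) -> List[str]:
    """Return the names of the pods a Service with this selector would route to."""
    return sorted(name for name, labels in pods.items() if matches(selector, labels))


if __name__ == "__main__":
    pods = {
        "web-7d9f-abc": {"app": "web", "tier": "frontend"},
        "web-7d9f-def": {"app": "web", "tier": "frontend"},
        "db-0": {"app": "db", "tier": "backend"},
    }
    print(select_pods({"app": "web"}, pods))  # ['web-7d9f-abc', 'web-7d9f-def']
```

Selecting by label is what lets pods come and go (during scaling or after a failure) without the Service's configuration having to change.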

You might ask: what is Kubernetes used for? Kubernetes adds the following capabilities to containers:

Auto-scaling: Kubernetes can adapt automatically to changing workloads by starting and stopping pods as needed.

Rollouts: Kubernetes supports automated rollouts and rollbacks, which makes seemingly complex procedures such as canary and blue-green releases much easier to carry out.

Pods: pods are logical groups of one or more containers that are scheduled together and share resources such as network and storage.

Self-healing: It monitors and restarts containers if they break down.

Load-balancing: requests are allocated to pods that are available.

Storage orchestration: A user is able to mount the network storage system as a local file system.

Configuration management and secrets: sensitive information such as passwords and keys can be stored in Kubernetes objects called Secrets, while non-sensitive settings can go in ConfigMaps. Both let you configure an application without rebuilding its image.
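As a sketch of what a Secret looks like, the Python snippet below builds the dictionary form of a Secret manifest. Kubernetes expects the values in a Secret's `data` field to be base64-encoded; note that base64 is an encoding, not encryption. The helper `make_secret`, the name `db-credentials`, and its keys are hypothetical.

```python
import base64
import json
from typing import Dict


def make_secret(name: str, values: Dict[str, str]) -> dict:
    """Build an Opaque Secret manifest; values in `data` must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


if __name__ == "__main__":
    secret = make_secret("db-credentials", {"username": "admin", "password": "s3cr3t"})
    # kubectl also accepts JSON manifests, e.g. `kubectl apply -f secret.json`
    print(json.dumps(secret, indent=2))
```

Pods can then consume the Secret as environment variables or mounted files, so changing a credential does not require rebuilding the container image.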

Why is Kubernetes so Popular?

Kubernetes addresses most of the operational needs of containerized applications. Here are some of the top reasons why it has become so popular:

- It is one of the largest open-source projects in the world;

- It is an effective tool for monitoring container health;

- Auto-scaling feature support;

- Huge community support;

- High availability by cluster federation;

- Great container deployment;

- Effective persistent storage;

- Multi-cloud support (Hybrid Cloud);

- Compute resource management;

- Many proven real-world use cases are available.

Kubernetes Deployment

Kubernetes deployments are fault-tolerant, versatile, and scalable. They support upgrading or modifying pods at runtime with no interruption of service, and developers can configure Kubernetes to add more pods at runtime when demand increases. This makes applications running on Kubernetes highly scalable.
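Adding pods as demand grows is the job of the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python function below applies that rule with a tolerance band and min/max bounds; the real controller also uses stabilization windows and other refinements that this sketch omits, and the parameter names are illustrative.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Sketch of the HPA scaling rule: ceil(current * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 pods averaging 90% CPU against a 50% target -> scale out.
    print(desired_replicas(3, current_metric=90, target_metric=50))  # 6
    # 4 pods averaging 20% CPU against a 50% target -> scale in.
    print(desired_replicas(4, current_metric=20, target_metric=50))  # 2
```

The tolerance band keeps small metric fluctuations from causing constant scaling up and down.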

If a VM malfunctions, Kubernetes can automatically recreate the affected containers and pods on another machine in the same cluster, which is what makes Kubernetes fault-tolerant.
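Kubernetes controllers achieve this by repeatedly comparing the desired state with the actual state and acting on the difference. Below is a deliberately simplified Python model of one such pass; the `Pod` class, the node names, and the "fewest pods" placement rule are hypothetical simplifications, not how the real scheduler scores nodes.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Pod:
    name: str
    node: str


def reconcile(desired: int, pods: List[Pod], node_ready: Dict[str, bool]) -> List[Pod]:
    """One pass of a simplified control loop for a replicated application."""
    # Pods on a node that is not ready are treated as lost.
    alive = [p for p in pods if node_ready.get(p.node, False)]
    # Too many replicas: drop the extras.
    if len(alive) > desired:
        return alive[:desired]
    ready_nodes = sorted(n for n, ok in node_ready.items() if ok)
    if not ready_nodes:
        return alive  # nowhere to place replacements
    created = 0
    while len(alive) < desired:
        # Place each replacement on the ready node currently running the fewest pods.
        load = {n: sum(p.node == n for p in alive) for n in ready_nodes}
        target = min(ready_nodes, key=lambda n: load[n])
        created += 1
        alive.append(Pod(name=f"replacement-{created}", node=target))
    return alive


if __name__ == "__main__":
    pods = [Pod("web-1", "node-a"), Pod("web-2", "node-b"), Pod("web-3", "node-c")]
    ready = {"node-a": True, "node-b": False, "node-c": True}  # node-b has failed
    for pod in reconcile(3, pods, ready):
        print(pod)
```

Running the same pass again after it succeeds changes nothing, which is why a controller can safely repeat it in a loop.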

Kubernetes is a complex technology that is made up of components — also called resources — beyond pods and services. Kubernetes ships with default resources that facilitate security, data storage, and network management. Kubernetes can help you manage a containerized application in a more efficient manner.

Kubernetes has many features, but its main responsibility is container orchestration: ensuring that all the containers executing various workloads are scheduled to run on physical or virtual machines.

The containers should be packed efficiently according to the constraints of the deployment environment and the cluster configuration. Kubernetes also continuously watches all running containers and replaces any that are unresponsive, dead, or otherwise unhealthy.
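Scheduling under constraints can be pictured as two steps: filter out nodes that cannot fit a pod's resource requests, then score the rest. The Python sketch below assumes a bin-packing score that prefers the node left most fully used, similar in spirit to the "most allocated" strategy the scheduler can be configured with; the `Node` fields and the equal CPU/memory weighting are hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_total: float  # allocatable CPU cores
    cpu_free: float   # cores not yet requested by other pods
    mem_total: int    # allocatable memory in MiB
    mem_free: int     # MiB not yet requested by other pods


def schedule(cpu_request: float, mem_request: int, nodes: List[Node]) -> Optional[str]:
    """Filter nodes that can fit the request, then pick the one left most fully used.
    Returns None when no node fits (the pod would stay Pending)."""
    feasible = [n for n in nodes if n.cpu_free >= cpu_request and n.mem_free >= mem_request]
    if not feasible:
        return None

    def utilization_after(n: Node) -> float:
        cpu_used = (n.cpu_total - n.cpu_free + cpu_request) / n.cpu_total
        mem_used = (n.mem_total - n.mem_free + mem_request) / n.mem_total
        return (cpu_used + mem_used) / 2  # equal weight for CPU and memory

    return max(feasible, key=utilization_after).name


if __name__ == "__main__":
    nodes = [
        Node("node-a", cpu_total=4.0, cpu_free=0.5, mem_total=8192, mem_free=512),
        Node("node-b", cpu_total=4.0, cpu_free=3.0, mem_total=8192, mem_free=6144),
        Node("node-c", cpu_total=4.0, cpu_free=1.5, mem_total=8192, mem_free=2048),
    ]
    print(schedule(1.0, 1024, nodes))  # node-c: fits, and is packed tightest
```

Packing pods tightly leaves whole nodes free for large workloads; the scheduler's default scoring instead tends to spread pods out, so which strategy is "efficient" depends on the cluster's goals.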

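Kubernetes has long been used with Docker to manage containers and run images, but it can also use other container runtimes; historically, this included rkt from CoreOS. Since version 1.24, Kubernetes no longer ships built-in support for Docker Engine (the “dockershim”) and instead talks to runtimes such as containerd or CRI-O through the Container Runtime Interface (CRI); images built with Docker still run unchanged. The platform can be deployed within any infrastructure, for example, in a server cluster, local network, data center, or any kind of cloud: public (Microsoft Azure, Google Cloud, AWS, etc.), private, or even hybrid.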

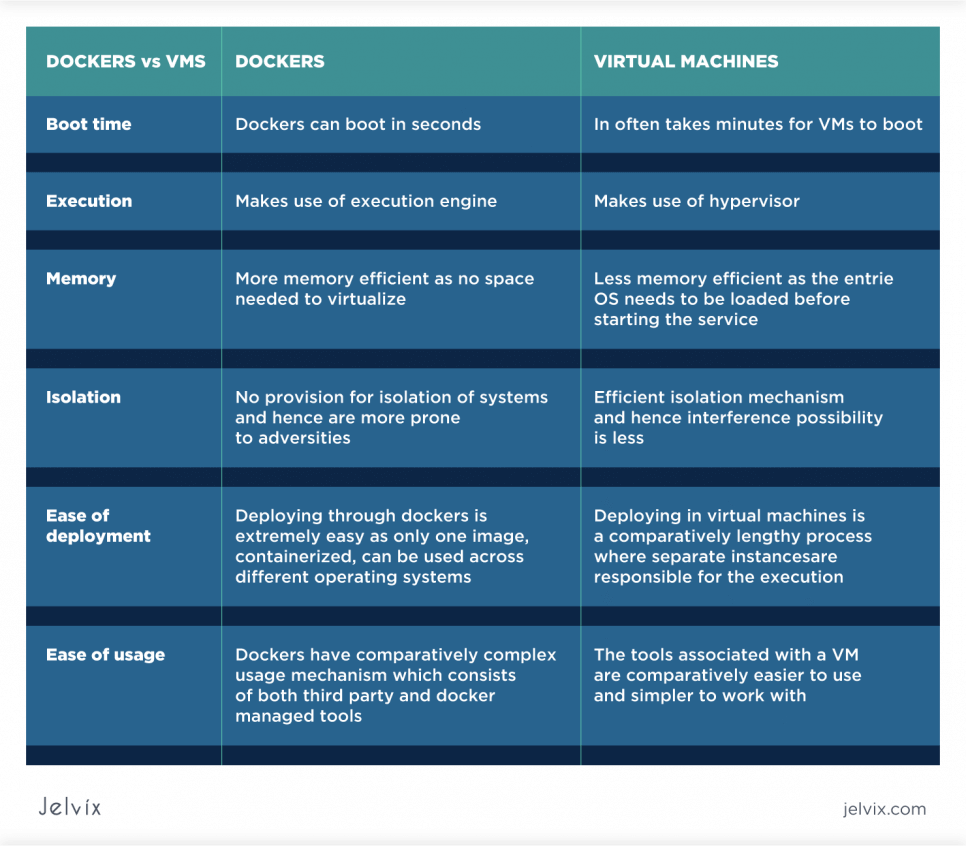

Docker vs Kubernetes: the Difference

Now that you know what Docker and Kubernetes are, it’s clear that these two technologies complement each other. They are not competing against each other: each has its own purpose in DevOps, and they are usually used together.

With that in mind, take a look at the differences:

- Docker is used to isolate your app into containers; it packs and ships your application. Kubernetes, by contrast, is a container scheduler whose purpose is to deploy and scale applications.

- Another major difference between Kubernetes and Docker is that Kubernetes was created to run across a cluster, while Docker on its own runs containers on a single node.

- A further difference is that Docker can be used on its own, without Kubernetes, whereas Kubernetes needs a container runtime in order to orchestrate anything.

Kubernetes is now regarded as the standard for container orchestration and management. It offers an infrastructure-level framework for orchestrating containers at scale and for managing how developers and users interact with them. In much the same way, Docker has become the standard for container development and deployment.

It provides a platform for building, deploying, and running containers at a much more basic level, and the images it builds are what Kubernetes clusters commonly run. In that sense, it is a foundation for the workloads Kubernetes manages.

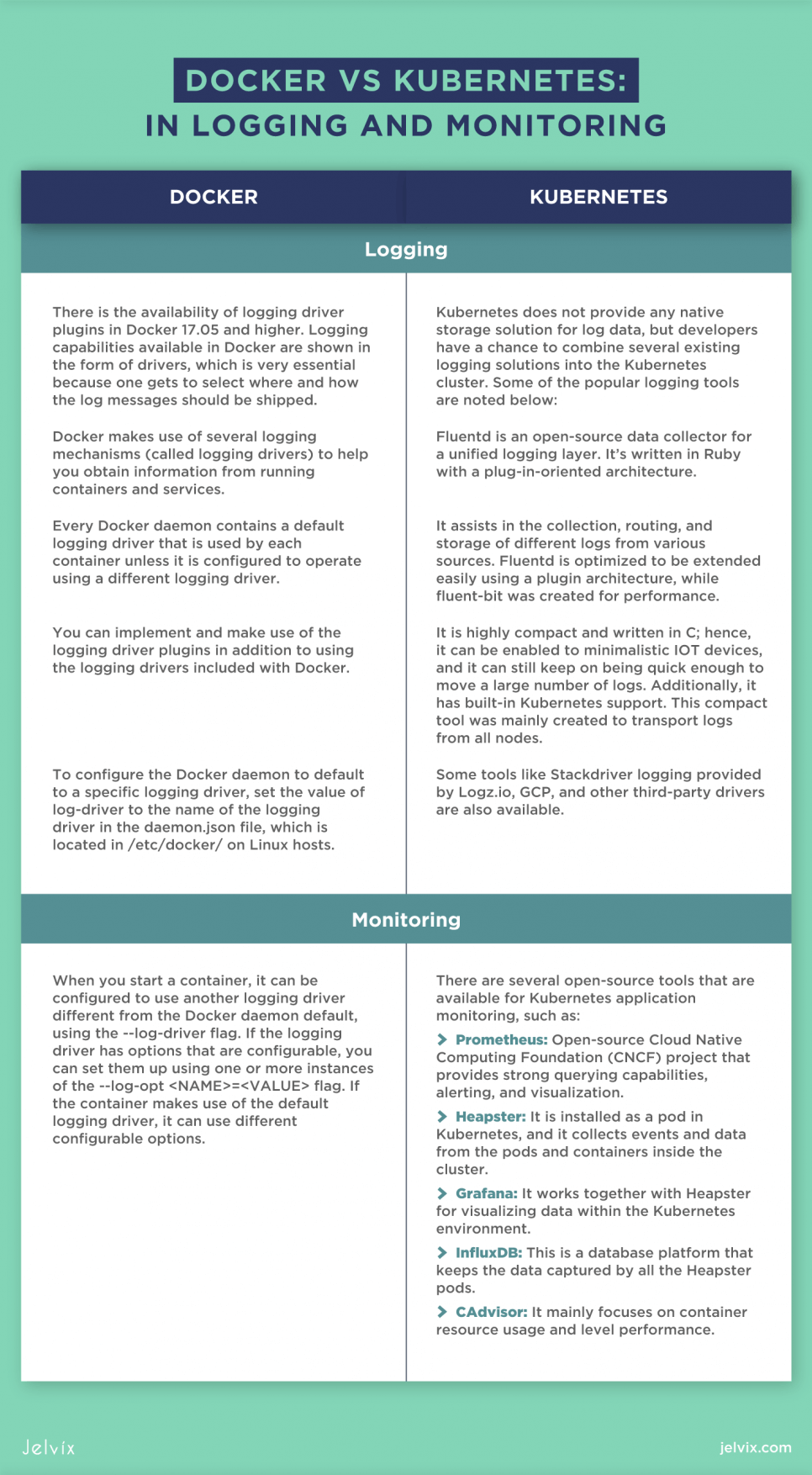

Docker vs Kubernetes: in Logging and Monitoring

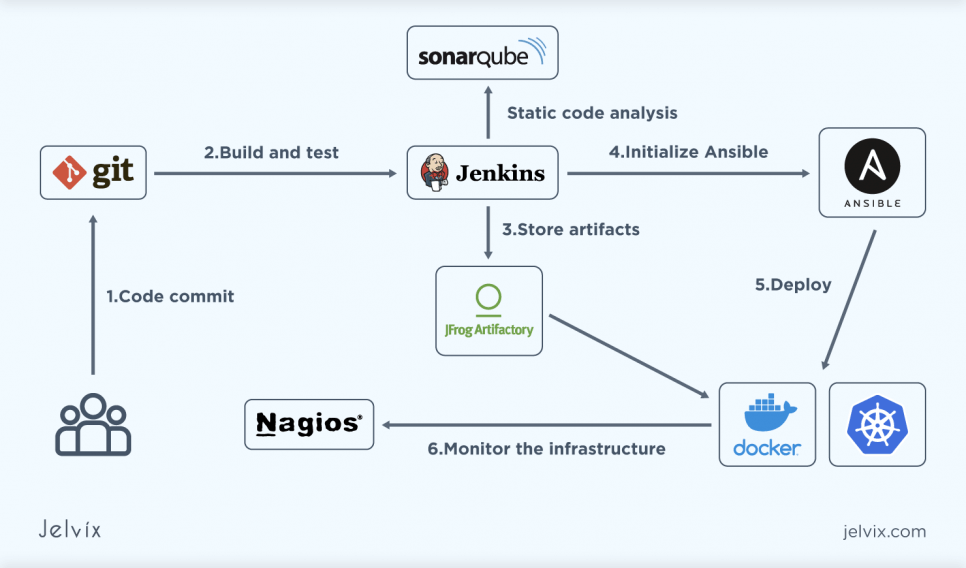

Here’s an example of a CI/CD setup using Kubernetes and Docker:

- Developers push their code to Git.

- Jenkins builds and tests the code with Maven as part of continuous integration (CI).

- Ansible playbooks are written to deploy the application on AWS.

- JFrog Artifactory is introduced as the repository manager after the Jenkins build, and the build artifacts are stored in Artifactory.

- Ansible communicates with Artifactory, retrieves the artifacts, and deploys them onto the Amazon EC2 instance.

- SonarQube assists with code review by performing static code analysis.

- Docker is then introduced as a containerization tool. As was done on Amazon EC2, the app is deployed, this time in a Docker container, by writing a Dockerfile and building Docker images.

- Once this setup is in place, Kubernetes is introduced to create a Kubernetes cluster, and the Docker images are deployed to it.

- Lastly, Nagios is used to monitor the infrastructure.
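The essential property of such a pipeline is that stages run in a fixed order and a failure stops everything after it. The Python sketch below shows that control flow under stated assumptions: the stage names paraphrase one plausible ordering of the steps above, and `ok` is a hypothetical placeholder for calls to Maven, SonarQube, Docker, kubectl, and so on.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stop at the first failure, and return the stages that passed."""
    passed: List[str] = []
    for name, step in stages:
        print(f"-> {name}")
        if not step():
            print(f"   {name} failed; later stages are skipped")
            break
        passed.append(name)
    return passed


def ok() -> bool:
    # Hypothetical placeholder: a real stage would invoke the actual tool and report its result.
    return True


if __name__ == "__main__":
    pipeline: List[Stage] = [
        ("build and test (Jenkins + Maven)", ok),
        ("static analysis (SonarQube)", ok),
        ("publish artifact (Artifactory)", ok),
        ("build Docker image", ok),
        ("deploy to Kubernetes cluster", ok),
        ("monitor infrastructure (Nagios)", ok),
    ]
    run_pipeline(pipeline)
```

Stopping early means a failing test or analysis step never produces an image that reaches the Kubernetes cluster.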


Conclusion

Although Kubernetes and Docker are different technologies, they work well together and make a very strong combination. You can run Docker-built images on a Kubernetes cluster, but this does not mean Kubernetes is superior to Docker. Bringing the two together provides a well-integrated platform for deploying, managing, and orchestrating containers at scale.
