By 2025, hundreds of FDA-cleared medical AI tools were already in active clinical use – from cardiology and dermatology to chronic care and radiology. Hospitals invested heavily, around USD 29 billion in 2024, expecting consistent performance. Instead, many teams faced the same silent failure: models that looked flawless in testing suddenly produced unstable outputs in production.

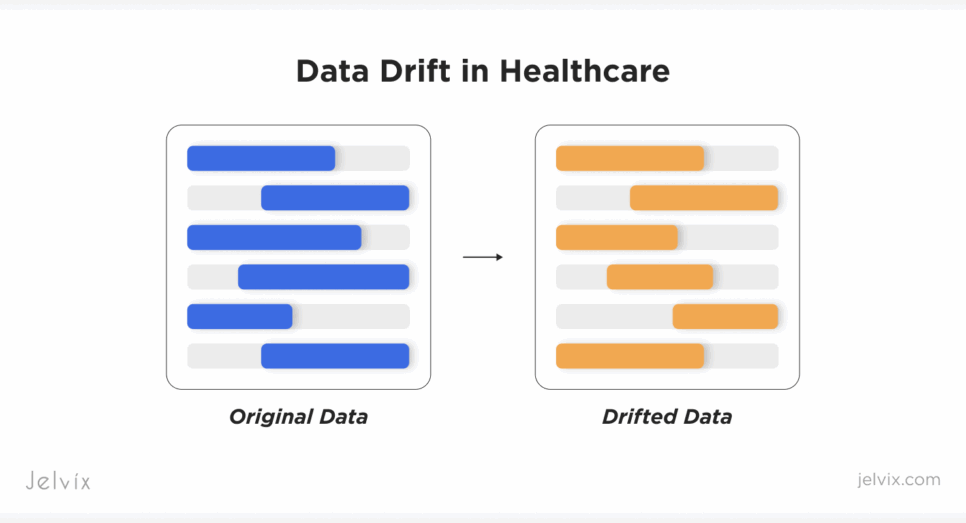

The reason is almost always the same. Data drift changes real-world inputs faster than the model can adapt, while hidden data bias baked into training data resurfaces at scale. These gaps create unpredictable behavior, uneven care, and growing concerns about the use of AI in healthcare.

Some organizations assume this degradation is unavoidable. It isn’t. With proper monitoring, including modern data drift detection pipelines supported by mature AI software development, teams can keep clinical models aligned with current patient data and reduce the long-term impact of bias.

If you want to prevent your medical AI from turning into another drift-driven cautionary tale, keep reading. This article explains what data drift is, why healthcare models degrade, how to detect and manage drift, and what it takes to build ethical and continuously monitored AI pipelines that stay accurate long after deployment.

The Anatomy of Model Degradation: Data Drift vs. Bias

Even the strongest clinical models degrade once they leave controlled training environments. The two forces behind this degradation – data bias and data drift – operate differently, but together they turn accurate algorithms into unreliable systems. Understanding how bias and drift differ, and how they interact, is critical for building long-lasting healthcare AI.

Data Bias: The Training-Stage Problem

Bias enters long before a model reaches production. It starts with the datasets used to train it and is one of the most common forms of bias in data science.

Many healthcare datasets overrepresent certain demographics while underrepresenting others. Oncology datasets may overweight outcomes from large academic centers but lack data from rural populations. Cardiology models often rely on device readings calibrated for specific age ranges or ethnic groups. Behavioral-health models may inherit gaps from incomplete registry data or missing social determinants of health inputs.

Once embedded, these imbalances become part of the model’s logic. The system performs well on the groups it “knows” and poorly on the groups it rarely sees. This is how AI bias in healthcare can become embedded in the model before deployment.

Data Drift: The Production-Stage Problem

Bias is visible during development. Drift is not, and this unseen change is a major reason why AI models fail in healthcare once deployed.

When a model enters a live clinical setting, real-world inputs start to change. New hypertension cuffs produce slightly different readings. EHR fields get reformatted. Updated oncology protocols introduce new lab panels. Patient populations age, diversify, and develop new comorbidities. Many of these shifts stem from upstream system changes and gaps in healthcare interoperability, which directly affect the data the model receives.

These changes accumulate quietly. Without continuous monitoring for data drift, and without clear methods for detecting it in medical models, the system continues to predict with confidence even as its true accuracy deteriorates. Left unmanaged, drift creates clinical risk, exposes organizations to compliance gaps, and forces emergency rollbacks that interrupt care workflows.

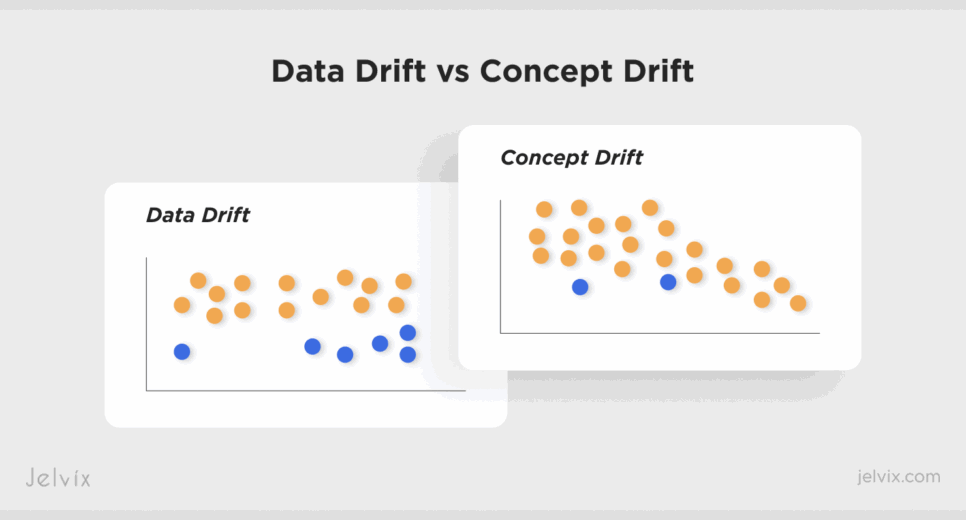

Two Types of Drift That Break Medical AI

Different kinds of drift affect clinical systems in different ways. Some distort the meaning of medical variables; others distort their distribution. Distinguishing them is essential not just for troubleshooting but also for building reliable detection and recovery pipelines.

Concept Drift: Clinical Meaning Changes

Concept drift appears when the relationships between clinical inputs and outcomes evolve. The model’s logic becomes misaligned with current medical practice, even if the data format itself looks unchanged.

This type of drift often shows up after:

- New treatments are introduced. Oncology biomarkers may respond differently once immunotherapies or targeted drugs become standard.

- Clinical guidelines change. Updated hypertension thresholds (such as changes in systolic/diastolic cutoffs) shift how blood-pressure readings map to risk categories.

- Disease patterns evolve. A rise in multi-morbidity or new variants of infectious diseases can change correlations that the model relied on during training.
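The guideline example can be made concrete with a minimal sketch. It assumes a simplified label derived from systolic pressure alone; `label_hypertension` is a hypothetical helper, and the 140 and 130 mmHg cutoffs are illustrative stand-ins for an older and a newer guideline rather than clinical advice.

```python
def label_hypertension(systolic_mmhg: float, cutoff_mmhg: float) -> bool:
    """Return True when a reading counts as hypertensive under a given cutoff."""
    return systolic_mmhg >= cutoff_mmhg


# Illustrative cutoffs only (assumption): an older and a newer guideline.
OLD_CUTOFF = 140.0
NEW_CUTOFF = 130.0

readings = [118.0, 128.0, 132.0, 137.0, 145.0]

old_labels = [label_hypertension(r, OLD_CUTOFF) for r in readings]
new_labels = [label_hypertension(r, NEW_CUTOFF) for r in readings]

# Same inputs, different ground truth: these readings changed clinical
# meaning even though the input values themselves did not move.
relabelled = [r for r, old, new in zip(readings, old_labels, new_labels) if old != new]
print(relabelled)
```

A model trained on the old labels would keep scoring the relabelled readings as low risk, and no input-distribution test would notice, because the inputs are identical.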

Concept drift is especially dangerous because the model continues to operate with outdated assumptions. The predictions look reasonable, calibration may still appear stable, and confidence scores remain high, but the clinical meaning behind these outputs is no longer correct. Addressing concept drift often requires deeper review, retraining with updated data, and direct clinician involvement.

Feature Drift: Input Distributions Shift

Feature drift occurs when the numerical properties of the inputs change while their clinical meaning remains constant. The variable still represents the same concept, for example heart-rate variability or creatinine level, but the values entering the model differ enough to cause performance degradation. These discrepancies often arise from subtle schema or documentation changes in clinical systems, which interoperability standards such as FHIR are designed to reduce.

This drift typically arises from:

- New device models. Wearables, BP cuffs, and glucometers may report values with different rounding logic, calibration profiles, or baseline offsets.

- Updated lab equipment. A new analyzer may systematically shift reference ranges or sensitivity.

- EHR workflow changes. Documentation habits, structured fields, and coding patterns shift after IT updates or new clinical protocols.

- Population shifts. A hospital serving a broader age range, a new ethnic composition, or a higher comorbidity load changes the numerical landscape of the data.

Feature drift often accumulates slowly, which makes it easy to overlook without automated AI data drift monitoring. Unlike concept drift, this type usually does not require rethinking the clinical meaning behind the model. Instead, it calls for recalibration, fine-tuning, or partial retraining based on the updated distributions.

Looking to stay ahead of the curve with AI you can actually justify and audit? Explore how Jelvix delivered an explainable, clinician-friendly AI solution for real-world healthcare.

Building the End-to-End AI Model Monitoring Architecture

Detecting drift on its own does not solve the problem. Healthcare teams need an architecture that continuously captures signals, validates them, and turns them into safe actions, a foundational requirement in modern healthcare software development. This architecture involves not only MLOps but also strong AI model governance, built on best practices for monitoring clinical AI.

When ingestion, real-time processing, or orchestration is missing, even the strongest drift-detection models stop working in real clinical environments. The real objective is to build a pipeline that notices changes before clinicians encounter them.

Defining the Baseline

The baseline is the model’s anchor. It describes how the model behaves under stable conditions and how its input data should look when nothing unusual is happening.

To build a baseline, teams must document performance metrics such as AUC, sensitivity, specificity, PPV/NPV, calibration curves, and class-level performance. At the same time, they must map out the statistical properties of each feature: ranges, distributions, device IDs, missingness patterns, categorical frequencies, and correlations. This helps differentiate normal variation from early data decay that may signal emerging problems.
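As a rough illustration of the feature side of a baseline, the sketch below summarises a reference dataset into per-feature statistics using only the Python standard library. The function name `build_baseline`, the field names, and the sample rows are all hypothetical; a real baseline would also store the performance metrics listed above.

```python
import statistics
from collections import Counter


def build_baseline(rows, numeric_features, categorical_features):
    """Summarise a reference dataset into a per-feature baseline profile."""
    profile = {}
    total = len(rows)
    for name in numeric_features:
        values = [r[name] for r in rows if r.get(name) is not None]
        profile[name] = {
            "mean": statistics.fmean(values),
            "stdev": statistics.pstdev(values),
            "min": min(values),
            "max": max(values),
            "missing_rate": 1 - len(values) / total,
        }
    for name in categorical_features:
        values = [r[name] for r in rows if r.get(name) is not None]
        counts = Counter(values)
        profile[name] = {
            "frequencies": {k: c / len(values) for k, c in counts.items()},
            "missing_rate": 1 - len(values) / total,
        }
    return profile


# Hypothetical reference rows captured during a stable period.
reference_rows = [
    {"creatinine": 0.9, "device_id": "analyzer_a"},
    {"creatinine": 1.1, "device_id": "analyzer_a"},
    {"creatinine": None, "device_id": "analyzer_b"},
    {"creatinine": 1.0, "device_id": "analyzer_a"},
]

baseline = build_baseline(reference_rows, ["creatinine"], ["device_id"])
print(baseline["creatinine"])
print(baseline["device_id"]["frequencies"])
```

Storing missingness and device frequencies next to the numeric summaries makes it possible to notice a new analyzer or a newly empty field before the values themselves move.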

In healthcare, this matters because upstream systems often change silently. A lab’s reference range might tighten, a new ICD-10 code may replace an older version, or a wearable manufacturer may release a firmware update that shifts readings by a few percent.

With a baseline in place, teams know exactly when these changes begin influencing the model. Without one, drift signals blend into normal operational noise, and degradation becomes visible only after clinicians report inconsistencies.

Continuous Drift Detection Framework

To monitor a model in production, teams need more than statistical tests. They need machine learning operations (MLOps), the operational layer that keeps machine-learning models running safely over time and prevents the early stages of AI model collapse.

In healthcare, MLOps is the set of tools, workflows, and processes that manage how a model is deployed, monitored, updated, retrained, validated, and rolled back. It ensures the model you ship is the one clinicians can trust weeks or months later.

A drift-detection framework built on MLOps continuously compares new clinical data with the model’s baseline environment. The system looks for signals that the model is seeing something new or unexpected.

Statistical tests form the technical core of this monitoring:

- KS tests show when continuous features no longer follow their historical shape.

- PSI picks up shifts in population characteristics, such as changes in age mix or the emergence of new comorbidities.

- KL divergence catches early, subtle changes that often precede full model degradation.
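The three tests above can be sketched in plain Python. This is a simplified illustration, not a production implementation: the bin edges, the small `eps` floor for empty bins, and the simulated systolic readings are assumptions, and alert thresholds are left out because they depend on the feature and the clinical context.

```python
import math
import random
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap


def bin_fractions(values, edges, eps=1e-6):
    """Share of values per bin; eps (assumption) avoids log(0) on empty bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(reference, current, edges):
    """Population Stability Index over shared bins."""
    p, q = bin_fractions(reference, edges), bin_fractions(current, edges)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def kl_divergence(reference, current, edges):
    """KL divergence of the current distribution from the reference one."""
    p, q = bin_fractions(reference, edges), bin_fractions(current, edges)
    return sum(qi * math.log(qi / pi) for pi, qi in zip(p, q))


# Simulated systolic readings: a baseline window and a window shifted by 5 mmHg.
rng = random.Random(0)
reference = [rng.gauss(120.0, 10.0) for _ in range(2000)]
shifted = [rng.gauss(125.0, 10.0) for _ in range(2000)]
edges = [100.0, 110.0, 120.0, 130.0, 140.0]

ks_value = ks_statistic(reference, shifted)
psi_value = psi(reference, shifted, edges)
kl_value = kl_divergence(reference, shifted, edges)
print(ks_value, psi_value, kl_value)
```

Comparing a window against itself returns zero for all three measures, which is a useful sanity check before wiring them into a pipeline.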

These tests matter, but the context matters even more. Drift detection becomes reliable when tied to operational events. If a hospital installs a new blood-pressure device, updates its lab analyzer, or introduces a new EHR template, the monitoring system automatically flags the change as a potential drift event and evaluates the model’s behavior under the new conditions.

This is where Big Data services and engineering support MLOps. Healthcare data arrives from many sources, at different speeds, with frequent schema changes and occasional missing records. A drift-detection pipeline must handle:

- continuous ingestion,

- high-volume streaming,

- evolving schemas,

- late-arriving or incomplete data,

- asynchronous updates from clinical systems.
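Two items from this list, evolving schemas and late-arriving data, can be checked at ingestion time before a record reaches the drift statistics. The sketch below is a minimal illustration; the expected field names, the six-hour lateness tolerance, and the `triage_record` helper are assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical schema and lateness tolerance (assumptions for this sketch).
EXPECTED_FIELDS = {"patient_id", "systolic_mmhg", "recorded_at"}
MAX_LATENESS = timedelta(hours=6)


def triage_record(record, received_at):
    """Return a list of data-quality issues found in one incoming record."""
    fields = set(record)
    issues = []
    missing = EXPECTED_FIELDS - fields
    if missing:
        issues.append(f"missing fields: {sorted(missing)}")
    unexpected = fields - EXPECTED_FIELDS
    if unexpected:
        issues.append(f"new fields (possible schema change): {sorted(unexpected)}")
    recorded_at = record.get("recorded_at")
    if recorded_at is not None and received_at - recorded_at > MAX_LATENESS:
        issues.append("late arrival")
    return issues


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)

clean = {"patient_id": "p1", "systolic_mmhg": 128, "recorded_at": now - timedelta(hours=2)}
# A renamed field arriving eight hours late, as might follow an EHR template update.
suspect = {"patient_id": "p2", "sbp": 131, "recorded_at": now - timedelta(hours=8)}

clean_issues = triage_record(clean, now)
suspect_issues = triage_record(suspect, now)
print(clean_issues)
print(suspect_issues)
```

Records with issues can be counted and surfaced rather than silently dropped, so a schema change shows up as a signal instead of a gap in the data.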

Without this foundation, data drift signals get lost, delayed, or misinterpreted, and the model continues making decisions that no longer match the real clinical environment.

Automated Mitigation and Recovery

Detecting drift is only the first step. The real work begins when the system decides how to respond. Some changes require full retraining, especially when clinical meaning shifts and the model’s original logic no longer applies. Others call for partial retraining or fine-tuning, which is faster and safer when only feature distributions drift.

Healthcare AI cannot rely on manual fixes or ad-hoc decisions. The mitigation layer defines how the model adapts, how new versions are validated, and how teams protect clinicians from AI model collapse by responding quickly and consistently.

Retraining Pipelines

A retraining pipeline is an automated workflow that refreshes the model using the most recent, high-quality data. It collects drifted features, prepares updated datasets, retrains the model, evaluates performance, and packages the new version for validation.

Automated retraining pipelines for healthcare AI must be reproducible, fully logged, and transparent, so that teams can explain exactly how and why a model has changed. A well-designed pipeline shortens response time from weeks to hours and eliminates risky manual steps, such as hand-built datasets or one-off training scripts.

Full vs. Partial Retraining

Not all drift requires the same response. Full retraining is needed when the underlying clinical relationships have changed. Examples include new treatment protocols, altered biomarker behavior, or updated disease definitions. In these cases, the model’s logic must be rebuilt from the ground up.

Partial retraining or fine-tuning is suitable when the data distribution shifts but the meaning remains intact. For example, new device calibration or changes in demographic composition can often be handled by adjusting weights rather than reconstructing the entire model.

Dividing drift into these two categories prevents unnecessary retraining and reduces downtime.
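The split can be expressed as a small routing rule. The sketch below assumes two upstream signals, one from statistical checks and one from clinical review; the `DriftAssessment` class and the action names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DriftAssessment:
    input_distribution_shifted: bool     # e.g. raised by KS or PSI checks
    clinical_relationship_changed: bool  # e.g. confirmed by clinical review


def plan_response(assessment: DriftAssessment) -> str:
    """Map a drift assessment to a retraining action."""
    if assessment.clinical_relationship_changed:
        # Concept drift: the model's logic has to be rebuilt.
        return "full_retraining"
    if assessment.input_distribution_shifted:
        # Feature drift: meaning is intact, so adjust rather than rebuild.
        return "partial_retraining"
    return "no_action"


new_device = DriftAssessment(input_distribution_shifted=True, clinical_relationship_changed=False)
new_protocol = DriftAssessment(input_distribution_shifted=False, clinical_relationship_changed=True)

print(plan_response(new_device))
print(plan_response(new_protocol))
```

Checking the clinical signal first means a guideline change always triggers the heavier response, even when the input distributions look stable.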

Human-in-the-Loop Validation

Before any updated model reaches clinicians, it must pass clinical review. Automated tests confirm statistical performance, but human experts confirm clinical relevance. Data scientists check calibration, drift correction, and subgroup behavior. Clinicians assess whether outputs make sense in real scenarios and whether any new bias has appeared.

This step is essential because healthcare AI affects treatment plans, risk scores, triage decisions, and diagnostic pathways. No model update should be deployed without explicit human approval.
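One of the subgroup checks a reviewer might run before sign-off is a sensitivity comparison across patient groups. The sketch below is illustrative only: the group labels, the sample records, and the 0.10 maximum gap are assumptions, and a real review would look at more metrics than sensitivity.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, y_true, y_pred) with binary 0/1 labels."""
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def flag_gaps(per_group, max_gap=0.10):
    """List groups whose sensitivity trails the best group by more than max_gap."""
    best = max(per_group.values())
    return sorted(g for g, s in per_group.items() if best - s > max_gap)


# Hypothetical validation records: (age band, true label, predicted label).
records = [
    ("18-40", 1, 1), ("18-40", 1, 1), ("18-40", 1, 1), ("18-40", 1, 1), ("18-40", 0, 0),
    ("65+", 1, 1), ("65+", 1, 0), ("65+", 1, 1), ("65+", 1, 0), ("65+", 0, 0),
]

per_group = sensitivity_by_group(records)
flagged = flag_gaps(per_group)
print(per_group, flagged)
```

A flagged group is a prompt for human review rather than an automatic rejection, since small subgroups can produce noisy estimates.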

Rollback Mechanisms

Even validated models can behave unpredictably once exposed to real-world data. A safe monitoring architecture includes instant rollback capabilities. If a newly deployed model shows signs of instability – unexpected predictions, lower accuracy, new disparities – the system must immediately revert to the last stable version.

Rollback protects clinical workflows, reduces operational risk, and ensures that model updates do not interrupt care.
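The approval and rollback rules described above can be sketched as a tiny in-memory registry. `ModelRegistry` is a hypothetical class written for this example and does not represent the API of any specific MLOps tool; a real system would persist versions and approvals.

```python
class ModelRegistry:
    """Tracks deployed versions so the last stable one can be restored."""

    def __init__(self):
        self._history = []  # most recent deployment is last

    def promote(self, version, approved_by):
        """Deploy a version, refusing updates without named human approvers."""
        if not approved_by:
            raise PermissionError("explicit human approval is required")
        self._history.append({"version": version, "approved_by": list(approved_by)})

    @property
    def active(self):
        return self._history[-1]["version"] if self._history else None

    def rollback(self):
        """Retire the active version and return to the previous one."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier stable version to revert to")
        retired = self._history.pop()
        return retired["version"]


registry = ModelRegistry()
registry.promote("risk-model-v1", approved_by=["clinical_lead", "ml_lead"])
registry.promote("risk-model-v2", approved_by=["clinical_lead", "ml_lead"])

# Suppose monitoring reports instability in v2 shortly after release.
rolled_back = registry.rollback()
print(rolled_back, "->", registry.active)
```

Keeping the previous version in the history, rather than overwriting it, is what makes the revert immediate.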

Data Observability and Alerting

A drift-monitoring system is only useful if teams can check what is happening and respond quickly. Data observability provides that visibility. It connects the model’s behavior to real clinical operations and ensures that both technical and clinical teams understand when, where, and why drift occurs – a key requirement for effective AI model governance.

Integrating with Hospital EHR and PV Systems

Healthcare data rarely flows from a single source. A monitoring layer must integrate with EHR systems, laboratory information systems, pharmacy and PV platforms, imaging archives, and device telemetry. Strong clinical interoperability is essential here because every upstream system change affects the data the model relies on.

This integration allows the system to track how upstream changes influence the model. For example:

- an EHR update that modifies a field’s format,

- a LIS analyzer that shifts reference ranges,

- a pharmacy system that introduces new drug codes with different interaction profiles.

When these changes appear, observability captures them immediately, lowering the risk of silent failures and reflecting how hospitals manage model degradation in real clinical workflows. It also ensures that all drift signals are mapped back to their clinical context.

Real-Time Dashboards and Alerts

Monitoring requires information that is easy to interpret and available in real time. Dashboards give data teams a clear view of:

- model performance over time,

- feature-level drift signals,

- subgroup performance (age, sex, ethnicity, comorbidity clusters),

- data-quality anomalies,

- volume shifts that may indicate upstream system issues.

Alerting systems notify teams as soon as data drift crosses a threshold or when specific patient groups begin receiving inconsistent predictions. Alerts can target different roles: engineers for infrastructure issues, data scientists for distribution changes, and clinical teams for meaningful drops in reliability.
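Role-based alerting of this kind reduces to a threshold check plus a routing table. In the sketch below the metric names, threshold values, and team names are all hypothetical and would be set by each organization.

```python
# Hypothetical routing table: alert category -> team to notify.
ROUTES = {
    "infrastructure": "engineers",
    "distribution": "data_scientists",
    "reliability": "clinical_team",
}


def build_alerts(metrics, thresholds):
    """Return one alert per metric whose value exceeds its threshold."""
    alerts = []
    for name, (category, value) in metrics.items():
        limit = thresholds.get(name)
        if limit is not None and value > limit:
            alerts.append({"metric": name, "value": value, "notify": ROUTES[category]})
    return alerts


# Example snapshot of monitoring metrics (illustrative numbers).
metrics = {
    "ingest_lag_minutes": ("infrastructure", 45),
    "psi_systolic": ("distribution", 0.31),
    "sensitivity_drop": ("reliability", 0.02),
}
thresholds = {"ingest_lag_minutes": 30, "psi_systolic": 0.25, "sensitivity_drop": 0.05}

alerts = build_alerts(metrics, thresholds)
for alert in alerts:
    print(alert)
```

Here the ingestion lag and the distribution shift raise alerts for two different teams, while the small sensitivity drop stays below its threshold and reaches no one.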

These tools help organizations respond before clinicians encounter incorrect or unstable outputs and before data bias affects care delivery.

Clinical Governance Layer

Technical monitoring alone is not enough. Healthcare requires a governance layer that evaluates the clinical impact of every drift signal and retraining event.

This layer records:

- drift detections,

- retraining decisions,

- validation outcomes,

- bias analyses,

- deployment timestamps,

- rollback events.

It creates a full, auditable history of the model’s life cycle – something regulators expect, and clinical teams rely on.
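One way to keep such a history tamper-evident is to chain each record to the previous one with a hash. This is an assumption about how an audit trail could be built, not a regulatory requirement or a description of any specific product; `AuditLog` and the event names are hypothetical.

```python
import hashlib
import json


def _digest(body):
    """Stable SHA-256 over a JSON-serialisable record."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def record(self, event_type, details, timestamp):
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {
            "event_type": event_type,
            "details": details,
            "timestamp": timestamp,
            "prev_hash": prev_hash,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("drift_detection", {"feature": "systolic_mmhg", "psi": 0.31}, "2025-01-01T12:00:00Z")
log.record("retraining_decision", {"action": "partial_retraining"}, "2025-01-01T13:00:00Z")
log.record("deployment", {"version": "risk-model-v2"}, "2025-01-02T09:00:00Z")

intact = log.verify()
print(intact)
```

If any stored entry is edited after the fact, `verify()` returns `False`, which gives reviewers a simple integrity check over the model's history.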

Governance also establishes clear decision rights: who approves model updates, who investigates drift alerts, and who signs off on clinical relevance.

By combining observability, alerting, and governance, organizations move from reactive troubleshooting to a proactive model of stewardship, which is the foundation of safe and reliable medical AI.

Seeing your AI model struggle because patient data is still siloed across systems? Discover how the right integration fixes it.

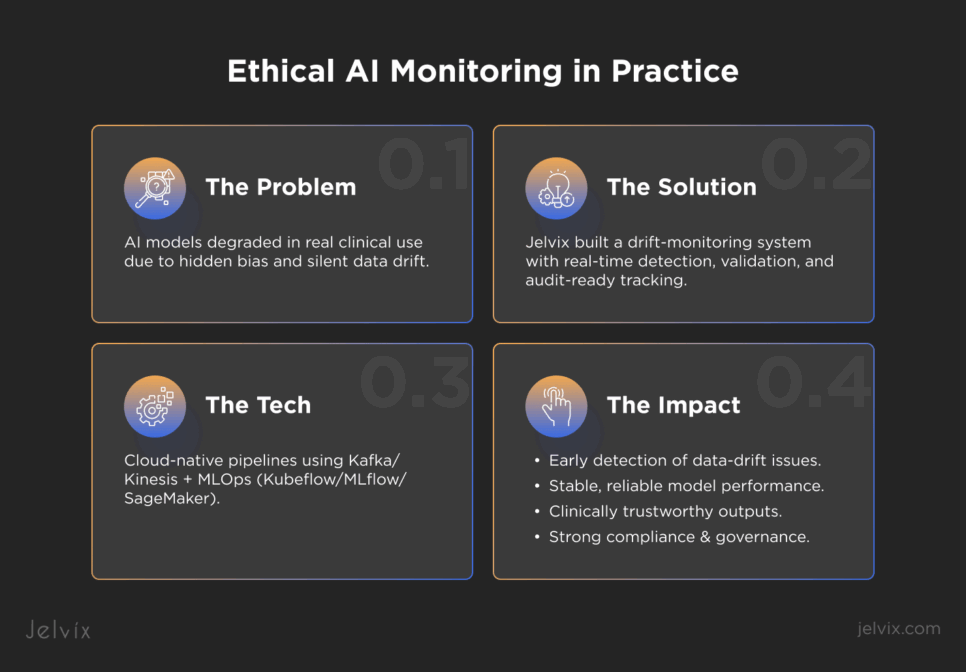

Jelvix Case Study: Ethical AI Model Monitoring in Practice

With the architecture outlined, it helps to see how data drift appears in real healthcare products. The following example comes from a recent Jelvix project that involved implementing healthcare predictive analytics. For confidentiality reasons, the client’s name is not disclosed, but the challenges reflect what many healthcare AI teams face once their models move from controlled testing into real clinical use.

The Client Challenge

A healthcare technology firm developing AI-driven tools for diagnostics and treatment faced a critical problem: its models were at risk from hidden biases and unmonitored drift. Although validated in controlled environments, the systems began to show signs of data decay once deployed in real clinical settings. Device changes, evolving patient demographics, and shifting documentation workflows created instability that the client struggled to detect.

These challenges reflected early stages of AI model collapse, where performance deteriorates silently and unpredictably. The stakes were high – patient safety, clinician trust, regulatory liability, and long-term compliance readiness were all on the line.

Jelvix Architectural Solution

Our team deployed a dedicated drift-monitoring module embedded within the analytics platform. The module includes event-driven pipelines that detect changes such as new lab analyzers, EHR schema updates, or shifts in patient mix. Continuous validation routines check both model performance and data integrity in production. Every action is logged in audit-ready trails, so each drift detection, retraining event, and rollout decision is traceable and compliant.

Technology Stack

We built the solution using a cloud-native architecture optimized for scale and resilience. Data ingestion and streaming used Apache Kafka or Amazon Kinesis to handle high-throughput clinical data flows. Machine-learning models and monitoring pipelines were implemented in Python and managed via MLOps tools such as Kubeflow, MLflow, and AWS SageMaker. The infrastructure was fully containerized and orchestrated to ensure seamless updates and rollback capability.

Results

The impact of the monitoring system became clear once it was deployed in real clinical conditions. Each improvement addressed a different part of model reliability and helped the client maintain safe, predictable AI performance.

Early-Warning Detection

The monitoring architecture allowed the team to identify data issues long before they influenced clinical decisions. Drift signals surfaced in real time, helping prevent silent data decay and allowing prompt mitigation. Instead of reacting to clinician complaints, the system proactively identified problems, reducing operational risk and improving patient safety.

Stable Model Performance

Continuous comparison between baseline behavior and live inputs kept the model stable even as hospital conditions evolved. The platform distinguished routine variability from meaningful drift, which ensured that performance stayed within expected clinical ranges. This stability was particularly important when devices, populations, or documentation patterns changed.

Clinically Reliable Outputs

With drift detection and validation in place, clinicians received outputs that remained predictable and clinically coherent. Subgroup checks reduced hidden risks related to data bias in AI, and consistent calibration helped prevent incorrect triage, risk scoring, or treatment recommendations. As a result, clinicians’ trust in the AI system increased.

Ethical Alignment and Compliance

Audit-ready logs, transparent retraining workflows, and structured governance supported regulatory-grade AI model governance. The client could demonstrate exactly how and when the model changed, why decisions were made, and how bias checks were performed. This strengthened compliance and aligned the system with emerging ethical and regulatory expectations for medical AI.

How Jelvix Helps Healthcare Businesses Get From Model Building to Model Maintenance

Building a medical AI model is only the beginning. The real work and the real responsibility start once the model enters a live clinical environment.

Medical AI only succeeds when it is continuously monitored. Accuracy at launch says very little about how a system will behave once data sources change, patient populations shift, and new data drift signals begin to accumulate. Without governance, even a strong model can begin to inherit silent bias and lose the stability clinicians rely on.

The future of healthcare AI depends on continuous validation, drift-aware pipelines, and MLOps practices built to the standards expected by regulators. This is how organizations prevent long-term data bias, maintain trust, and keep their systems aligned with real clinical conditions. In practice, continuous monitoring is the only way to make medical AI safe.

If your organization is scaling medical AI, now is the time to secure that investment. Jelvix helps teams build ethical, reliable Big Data and MLOps architectures that keep models stable long after deployment. Secure your AI investment with expert guidance – connect with us to build a production-ready, compliance-aligned AI ecosystem.

Ready to secure your medical AI in real-world conditions?

Work with Jelvix to build a reliable, compliant, production-ready AI architecture.