If you follow the news, or simply pay attention to what is happening around you, you probably already know that software is eating the world. Many experts believe that within the next decade or so, artificial intelligence and machine learning will, in turn, eat the world of software.

The potential of artificial intelligence is truly immense because of new strides in artificial neural nets and advancements in machine learning. As a matter of fact, in recent years, companies like Microsoft and Darktrace have been able to use machine learning to detect malicious activity on their networks almost instantly.

Out of the many different AI and machine learning applications, one of the use-cases that will be impacted the most is cybersecurity and cybersecurity audits. Because there is no doubt that different attacks on security infrastructure will become more advanced, AI will be needed to keep these threats at bay more easily. Here is a deeper look at how AI and ML can impact cybersecurity.

What is Cybersecurity

Before we go deeper into the potential impact of artificial intelligence, we first have to define what cybersecurity is. Put simply, cybersecurity is the set of safeguards a company puts in place to keep itself and its sensitive data safe from cyberattacks by blackhat entities.

Cybersecurity is particularly important because it protects all kinds of data from all manner of exploitation. For example, leaked personal data can be used to apply for credit fraudulently. The target could also be the infrastructure systems of an entire school, which hackers can shut down and hold until a ransom is paid to restore services.

Because of this, it is not difficult to see why having robust cybersecurity in place is so important. Not only does it keep data private, but it also prevents losses that can run into millions of dollars, both from the damage an attack causes and from the damage control that follows.

Having cybersecurity standards in place is so important that enterprise clients are now looking for cybersecurity compliance certifications like SOC 2 and ISO 27001 to feel comfortable sharing their data with a company.

Potential Future Impact of AI and ML on Cybersecurity

To understand how useful artificial intelligence can be for cybersecurity, we have to first take a look at some of the biggest challenges that cybersecurity experts are currently facing. First of all, scouring the systems infrastructure of a company to find potential threats manually can be very tedious work and is very prone to human error.

Smaller companies often cannot do this effectively because they lack the manpower it takes, so they are naturally easier targets for malicious actors to prey upon.

The second problem cybersecurity experts face is that even modern cybersecurity infrastructure responds to threats reactively rather than proactively. In other words, most systems cannot detect a threat until it has already acted on them. This is not ideal: a reactive system allows a faster response, but it does not prevent the attack in the first place.

The third biggest challenge is finding the time and resources for a cybersecurity assessment. The problem with SOC 2 and ISO 27001 audits is that they take months to complete, and they are also very expensive.

Artificial intelligence can help with all of these problems. It can assess potential threats in a system with context and intuition comparable to a human being's, but it will not burn out after searching through millions of data points to find them. It also allows for far more proactive cybersecurity that can detect threats before they get a chance to do any harm.

Lastly, AI can help by automating the auditing process for SOC 2 and ISO 27001, making it far less expensive and far less time-consuming. That way, startups as well as large enterprises will be able to get these coveted security compliance certifications.
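To make the idea of proactive detection a little more concrete, here is a minimal sketch of flagging unusual activity against a baseline. It uses a plain z-score test from statistics rather than a trained machine learning model, so treat it as a stand-in for the learned detectors described above. The function name, the threshold, and the failed-login counts are all assumptions made for illustration.

```python
from statistics import mean, stdev

def is_anomalous(history, current, threshold=3.0):
    """Flag `current` if it sits more than `threshold` standard deviations
    above the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current > mu  # flat baseline: any increase stands out
    return (current - mu) / sigma > threshold

# Hypothetical hourly failed-login counts for one account.
baseline = [2, 3, 1, 4, 2, 3, 2, 3]
print(is_anomalous(baseline, 3), is_anomalous(baseline, 40))
```

A check like this can run continuously on incoming events, which is what lets a system raise an alert before an attacker has finished, rather than after.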

Proactive Steps to Apply AI to Cybersecurity Without Compromising Privacy

The major drawback of using artificial intelligence in cybersecurity is that cybersecurity is, at its core, about protecting data and ensuring that sensitive information remains secure.

Artificial intelligence works against this goal to some extent, because it improves by taking in millions of data points. That tension creates difficult challenges as far as implementation is concerned.

First of all, you have to think that some of the largest technology companies in the world with some of the best implementations of artificial intelligence, such as Facebook and Google, have all had very serious security breaches in the past.

If these companies could not keep private information secure in those comparatively simple use cases, how secure will this information be when it is paired with incredibly sophisticated machine learning algorithms?

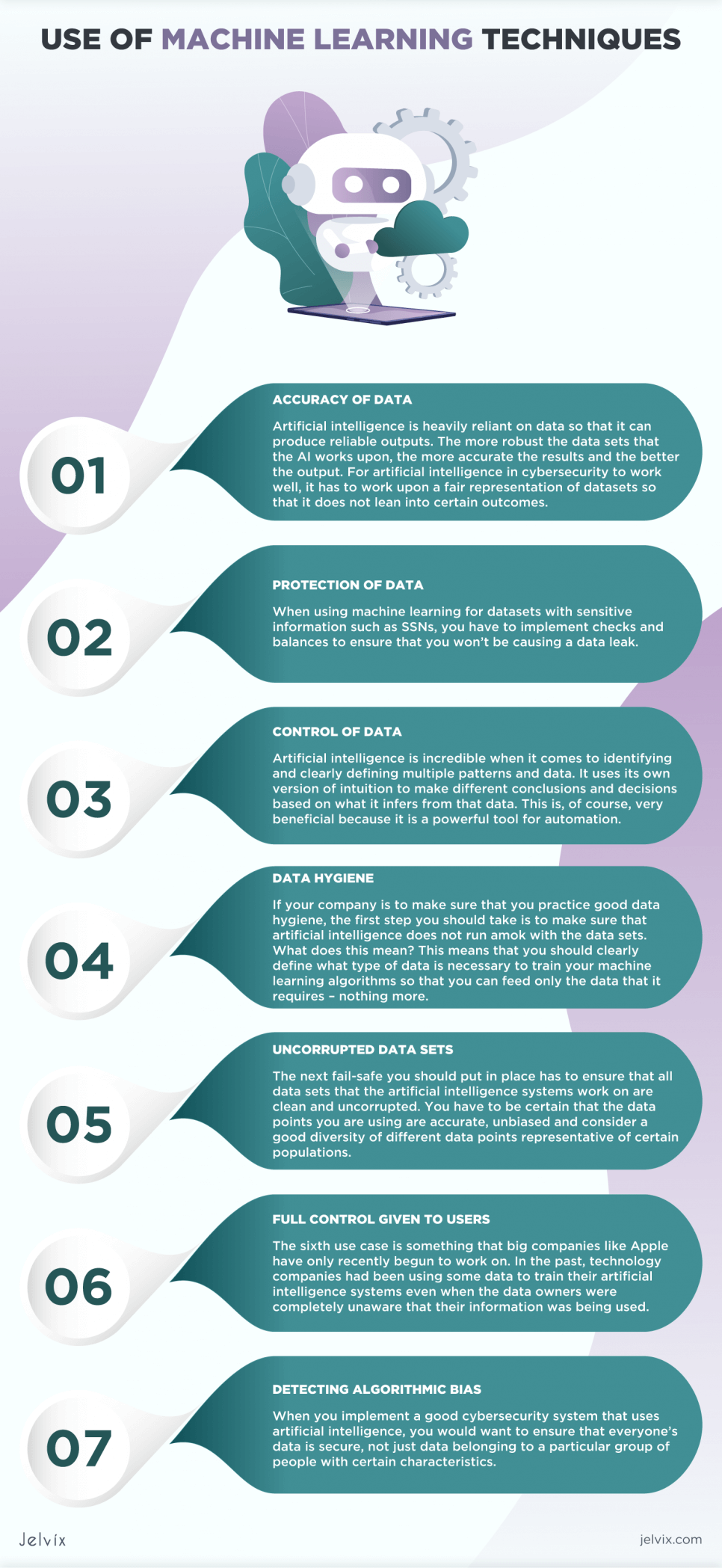

Responsible Use of Machine Learning Techniques

In order for a cybersecurity firm to make sure that the implementation of artificial intelligence and machine learning techniques is ethical and does not breach user privacy or create false outcomes in any way, one has to consider some use cases that may be impacted by AI. Here are the most notable ones:

Accuracy of Data

Artificial intelligence is heavily reliant on data so that it can produce reliable outputs. The more robust the data sets that the AI works upon, the more accurate the results and the better the output. For artificial intelligence in cybersecurity to work well, it has to work upon a fair representation of datasets so that it does not lean into certain outcomes.

This is something that people with a background in statistics will understand well: it is not enough for the sample to be large; it must also accurately reflect the demographics of the population it is drawn from.

Protection of Data

Another very important consideration, given the size of the data sets that artificial intelligence algorithms work with, is ensuring that every privacy risk is accounted for and that the sensitive information the algorithms handle cannot leak.

You have to remember that artificial intelligence is very good at finding patterns, so data you have made anonymous can still be de-anonymized by it. It has even been reported that when machine learning algorithms worked with vast sets of anonymized data, up to 95% of the information was still labeled accurately by the algorithms alone.

When using machine learning for datasets with sensitive information such as SSNs, you have to implement checks and balances to ensure that you won’t be causing a data leak.
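One possible check of this kind is a redaction step that runs before any text reaches a training pipeline. The sketch below is only an illustration: the pattern assumes SSNs written in the dashed form 123-45-6789, and the function names and placeholder string are made up for this example. Real data may use other formats that this pattern would miss.

```python
import re

# Assumed pattern: US SSNs written as 123-45-6789. Other formats
# (no dashes, spaces) are not covered by this sketch.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

def redact_ssns(text: str) -> str:
    """Replace anything that looks like a dashed SSN with a placeholder."""
    return SSN_PATTERN.sub("[REDACTED-SSN]", text)

def contains_ssn(text: str) -> bool:
    """Return True if the text still contains a dashed SSN."""
    return SSN_PATTERN.search(text) is not None

record = "Customer 4411 called about SSN 123-45-6789 on file."
clean = redact_ssns(record)
assert not contains_ssn(clean)  # gate: refuse to train on unredacted text
print(clean)
```

Running the redaction and the check as two separate steps means the check can also be applied to data that arrives from elsewhere and was supposedly cleaned already.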

Control of Data

Artificial intelligence is incredible when it comes to identifying and clearly defining multiple patterns and data. It uses its own version of intuition to make different conclusions and decisions based on what it infers from that data. This is, of course, very beneficial because it is a powerful tool for automation.

However, there are risks involved when the algorithms become biased because of the unrepresentative data discussed above. They could, for example, assign bad credit scores to people who fit certain criteria, even though those bad credit scores are not really warranted.

So how are all of these issues remedied? After all, you do not want these very powerful algorithms to work against you, because that can do real harm. Cybersecurity professionals can use a few methods to minimize these threats so that the power of AI can still be used while privacy stays protected.

It all starts with a properly defined and well-implemented data governance strategy on the part of your company. Traditionally, these strategies did not involve artificial intelligence in any way, but because of the rising threat of harmful side effects from AI, you now have to take it into consideration.

Data Hygiene

If your company is to make sure that you practice good data hygiene, the first step you should take is to make sure that artificial intelligence does not run amok with the data sets. What does this mean? This means that you should clearly define what type of data is necessary to train your machine learning algorithms so that you can feed only the data that it requires – nothing more.

This kind of scoping will make sure that your artificial intelligence system works as intended and is not exposed to data that it does not really require. Suppose, for example, that you want to build cybersecurity protocols using previous transaction data.

In this case, you do not want to feed your machine learning algorithms every piece of information about the users involved in these transactions, because those records often include very sensitive data points like Social Security numbers and home addresses. If the AI does not need a piece of data, do not give it that data.
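The transaction example can be sketched as an allowlist filter that sits between the raw records and the training data. The field names below are hypothetical, and the allowlist itself is an assumption: in practice it would be whatever your own model has been shown to need.

```python
# Hypothetical field names; the allowlist is whatever your model truly needs.
ALLOWED_FIELDS = {"amount", "currency", "merchant_category", "timestamp"}

def minimize(record: dict) -> dict:
    """Keep only allowlisted fields; everything else never reaches training."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}

raw = {
    "amount": 129.99,
    "currency": "USD",
    "merchant_category": "electronics",
    "timestamp": "2021-06-01T10:15:00Z",
    "ssn": "123-45-6789",
    "home_address": "1 Example Street",
}
training_row = minimize(raw)
print(sorted(training_row))
```

An allowlist is used here instead of a blocklist on purpose: a new sensitive field added to the records later is excluded by default rather than leaked by default.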

Uncorrupted Data Sets

The next fail-safe you should put in place is to ensure that all data sets the artificial intelligence systems work on are clean and uncorrupted. You have to be certain that the data points you are using are accurate, unbiased, and diverse enough to represent the populations they describe.

There should be a clear auditing process for checking whether your machine learning algorithms work with unbiased data points, because if bias is left unchecked and unaccounted for, your artificial intelligence simply will not work as intended.
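One simple form such an audit could take is comparing how often each group appears in the training data with how often it appears in the population the system is meant to protect. The sketch below assumes you already have a group label per row and a set of reference shares; the function name, the region labels, the shares, and the 5% tolerance are all illustrative assumptions.

```python
from collections import Counter

def representation_gaps(samples, reference_shares, tolerance=0.05):
    """Return groups whose share of the data differs from the reference
    share by more than `tolerance` (absolute difference)."""
    counts = Counter(samples)
    total = len(samples)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = (observed, expected)
    return gaps

# Hypothetical region labels on training rows and assumed population shares.
rows = ["north"] * 70 + ["south"] * 28 + ["west"] * 2
reference = {"north": 0.5, "south": 0.3, "west": 0.2}
print(representation_gaps(rows, reference))
```

Any group the function returns is either over-represented or under-represented and is a candidate for resampling or for collecting more data before training.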

Full Control Given to Users

This use case is something that big companies like Apple have only recently begun to work on. In the past, technology companies had been using some data to train their artificial intelligence systems even when the data owners were completely unaware that their information was being used.

One way of making sure that you are not breaching user privacy is simply to ask users for permission and to tell them what information is being used to train machine learning algorithms.

You have to let users know what exactly your algorithms aim to do with their data. Give users control over their own information, which is a very basic but profound step to take, and make sure that your AI cybersecurity systems are secure.
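In code, giving users control can be as simple as storing an explicit opt-in flag with each record and filtering on it before training. This is a minimal sketch: the `UserRecord` class and its fields are hypothetical, and a real system would also need to record when and how consent was given.

```python
from dataclasses import dataclass

@dataclass
class UserRecord:
    user_id: str
    features: dict
    consented_to_training: bool = False  # opt-in: default is no consent

def training_eligible(records):
    """Return only records whose owners explicitly opted in."""
    return [r for r in records if r.consented_to_training]

records = [
    UserRecord("u1", {"logins": 4}, consented_to_training=True),
    UserRecord("u2", {"logins": 9}),
]
eligible = training_eligible(records)
print([r.user_id for r in eligible])
```

The important design choice is the default of `False`: a user who was never asked is treated the same as a user who said no.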


Detecting Algorithmic Bias

Throughout this guide, we have discussed the dangers of algorithmic bias when it comes to implementing artificial intelligence, but what exactly do we mean by this? Put simply, you have to make sure that the AI systems are trained with datasets that fairly represent the target demographic so that bias does not occur.

Suppose your artificial intelligence systems are unable to recognize traits that are more associated with minorities, for example. In this case, your cybersecurity systems will not be able to identify particular threats for consumers that have these traits.

When you implement a good cybersecurity system that uses artificial intelligence, you would want to ensure that everyone’s data is secure, not just data belonging to a particular group of people with certain characteristics.
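One way to check for this kind of bias is to measure, for each group, what share of real threats the system actually flagged, and then compare the groups. The sketch below assumes you have labeled past events available; the tuple layout, group names, and sample events are made up for illustration.

```python
from collections import defaultdict

def detection_rate_by_group(events):
    """events: iterable of (group, is_threat, was_flagged) tuples.
    Returns the share of real threats that were flagged, per group."""
    threats = defaultdict(int)
    caught = defaultdict(int)
    for group, is_threat, was_flagged in events:
        if is_threat:
            threats[group] += 1
            if was_flagged:
                caught[group] += 1
    return {g: caught[g] / threats[g] for g in threats}

# Hypothetical labeled history for two groups of users.
events = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, False), ("group_a", False, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", True, False), ("group_b", True, False),
]
rates = detection_rate_by_group(events)
gap = max(rates.values()) - min(rates.values())
print(rates, round(gap, 2))
```

A large gap between groups suggests that the system protects some users noticeably better than others, which is the situation described above.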

Keeping Things Open Source

Of course, a smaller step that you can take to make sure that your AI cybersecurity systems do not harm sensitive data is to keep things open source. You may think that this is counterintuitive because open-source cybersecurity systems seem easier to hack, but when you keep your software open source, it can be inspected and regulated far more easily.

This is a good way to make sure that certain standards are being met and that there are no holes in your implementation. This does not mean that you have to give away the entire codebase to your cybersecurity systems; just make sure that you are pairing with fully licensed, completely regulated, and trustworthy AI.

Not to mention, keeping things open source is also an ideal way to make sure that companies are using data sets ethically because they know third-party users will be able to access that information.

Future Considerations

The most exciting thing to keep in mind is that the solutions discussed earlier already exist and are being practiced by various cybersecurity teams. Imagine what can be made possible through even further advancements in artificial intelligence and machine learning.

One potential application is fully autonomous penetration testing. A penetration test is useful for a company to be able to identify potential holes in their cybersecurity infrastructure so that they can work on them and patch them up before malicious actors can exploit them. Penetration testing is very expensive, but these costs can be greatly reduced if carried out entirely by artificial intelligence.

Because machine learning techniques are very good at correlating past outputs with current inputs, these systems will likely also get better at identifying which of a company's transactions are fraudulent. Many payment gateways already use these techniques to flag a transaction when it matches the details of a previous fraudulent one.
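The matching idea can be illustrated with a very small rule-based sketch. This is not how any particular payment gateway works, and it is not machine learning: it simply compares a new transaction with details of known fraudulent ones. The field names, the `min_shared` threshold, and the sample values are all assumptions.

```python
def fingerprint(tx: dict) -> tuple:
    """Reduce a transaction to the details we compare on (assumed fields)."""
    return (tx["card_hash"], tx["device_id"], tx["ship_country"])

def matches_known_fraud(tx: dict, known_fraud: set, min_shared: int = 2) -> bool:
    """Flag tx if it shares at least `min_shared` details with any past fraud."""
    fp = fingerprint(tx)
    return any(
        sum(a == b for a, b in zip(fp, past)) >= min_shared
        for past in known_fraud
    )

# Hypothetical history of one confirmed fraudulent transaction.
known_fraud = {
    fingerprint({"card_hash": "c9f1", "device_id": "dev-77", "ship_country": "XX"})
}
incoming = {"card_hash": "a1b2", "device_id": "dev-77", "ship_country": "XX"}
print(matches_known_fraud(incoming, known_fraud))
```

A learned model would replace the fixed `min_shared` rule with weights derived from past outcomes, which is where the improvement over time would come from.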

As the computing power that is required to train these advanced machine learning algorithms is becoming cheaper and more robust, we can expect that their incredible effects on cybersecurity will also be enhanced. Whatever the case, it is also important for the company to establish ethics in AI.

If these algorithms become too powerful, they can have the opposite effect and actually cause cybersecurity problems, such as breaking encryptions.

Whatever innovations we see in the world of machine learning, artificial intelligence, and cybersecurity in the future, one thing is clear: your data can be more secure in the future than it has ever been before.

Gabe Nelson is a content specialist with over 7 years of experience, currently working with office1.com. Just out of high school, he set off crab fishing on the Bering Sea in Alaska. From there, he went back home to finish his college degree at the University of Montana. He has written hundreds of content pieces in numerous niches. Currently, he lives in Missouri with his wife and kids.
