Reliable healthcare datasets have become the critical infrastructure behind modern medicine. From shaping public health strategy to changing how medical care is delivered, access to clean, high-value health datasets now defines competitive advantage.

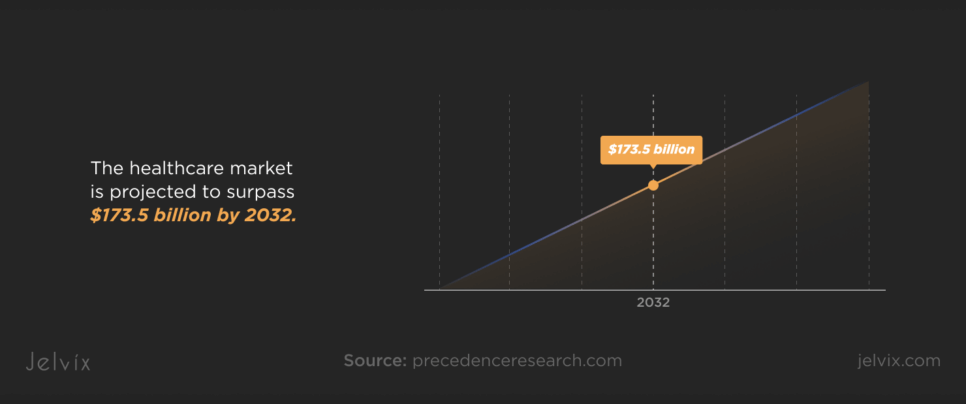

Healthcare analytics is transforming the industry’s economic core. Valued at $43.6 billion in 2023, the market is projected to surpass $173.5 billion by 2032, according to Precedence Research.

By one widely cited estimate, healthcare generates roughly 2,314 exabytes of data. Data at that scale reshapes operational strategy, patient care, and regulatory frameworks. Raw data alone, however, is not enough. Progress depends on health datasets that are not only large but also well curated, labeled, and interoperable. The institutions that will define the next decade of healthcare are those able to turn unprocessed data into decision-ready insights.

Amid all this noise, only a few sources consistently offer the depth, accuracy, and usability that researchers, developers, and policymakers need. This article maps out those sources.

What Are Healthcare Data Sets and Why Are They Important?

Healthcare data sets are organized collections of information pulled from hospitals, labs, insurance systems, and clinical research. Structured and ready for real-world application across healthcare systems, they capture everything from patient histories and lab findings to imaging records and billing files.

Not every piece of medical information is ready to guide decisions. Without standard formats, even the richest datasets end up siloed in systems that cannot communicate with each other. Medical templates, information models, and coding specifications turn dispersed data into something meaningful: tools that support research, enable better-connected health networks, and advance treatment.
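To make the role of coding standards concrete, here is a minimal sketch that maps one row of a hypothetical local lab export onto a FHIR-style `Observation` carrying a LOINC code. The input column names (`patient_id`, `test_code`, `result`, `taken_at`), the local code `GLU`, and the mapping table are assumptions for illustration; a real pipeline would validate its output against the FHIR specification and use a proper terminology service rather than a hard-coded dictionary.

```python
from typing import Any

# Hypothetical mapping from a hospital's local lab codes to LOINC codes and UCUM units.
# Verify every code against the official LOINC release before real use.
LOCAL_TO_LOINC = {
    "GLU": ("2345-7", "Glucose [Mass/volume] in Serum or Plasma", "mg/dL"),
}


def to_fhir_observation(row: dict[str, Any]) -> dict[str, Any]:
    """Turn one row of a local lab export into a FHIR-style Observation (as a dict)."""
    code, display, unit = LOCAL_TO_LOINC[row["test_code"]]  # KeyError means an unmapped local code
    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {"coding": [{"system": "http://loinc.org", "code": code, "display": display}]},
        "subject": {"reference": f"Patient/{row['patient_id']}"},
        "effectiveDateTime": row["taken_at"],
        "valueQuantity": {
            "value": float(row["result"]),
            "unit": unit,
            "system": "http://unitsofmeasure.org",  # UCUM unit system
            "code": unit,
        },
    }


obs = to_fhir_observation(
    {"patient_id": "123", "test_code": "GLU", "result": "98", "taken_at": "2024-01-15T08:30:00Z"}
)
```

Once every source emits the same resource shape and code system, records from different hospitals can be queried together without per-site translation logic.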

Examples of healthcare data sets span a wide spectrum: electronic health records (EHRs), national health surveys, clinical trial archives, insurance claims, and biobanks. Each serves a different purpose, such as supporting AI development, improving patient care, guiding public health policy, or streamlining operations.

Today, healthcare data goes well beyond hospital records. Wearable devices, genomics laboratories, and population health studies feed a fast-expanding ecosystem of sophisticated, real-time data streams.

HIPAA, the HITECH Act, and other U.S. regulations set requirements not only for safeguarding personal health information but also for handling it in ways that keep it secure, shareable, and useful across the healthcare system.

Precision medicine has raised the bar even higher. Tailored therapies, AI-powered diagnostics, and outbreak predictions all depend on fast access to deep, labeled datasets built to scale. Healthcare data is dynamic: it is the foundation for systems that learn, adapt, and deliver faster, more accurate solutions.

Open-source medical imaging datasets show what a good data structure makes possible. Stored in DICOM or NIfTI format, clean, anonymized sets of X-rays, MRIs, CT scans, and ultrasounds let researchers train and refine models with far fewer ethical and technical obstacles.

For many AI healthcare projects, these public imaging sets offer a critical head start, often pushing models an estimated 70–80% of the way toward clinical readiness before real-world validation with proprietary data is needed.

In short, access to the right healthcare data sets determines how fast new therapies and systems move from pilot projects into clinical practice. Not all datasets are created equal—but understanding where to find them, and how to use them, is now a baseline skill for serious innovation.


Examples of Data Sets in Healthcare

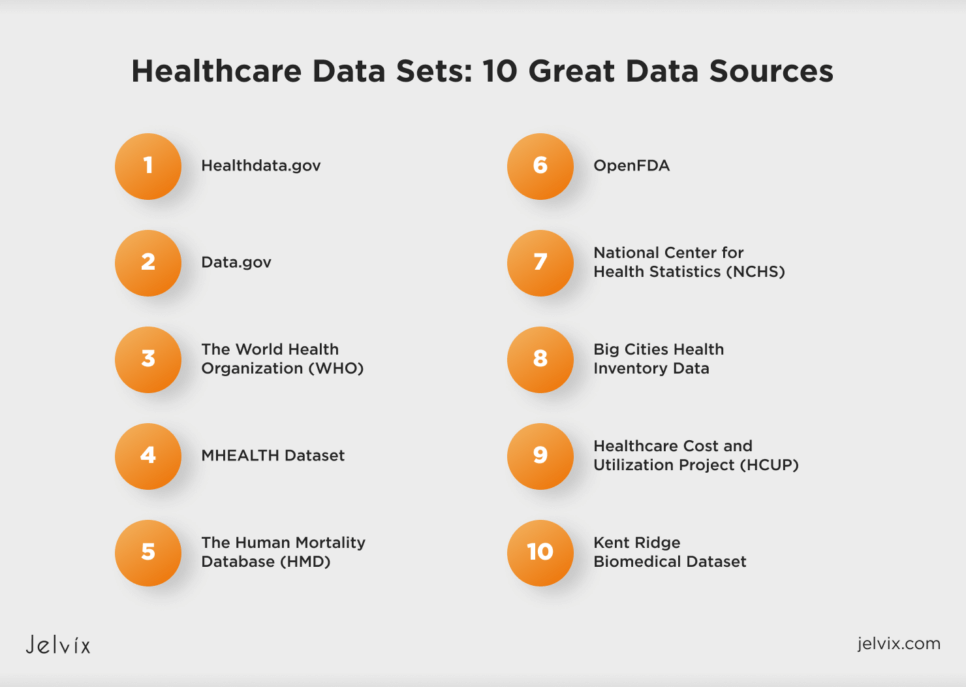

Healthdata.gov

Healthdata.gov was developed by the U.S. Department of Health and Human Services as a public platform to centralize open healthcare data from across the country. It hosts more than 3,000 datasets, covering Medicare Provider Charge Data, hospital-acquired infection rates, nursing facility performance, and opioid prescribing trends.

Rather than keeping federal reports in isolation, Healthdata.gov aggregates federal, state, and local data in a single, standardized hub. Users can, for instance, cross-reference national Medicare spending patterns with state health department figures, city-level emergency response measurements, or localized disease surveillance data.

The platform draws a wide range of specialists: health economists modeling policy impacts, epidemiologists tracking disease trends, and developers building healthcare apps and analytics tools. A key advantage is the availability of raw, downloadable files, often in machine-readable formats like CSV and JSON, along with regular data updates that support near-real-time analysis and modeling.
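Cross-referencing federal and local figures usually comes down to joining files on a shared key. The sketch below joins a CSV extract with a JSON extract on a `state` column; both extracts, their column names, and their numbers are made-up placeholders, since real Healthdata.gov files each have their own schema.

```python
import csv
import io
import json

# Made-up placeholder extracts; real Healthdata.gov files have their own schemas.
FEDERAL_CSV = """state,medicare_spending_per_enrollee
CA,100
TX,200
NY,300
"""
STATE_JSON = '[{"state": "CA", "er_visits_per_1000": 40}, {"state": "TX", "er_visits_per_1000": 45}]'


def join_on_state(csv_text: str, json_text: str) -> list[dict]:
    """Inner-join CSV rows with JSON records on a shared 'state' column."""
    federal = list(csv.DictReader(io.StringIO(csv_text)))  # CSV values arrive as strings
    local = {rec["state"]: rec for rec in json.loads(json_text)}
    return [
        {**row, **local[row["state"]]}  # combine both sources into one record
        for row in federal
        if row["state"] in local  # states without a local match are dropped
    ]


rows = join_on_state(FEDERAL_CSV, STATE_JSON)
```

In practice you would read the files from disk or a download URL, convert numeric columns explicitly, and check how many rows the inner join dropped (NY in this toy case) before trusting any comparison.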

Data.gov

Data.gov is the U.S. government's general open data portal, but healthcare remains one of its richest categories. Launched in 2009 as part of President Obama's Open Government Initiative, the platform was meant to break down data silos among federal agencies.

Hospital safety scores, Medicare and Medicaid spending, opioid overdose trends, clinical trial data, epidemiological monitoring, food and drug safety reports, and healthcare provider directories are just a few of the subjects covered in the healthcare section.

Users can also find data on healthcare facility inspections, behavioral health programs, substance abuse treatment outcomes, environmental health risks, and medical workforce distribution.

Unlike specialized research repositories, Data.gov prioritizes breadth over depth. It is a preferred resource for policy analysts tracking national trends, public health researchers modeling large-scale outcomes, and developers building healthcare-focused applications. With thousands of datasets available in machine-readable formats like CSV, XML, and JSON, the platform is designed for easy integration into analytical models and public dashboards.
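Data.gov's catalog is built on CKAN, which exposes a JSON search API. The sketch below builds a `package_search` URL and filters a response down to machine-readable files. The endpoint path and the response fields (`result`, `results`, `resources`, `format`, `url`) reflect the standard CKAN Action API as we understand it; confirm them against the current Data.gov API documentation before relying on this.

```python
import json
import urllib.parse
import urllib.request

CKAN_SEARCH = "https://catalog.data.gov/api/3/action/package_search"


def build_search_url(query: str, rows: int = 10) -> str:
    """CKAN full-text dataset search; 'rows' caps the number of datasets returned."""
    return CKAN_SEARCH + "?" + urllib.parse.urlencode({"q": query, "rows": rows})


def machine_readable_files(response: dict, formats=("CSV", "JSON", "XML")) -> list[tuple[str, str, str]]:
    """Return (dataset title, format, download URL) for resources in the wanted formats."""
    found = []
    for dataset in response.get("result", {}).get("results", []):
        for res in dataset.get("resources", []):
            fmt = (res.get("format") or "").upper()  # format labels are not always consistent
            if fmt in formats:
                found.append((dataset.get("title", ""), fmt, res.get("url", "")))
    return found


def search(query: str, rows: int = 10) -> dict:
    """Live request; requires network access."""
    with urllib.request.urlopen(build_search_url(query, rows), timeout=30) as resp:
        return json.load(resp)
```

A call such as `machine_readable_files(search("hospital acquired infection"))` would list candidate files; it is still worth opening each dataset's landing page to check update frequency and field definitions.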

The World Health Organization (WHO)

The WHO Global Health Observatory (GHO) is one of the world's largest centralized sources of internationally comparable health data. Unlike U.S.-oriented portals, GHO gathers and harmonizes data from around the globe, enabling cross-national research on environmental hazards, population change, disease trends, and health system performance.

Launched to give lawmakers, scientists, and NGOs a truly global health intelligence platform, GHO tracks everything from vaccination rates and life expectancy to noncommunicable disease burdens, healthcare access indicators, and mortality causes.

Among its most distinctive features are the integrated visualization tools. Rather than only downloading static datasets, users can compare health indicators across countries, investigate patterns over time, and create custom charts and maps within the platform itself. This makes the GHO as much an active tool for global health surveillance, policy evaluation, and strategic planning as it is a data store.
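GHO data can also be pulled programmatically through an OData-style API. The sketch below builds a filtered query for one indicator and picks the latest value per country from the JSON response. The base URL and the field names (`value`, `SpatialDim`, `TimeDim`, `Dim1`, `NumericValue`) are assumptions based on the GHO OData API as we understand it, and WHO has been changing its data services, so check the current documentation first. An indicator code such as `WHOSIS_000001` (life expectancy at birth) is used only as an example.

```python
import urllib.parse
from typing import Optional

GHO_BASE = "https://ghoapi.azureedge.net/api/"  # confirm against WHO's current API docs


def indicator_url(code: str, countries: list[str]) -> str:
    """OData query for one indicator, filtered to ISO 3166 alpha-3 country codes."""
    flt = " or ".join(f"SpatialDim eq '{c}'" for c in countries)
    return GHO_BASE + code + "?" + urllib.parse.urlencode({"$filter": flt})


def latest_by_country(payload: dict, dim1: Optional[str] = None) -> dict[str, tuple[int, float]]:
    """Most recent (year, value) per country from a GHO JSON response."""
    latest: dict[str, tuple[int, float]] = {}
    for rec in payload.get("value", []):
        if rec.get("NumericValue") is None:
            continue  # missing or suppressed value
        if dim1 is not None and rec.get("Dim1") != dim1:
            continue  # e.g. restrict to one sex category
        country, year = rec["SpatialDim"], int(rec["TimeDim"])
        if country not in latest or year > latest[country][0]:
            latest[country] = (year, float(rec["NumericValue"]))
    return latest
```

Because indicators are often broken down by extra dimensions (sex, age group), filtering on `Dim1` before comparing countries avoids mixing subgroups in one comparison.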

MHEALTH Dataset

The MHEALTH Dataset was built for one purpose: to capture how the human body moves and reacts in everyday activities. Volunteers wore body sensors while walking, running, sitting, and climbing stairs—activities designed to simulate real-world motion.

MHEALTH is valuable for more than the range of activities it records. By synchronizing motion data from accelerometers and gyroscopes with vital signals such as ECG readings, it provides an integrated view of physical health in action.

Using this information, researchers in mobile health, wearable technology, rehabilitation, and sports medicine create algorithms able to identify activity patterns, detect falls, and even forecast early signs of health deterioration.

MHEALTH is often one of the first healthcare data sets chosen for developing health monitoring applications or AI-based fitness solutions, as it ties raw movement to time-aligned physiological signals.
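Activity-recognition work on sensor streams like these typically starts by slicing the signal into fixed windows and summarizing each one. The sketch below does that for accelerometer data. The column layout in `parse_line` (first three columns as chest acceleration, last column as the activity label) is an assumption to check against the dataset's README, and the 128-sample window with 50% overlap is an arbitrary illustrative choice.

```python
import math
from collections import Counter
from statistics import mean, pstdev


def parse_line(line: str) -> tuple[float, float, float, int]:
    """Assumed layout: first three columns = chest acceleration, last column = activity label."""
    parts = line.split()
    return float(parts[0]), float(parts[1]), float(parts[2]), int(float(parts[-1]))


def window_features(rows, size: int = 128, step: int = 64) -> list[dict]:
    """Slide a fixed-size window over the stream and summarize each window."""
    features = []
    for start in range(0, len(rows) - size + 1, step):
        window = rows[start:start + size]
        # Acceleration magnitude does not depend on sensor orientation: a simple, common feature.
        mags = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az, _ in window]
        label = Counter(r[3] for r in window).most_common(1)[0][0]  # majority label in the window
        features.append({"mean_mag": mean(mags), "std_mag": pstdev(mags), "label": label})
    return features
```

The resulting feature rows can feed any classifier. Before training, check whether the label column includes a null or transition class you want to exclude, and split by subject so the model is tested on people it has not seen.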

The Human Mortality Database (HMD)

What distinguishes the Human Mortality Database (HMD) is the depth and historical reach of its records. Created through a collaboration between Germany's Max Planck Institute for Demographic Research and the University of California, Berkeley, it aggregates comprehensive mortality and population statistics across several dozen countries.

The data for numerous countries goes back to the early 19th century, allowing researchers to examine patterns in longevity, death rates, and shifts in demographics over periods of 100 years or more. Few healthcare data sources can equal this degree of historical continuity.

This makes HMD a vital tool for anyone studying the long-term consequences of wars, diseases, healthcare innovations, or social reforms on population health.
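Longevity figures are derived from age-specific death rates through a life table. HMD publishes its own life tables using a more careful method; the sketch below is only a simplified period life table, assuming single-year age groups, deaths spread evenly within each year (a_x = 0.5), and an open-ended final age group, to show how a series of death rates becomes a life expectancy at birth.

```python
def life_expectancy_at_birth(mx: list[float]) -> float:
    """Approximate e0 from single-year death rates m_x; the last entry is the open age group.

    Simplification: assumes deaths occur mid-year on average (a_x = 0.5),
    which is not the exact method HMD uses.
    """
    if not mx or mx[-1] <= 0:
        raise ValueError("need at least one rate and a positive rate for the open age group")
    survivors = 1.0    # l_x: share of the birth cohort alive at exact age x (radix l_0 = 1)
    person_years = 0.0  # running total of L_x, which ends as T_0
    for age, m in enumerate(mx):
        if age == len(mx) - 1:
            person_years += survivors / m  # open interval: L = l / m
            break
        q = m / (1 + 0.5 * m)             # probability of dying between age x and x + 1
        deaths = survivors * q
        person_years += survivors - 0.5 * deaths  # L_x with a_x = 0.5
        survivors -= deaths
    return person_years  # e_0 = T_0 / l_0, and l_0 = 1
```

Running the same function on rate series from different decades is the basic mechanic behind the long-run longevity comparisons HMD makes possible.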

National Center for Health Statistics (NCHS)

The National Center for Health Statistics (NCHS), part of the CDC, runs some of the largest and most respected health surveys in the United States. Two of its key projects—the National Health Interview Survey (NHIS) and the National Health and Nutrition Examination Survey (NHANES)—have significantly shaped what is known about the nation’s health.

NHIS collects self-reported data from households on many health topics, including chronic conditions, access to healthcare, and insurance coverage. It offers a year-by-year look at how different factors influence health outcomes across different population groups.

NHANES goes a step further by combining personal interviews with physical examinations, laboratory tests, and medical imaging. Rather than relying on self-reported answers alone, it collects objective health measurements such as blood pressure, cholesterol levels, and exposure to environmental chemicals.

These databases, taken together, create a layered, detailed picture of the nation’s health, connecting how individuals live with how their bodies react, and therefore provide a basis for innumerable public health policies and clinical standards.
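NHANES uses a complex multistage sample, so national estimates need the survey's sample weights rather than a plain average. The sketch below joins demographic and exam records on the respondent ID and computes a weighted mean. The variable names (`SEQN`, `WTMEC2YR`, `BPXSY1`) follow NHANES naming in some survey cycles but change between cycles, so check the codebook for the cycle you use; NHANES files are distributed as SAS transport (.XPT) files, which `pandas.read_sas` can read. This gives a point estimate only: standard errors also require the design variables (strata and PSUs) and survey-aware software.

```python
def merge_on_seqn(demo: list[dict], exam: list[dict]) -> list[dict]:
    """Join demographics (which carry the weights) to exam records by respondent ID."""
    exam_by_id = {r["SEQN"]: r for r in exam}
    return [{**d, **exam_by_id[d["SEQN"]]} for d in demo if d["SEQN"] in exam_by_id]


def weighted_mean(rows: list[dict], value: str, weight: str) -> float:
    """Survey-weighted mean that skips rows with a missing value or a zero/missing weight."""
    pairs = [(r[value], r[weight]) for r in rows if r.get(value) is not None and r.get(weight)]
    total_weight = sum(w for _, w in pairs)
    return sum(v * w for v, w in pairs) / total_weight
```

For example, `weighted_mean(merge_on_seqn(demo, bp), "BPXSY1", "WTMEC2YR")` would estimate mean systolic blood pressure for the population the exam sample represents.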

Big Cities Health Inventory Data

Unlike national datasets that often blur regional differences, Big Cities Health Inventory Data presents city-level data on issues like asthma rates, opioid-related hospitalizations, violent injuries, maternal health, and chronic disease prevalence.

What sets it apart is its focus on local differences that broader systems tend to average out. The inventory records air pollution surges, housing instability trends, and mental health crises where they actually occur, providing a clearer, ground-level view of urban health concerns.

The inventory's strength lies in exposing how health outcomes differ from city to city. As one of the few databases designed to capture public health at the scale of urban living, it offers a framework for examining how social and environmental factors interact differently in New York, Los Angeles, Chicago, and other major hubs.

Healthcare Cost and Utilization Project (HCUP)

Unlike many datasets that focus on a single type of care, the Healthcare Cost and Utilization Project (HCUP) spans the whole hospital ecosystem, from inpatient stays to outpatient visits and emergency department care, giving a rare, continuous view of how healthcare services are actually used.

Additionally, its framework lets researchers dissect healthcare use in hundreds of different ways: patient demographics, procedure types, diagnosis patterns, geographical differences, and historical changes.

Not only does this tiered approach record what happened, but it also helps reveal why, transforming hospital data into a tool for addressing important concerns like cost drivers, treatment quality, and system-wide inequities.
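Slicing HCUP data by demographics or diagnosis usually means a weighted group-by, because sample databases such as the National Inpatient Sample (NIS) attach a discharge weight to each record. The sketch below assumes NIS-style fields `DISCWT` (discharge weight) and `TOTCHG` (total charges), which should be verified against the documentation for the data year you use; the grouping columns `age_group` and `dx` are hypothetical. Note that charges are not the same as costs.

```python
from collections import defaultdict


def weighted_summary(discharges: list[dict], by: tuple[str, ...]) -> dict:
    """Weighted discharge counts and mean charges per group (point estimates only)."""
    est = defaultdict(float)
    charge_sum = defaultdict(float)
    charge_weight = defaultdict(float)
    for d in discharges:
        key = tuple(d[k] for k in by)
        w = d["DISCWT"]  # how many stays nationally this sampled record stands for
        est[key] += w
        if d.get("TOTCHG") is not None:  # charges can be missing
            charge_sum[key] += w * d["TOTCHG"]
            charge_weight[key] += w
    return {
        key: {
            "est_discharges": est[key],
            "mean_charge": charge_sum[key] / charge_weight[key] if charge_weight[key] else None,
        }
        for key in est
    }
```

Changing `by` from `("age_group",)` to `("age_group", "dx")` is all it takes to move from one cut of the data to another, which is what makes this layered analysis practical.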

Kent Ridge Biomedical Dataset

The Kent Ridge Biomedical Dataset Repository is a valuable resource for machine learning research in biomedicine. It offers a suite of high-dimensional datasets, primarily gene expression profiles, along with proteomics data and genomic sequences. It has been widely used in published research, especially as a benchmark for classification tasks.

The repository includes datasets related to various cancer types, such as:

- Breast Cancer;

- Colon Tumor;

- Leukemia (ALL vs. AML);

- Lung Cancer;

- Prostate Cancer;

- Central Nervous System Tumors.

Each dataset typically contains thousands of features representing gene expression levels and is set up as a binary classification problem, such as tumor versus normal tissue or, for leukemia, ALL versus AML. The datasets are curated for quality and consistency, which makes them suitable for benchmarking machine learning algorithms in biomedical research.
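With few samples and thousands of genes, a simple baseline is to keep the genes that best separate the two classes and then classify by nearest class centroid. The sketch below ranks features by a Welch-style t statistic and applies a nearest-centroid rule; it is a classic baseline chosen for illustration, not the method of any particular study using this repository. It assumes labels 0 and 1 with at least two samples per class. Feature selection must be repeated inside each cross-validation fold, otherwise the accuracy estimate leaks information from the test samples.

```python
from statistics import mean, stdev


def top_features(X, y, k):
    """Rank features by a Welch-style t statistic between class 0 and class 1."""
    scores = []
    for j in range(len(X[0])):
        a = [x[j] for x, label in zip(X, y) if label == 0]
        b = [x[j] for x, label in zip(X, y) if label == 1]
        se = (stdev(a) ** 2 / len(a) + stdev(b) ** 2 / len(b)) ** 0.5
        scores.append((abs(mean(a) - mean(b)) / max(se, 1e-12), j))
    return [j for _, j in sorted(scores, reverse=True)[:k]]


def fit_centroids(X, y, feats):
    """Mean value of each selected feature, per class."""
    return {
        c: [mean(x[j] for x, label in zip(X, y) if label == c) for j in feats]
        for c in sorted(set(y))
    }


def predict(x, centroids, feats):
    """Assign x to the class whose centroid is nearest (squared Euclidean distance)."""
    def dist(centroid):
        return sum((x[j] - cj) ** 2 for j, cj in zip(feats, centroid))
    return min(centroids, key=lambda c: dist(centroids[c]))
```

Baselines like this are useful precisely because these datasets are benchmarks: a more complex model should clearly beat them before its extra complexity is justified.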

OpenFDA

OpenFDA streamlines access to several of the Food and Drug Administration's most important data sources. Through a searchable API, users can query structured datasets on drug adverse events, device failures, product recalls, and labeling information directly, instead of working through complicated reports and fragmented systems.

Because the platform focuses on transparency without compromising on data integrity, it has become a core resource for teams working on drug surveillance, device performance analytics, and consumer safety technologies—anywhere high-trust healthcare data is essential.
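As an example of that API, the sketch below builds a query that counts the most frequently reported reactions for one drug in the adverse event endpoint, and parses the `term`/`count` pairs a count query returns. The field paths follow the openFDA drug event documentation as we understand it; verify them there, and note that higher request volumes need an API key. Adverse event reports do not establish that a drug caused a reaction, so counts describe reporting patterns, not risk.

```python
import urllib.parse

OPENFDA_DRUG_EVENTS = "https://api.fda.gov/drug/event.json"


def top_reactions_url(drug: str, limit: int = 10) -> str:
    """Count the most frequently reported reactions for one drug name."""
    params = {
        "search": f'patient.drug.medicinalproduct:"{drug}"',
        "count": "patient.reaction.reactionmeddrapt.exact",  # .exact counts whole terms
        "limit": limit,
    }
    return OPENFDA_DRUG_EVENTS + "?" + urllib.parse.urlencode(params)


def parse_counts(payload: dict) -> list[tuple[str, int]]:
    """A count query returns results as [{'term': ..., 'count': ...}, ...]."""
    return [(r["term"], r["count"]) for r in payload.get("results", [])]
```

Fetching the URL with any HTTP client and passing the decoded JSON to `parse_counts` yields a ranked list ready for a surveillance dashboard.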

Best Healthcare Datasets for Machine Learning

Not every healthcare dataset is built to power machine learning. Some are too narrow; others are too messy to trust in clinical environments. What separates a useful dataset from a wasted opportunity isn't just size or popularity but its structure, its labeling, and its ability to adapt to the specific demands of AI development.


What makes a dataset effective for machine learning? The main questions are how structure affects model design, why open-source imaging collections are critical for early research, and where ethical lines must be drawn when real patient data is involved.

Structured vs Unstructured Data in AI Models

In healthcare, the way data is built often matters more than how much of it there is.

Structured data—for example, numeric lab values or coded diagnoses—follows fixed formats that algorithms can easily scan, sort, and learn from. Every piece fits into an expected column or row, making this type of data the backbone for models that require fast and reliable inputs.

Unstructured data is different. Handwritten physician notes, medical images, and audio recordings of patient interviews do not fit neatly into tables. Yet these raw records often hold richer clinical nuance: a subtle change on a scan that numbers alone would miss, or a clinician's careful observation.

In practice, the two are often combined. Structured datasets build the scaffolding, offering stability and consistency. Unstructured data enables machine learning systems to extend beyond strict pattern recognition, incorporating deeper clinical knowledge and capturing the complexity of genuine patient experiences within the model.
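A minimal way to see the hybrid idea is to put structured values and flags derived from free text into one feature vector. The sketch below uses hypothetical regular-expression flags for two findings; real systems would use a clinical NLP pipeline, because plain keyword matching ignores negation ("denies shortness of breath" would still match) and misses paraphrases.

```python
import re

# Hypothetical note-derived flags; a production system would use clinical NLP, not keywords.
NOTE_FLAGS = {
    "smoker": re.compile(r"\b(current smoker|smokes)\b", re.IGNORECASE),
    "shortness_of_breath": re.compile(r"\b(shortness of breath|dyspnea|sob)\b", re.IGNORECASE),
}


def build_features(structured: dict, note: str) -> dict:
    """Merge numeric structured fields with binary flags extracted from a clinical note."""
    features = {name: float(value) for name, value in structured.items()}
    for name, pattern in NOTE_FLAGS.items():
        features[f"note_{name}"] = 1.0 if pattern.search(note) else 0.0
    return features


example = build_features({"hba1c": 7.2, "age": 64}, "Pt reports dyspnea on exertion. Former smoker.")
```

Here the structured lab value and age provide stable inputs, while the note contributes a finding (dyspnea) that no structured field captured.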

Which Data Sets Work Best for Supervised Learning?

Supervised learning depends on certainty. The best healthcare datasets for this method are those where outcomes are recorded, verified, and directly mapped to patient data.

Clinical trials, for example, generate medical data sets built for supervision. There, treatments are assigned, results are monitored, and outcomes are logged in structured formats. Similarly, curated imaging archives tie diagnostic labels to scans under standardized criteria, removing ambiguity from the training process.

In supervised learning, the cleaner the relationship between input and outcome is, the stronger the model becomes.
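Two practical steps keep that input-outcome relationship clean: train only on outcomes that were actually verified, and keep every record from one patient on the same side of the train/test split so the model cannot memorize a patient it is later tested on. The sketch below shows both; the record layout (`encounter_id`, `patient_id`, `features`) and the `verified` flag are hypothetical.

```python
import hashlib


def labeled_examples(records: list[dict], outcomes: dict) -> list[tuple]:
    """Pair each record with its outcome, keeping only outcomes marked as verified.

    records:  dicts with 'encounter_id', 'patient_id', 'features'
    outcomes: encounter_id -> {'label': ..., 'verified': bool}
    """
    examples = []
    for rec in records:
        outcome = outcomes.get(rec["encounter_id"])
        if outcome and outcome.get("verified"):
            examples.append((rec["patient_id"], rec["features"], outcome["label"]))
    return examples


def split_for(patient_id: str, test_fraction: float = 0.2) -> str:
    """Hash the patient ID so every record from one patient lands in the same split."""
    bucket = int(hashlib.sha256(patient_id.encode("utf-8")).hexdigest(), 16) % 100
    return "test" if bucket < test_fraction * 100 else "train"
```

Hashing makes the assignment deterministic, so the split stays stable as new records for existing patients arrive.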

The Importance of Open-Source Medical Imaging Data

Although machine learning research heavily relies on medical imaging, privacy and legal restrictions make high-quality imaging datasets challenging to obtain.

Open-source medical imaging datasets offer clean, anonymized collections of MRIs, CT scans, X-rays, and ultrasounds, giving researchers a rare chance to work without complex licensing negotiations or heavy compliance overhead, although each dataset's license and data use terms still apply.

These datasets typically come pre-labeled or include metadata that accelerates model development. For early-stage AI projects, they are critical for prototyping and testing algorithms before scaling up with proprietary clinical datasets.

Especially useful are platforms providing DICOM and NIfTI formatted images since they enable interoperability between healthcare systems and research environments.
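Both formats can be recognized from fixed bytes at the start of the file. The sketch below detects DICOM Part 10 files (a 128-byte preamble followed by `DICM`) and NIfTI-1 files (magic string at byte 344), then reads image dimensions and voxel sizes from the NIfTI-1 header with `struct`. It is for quick inspection only; DICOM files without the Part 10 preamble exist, compressed `.nii.gz` files must be read through `gzip.open`, and real projects should use a maintained library such as pydicom or nibabel.

```python
import struct


def detect_format(header: bytes) -> str:
    """Guess the file type from magic bytes at fixed offsets."""
    if len(header) >= 132 and header[128:132] == b"DICM":
        return "dicom"  # DICOM Part 10: 128-byte preamble, then "DICM"
    if len(header) >= 348 and header[344:348] in (b"n+1\x00", b"ni1\x00"):
        return "nifti1"  # NIfTI-1: single .nii file or .hdr/.img pair
    return "unknown"


def nifti1_header_info(header: bytes) -> tuple[tuple[int, ...], tuple[float, ...]]:
    """Return (image dimensions, voxel sizes) from a 348-byte NIfTI-1 header."""
    for endian in ("<", ">"):
        # sizeof_hdr (offset 0) is always 348; the byte order that reads 348 is the file's
        if struct.unpack(endian + "i", header[:4])[0] == 348:
            break
    else:
        raise ValueError("not a NIfTI-1 header")
    dim = struct.unpack(endian + "8h", header[40:56])      # dim[0] = number of dimensions
    pixdim = struct.unpack(endian + "8f", header[76:108])  # pixdim[1..] = voxel spacing
    ndim = dim[0]
    return tuple(dim[1:1 + ndim]), tuple(pixdim[1:1 + ndim])


# Usage with an uncompressed file:
# with open("scan.nii", "rb") as f:
#     head = f.read(348)
```

Checking shapes and voxel spacing up front catches mismatched scans before they silently distort a training set.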

Ethical Considerations When Using Real Patient Data

Using actual patient data in machine learning is an ethical responsibility as much as a technical challenge. If the data is handled improperly, privacy violations, biased algorithms, and misuse of health data can erode trust and cause real harm.

Rigorous adherence to HIPAA, GDPR, and other laws is the bare minimum. Developing ethical AI also requires transparency about how patient data is used, de-identification techniques that hold up in practice, and fairness testing to ensure models do not reinforce systemic biases in healthcare delivery.

Developers working with healthcare datasets must think beyond technical performance and account for the real-world consequences their models could trigger.
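One concrete fairness test is to compare sensitivity (true-positive rate) across patient subgroups, sometimes called an equal-opportunity check: if a model catches a condition far less often in one group, that group is being underserved. The sketch below computes per-group sensitivity and the largest gap; the group labels are hypothetical, and what counts as an acceptable gap is a clinical and ethical judgment, not something the code decides.

```python
from collections import defaultdict


def sensitivity_by_group(y_true, y_pred, groups) -> dict:
    """True-positive rate per subgroup, computed only over actual positives."""
    positives, hits = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            hits[group] += int(pred == 1)
    return {g: hits[g] / positives[g] for g in positives}


def largest_gap(rates: dict) -> float:
    """Difference between the best- and worst-served subgroup."""
    return max(rates.values()) - min(rates.values())
```

Small subgroups produce noisy rates, so in practice a gap should be reported with its sample sizes or confidence intervals.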


The Future of Healthcare Data for Machine Learning

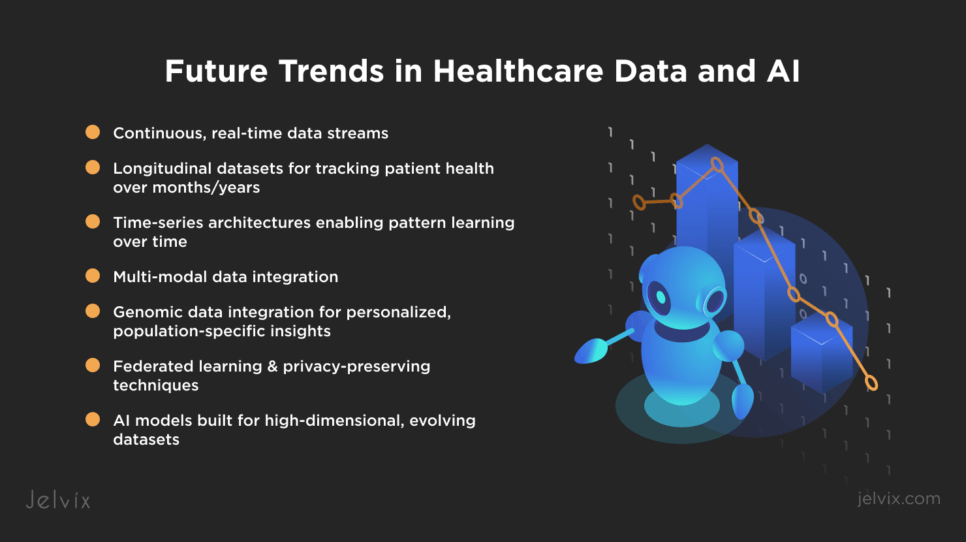

Healthcare datasets are shifting toward continuous, dynamic streams. Wearable devices, remote patient monitoring systems, and IoT-enabled health sensors now produce high-frequency data, capturing heart rate variability, blood glucose levels, oxygen saturation, movement patterns, and environmental exposures in real time.

Future AI models won’t be trained only on isolated episodes. They will need longitudinal datasets that chart patient trajectories across months or years. Time-series data architectures will become standard, enabling models to learn from sequence patterns rather than single-point observations.

Multimodal datasets that combine structured fields (lab results, medications), unstructured clinical notes, imaging studies, and sensor outputs are also essential. Training on integrated representations helps models capture both clinical narratives and biological signals, improving prediction accuracy and personalization.

Integrating genomic data will increase model granularity further by bringing treatment-relevant markers and population-specific variants into the learning loop.

At the same time, privacy concerns are driving adoption of distributed techniques such as federated learning, in which models are trained across separate datasets without pooling sensitive patient data on a central server.
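The core loop of federated averaging (FedAvg) is short: a server sends the current model to each site, each site trains on its own data, and the server averages the returned parameters, weighted by how many records each site holds. The toy sketch below does this for a one-variable linear model with plain gradient descent. The learning rate, epoch, and round counts are illustrative choices for this toy problem, and real deployments add safeguards such as secure aggregation, since model updates alone can still leak information.

```python
def local_update(w: float, b: float, data, lr: float = 0.5, epochs: int = 20):
    """Full-batch gradient descent on squared error for y ~ w*x + b, run inside one site."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum((w * x + b - y) * x for x, y in data) / n
        grad_b = sum((w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(sites, rounds: int = 100):
    """Server loop: broadcast (w, b), collect local updates, average them by site size."""
    w, b = 0.0, 0.0
    total = sum(len(data) for data in sites)
    for _ in range(rounds):
        # Only the two parameters leave each site; raw (x, y) records never do.
        updates = [(local_update(w, b, data), len(data)) for data in sites]
        w = sum(uw * n for (uw, _), n in updates) / total
        b = sum(ub * n for (_, ub), n in updates) / total
    return w, b
```

In this toy setup each "site" is just a list of `(x, y)` pairs; in a real network the `local_update` call would run on the hospital's own infrastructure.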

In the next wave of healthcare AI, the strongest models won’t just be bigger. They’ll be built to handle high-dimensional, multi-source, privacy-protected data streams—evolving alongside the patients they aim to serve.

Conclusion

Innovation doesn't start with ideas alone. It starts with access to clean, comprehensive, and intelligently organized data, and that data must be trustworthy enough to guide real-world decisions without introducing noise, bias, or gaps.

As machine learning models grow more sophisticated, the healthcare datasets feeding them must evolve too. Healthcare data sets and standards are becoming richer, more multi-dimensional, and more ethically managed to match the complexity of human health itself.

At Jelvix, we work at this intersection, where deep healthcare expertise meets real-world machine learning development. We don't just connect models to data: we structure, refine, and scale solutions that move healthcare forward. If you're looking to build a solution that truly understands healthcare, let's start a conversation.

FAQs

What makes a healthcare dataset reliable for AI use?

A dataset is AI-ready if it is accurate, current, well labeled, and comes from a trustworthy source. Standardized formats and minimal missing data further improve reliability.

Are these datasets free for commercial use?

Not necessarily. Many come from government or academic sources, and their terms differ. Always verify the license to confirm commercial usage rights and attribution requirements.

How can I ensure HIPAA compliance when using public data?

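Use only de-identified datasets and confirm that they contain no protected health information (PHI), such as names, contact details, or full dates. Prefer datasets released as de-identified under HIPAA's Safe Harbor or Expert Determination methods, and follow any data use agreement that comes with them.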
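To show what Safe Harbor-style transformations look like, the sketch below drops a few direct identifiers, collapses ages over 89 into one category, keeps only the year of a date, and truncates ZIP codes to three digits (replacing them with `000` for low-population areas). It covers only a handful of the 18 identifier categories Safe Harbor lists and does nothing about identifiers hidden in free text; the field names are hypothetical, and the set of restricted three-digit ZIP prefixes must come from HHS guidance and current census data, not from this code.

```python
# Illustrative subset of direct identifiers; Safe Harbor lists 18 categories in total.
DIRECT_IDENTIFIERS = {"name", "mrn", "ssn", "phone", "email", "street_address"}


def deidentify(record: dict, restricted_zip3: frozenset = frozenset()) -> dict:
    """Apply a few Safe Harbor-style transformations to one flat record."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    age = out.get("age")
    if isinstance(age, int) and age > 89:
        out["age"] = "90+"  # ages over 89 are aggregated into a single category
    if "admit_date" in out:
        out["admit_year"] = str(out.pop("admit_date"))[:4]  # assumes ISO 8601 dates
    if "zip" in out:
        zip3 = str(out.pop("zip"))[:3]  # store ZIPs as strings to keep leading zeros
        out["zip3"] = "000" if zip3 in restricted_zip3 else zip3
    return out
```

Even after transformations like these, rare combinations of remaining fields can re-identify people, which is why Expert Determination exists as an alternative route.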

What are the best formats for training machine learning models?

Tabular formats such as CSV, JSON, and XML are the most common choices; imaging data is typically stored in DICOM, with NIfTI common in research datasets. Opt for formats that are easy to parse and preprocess.

Can I combine multiple datasets for deeper insights?

Yes, but first make sure the datasets are compatible: align their structure, variable definitions, and units. Always validate the merged data to catch bias or duplicate records.
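Unit mismatches and duplicates are the most common problems when combining sources. The sketch below converts glucose readings to mg/dL (1 mmol/L of glucose is about 18.016 mg/dL, from its molar mass of roughly 180.16 g/mol) and treats the same patient and timestamp appearing in several sources as one measurement. The field names are hypothetical, and keeping the first occurrence is a simplifying choice; the discarded duplicates are returned so conflicting values can be reviewed rather than silently lost.

```python
MGDL_PER_MMOLL_GLUCOSE = 18.016  # approx.: glucose molar mass (~180.16 g/mol) / 10


def harmonize_glucose(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Convert glucose readings to mg/dL and collapse duplicate measurements."""
    merged, duplicates = {}, []
    for r in rows:
        if r["unit"] == "mmol/L":
            mg_dl = r["value"] * MGDL_PER_MMOLL_GLUCOSE
        elif r["unit"] == "mg/dL":
            mg_dl = r["value"]
        else:
            raise ValueError(f"unexpected unit: {r['unit']}")
        key = (r["patient_id"], r["taken_at"])
        if key in merged:
            duplicates.append(r)  # same patient and time from another source: keep for review
            continue
        merged[key] = {
            "patient_id": r["patient_id"],
            "taken_at": r["taken_at"],
            "glucose_mg_dl": round(mg_dl, 1),
            "source": r["source"],
        }
    return list(merged.values()), duplicates
```

If the duplicate list shows large disagreements between sources, that is a sign of a unit or timestamp problem upstream, not something to average away.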
