Explainable AI in healthcare is essential because even the most accurate model becomes clinically unusable when its decisions cannot be interpreted. This is the core paradox facing modern medical AI. Deep-learning systems often outperform human specialists but operate as “black boxes” whose internal reasoning cannot be inspected by anyone (even system designers).

Research continues to show that unexplainable AI can make errors that no human clinician would make. Because those errors cannot be audited, the harm they may cause is hard to detect and easy to underestimate.

This opacity is known as the black box problem: the system's inputs and outputs are visible, but the reasoning in between is not. It raises significant ethical concerns for AI in healthcare, since clinicians cannot understand or challenge the model's suggestions, regulators cannot validate its decision paths, and patients cannot make informed decisions.

In patient-centered care, the absence of transparency creates a new form of medical paternalism: the system determines what is done “in the best interests” of the patient, and neither physicians nor patients can interrogate the logic. Hospitals cannot assure fairness, monitor bias, or comply with FDA, MDR, or EU AI Act requirements, which increasingly demand that model reasoning be auditable in high-risk medical applications.

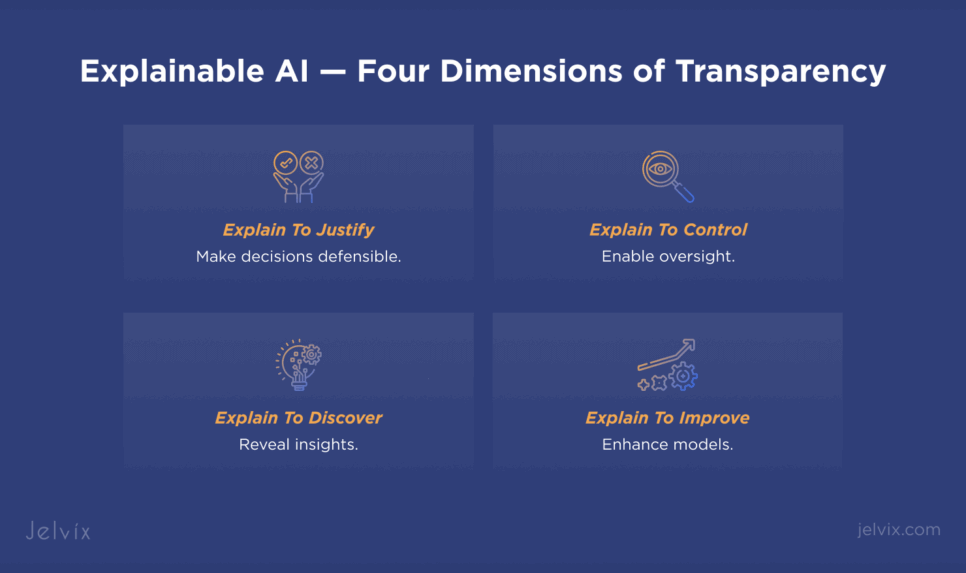

Explainable AI solves these challenges by making model decisions interpretable, traceable, and clinically meaningful, transforming opaque analytics into trustworthy medical intelligence. For organizations investing in AI software development, explainability is no longer optional.

The moment AI systems influence diagnosis, triage, resource allocation, or quality reporting, every output must be justified and auditable. This is where technical principles turn into clinical and organizational mandates, and where the challenges become visible in real projects.

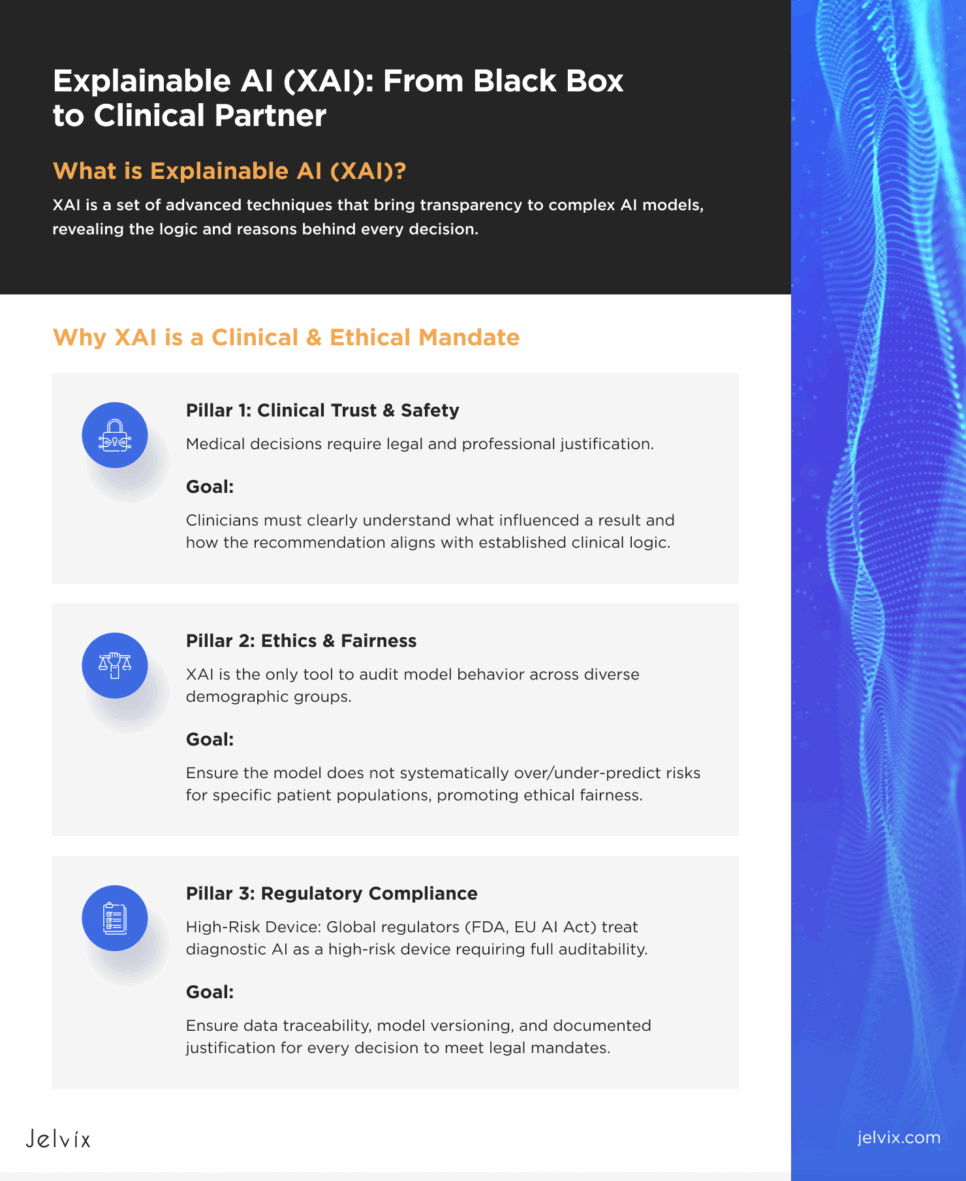

Why Explainable AI is a Clinical and Ethical Mandate

Explainable AI (XAI) is a clinical and ethical mandate because healthcare decisions require justification, and any system that cannot reveal its reasoning creates barriers to adoption, oversight, and accountability. This was the central challenge in a project Jelvix AI experts worked on: the client had a high-performing analytics engine but no visibility into why a risk score changed, which input features drove a recommendation, or how the model behaved across different patient cohorts. Introducing model explainability became the non-negotiable foundation for adoption, safety, and credibility.

Ethical Audits & Fairness in AI

Ethical AI systems must expose how models behave across demographics because data bias directly shapes clinical outcomes and resource allocation. Without AI explainability tools, there is no way to show whether the model systematically overpredicts or underpredicts risk for certain patient populations, for example.

This is where the difference between explainable and interpretable AI became essential. Inherently interpretable models (like linear regression) were too weak for the platform’s predictive goals. The solution required advanced explainability techniques layered on top of complex architectures: global attribution heatmaps, demographic parity checks, and fairness dashboards that ran at both training and production time. These ethical audits helped the client identify where models used irrelevant or ethically sensitive signals (e.g., socioeconomic proxies hidden in clinical notes) and ensured that demographic groups received consistent, justifiable recommendations.

Fairness auditing became a continuous process. Monthly bias drift checks, demographic stratification dashboards, and threshold-optimization workflows helped prevent harm before it reached production. This directly reduced institutional risk and aligned the platform with emerging ethical guidelines from regulatory bodies and medical associations.
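As a rough illustration of what such an audit computes, the sketch below compares how often each demographic group is flagged and how far the average predicted risk sits from the observed event rate. It is a minimal example under stated assumptions, not the client's implementation: the record layout, the group labels, the 0.5 alert threshold, and the function names are all hypothetical.

```python
from collections import defaultdict


def subgroup_audit(records, threshold=0.5):
    """Summarize model behavior per demographic group.

    records: iterable of (group, predicted_risk, observed_outcome) tuples,
    where observed_outcome is 0 or 1. The layout is illustrative only.
    """
    by_group = defaultdict(list)
    for group, risk, outcome in records:
        by_group[group].append((risk, outcome))

    report = {}
    for group, rows in by_group.items():
        n = len(rows)
        mean_risk = sum(risk for risk, _ in rows) / n
        event_rate = sum(outcome for _, outcome in rows) / n
        flag_rate = sum(1 for risk, _ in rows if risk >= threshold) / n
        report[group] = {
            "n": n,
            "flag_rate": flag_rate,
            # Positive gap: risk is overpredicted for this group on average.
            "calibration_gap": mean_risk - event_rate,
        }
    return report


def demographic_parity_difference(report):
    """Largest difference in flag rate between any two groups."""
    rates = [stats["flag_rate"] for stats in report.values()]
    return max(rates) - min(rates)


# Toy data: (group, predicted risk, observed outcome)
records = [
    ("A", 0.9, 1), ("A", 0.7, 1), ("A", 0.2, 0), ("A", 0.4, 0),
    ("B", 0.6, 0), ("B", 0.8, 1), ("B", 0.7, 0), ("B", 0.3, 0),
]
report = subgroup_audit(records)
dpd = demographic_parity_difference(report)
print(report)
print("demographic parity difference:", round(dpd, 3))
```

In this toy data, group B is flagged more often than group A (a demographic parity difference of 0.25) and its risk is overpredicted by 0.35 on average, which is the kind of signal a recurring bias drift check would surface for review.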

Regulatory Compliance & Legal Liability

Regulators worldwide increasingly treat clinical AI software as a medical device that must provide clear auditability, documented reasoning, and consistent behavior under real-world conditions.

- In the U.S., the FDA’s SaMD and CDS guidelines require traceable inputs, version-controlled model updates, clear documentation of how algorithms reach conclusions, and evidence that clinicians can independently review recommendations.

- The EU MDR and the EU AI Act classify diagnostic AI as “high risk,” mandating robust risk-management systems, documented model behavior, transparent training data characteristics, and per-decision justification that can withstand audit.

- The UK MHRA’s Software and AI as a Medical Device Change Programme calls for transparent model updates and evidence of clinical safety.

- Health Canada’s guidelines require demonstrable fairness, data governance, and explainable outputs.

- Singapore’s HSA guidelines emphasize end-to-end traceability, lifecycle monitoring, documentation of model intent and limitations, and human-in-the-loop safeguards to ensure clinicians remain accountable for final decisions.

Across all jurisdictions, model explainability is a shared expectation.

Physician Adoption & Clinical Trust in AI Data Integration

Clinician adoption decreases sharply when AI systems do not provide clear reasoning behind their outputs. And this is precisely why clinicians distrust black box AI. Specialists reject tools that provide numerical outputs without meaningful justification because such systems cannot be reconciled with evidence-based medicine, peer review, or professional accountability.

Understanding how to implement explainable AI in healthcare starts with recognizing that trust is a human factor. Clinicians do not need full interpretability or complete algorithmic transparency. The priority is understanding:

- what influenced a result,

- why the model highlighted certain risk factors,

- how the recommendation aligns with familiar clinical logic.

Trust also depends on whether explainability feels integrated into real clinical practice rather than layered on top as a technical feature. The Jelvix team learned early that adoption improves when explanations appear in the same format, terminology, and workflow clinicians already use.

This approach, which centers on clinical interoperability, aligns with how specialists naturally evaluate risk and supports a sense of partnership. Once explanations mirror clinicians’ usual reasoning, and once physicians can verify or challenge recommendations, the solution moves from being viewed as a “black box” to being treated as a decision-support partner. Trust grows organically, and adoption follows because clinicians feel the system respects their expertise instead of bypassing it.

The Engineering Toolkit of XAI: Choosing the Right Method for Healthcare Data Analytics

Each method in the XAI toolkit reveals a different aspect of model reasoning, and healthcare teams need both global and local visibility to ensure safe, transparent healthcare predictive analytics and AI assistance.

Global Explainability

Global explainability methods help teams understand how a model behaves at the system level. They reveal which predictors matter most and how they shape overall logic. In data analytics in healthcare, this is essential for validating that the model reflects real clinical knowledge. Commonly used techniques include:

- feature importance analysis;

- permutation tests;

- partial dependence plots;

- global SHAP summaries.

These, and many more, make model behavior transparent so that data scientists, clinicians, and compliance officers can inspect the logic behind population-wide predictions.
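To make one of these techniques concrete, here is a minimal permutation-test sketch in plain Python. It shuffles one feature at a time and measures how much the prediction error grows. The `risk_model` function, the three feature names, and the synthetic data are assumptions made up for illustration; a real project would more likely use a library implementation against a trained model and held-out data.

```python
import random


def risk_model(row):
    """Hypothetical stand-in for a trained model: row = (age, lactate, bed_number)."""
    age, lactate, _bed_number = row
    return 0.01 * age + 0.2 * lactate  # bed_number is deliberately ignored


def mean_squared_error(model, X, y):
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(X)


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Average increase in error after shuffling each feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for col in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            # Rebuild each row with the shuffled value in position `col`.
            X_perm = [row[:col] + (value,) + row[col + 1:]
                      for row, value in zip(X, shuffled)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Synthetic cohort; targets equal the model output so the baseline error is zero.
data_rng = random.Random(42)
X = [(data_rng.uniform(20, 90), data_rng.uniform(0.5, 6.0), data_rng.randint(1, 40))
     for _ in range(200)]
y = [risk_model(row) for row in X]

importances = permutation_importance(risk_model, X, y)
for name, score in zip(("age", "lactate", "bed_number"), importances):
    print(f"{name}: {score:.4f}")
```

Because the stand-in model ignores `bed_number`, shuffling that column leaves the error unchanged and its importance is exactly zero, while shuffling `age` or `lactate` increases the error. A clinically irrelevant feature that scores high in a test like this is a prompt for investigation.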

Global explainability is what allows health systems to answer foundational safety questions:

- Does the model rely on clinically meaningful features?

- Is any variable behaving suspiciously or carrying too much weight?

- Are there hints of demographic bias or misaligned risk patterns?

In short, global explainability helps confirm that the model’s logic is aligned with clinical reality before it is deployed and before local explanations are used at the patient level.
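One way to check a single variable for suspicious behavior is a partial dependence curve: force one feature to a range of values across the whole cohort and average the predictions. The sketch below is illustrative only. The `risk_model` function, the two features, and the tiny cohort are hypothetical, and the monotonicity check stands in for whatever clinical expectation a review team would encode.

```python
def risk_model(row):
    """Hypothetical stand-in for a trained model: row = (age, lactate)."""
    age, lactate = row
    return min(1.0, 0.05 + 0.15 * lactate + (0.20 if age >= 65 else 0.0))


def partial_dependence(model, X, feature_index, grid):
    """Average prediction when one feature is forced to each grid value."""
    curve = []
    for value in grid:
        preds = [model(row[:feature_index] + (value,) + row[feature_index + 1:])
                 for row in X]
        curve.append(sum(preds) / len(preds))
    return curve


def is_non_decreasing(values, tolerance=1e-12):
    """True if the curve never drops as the feature value grows."""
    return all(b >= a - tolerance for a, b in zip(values, values[1:]))


# Toy cohort of (age, lactate) pairs.
X = [(45, 1.0), (70, 2.5), (82, 4.0), (30, 0.8)]
lactate_grid = [1.0, 2.0, 3.0, 4.0]

curve = partial_dependence(risk_model, X, feature_index=1, grid=lactate_grid)
monotonic = is_non_decreasing(curve)
print([round(v, 3) for v in curve], "non-decreasing:", monotonic)
```

Here the average predicted risk rises steadily with lactate, which matches the clinical expectation built into the toy model. A curve that dipped or jumped without a clinical reason would be worth reviewing before deployment.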

Local Explainability

Local explainability methods focus on individual predictions, revealing why a specific patient received a specific score or alert. These methods translate opaque model output into patient-level reasoning that clinicians can incorporate into decision-making.

Two of the most widely used approaches are SHAP and LIME, which provide case-level explanations by showing which factors influenced a model’s output and by how much. These tools do not replace clinical judgment. Still, they make the model’s reasoning sufficiently visible to clinicians, auditors, and compliance teams.

LIME

LIME explains individual predictions by perturbing input values and observing how the model responds, producing a simplified surrogate explanation for that case. This method is fast, model-agnostic, and works well for:

- Early experimentation during model development.

- Rapid debugging of unexpected predictions.

- Providing quick insight when computational resources are limited.

LIME is widely used in the prototyping and validation phases of healthcare models, as it provides an accessible way to challenge assumptions and inspect local reasoning. When teams explore how to integrate AI into early-stage clinical workflows, LIME often serves as a practical starting point.
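The perturb-and-fit idea can be sketched in a few dozen lines. The example below is a simplified, LIME-style local surrogate written from scratch, not the `lime` package itself: it samples points around one patient, weights them by proximity, and fits a weighted linear model. The `black_box` function, the feature scales, and the kernel width are assumptions chosen for illustration.

```python
import math
import random


def black_box(x):
    """Hypothetical non-linear risk model over (age, lactate)."""
    age, lactate = x
    return 1.0 / (1.0 + math.exp(-(0.04 * (age - 60) + 0.8 * (lactate - 2.0))))


def solve_linear_system(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def lime_style_explanation(model, instance, scales, n_samples=500,
                           kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in instance]       # standardized offsets
        sample = [x + s * dz for x, s, dz in zip(instance, scales, z)]
        distance_sq = sum(dz * dz for dz in z)
        rows.append([1.0] + z)                            # intercept + offsets
        targets.append(model(sample))
        weights.append(math.exp(-distance_sq / kernel_width ** 2))
    k = len(instance) + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y
    A = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
         for i in range(k)]
    b = [sum(w * r[i] * t for r, t, w in zip(rows, targets, weights))
         for i in range(k)]
    coefficients = solve_linear_system(A, b)
    return coefficients[1:]  # local effect per one "scale" step of each feature


patient = (72.0, 3.1)  # (age in years, lactate)
local_effects = lime_style_explanation(black_box, patient, scales=(10.0, 1.0))
for name, effect in zip(("age (+10 years)", "lactate (+1 unit)"), local_effects):
    print(f"{name}: {effect:+.3f}")
```

For this toy patient both local effects are positive, and a one-unit rise in lactate moves the score more than ten additional years of age. The surrogate only describes the model near this patient, and its coefficients depend on the sampling seed and kernel width, which is one reason LIME suits exploration more than audited production output.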

SHAP

SHAP is a game-theory–based method that provides consistent, mathematically grounded explanations for individual predictions, showing how each feature contributed to the score.

SHAP is preferred in healthcare because it delivers:

- Consistency: If a model changes so that a feature contributes more to the prediction, that feature’s SHAP attribution never decreases.

- Clinical clarity: Explanations follow an additive format similar to how physicians reason through risk.

- Fairness visibility: SHAP can be aggregated across subgroups to detect demographic bias.

- Production reliability: Exact variants such as TreeSHAP are deterministic, so explanations do not change randomly between runs on the same input.

SHAP’s outputs align with how healthcare professionals expect justification to be structured. This makes SHAP one of the most reliable XAI tools for integrating AI into environments that require transparency.
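The additive structure is easiest to see on a tiny example. The sketch below computes exact Shapley values by enumerating every feature coalition, which is the definition SHAP approximates efficiently. It does not use the `shap` library, and exhaustive enumeration is only practical for a handful of features. The `risk_model` function, the patient, and the single reference patient used as the baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def risk_model(features):
    """Hypothetical risk score with an age-diabetes interaction."""
    score = 0.05
    score += 0.20 if features["age"] >= 65 else 0.0
    score += 0.10 * max(features["lactate"] - 2.0, 0.0)
    if features["diabetes"] and features["age"] >= 65:
        score += 0.15
    return score


def shapley_values(model, instance, baseline):
    """Exact Shapley values relative to a single baseline instance."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Features in the coalition take the patient's value, others the baseline's.
        mixed = {name: instance[name] if name in coalition else baseline[name]
                 for name in names}
        return model(mixed)

    contributions = {}
    for name in names:
        others = [m for m in names if m != name]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without = set(subset)
                total += weight * (value(without | {name}) - value(without))
        contributions[name] = total
    return contributions


patient = {"age": 72, "lactate": 3.5, "diabetes": True}
reference = {"age": 50, "lactate": 1.5, "diabetes": False}

phi = shapley_values(risk_model, patient, reference)
gap = risk_model(patient) - risk_model(reference)
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.3f}")
print(f"sum of contributions: {sum(phi.values()):.3f}, score gap: {gap:.3f}")
```

For this toy patient the contributions are 0.275 for age, 0.15 for lactate, and 0.075 for diabetes, which sum to the 0.50 gap between the patient's score and the reference score. The age and diabetes interaction is split evenly between the two features. Averaging such per-patient values within demographic subgroups is one way to get the fairness visibility described above.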

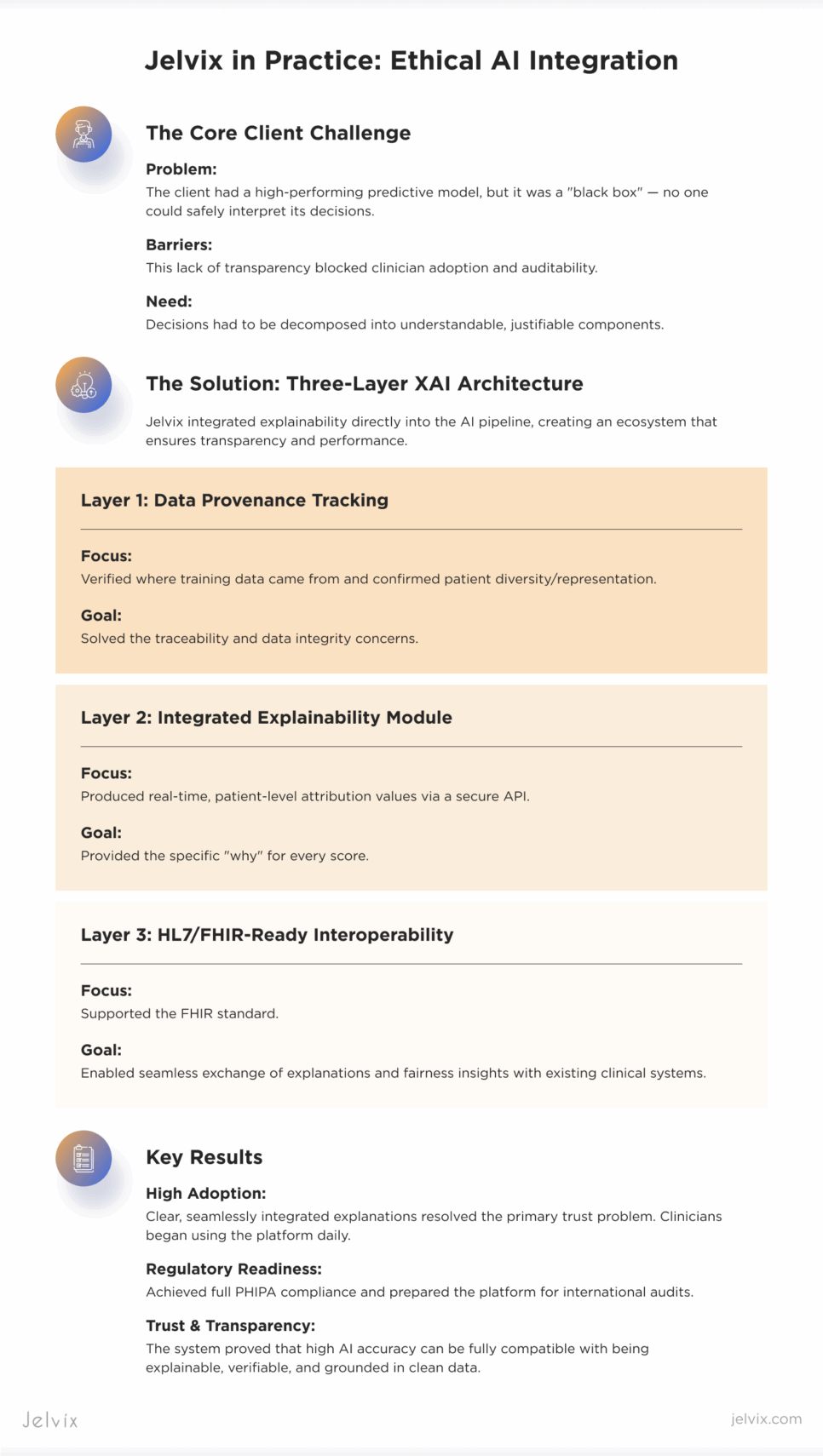

Jelvix in Practice: Integrating AI Into a Healthcare Analytics Platform

Integrating explainability into real-world healthcare software development requires more than just know-how. It requires designing an ecosystem where AI accuracy, transparency, privacy, and clinician trust function together. The Ethical AI case illustrates how Jelvix turned an intricate AI problem, a high-value model that no one could safely interpret, into a safe, working, production-ready, regulation-aligned platform hospitals could rely on.

The Client Challenge

The client needed a risk-modeling platform whose decisions could be decomposed into understandable components. Their teams could not accept outputs that were only a list of probabilities; they required a transparent account of how patient data, representation gaps, and demographic trends influenced the AI’s behavior.

Auditors raised similar concerns. They needed outputs that could be explained and defended in compliance reviews, along with traceable data origins, fairness metrics, and documentation of where model assumptions came from.

Clinicians also required interpretability. They would not trust opaque scores that came without a clear rationale, particularly if those scores were to play a role in diagnostic planning or quality reporting. For adoption to occur, AI outputs needed to come with context.

The AI Architecture

Jelvix designed the production architecture with explainability built directly into the AI pipeline. This allowed the platform to produce patient-level attribution values in real time without degrading model performance.

The pipeline was divided into three functional layers:

Data Provenance Tracking: Each dataset entered into the platform was annotated with origin metadata, representation summaries, and lineage history. This solved the client’s core AI problem: hospitals now had a way to verify where training data came from and whether it included diverse patient groups.

Integrated Explainability Module: All AI analytics modules produced interpretable components such as factor summaries, demographic breakdowns, imbalance alerts, and source-quality indicators. The findings were then made available through a secure API.

HL7/FHIR-Ready Interoperability: The system also supported the FHIR interoperability standard, enabling the exchange of AI explanations, data lineage, and fairness insights with clinical systems without custom coupling.
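As an illustration of how an explanation might travel over FHIR, the sketch below packages a risk score and its feature attributions as a FHIR `RiskAssessment` resource. The source does not say which resource types the platform used, so this mapping is an assumption. The extension URL, the outcome label, the patient identifier, and the `build_risk_assessment` helper are all hypothetical.

```python
import json

# Hypothetical extension URL; a real deployment would publish its own definition.
EXPLANATION_EXTENSION_URL = (
    "https://example.org/fhir/StructureDefinition/feature-attribution"
)


def build_risk_assessment(patient_id, probability, attributions, model_version):
    """Package a prediction and its attributions as a RiskAssessment dict."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    rationale = "; ".join(f"{name}: {value:+.3f}" for name, value in ranked)
    return {
        "resourceType": "RiskAssessment",
        "status": "final",
        "subject": {"reference": f"Patient/{patient_id}"},
        "method": {"text": f"risk-model {model_version}"},
        "prediction": [{
            "outcome": {"text": "30-day readmission"},  # illustrative outcome
            "probabilityDecimal": probability,
            # Human-readable summary for clinicians.
            "rationale": rationale,
            # Machine-readable attributions for dashboards and audits.
            "extension": [
                {
                    "url": EXPLANATION_EXTENSION_URL,
                    "extension": [
                        {"url": "feature", "valueString": name},
                        {"url": "contribution", "valueDecimal": value},
                    ],
                }
                for name, value in ranked
            ],
        }],
    }


payload = build_risk_assessment(
    patient_id="example-123",
    probability=0.55,
    attributions={"lactate": 0.15, "age": 0.275, "diabetes": 0.075},
    model_version="v1.4.0",
)
print(json.dumps(payload, indent=2))
```

Putting the summary in `rationale` keeps the explanation readable in any FHIR-aware client, while the nested extension keeps the numeric attributions available to systems that understand it.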

The architecture followed a privacy-by-design approach:

- PHIPA-compliant access controls;

- encryption at all layers;

- role-based permissions.

Together, these controls ensured that transparency never compromised security.

Synthetic dataset support enabled safe experimentation and validation even before real clinical data became available.

By embedding traceability, fairness analysis, and interpretability into the architecture itself, Jelvix delivered a platform where AI accuracy could be trusted, not because it was high, but because it was explainable, documented, and verifiably grounded in clean, representative data.
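To show what dataset-level traceability can look like in code, here is a minimal sketch of a provenance record carrying origin metadata, a representation summary, and lineage history. The class names, fields, age bands, and the 10% under-representation cutoff are assumptions for illustration and do not describe the platform's actual schema.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class LineageStep:
    operation: str
    performed_at: str  # ISO 8601 timestamp in UTC


@dataclass
class DatasetProvenance:
    dataset_id: str
    source_system: str
    content_hash: str
    group_counts: dict
    lineage: list = field(default_factory=list)

    def record_step(self, operation):
        """Append a transformation to the lineage history."""
        timestamp = datetime.now(timezone.utc).isoformat()
        self.lineage.append(LineageStep(operation, timestamp))

    def representation_summary(self):
        """Share of records contributed by each demographic group."""
        total = sum(self.group_counts.values())
        return {group: count / total for group, count in self.group_counts.items()}

    def underrepresented(self, min_share=0.10):
        """Groups whose share falls below an illustrative cutoff."""
        summary = self.representation_summary()
        return sorted(g for g, share in summary.items() if share < min_share)


def fingerprint(raw_bytes):
    """Content hash used to detect silent changes to a dataset."""
    return hashlib.sha256(raw_bytes).hexdigest()


record = DatasetProvenance(
    dataset_id="cohort-demo",
    source_system="hospital-a-ehr-export",   # hypothetical origin label
    content_hash=fingerprint(b"synthetic,rows,for,illustration"),
    group_counts={"18-39": 60, "40-64": 640, "65+": 240},
)
record.record_step("de-identification")

print(record.representation_summary())
print("under-represented groups:", record.underrepresented())
```

In this toy record the 18-39 band makes up about 6% of the data and is flagged as under-represented, which is the kind of imbalance alert the explainability module could pass on to dashboards and auditors.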

AI-Driven UX/UI

Because the platform’s users included clinicians, researchers, administrators, and auditors, Jelvix designed an interface that translated complex analytical outputs into clear, clinician-friendly visuals. The goal was to make AI reasoning understandable at a glance, without requiring technical knowledge or data-science interpretation skills.

The interface visualized key transparency components generated by the analytics engine, including:

- Factor breakdown charts showing which data elements contributed most to an insight.

- Diversity and representation dashboards highlighting demographic coverage and data gaps.

- Bias alerts and imbalance indicators flagging potential risks in training data.

- Provenance timelines tracing exactly where each dataset originated and how it was transformed.

- Quality-of-source scoring that allowed clinicians to see whether an insight was derived from complete, balanced, or limited data.

Instead of exposing raw technical metrics, these visual elements were presented in familiar formats: bar charts, color-coded indicators, summary tables, and narrative explanation blocks. Each visualization was designed to answer a simple but crucial question: “Why did the system produce this insight, and can I trust it?”

The UI followed clinical-dashboard conventions. Healthcare users could scan explanations quickly, compare cases, and review data integrity without cognitive overload. Insights were contextualized, showing how an individual dataset or model behaved relative to broader cohorts. This design approach helped turn AI outputs into understandable components, supporting clinician confidence and enabling auditors to validate system behavior without deep technical expertise.

Results

High Adoption. Once explanations were clear and embedded seamlessly into their workflows, clinicians used the platform daily. The system’s clear, explainable reasoning resolved the primary AI problem — lack of trust. That resulted in quick uptake by research staff, administrators, and clinical units alike.

Regulatory Readiness. The platform achieved full PHIPA compliance and was prepared for an international audit. Data provenance tracking, explainability, and user-friendly access controls proved resilient and suitable for use in controlled clinical settings.

Trust and Transparency. SHAP integrations, data traceability, and simple UX design created an environment where AI accuracy and explainability were compatible. Because users could finally see how data fed into recommendations, the platform closed the transparency gaps that had blocked deployment.

The result: a healthcare organization well equipped with an ethical, explainable AI foundation, ready for scaling, oversight, and use in the clinical world.

Conclusion: From Model Building to Trust Building

Explainability is a continuous MLOps discipline that helps maintain transparency, accountability, and alignment to clinical expectations over time for any AI system.

Future healthcare AI must be built on a transparency-first foundation, where decision-makers can see, question, and confirm each insight generated by the system. The industry is turning toward well-defined systems with clear data lineage, balanced representation, secure interoperability, and outputs that clinicians can understand and defend. Trust will become more important for adoption than any accuracy measure as AI is integrated into chronic care management software, diagnostic support, and population analytics.

This is where Jelvix delivers long-term value. By integrating interpretability into architecture, protecting privacy, and converting complex analytics into clinician-friendly insights, Jelvix supports healthcare organizations in moving from model building to trust building.

The Ethical AI case illustrates that explainability can be successfully operationalized, turning a black-box model into a system clinicians can actually use. As healthcare institutions expand their digital ecosystems, Jelvix is a partner in developing AI technologies that are powerful, provably fair, interpretable, and poised for real-world clinical impact. If your organization is planning its next AI initiative, book a consultation with Jelvix experts to assess your readiness and design a transparency-first approach.
