Have you ever wondered how to choose the best Big Data engine for business and application development? The market for Big Data software is humongous, competitive, and full of products that seemingly do very similar things.

Big Data is currently one of the most in-demand niches in enterprise software development. The popularity of Big Data technologies is driven by the rapid and constant growth of data volumes.

Massive datasets must be reviewed, structured, and processed fast enough to keep up with the incoming data. Data processing engines are now widely used in the tech stacks of mobile applications and many other products.

So what Big Data framework will be the best pick in 2024? What should you choose for your product? Which one will go the way of the dodo? Let’s find out!

Top Big Data frameworks: what will tech companies choose in 2024?

Nowadays, virtually every Big Data product is expected to process enormous volumes of data. Specialized Big Data frameworks have been created to implement and support this functionality. They help rapidly process and structure huge chunks of real-time data.

What are the best Big Data tools?

There are many great Big Data tools on the market right now. To make this top 10, we had to exclude a lot of prominent solutions that warrant a mention regardless: Kafka and Kafka Streams, Apache Tez, Apache Impala, Apache Beam, and Apache Apex. All of them and many more are great at what they do. However, the ones we picked represent:

- the most popular, like Hadoop, Storm, Hive, and Spark;

- the most promising, like Flink and Heron;

- the most useful, like Presto and MapReduce;

- the most underrated, like Samza and Kudu.

We have conducted a thorough analysis to compile this list of top Big Data frameworks that are going to be prominent in 2024. Let's have a look!

1. Hadoop. Is it still going to be popular in 2024?

Apache Hadoop was a revolutionary solution for Big Data storage and processing in its time. Most Big Data software is either built around or compliant with Hadoop. It's an open-source project from the Apache Software Foundation.

What is the Hadoop framework?

Hadoop is great for reliable, scalable, distributed computing. It can also be used as general-purpose file storage capable of storing and processing petabytes of data. This solution consists of three key components:

- HDFS (Hadoop Distributed File System), responsible for storing data in the Hadoop cluster;

- MapReduce, a system for processing large volumes of data in the cluster;

- YARN (Yet Another Resource Negotiator), which handles resource management.
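The following toy Python model illustrates how HDFS divides a file into fixed-size blocks and keeps several copies of each block on different nodes. It is a simplified sketch, not Hadoop code: the 128 MB block size and the replication factor of 3 mirror HDFS's usual defaults, but the round-robin placement and the node names are hypothetical (real HDFS uses a rack-aware placement policy).

```python
import math

BLOCK_SIZE_MB = 128  # mirrors the usual HDFS default block size
REPLICATION = 3      # mirrors the usual HDFS default replication factor


def split_into_blocks(file_size_mb: int, block_size_mb: int = BLOCK_SIZE_MB) -> list[int]:
    """Return the size of each block; only the last block may be smaller."""
    n_blocks = math.ceil(file_size_mb / block_size_mb)
    sizes = [block_size_mb] * n_blocks
    if n_blocks and file_size_mb % block_size_mb:
        sizes[-1] = file_size_mb % block_size_mb
    return sizes


def place_replicas(n_blocks: int, nodes: list[str], replication: int = REPLICATION) -> dict[int, list[str]]:
    """Hypothetical round-robin placement: each block's replicas land on distinct nodes."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(n_blocks)
    }


blocks = split_into_blocks(300)  # a 300 MB file
print(blocks)                    # [128, 128, 44]
placement = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"])
print(placement[0])              # ['node1', 'node2', 'node3']
```

Passing a longer `nodes` list spreads the same blocks across more machines, which is the scale-out idea behind adding nodes to a Hadoop cluster.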

How exactly does Hadoop help solve the memory and storage limits of modern DBMSs? Hadoop adds an intermediary layer between an interactive database and data storage. Its performance grows as the storage capacity grows: to scale it further, you add new nodes to the cluster.

Hadoop can store and process many petabytes of data, while its fastest jobs take only a few seconds to complete. It also forbids any edits to data already stored in HDFS during processing.

As we wrote in our Hadoop vs Spark article, Hadoop is great for customer analytics, enterprise projects, and creating data lakes, as well as for any large-scale batch processing task that doesn't require immediate results or ACID-compliant data storage.

Does the media buzz about "Hadoop's death" have any merit?

Hadoop was revolutionary when it first came out, and it spawned an entire industry around itself. Now Big Data is migrating to the cloud, and there is a lot of doomsaying going around. So is this the end of Hadoop? Think about it: a huge amount of data is still stored in HDFS, and the tools for processing or converting it are still in demand.

To top it off, the major Hadoop vendors didn't do too well in 2019. Cloudera missed its revenue target, lost 32% of its stock value, and saw its CEO resign after the Cloudera-Hortonworks merger. Another big Hadoop vendor, MapR, ran into serious funding problems.

Hadoop is still a formidable batch processing tool that can be integrated with most other Big Data analytics frameworks. Its components (HDFS, MapReduce, and YARN) are integral to the industry itself, so it doesn't look like Hadoop is going away any time soon.

But despite Hadoop's undeniable popularity, technological advancement poses new goals and requirements. More advanced alternatives are gradually entering the market to take its share (we will discuss some of them below).

2. MapReduce. Is this Big Data processing engine getting outdated?

MapReduce is the data processing engine of the Hadoop framework. Google first introduced it back in 2004 as a programming model for the parallel processing of large volumes of raw data. It later evolved into the MapReduce we know today.

This engine treats data as key-value records and processes them in three stages:

- Map (preprocessing and filtering of data).

- Shuffle (worker nodes sort and redistribute the map output so that all values belonging to one output key of the map function end up in the same group).

- Reduce (a user-defined reduce function computes the final result for each group of output data).

The values returned by the Reduce functions form the final result of the MapReduce task. MapReduce provides automatic parallelization, efficient load balancing, and fault tolerance.
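The three stages are easiest to see in the classic word-count example. This single-process Python sketch imitates Map, Shuffle, and Reduce with plain functions; it is not Hadoop's Java API, and the function names are illustrative. In a real cluster, each phase would run in parallel on many worker nodes.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(lines: Iterable[str]) -> Iterator[tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all values by key and order the groups by key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Reduce: a user-defined function collapses each group into one result."""
    return {key: sum(values) for key, values in groups.items()}


documents = ["big data is big", "data is everywhere"]
result = reduce_phase(shuffle_phase(map_phase(documents)))
print(result)  # {'big': 2, 'data': 2, 'everywhere': 1, 'is': 2}
```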

It has been an industry staple for years, and it is used with other prominent Big Data technologies. But there are alternatives to MapReduce, notably Apache Tez. It is highly customizable and much faster. Like MapReduce, it uses YARN for resource management, but it runs a job as a single directed acyclic graph (DAG) of tasks instead of a chain of separate MapReduce jobs, which makes it much more resource-efficient.

3. Spark. Is it still as powerful as it used to be?

Our list of the best Big Data frameworks continues with Apache Spark. It's an open-source framework created as a more advanced alternative to Apache Hadoop, and it was explicitly built for working with Big Data. The main difference between the two solutions lies in how they handle data during processing.


Hadoop writes data to the hard drive after each step of the MapReduce algorithm, while Spark performs its operations in random-access memory (RAM) whenever possible. Thanks to this, Spark is very fast and can process massive data flows. The functional pillars and main features of Spark are high performance and fault tolerance.

It supports four languages:

- Scala;

- Java;

- Python;

- R.

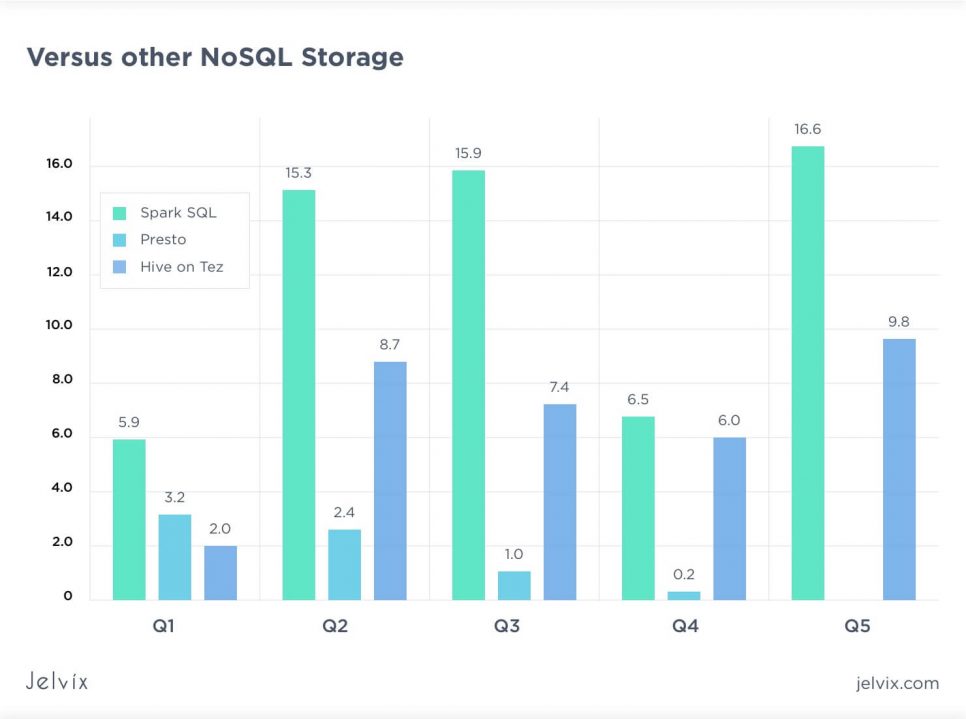

It has five components: the core and four libraries that optimize interaction with Big Data. Spark SQL, one of these four libraries, is used for structured data processing. It works with DataFrames and can run Hadoop Hive queries up to 100 times faster.

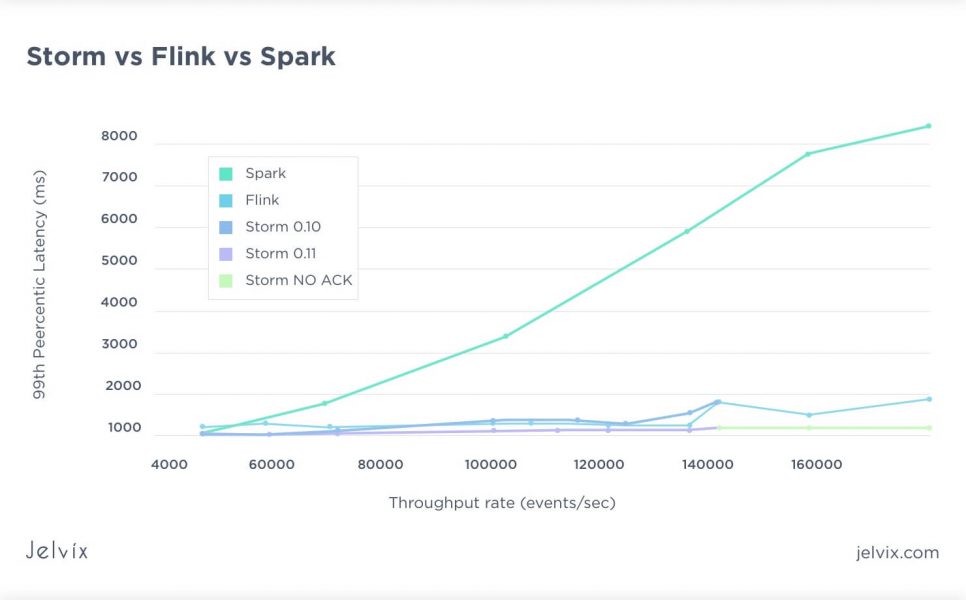

Spark has one of the best AI implementations in the industry with H2O's Sparkling Water 2.3.0. Spark also features Spark Streaming, a tool for processing streaming data in real time. In reality, this tool is more of a micro-batch processor than a true stream processor, and benchmarks prove as much.

Fastest batch processor or highest-throughput stream processor?

Well, neither, or both. Spark behaves more like a fast batch processor than an actual stream processor like Flink, Heron, or Samza. That is fine if you need stream-like functionality in a batch processor, or a high-throughput but slower stream processor. It's a matter of perspective.
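The difference between micro-batching and record-at-a-time streaming can be illustrated in a few lines of Python. In this hypothetical sketch, events carry arrival timestamps in seconds; the micro-batch processor buffers them into fixed intervals (0.5 seconds, chosen for illustration), while a true stream processor handles each event as soon as it arrives. It shows the scheduling idea only and does not use Spark's API.

```python
from typing import Iterable


def micro_batches(events: Iterable[tuple[float, str]], interval: float = 0.5) -> list[list[str]]:
    """Group (timestamp, payload) events into fixed-length intervals.

    Empty intervals are omitted. Nothing in a batch is handled until its
    interval closes, so latency is at least one interval long.
    """
    batches: dict[int, list[str]] = {}
    for ts, payload in events:
        batches.setdefault(int(ts // interval), []).append(payload)
    return [batches[k] for k in sorted(batches)]


def per_record(events: Iterable[tuple[float, str]]) -> list[list[str]]:
    """A true stream processor handles every event individually on arrival."""
    return [[payload] for _, payload in events]


stream = [(0.1, "a"), (0.3, "b"), (0.7, "c"), (1.2, "d")]
print(micro_batches(stream))  # [['a', 'b'], ['c'], ['d']]
print(per_record(stream))     # [['a'], ['b'], ['c'], ['d']]
```

Batching amortizes per-record overhead, which favors throughput, while per-record handling favors latency.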

Spark's founders state that processing each micro-batch takes only 0.5 seconds on average. Next, there is MLlib, a distributed machine learning library that is nine times faster than the Apache Mahout library. The fourth library is GraphX, used for scalable processing of graph data.

Spark is often considered a real-time alternative to Hadoop. It can be, but like all components in the Hadoop ecosystem, it can also be used together with Hadoop and other prominent Big Data frameworks.

4. Hive. Big Data analytics framework.

Apache Hive was created by Facebook to combine the scalability of Hadoop, one of the most popular Big Data frameworks, with the convenience of SQL. It is an engine that turns SQL queries into chains of MapReduce tasks.

The engine includes such components as:

- Parser (which analyzes the incoming SQL queries);

- Optimizer (which optimizes the queries for efficiency);

- Executor (which launches tasks in the MapReduce framework).
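To show what "turning SQL into MapReduce tasks" means, here is a deliberately tiny Python sketch that supports a single query shape, `SELECT col, COUNT(*) FROM table GROUP BY col`. The regular-expression "parser", the in-memory table format, and the function names are hypothetical simplifications of Hive's parser and executor; the optimizer step is omitted entirely.

```python
import re
from collections import defaultdict

# Only this one query shape is supported in the toy example.
QUERY_PATTERN = re.compile(
    r"SELECT\s+(\w+)\s*,\s*COUNT\(\*\)\s+FROM\s+(\w+)\s+GROUP\s+BY\s+(\w+)",
    re.IGNORECASE,
)


def parse(sql: str) -> tuple[str, str]:
    """'Parser': extract the grouping column and the table name."""
    match = QUERY_PATTERN.fullmatch(sql.strip())
    if not match or match.group(1) != match.group(3):
        raise ValueError(f"unsupported query: {sql!r}")
    return match.group(1), match.group(2)


def execute(sql: str, tables: dict[str, list[dict]]) -> dict[str, int]:
    """'Executor': run the parsed query as a map step followed by a reduce step."""
    column, table = parse(sql)
    # Map: emit (group key, 1) for each row.
    mapped = [(row[column], 1) for row in tables[table]]
    # Shuffle + Reduce: group by key and sum the ones.
    counts: dict[str, int] = defaultdict(int)
    for key, one in mapped:
        counts[key] += one
    return dict(counts)


tables = {"page_views": [{"country": "US"}, {"country": "DE"}, {"country": "US"}]}
print(execute("SELECT country, COUNT(*) FROM page_views GROUP BY country", tables))
# {'US': 2, 'DE': 1}
```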

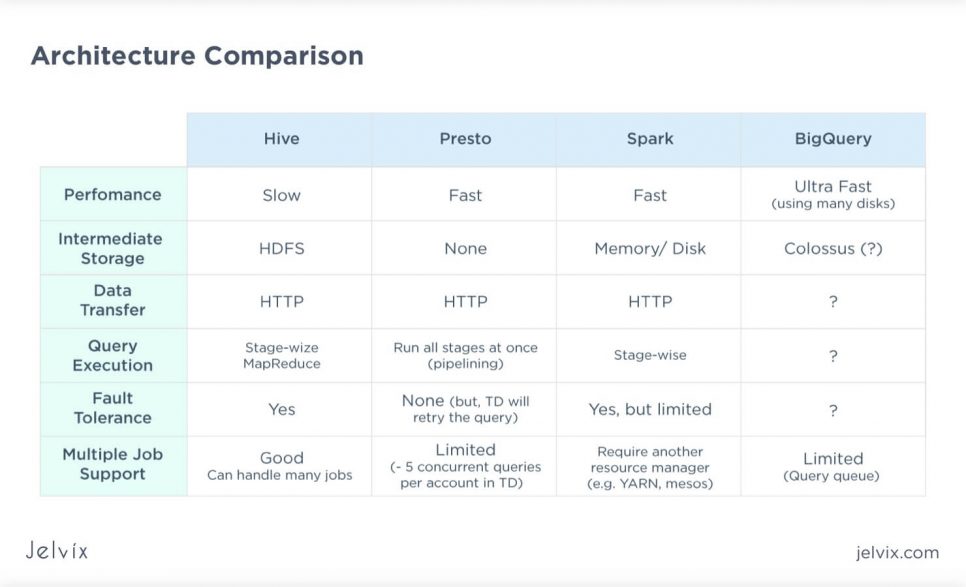

Hive can be integrated with Hadoop (as the server-side component) to analyze large data volumes. Here is a benchmark showing the speed of Hive on Tez against the competition (lower is better).

Hive remains one of the most used Big Data analytics frameworks ten years after its initial release.

Hive 3 was released by Hortonworks in 2018. It replaced MapReduce with Tez as its execution engine. It has machine learning capabilities and integrates with other popular Big Data frameworks. You can read our article to find out more about machine learning services.

However, some worry about the project's future after the recent Hortonworks and Cloudera merger, since Hive's main competitor, Apache Impala, is distributed by Cloudera.

5. Storm. Twitter's first Big Data framework

Apache Storm is another prominent solution, focused on working with large real-time data flows. The key features of Storm are scalability and the ability to recover quickly after downtime. You can work with Storm in Java, as well as Python, Ruby, and Fancy.

Storm features several elements that set it apart from its analogs. The first one is the Tuple, the key data representation element, which supports serialization. Then there is the Stream, which includes the schema that names the fields in the Tuple. The Spout receives data from external sources, forms Tuples out of it, and sends them to the Stream.

There is also the Bolt, a data processor, and the Topology, a package of elements that describes how they are connected. Combined, these elements help developers manage large flows of unstructured data.
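The relationships between these elements can be sketched in plain Python. The classes below are hypothetical stand-ins, not Storm's API (Storm's Java API uses classes such as `BaseRichSpout`, `BaseRichBolt`, and `TopologyBuilder`): a spout turns raw input into tuples, bolts transform the stream, and a topology wires them together. Everything runs in one process here, whereas Storm distributes spouts and bolts across a cluster.

```python
from collections import Counter
from typing import Callable, Iterable, Iterator

# A Storm Tuple is modeled here as a dict of named fields (the Stream's schema).
StormTuple = dict


class SentenceSpout:
    """Spout: reads from an external source and emits tuples into a stream."""

    def __init__(self, source: Iterable[str]) -> None:
        self.source = source

    def emit(self) -> Iterator[StormTuple]:
        for sentence in self.source:
            yield {"sentence": sentence}


def split_bolt(stream: Iterable[StormTuple]) -> Iterator[StormTuple]:
    """Bolt: splits each sentence tuple into one tuple per word."""
    for tup in stream:
        for word in tup["sentence"].split():
            yield {"word": word}


class CountBolt:
    """Stateful bolt: keeps a running count for every word it sees."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def __call__(self, stream: Iterable[StormTuple]) -> Iterator[StormTuple]:
        for tup in stream:
            self.counts[tup["word"]] += 1
            yield {"word": tup["word"], "count": self.counts[tup["word"]]}


def run_topology(spout: SentenceSpout, bolts: list[Callable]) -> list[StormTuple]:
    """Topology: wires the spout and bolts into one data flow and drains it."""
    stream: Iterable[StormTuple] = spout.emit()
    for bolt in bolts:
        stream = bolt(stream)
    return list(stream)


counter = CountBolt()
output = run_topology(SentenceSpout(["storm is fast", "storm scales"]), [split_bolt, counter])
print(output[-1])            # {'word': 'scales', 'count': 1}
print(dict(counter.counts))  # {'storm': 2, 'is': 1, 'fast': 1, 'scales': 1}
```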

Speaking of performance, Storm provides better latency than both Flink and Spark. However, it has worse throughput. Recently, Twitter (Storm's leading proponent) moved to a new framework, Heron. Storm is still used by big companies like Yelp, Yahoo!, Alibaba, and others, so it's still going to have a large user base and support in 2024.

6. Samza. Streaming processor made for Kafka

Apache Samza is a stateful stream processing Big Data framework that was co-developed with Kafka. Kafka provides data serving, buffering, and fault tolerance. The duo is intended for cases where quick single-stage processing is needed, and together with Kafka, Samza achieves low latency. Samza also keeps local state during processing, which provides additional fault tolerance.

Samza was designed for the Kappa architecture (a stream-processing-only pipeline) but can be used in other architectures. Samza uses YARN to negotiate resources, so it needs a Hadoop cluster to work; in return, you can rely on the features provided by YARN.
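Samza's fault-tolerant local state can be pictured as a key-value store whose every write is also appended to a changelog, which in Samza's case is a Kafka topic. The Python sketch below is a simplified, hypothetical model: a plain list stands in for the changelog topic, and `LocalStore` is not a Samza class. After a crash, the store is rebuilt by replaying the changelog.

```python
class LocalStore:
    """Toy key-value store that mirrors every write to a changelog."""

    def __init__(self, changelog: list[tuple[str, int]]) -> None:
        self.data: dict[str, int] = {}
        self.changelog = changelog  # stands in for a durable Kafka changelog topic

    def put(self, key: str, value: int) -> None:
        self.data[key] = value
        self.changelog.append((key, value))

    def get(self, key: str, default: int = 0) -> int:
        return self.data.get(key, default)

    @classmethod
    def restore(cls, changelog: list[tuple[str, int]]) -> "LocalStore":
        """Rebuild the state after a failure by replaying the changelog in order."""
        store = cls(changelog)
        for key, value in changelog:
            store.data[key] = value  # replay without appending again
        return store


def process(store: LocalStore, events: list[str]) -> None:
    """Stateful stream task: count events per user in local state."""
    for user in events:
        store.put(user, store.get(user) + 1)


changelog: list[tuple[str, int]] = []
store = LocalStore(changelog)
process(store, ["alice", "bob", "alice"])

# Simulate a crash: the in-memory store is lost, but the changelog survives.
recovered = LocalStore.restore(changelog)
print(recovered.data)  # {'alice': 2, 'bob': 1}
```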

This Big Data processing framework was developed at LinkedIn and is also used by eBay and TripAdvisor for fraud detection. A sizeable part of its code was used by Kafka to create a competing data processing framework, Kafka Streams. All in all, Samza is a formidable tool that is good at what it's made for. But can Kafka Streams replace it completely? Only time will tell.

7. Flink. A true hybrid Big Data processor.

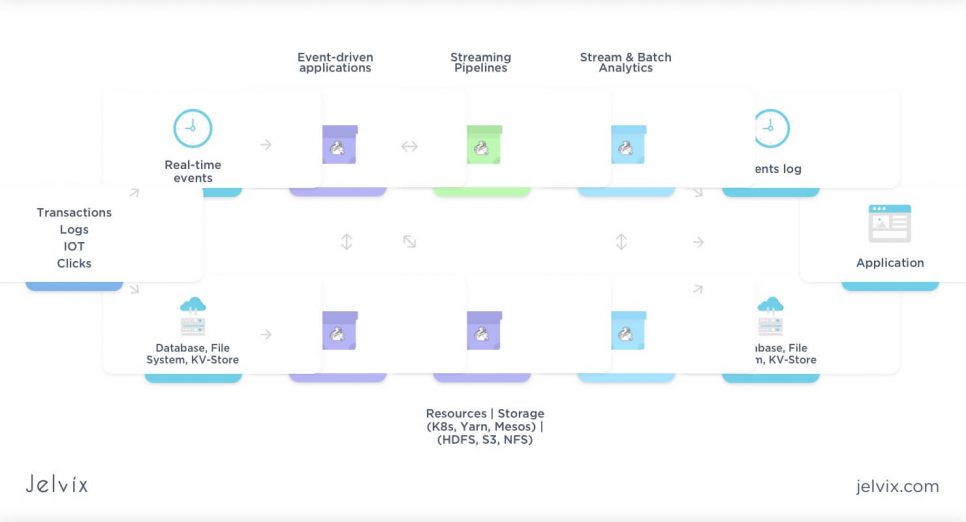

Apache Flink is a robust Big Data processing framework for stream and batch processing. First conceived as a part of a scientific experiment around 2008, it went open source around 2014. It has been gaining popularity ever since.

Flink has several interesting features and new impressive technologies under its belt. It uses stateful stream processing like Apache Samza. But it also does ETL and batch processing with decent efficiency.

Best for Lambda pipelines

It's an excellent choice for simplifying an architecture where both streaming and batch processing are required. It can extract timestamps from the streamed data to produce more accurate time estimates and better windowing of streaming data analysis. It also has machine learning capabilities.
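Event-time processing is the idea behind that timestamp extraction: records are grouped by when they happened rather than when they arrived. The following Python sketch assigns out-of-order events to tumbling windows by their embedded timestamps and finalizes a window once a watermark (the largest timestamp seen minus an allowed lateness) has passed its end. It is a conceptual model; the window size, the lateness bound, and all names are assumptions, and it does not use Flink's API.

```python
from collections import defaultdict
from typing import Iterable


def tumbling_event_time_windows(
    events: Iterable[tuple[int, str]], size: int = 10, max_lateness: int = 5
) -> dict[int, int]:
    """Count events per event-time window [start, start + size).

    `events` are (event_timestamp, key) pairs listed in arrival order.
    The watermark is the largest timestamp seen so far minus `max_lateness`;
    a window is finalized once the watermark reaches its end. Events that
    arrive for an already finalized window are dropped as too late.
    """
    open_windows: dict[int, int] = defaultdict(int)
    finalized: dict[int, int] = {}
    max_ts = None
    for ts, _key in events:
        start = ts - ts % size  # window assignment uses the event's own timestamp
        if start in finalized:
            continue  # late event: its window has already been emitted
        open_windows[start] += 1
        max_ts = ts if max_ts is None else max(max_ts, ts)
        watermark = max_ts - max_lateness
        for window_start in sorted(open_windows):
            if window_start + size <= watermark:
                finalized[window_start] = open_windows.pop(window_start)
    # End of input: flush whatever windows are still open.
    finalized.update(open_windows)
    return dict(sorted(finalized.items()))


# Events arrive out of order; (7, "f") shows up after its window has closed.
events = [(1, "a"), (12, "b"), (4, "c"), (16, "d"), (25, "e"), (7, "f")]
print(tumbling_event_time_windows(events))  # {0: 2, 10: 2, 20: 1}
```

Note that the out-of-order event `(4, "c")` still lands in the correct window because the watermark had not yet passed it; grouping by arrival time alone could not make that distinction.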

As part of the Hadoop ecosystem, it can be integrated into an existing architecture without any hassle. It offers compatibility with MapReduce and Storm, so you can run your existing applications on it. It also scales well for Big Data.

OK. But what is it good for?

Flink is a good fit for designing event-driven apps. You can enable checkpoints to preserve progress in case of a failure during processing. Flink also connects to Apache Zeppelin, a popular data visualization tool.
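Checkpointing can be illustrated with a toy job that periodically snapshots its input position together with its state, then resumes from the last snapshot after a failure instead of starting over. This single-process Python sketch rests on simplifying assumptions (a list as the replayable source, a dict as the snapshot store, a snapshot every few records); Flink's real mechanism takes consistent distributed snapshots using checkpoint barriers.

```python
from typing import Optional


class SimulatedCrash(Exception):
    """Raised to imitate a task failure in the middle of processing."""


def run_job(
    source: list[int], checkpoint: dict, interval: int = 3, crash_at: Optional[int] = None
) -> int:
    """Sum a replayable source, snapshotting (offset, state) every `interval` records.

    `checkpoint` plays the role of durable storage that survives a crash.
    """
    offset = checkpoint.get("offset", 0)  # resume from the last snapshot, if any
    total = checkpoint.get("total", 0)
    while offset < len(source):
        if offset == crash_at:
            raise SimulatedCrash(f"failure at offset {offset}")
        total += source[offset]
        offset += 1
        if offset % interval == 0:
            checkpoint.update(offset=offset, total=total)  # take a snapshot
    return total


records = [5, 1, 4, 2, 8, 3, 7]
snapshots: dict = {}
try:
    run_job(records, snapshots, crash_at=5)
except SimulatedCrash:
    pass
print(snapshots)                    # {'offset': 3, 'total': 10}
print(run_job(records, snapshots))  # 30, the same as sum(records)
```

The records at offsets 3 and 4 are processed twice, once before the crash and once after the restart, but because the state is rolled back to the same snapshot, they count only once in the final result.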

Alibaba used Flink to observe consumer behavior and search rankings on Singles' Day. As a result, sales increased by 30%. Financial giant ING used Flink to build fraud detection and user-notification applications. To read more on FinTech mobile apps, try our article on FinTech trends.

Flink is undoubtedly one of the new Big Data processing technologies to be excited about. However, there might be a reason not to use it: most of the tech giants haven't fully embraced Flink and have instead invested in their own Big Data processing engines with similar features, for instance, Google's Dataflow and Beam and Twitter's Apache Heron. Meanwhile, Spark and Storm continue to have sizable support and backing. All in all, Flink is a framework that is expected to grow its user base in 2024.

8. Heron. Will this streaming processor become the next big thing?

Apache Heron is one of the newer Big Data processing engines. Twitter developed it as a next-generation replacement for Storm. It is intended for real-time spam detection, ETL tasks, and trend analytics.

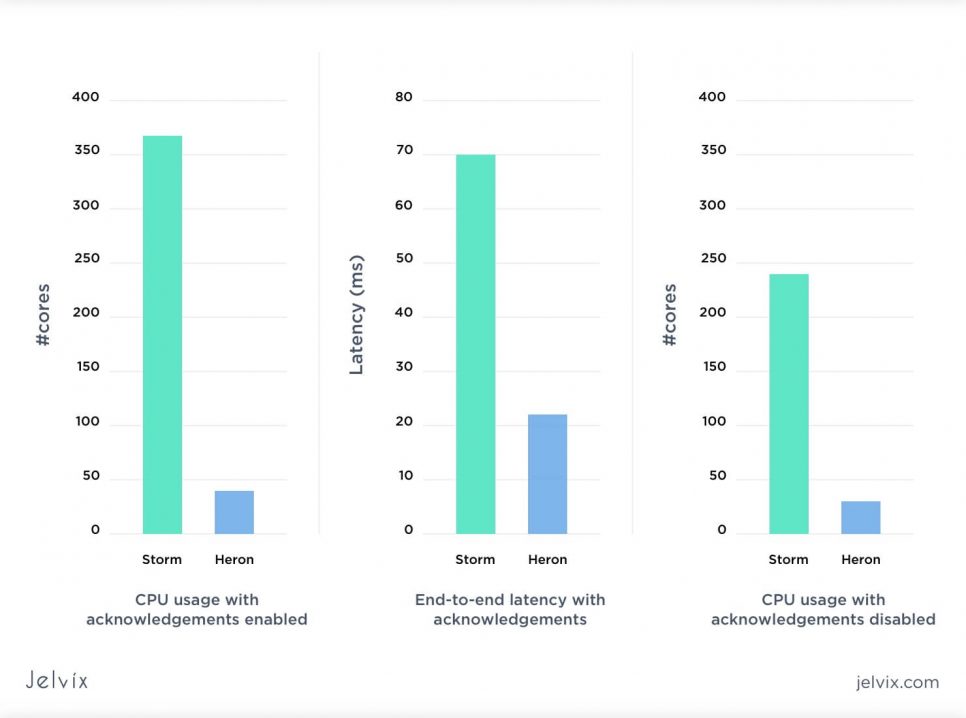

Apache Heron is fully backward compatible with Storm and offers an easy migration process. Its design goals include low latency, good and predictable scalability, and easy administration. The developers put great emphasis on process isolation for easier debugging and stable resource usage. Benchmarks from Twitter show a significant improvement over Storm.

This framework is still in development, so if you are looking for a technology to adopt early, this might be the one for you. With excellent compatibility with Storm and sturdy backing from Twitter, Heron is likely to become the next big thing soon. Some organizations have already adopted it, namely Microsoft and Stanford University.

9. Kudu. What use cases does this niche product have?

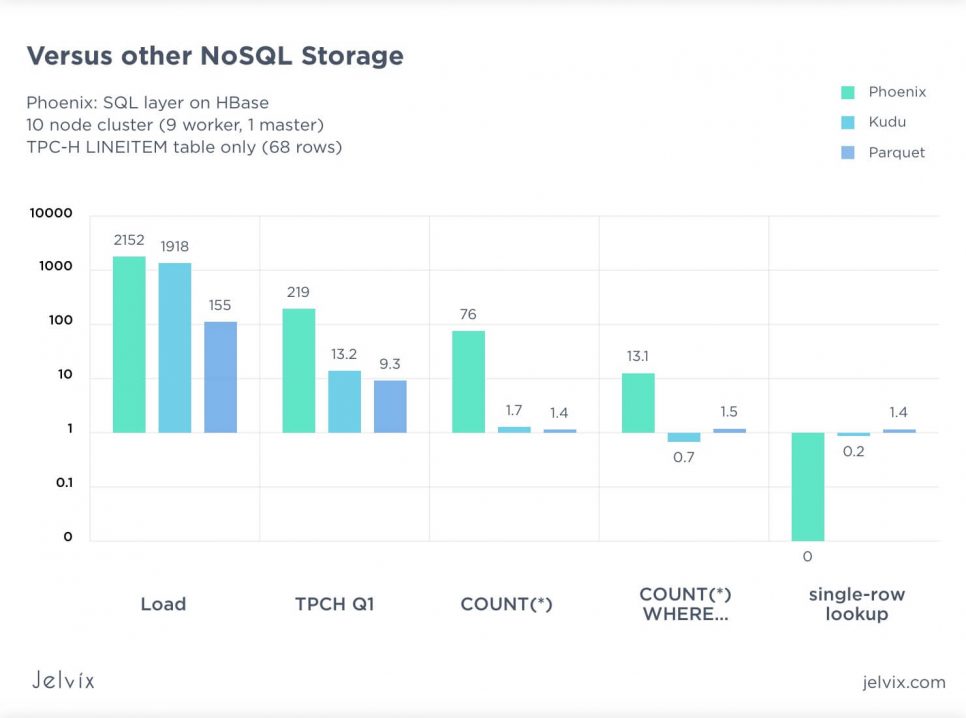

Apache Kudu is an exciting new storage component. It's designed to simplify some complicated pipelines in the Hadoop ecosystem. It is an SQL-like solution intended for a combination of random and sequential reads and writes.

Specialized random-access or sequential-access storage is still more efficient for its own purpose: HBase is about twice as fast for random-access scans, while HDFS with Parquet is comparable for batch tasks.

Before Kudu, there was no simple way to do both random and sequential reads with decent speed and efficiency, especially in environments that require fast, constant data updates. Kudu is intended to integrate with most other Big Data frameworks of the Hadoop ecosystem, especially Kafka and Impala.
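The access pattern Kudu targets, fast point lookups and updates by primary key combined with ordered range scans over the same table, can be modeled with a sorted key list. The Python sketch below is a hypothetical in-memory illustration of that pattern only; Kudu itself is a distributed columnar store that partitions tables into tablets, and none of these names are part of its API.

```python
import bisect
from typing import Any, Iterator, Optional


class SortedTable:
    """Rows kept sorted by primary key: supports upserts, point reads, and range scans."""

    def __init__(self) -> None:
        self.keys: list[int] = []
        self.rows: dict[int, dict[str, Any]] = {}

    def upsert(self, key: int, row: dict[str, Any]) -> None:
        """Random write: insert a new key or overwrite an existing row."""
        if key not in self.rows:
            bisect.insort(self.keys, key)
        self.rows[key] = row

    def get(self, key: int) -> Optional[dict[str, Any]]:
        """Random read: direct lookup by primary key."""
        return self.rows.get(key)

    def scan(self, start: int, end: int) -> Iterator[tuple[int, dict[str, Any]]]:
        """Sequential read: yield rows with start <= key < end in key order."""
        lo = bisect.bisect_left(self.keys, start)
        hi = bisect.bisect_left(self.keys, end)
        for key in self.keys[lo:hi]:
            yield key, self.rows[key]


table = SortedTable()
for ts, price in [(3, 101.5), (1, 100.0), (2, 100.7)]:
    table.upsert(ts, {"price": price})
table.upsert(2, {"price": 99.9})         # a fast in-place update
print(table.get(2))                      # {'price': 99.9}
print([k for k, _ in table.scan(1, 3)])  # [1, 2]
```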

Projects built on Kudu

Kudu is currently used for market data fraud detection on Wall Street. It turned out to be particularly well suited to handling streams of diverse data with frequent updates. It is also great for real-time ad analytics, as it is plenty fast and provides excellent data availability.

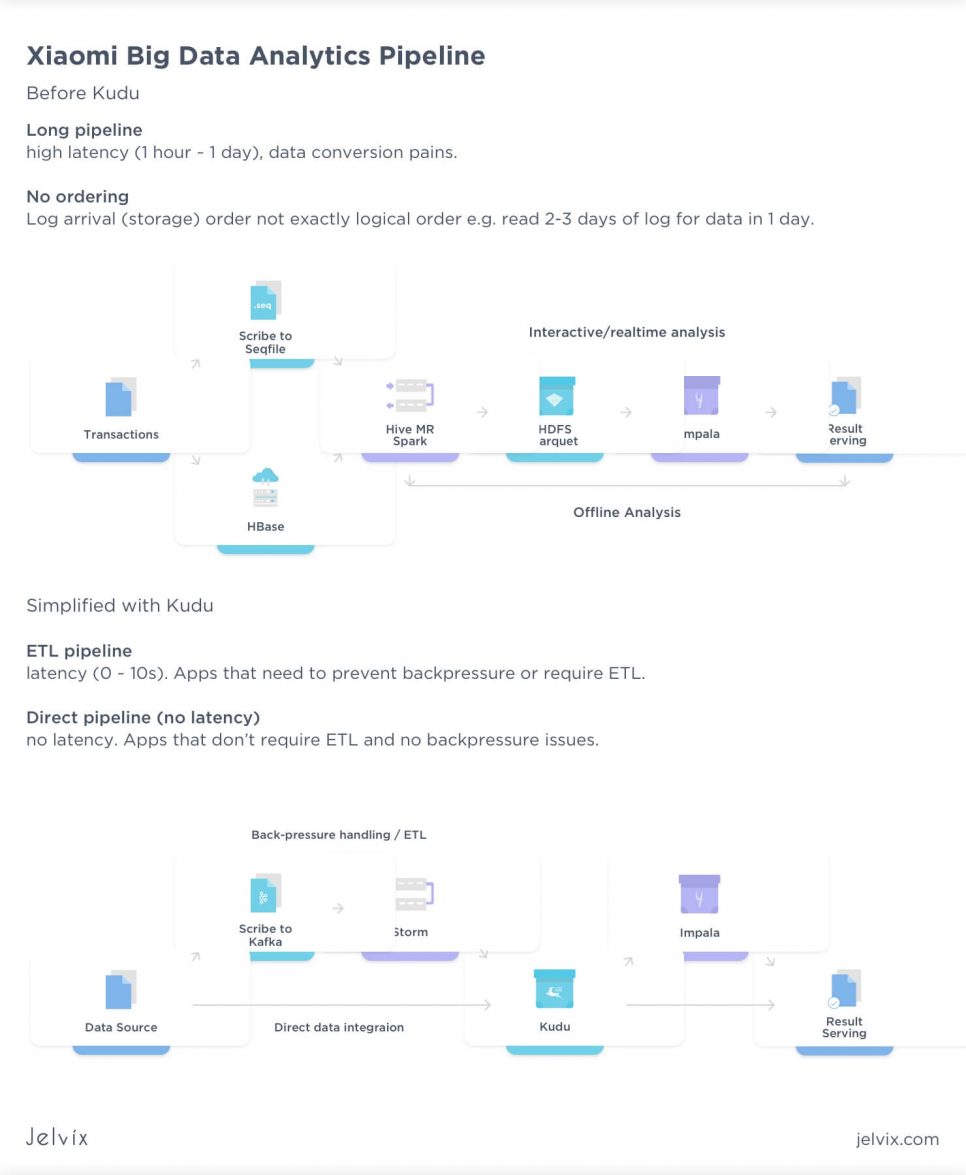

Chinese cell phone giant Xiaomi picked Kudu for collecting error reports, mainly because of its ability to simplify and streamline the data pipeline and improve query and analytics speeds.

10. Presto. Big Data query engine for small data queries

Presto is a faster, more flexible alternative to Apache Hive for smaller tasks. Developed at Facebook, Presto was released as open source in 2013. It's an adaptive, flexible query tool for multi-tenant data environments with different storage types.

Industry giants such as Amazon and Netflix invest in its development or contribute to this Big Data framework. Presto has a federated structure, a large variety of connectors, and a multitude of other features.
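Presto's federated design means that one query can read from several data sources through separate connectors and combine the results inside the query engine. The Python sketch below mimics that idea with two hypothetical "connectors" (one playing a Hive table, the other a MySQL table) and a hash join performed by the engine. The `Connector` class, the table contents, and the join helper are assumptions for illustration and are not Presto's connector SPI.

```python
from typing import Iterable, Iterator


class Connector:
    """Hypothetical connector exposing the tables of one data source."""

    def __init__(self, name: str, tables: dict[str, list[dict]]) -> None:
        self.name = name
        self.tables = tables

    def scan(self, table: str) -> Iterator[dict]:
        yield from self.tables[table]


def hash_join(left: Iterable[dict], right: Iterable[dict], key: str) -> list[dict]:
    """Engine-side join: build a hash table on the right input, then probe it with the left."""
    index: dict = {}
    for right_row in right:
        index.setdefault(right_row[key], []).append(right_row)
    return [
        {**left_row, **right_row}
        for left_row in left
        for right_row in index.get(left_row[key], [])
    ]


# Two independent data sources registered with the engine under catalog names.
catalogs = {
    "hive": Connector("hive", {"orders": [
        {"user_id": 1, "amount": 30},
        {"user_id": 2, "amount": 12},
        {"user_id": 1, "amount": 8},
    ]}),
    "mysql": Connector("mysql", {"users": [
        {"user_id": 1, "name": "Ann"},
        {"user_id": 2, "name": "Bo"},
    ]}),
}

# Conceptually, one query that joins a Hive table with a MySQL table.
rows = hash_join(catalogs["hive"].scan("orders"), catalogs["mysql"].scan("users"), "user_id")
print(rows[0])  # {'user_id': 1, 'amount': 30, 'name': 'Ann'}
```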

One of the first design requirements was the ability to analyze smallish subsets of data (in the 50 GB to 3 TB range). It is handy for descriptive analytics at that scale. To read more on data analysis, have a look at our article. Presto also has batch ETL functionality, but it is arguably not very efficient at it, so you shouldn't rely on these functions.


How to choose Big Data technology?

A tricky question. To sum up, it’s safe to say that there is no single best option among the data processing frameworks. Each one has its pros and cons. Also, the results provided by some solutions strictly depend on many factors.

In our experience, hybrid solutions with different tools work the best. The variety of offers on the Big Data framework market allows a tech-savvy company to pick the most appropriate tool for the task.

Which is the most common Big Data framework for machine learning?

Clearly, Apache Spark is the winner. Its H2O Sparkling Water integration is the most prominent solution yet. However, other Big Data processing frameworks have their own ML implementations, so you can pick the one that best fits the task at hand. If you want to find out more about applied AI, read our article on AI in finance.

Do you still want to know what framework is best for Big Data?

We have already answered this question above. But for those still interested in which Big Data frameworks we consider the most useful, we have divided them into three categories:

- Storm is the best for streaming: it is slower than Heron but has more development behind it;

- Spark is the best for batch tasks: it has useful features and can handle other workloads too;

- Flink is the best hybrid: it was developed for both stream and batch processing and has a relevant feature set.

However, we stress it again: the best framework is the one appropriate for the task at hand.

Although there are numerous frameworks out there today, only a few are truly popular and in demand among developers. In this article, we have considered 10 of the top Big Data frameworks and libraries, which we expect to hold their positions in 2024.

The Big Data software market is undoubtedly a competitive and slightly confusing area. There is no lack of new and exciting products as well as innovative features. We hope that this Big Data frameworks list can help you navigate it.

What Big Data software does your company use? Here at Jelvix, we prefer a flexible approach and employ a large variety of data technologies. We take a tailored approach to our clients and provide state-of-the-art solutions. Contact us if you want to know more!
