Once upon a time, collecting and processing data took a huge amount of time, but modern technology has made it possible to build entire toolsets that streamline the work. The modern data stack is becoming easier to fit into familiar business processes, so many companies invest heavily in it. For example, Netflix spent $9.6 million per month on AWS data storage in 2019.

Useful as the technology is, it has stumbling blocks, and anyone whose business relies on a data engineering tech stack needs to consider them. In this article, you will learn the basics of the technology, its key characteristics, and its evolution milestones. We will also discuss example platforms, potential problems, and the future of the stack in general.

What Is a Modern Data Stack? Explanation And Fundamentals

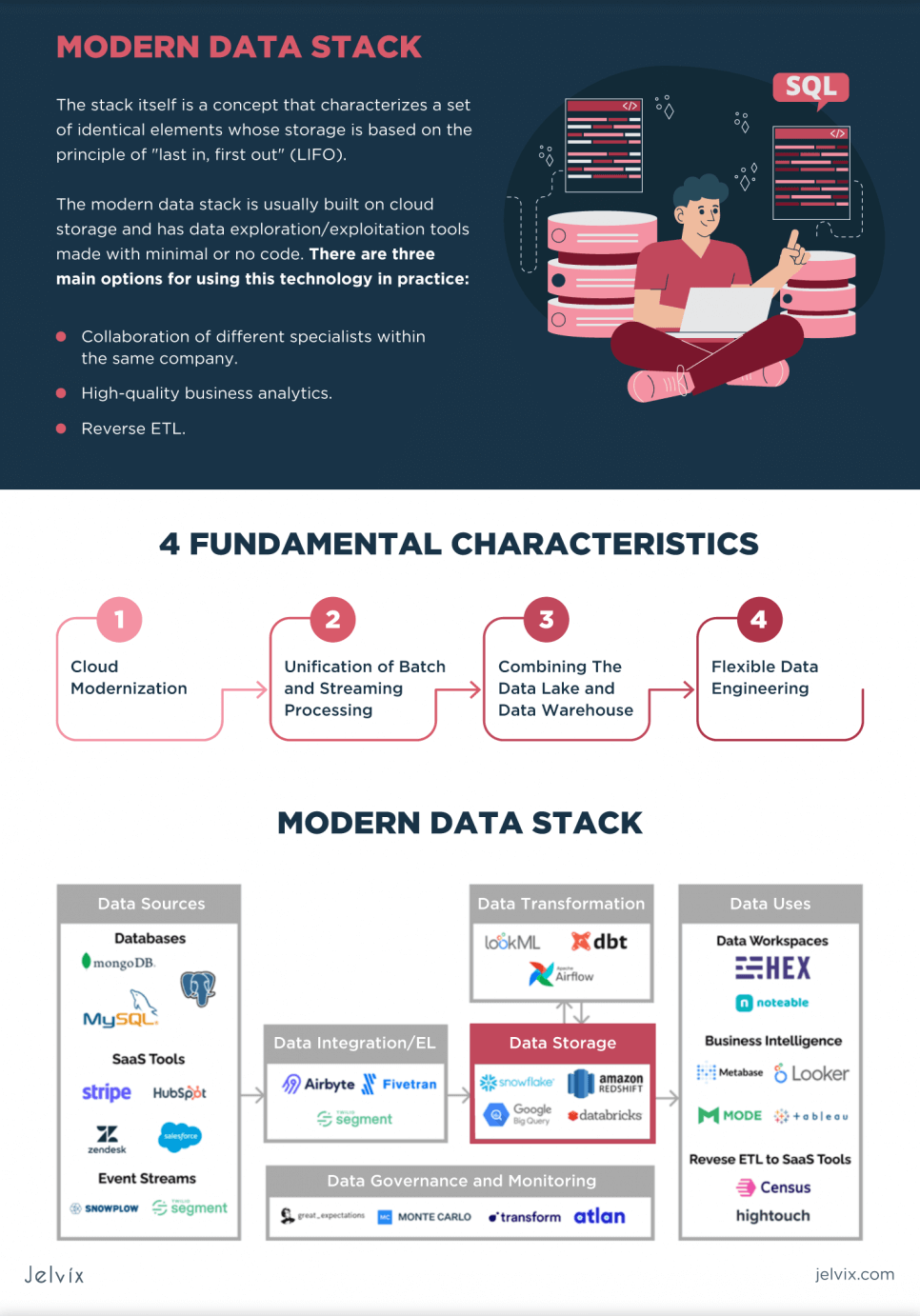

Let’s start with what a data stack is. In computer science, a stack is a data structure that stores elements on the "last in, first out" (LIFO) principle, but a data stack uses the word in a different sense: a layered set of tools, each handling one part of the data lifecycle. Over the past few decades, data has become such a valuable resource that it is now essential to sustaining the economy. Data must be collected efficiently, stored appropriately, processed, and analyzed. Data stacks emerged to cover these steps and became the main tool for data management.

The modern data stack is usually built on cloud storage and offers low-code or no-code tools for exploring and using data. There are three main use cases for this technology in practice:

- Collaboration between specialists within the same company. For example, the CEO needs a complete picture of the organization, the support team needs customer data, and the marketing department needs the fullest possible information on metrics. Shared access to all relevant data improves collaboration between departments and teams.

- High-quality business analytics. The technology makes data understandable and accessible to those who make major decisions. This results in a more thorough analysis of the information.

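- Reverse ETL. ETL (Extract-Transform-Load) and ELT (Extract-Load-Transform) are data integration processes that pull information from a third-party source, transform it, and save it in a central store for easy handling later. Reverse ETL runs in the opposite direction: it copies the modeled data from that store back into operational tools such as a CRM or a support system.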
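To make the direction of each flow concrete, here is a minimal Python sketch. It assumes an in-memory SQLite database standing in for the warehouse, a hard-coded list standing in for the third-party source, and a plain dictionary standing in for an operational tool such as a CRM; all names (`customer_spend`, `lifetime_value`, and so on) are hypothetical, and the code does not reflect the API of any ETL or reverse ETL product.

```python
import sqlite3

# Hypothetical third-party records (e.g., orders pulled from a payment API).
SOURCE_ORDERS = [
    {"customer": "alice", "amount_cents": 1250},
    {"customer": "bob", "amount_cents": 300},
    {"customer": "alice", "amount_cents": 700},
]


def extract():
    """Extract: fetch raw records from the source."""
    return list(SOURCE_ORDERS)


def transform(rows):
    """Transform: total spend per customer, converted from cents to dollars."""
    totals = {}
    for row in rows:
        totals[row["customer"]] = totals.get(row["customer"], 0) + row["amount_cents"]
    return [(name, cents / 100) for name, cents in sorted(totals.items())]


def load(conn, rows):
    """Load: write the transformed rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer_spend (customer TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO customer_spend VALUES (?, ?)", rows)
    conn.commit()


def reverse_etl(conn, crm):
    """Reverse ETL: push modeled warehouse data back into an operational tool."""
    query = "SELECT customer, total FROM customer_spend ORDER BY customer"
    for customer, total in conn.execute(query):
        crm.setdefault(customer, {})["lifetime_value"] = total


warehouse = sqlite3.connect(":memory:")  # stands in for the warehouse
load(warehouse, transform(extract()))    # ETL: source -> warehouse
crm = {}                                 # stands in for a CRM
reverse_etl(warehouse, crm)              # reverse ETL: warehouse -> CRM
print(crm)
```

In an ELT variant of the same sketch, the raw rows would be loaded first and the per-customer totals computed inside the warehouse with SQL.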

The technology is especially relevant for companies in the financial and medical sectors, where large volumes of data must be processed and stored securely, as in the 24/7 patient monitoring software developed by Jelvix. Today, however, the data stack is used in almost any industry that needs data management.

Another example of this technology in use is data warehouse (DWH) design for CI/CD projects on Airflow. When working with clients, Jelvix specialists use various dimensional modeling techniques, including slowly changing dimensions, dimension hierarchies, advanced fact tables, and advanced dimension table techniques.
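As an illustration of the first technique, the sketch below shows a generic Type 2 slowly changing dimension, where a change to an attribute closes the current row and appends a new version instead of overwriting history. It is a minimal in-memory sketch under assumed names (`CustomerDimRow`, `apply_scd2`, a `city` attribute), not Jelvix's implementation; in a real warehouse the same logic is usually expressed as a SQL `MERGE` or an update-plus-insert.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class CustomerDimRow:
    customer_id: int
    city: str
    valid_from: date
    valid_to: Optional[date]  # None while this version is current
    is_current: bool


def apply_scd2(dim: List[CustomerDimRow], customer_id: int, new_city: str,
               change_date: date) -> None:
    """Type 2 SCD: expire the current version and append a new one on change."""
    current = next(
        (r for r in dim if r.customer_id == customer_id and r.is_current), None
    )
    if current is not None and current.city == new_city:
        return  # value unchanged: keep the existing version
    if current is not None:
        current.valid_to = change_date
        current.is_current = False
    dim.append(CustomerDimRow(customer_id, new_city, change_date, None, True))


dim: List[CustomerDimRow] = []
apply_scd2(dim, 1, "Berlin", date(2021, 1, 1))
apply_scd2(dim, 1, "Berlin", date(2021, 6, 1))   # no change, no new version
apply_scd2(dim, 1, "Warsaw", date(2022, 3, 15))  # old version closed, new one added
for row in dim:
    print(row)
```

Because old versions keep their validity ranges, facts recorded in 2021 can still be joined to the Berlin version of the customer.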

And for a deeper understanding of the technology, let’s explore its four fundamental characteristics.

Cloud Modernization

For a technology to be considered part of the modern data stack, it's not enough to be cloud-based, cloud-managed, or cloud-hosted: it must be cloud-native. For example, Amazon EMR and Azure Databricks fit poorly into a modern data stack because they are cloud-based or cloud-managed, whereas Snowflake is cloud-native, which improves its performance and reliability.

Unification of Batch and Streaming Processing

Unifying batch and stream processing has been the main direction of the technology's evolution in recent years. Hadoop pioneered massive-scale data processing. Specialists then adopted Spark, which pushed MapReduce, Hadoop's built-in processing engine, into the background. Storm, in turn, popularized streaming data processing. These innovations helped unify batch and stream processing, removing the need for separate systems or a lambda architecture, which maintains parallel batch and streaming pipelines.
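To illustrate what unification means in practice, the minimal sketch below defines a transformation once and applies it to both a bounded batch and an unbounded stream. It uses plain Python generators as a stand-in and is not the Spark or Flink API; the event fields and the 100-unit threshold are hypothetical. In a lambda architecture, by contrast, this logic would be written and maintained twice, once per pipeline.

```python
import itertools
from typing import Iterable, Iterator


def flag_large_payments(events: Iterable[dict]) -> Iterator[dict]:
    """Single transformation shared by the batch and the streaming path."""
    for event in events:
        yield {**event, "large": event["amount"] >= 100}


# Batch: a bounded dataset that is fully available up front.
batch = [{"id": 1, "amount": 40}, {"id": 2, "amount": 250}]
batch_result = list(flag_large_payments(batch))


def payment_stream() -> Iterator[dict]:
    """Streaming: an unbounded source that yields events one at a time."""
    for i in itertools.count(1):
        yield {"id": i, "amount": i * 30}


# The same function consumes the stream lazily; islice stops after five
# events so that the example terminates.
stream_result = list(itertools.islice(flag_large_payments(payment_stream()), 5))
```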

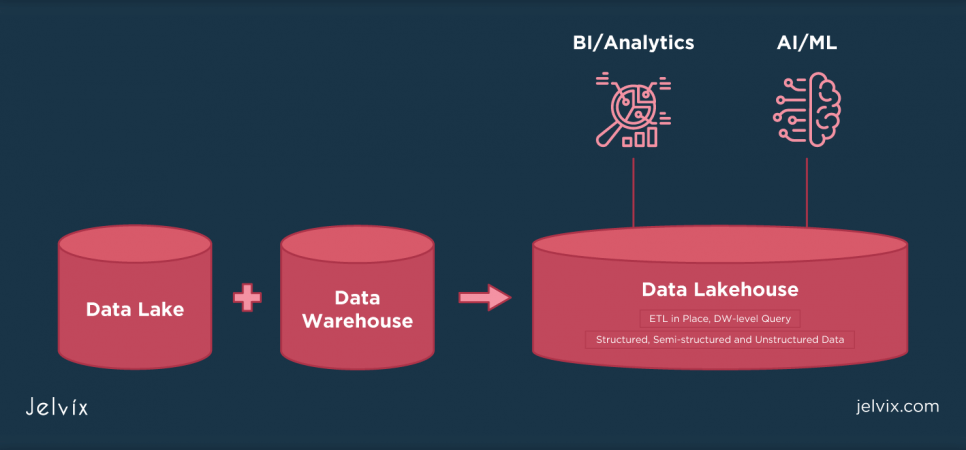

Combining The Data Lake and Data Warehouse

A data lake supports structured, semi-structured, and unstructured data, but queries over it are slow. A data warehouse delivers high performance, but only for structured data. The modern data stack combines the two in the data lakehouse, which aims to offer flexibility and high performance at the same time.
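The core idea the lakehouse builds on can be sketched in a few lines: raw, semi-structured records are kept exactly as they arrive (the lake side), and a typed, table-like schema is applied when the data is read (the warehouse side). This is a toy under stated assumptions, with JSON records and a hypothetical three-column schema; production lakehouses rely on table formats such as Delta Lake for schema enforcement, transactions, and fast queries.

```python
import json

# The "lake": raw records stored as-is, with varying shapes.
RAW_LAKE = [
    '{"user": "alice", "event": "login", "device": {"os": "ios"}}',
    '{"user": "bob", "event": "purchase", "amount": 42.5}',
    '{"user": "carol", "event": "login"}',
]

# Hypothetical schema, applied at read time rather than at write time.
SCHEMA = {"user": str, "event": str, "amount": float}


def read_with_schema(raw_lines, schema):
    """Project each raw record onto a fixed, typed schema (missing fields -> None)."""
    table = []
    for line in raw_lines:
        record = json.loads(line)
        row = {}
        for column, col_type in schema.items():
            value = record.get(column)
            row[column] = col_type(value) if value is not None else None
        table.append(row)  # fields outside the schema (e.g. "device") are dropped
    return table


rows = read_with_schema(RAW_LAKE, SCHEMA)
purchases = sum(r["amount"] for r in rows if r["event"] == "purchase")
```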

Flexible Data Engineering

Platforms built on the modern data stack are flexible services that support every stage of complex data processing:

- integration;

- storage;

- processing;

- analytics and business intelligence;

- observability;

- management, and so on.

For instance, Spark and Flink handle data processing, Fivetran and Airbyte handle ELT, Census handles reverse ETL, and Databricks Delta Lake and Snowflake can be used for storage and queries.

How Did the Modern Data Stack Start?

Before the data stack took on its familiar form, it went through three stages of evolution. They are sometimes referred to as Cambrian Explosion I (2012 to 2016), Rollout (2016 to 2020), and Cambrian Explosion II (2020 to present).

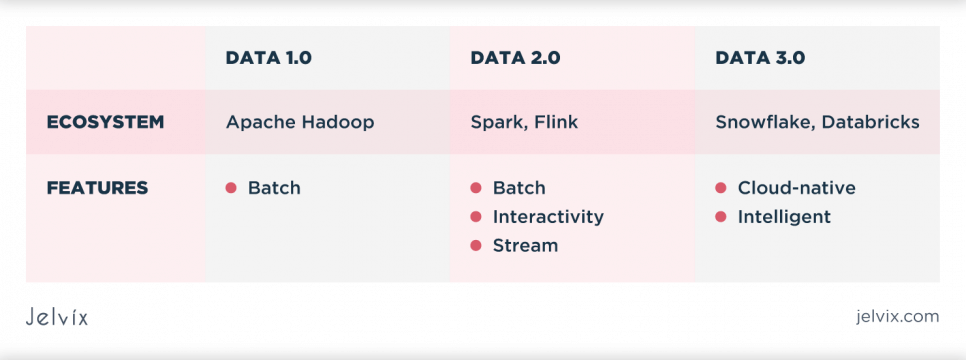

That said, active development of the field began well before 2012, back in the nineties, in a period known as Data 1.0. The period from the early 2000s to 2020 is called Data 2.0, and the technology that data-driven enterprises have actively used since 2020 is called Data 3.0. Let's discuss each stage in more detail.

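| | Data 1.0 | Data 2.0 | Data 3.0 |
|---|---|---|---|
| Ecosystem | Apache Hadoop | Spark, Flink | Snowflake, Databricks |
| Features | Batch processing with MapReduce | Real-time big data processing; Kafka and Kinesis for data collection | Cloud-native; security, high scalability, ease of use |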

Data 1.0

Initially, Hadoop was a tool for storing data and running MapReduce jobs. It later grew into a large stack of technologies related to big data processing, not only through MapReduce. The ecosystem has remained focused on batch processing, even though cloud offerings exist (Azure HDInsight and Azure Databricks).
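The MapReduce model behind Hadoop's batch processing can be shown with the classic word-count example. The sketch below simulates the map, shuffle, and reduce phases in a single Python process; in Hadoop, map and reduce tasks run in parallel across cluster nodes and the framework performs the shuffle, and this code is not Hadoop's Java API. The input splits are hypothetical.

```python
from collections import defaultdict
from itertools import chain


def map_phase(split):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine each key's values into a single result."""
    return {key: sum(values) for key, values in groups.items()}


splits = ["big data needs big tools", "data tools evolve"]
mapped = chain.from_iterable(map_phase(s) for s in splits)
counts = reduce_phase(shuffle_phase(mapped))
```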

Data 2.0

The second stage was defined by real-time big data processing. Hadoop faded into the background, making way for Spark and Flink. Interestingly, Spark originally specialized in batch processing and Flink in streaming, although both systems now support both modes. For data collection specifically, Apache Kafka and Amazon Kinesis were the most popular tools at this stage.
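Kafka, and Kinesis in a similar form, is built around an append-only log that each consumer reads at its own position (an offset in Kafka, a sequence number in Kinesis). The toy sketch below models a single in-memory partition to show why independent consumers can read the same stream at different speeds. The class and method names are hypothetical and are not the Kafka or Kinesis client API.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionLog:
    """Append-only log: records are only ever appended, never modified."""
    records: list = field(default_factory=list)

    def append(self, record) -> int:
        self.records.append(record)
        return len(self.records) - 1  # offset of the new record

    def read(self, offset: int, max_records: int = 10) -> list:
        return self.records[offset:offset + max_records]


@dataclass
class Consumer:
    """Each consumer tracks its own offset, so consumers read independently."""
    log: PartitionLog
    offset: int = 0

    def poll(self, max_records: int = 10) -> list:
        batch = self.log.read(self.offset, max_records)
        self.offset += len(batch)  # advance past what was just read
        return batch


log = PartitionLog()
for reading in ["temp=21", "temp=22", "temp=24"]:
    log.append(reading)

analytics = Consumer(log)
alerts = Consumer(log)
first = analytics.poll(max_records=2)  # analytics reads two records
everything = alerts.poll()             # alerts reads all three on its own
rest = analytics.poll()                # analytics resumes from its own offset
```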

Data 3.0

The modern data stack, also called Data 3.0, revolves around entire clouds of data. Its main features include security, high scalability, ease of use, and, of course, cloud-nativity. This ecosystem is driven by Snowflake, Databricks, and the leading cloud providers:

- AWS;

- Azure;

- GCP.

The range of capabilities this technology offers is remarkable and lets it find applications in most areas. Specialists can use cloud storage, query data, run business intelligence, process huge amounts of data quickly, and manage information more rationally.

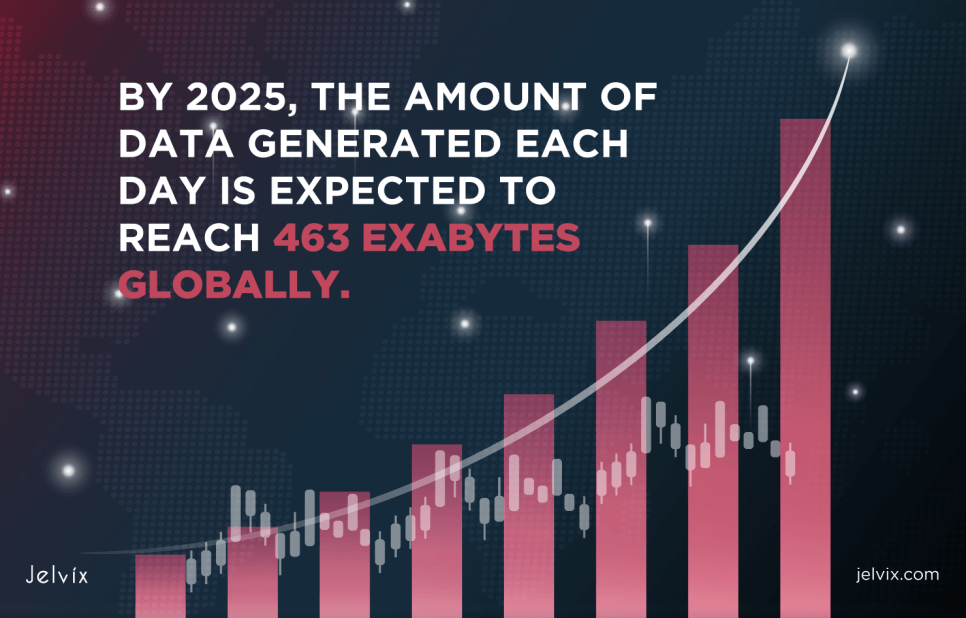

Given Raconteur’s 2020 prediction that 463 exabytes of data will be generated every day by 2025, the data management stack will likely keep evolving, either within Cambrian Explosion II or in an entirely new stage.

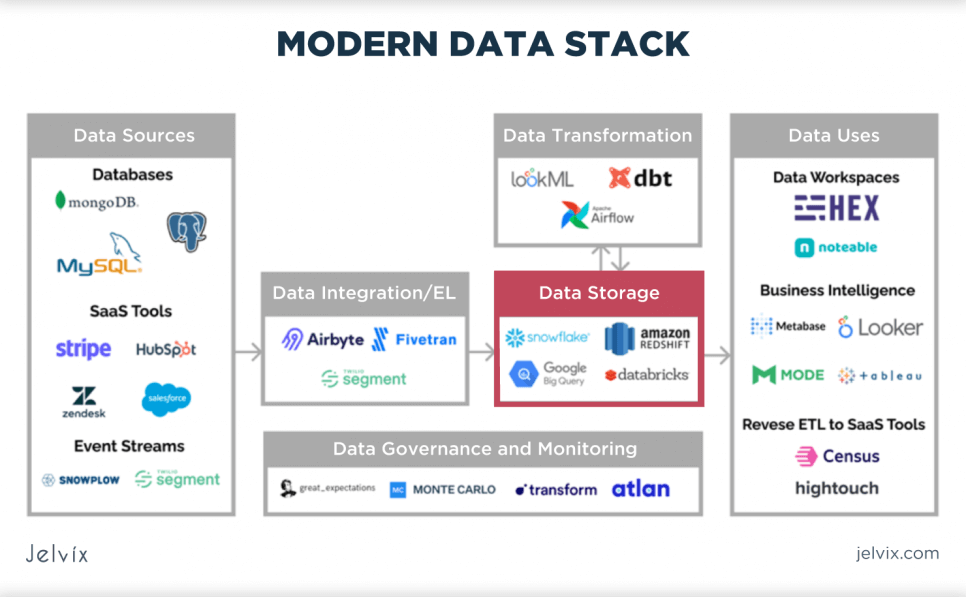

Modern Data Platforms

Before moving on to the problems the data stack may face and its future development, it is worth touching on modern platforms. As a rule, they consist of technology blocks, each performing a specific function. For example:

- Source (where does the data come from?): logs, third-party API services, storage of company files and objects, OLTP databases.

- Receiving and transporting (automated process): Fivetran, Hevo Data, Stitch, Pulsar, and Upsolver.

- Storage (in data lakes, warehouses, or lakehouses): Amazon S3, Google Cloud Storage, Redshift, Azure Data Lake Storage Gen2, and Snowflake.

- Query and Processing: Databricks, Pandas, Dask, Confluent, and Flink.

- Transformation: AWS Glue, dbt, Matillion, and Domo.

- Analytics and data output: Looker, Mode, and Tableau.

In general, a modern platform should be flexible, scalable, and affordable, and it should be able to adopt technologies that meet the growing needs of today's clients.
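One way to picture these building blocks is as a chain of swappable stages, where each block depends only on the previous block's output. The sketch below models each block as a plain Python function so that replacing one tool means replacing one function. The log records, block names, and the `run_platform` helper are hypothetical stand-ins, not the interfaces of the tools listed in this section.

```python
from typing import Callable, Dict, List

Rows = List[dict]
LAKE: Rows = []  # in-memory stand-in for object storage


def logs_source(_: None) -> Rows:
    """Source block: hard-coded application logs (hypothetical data)."""
    return [
        {"level": "ERROR", "service": "api"},
        {"level": "INFO", "service": "api"},
        {"level": "ERROR", "service": "billing"},
    ]


def copy_ingest(rows: Rows) -> Rows:
    """Ingestion block: move records unchanged, as an EL tool would."""
    return [dict(r) for r in rows]


def lake_storage(rows: Rows) -> Rows:
    """Storage block: append to the in-memory lake and pass the data on."""
    LAKE.extend(rows)
    return rows


def error_counts(rows: Rows) -> Dict[str, int]:
    """Transformation block: count errors per service."""
    counts: Dict[str, int] = {}
    for r in rows:
        if r["level"] == "ERROR":
            counts[r["service"]] = counts.get(r["service"], 0) + 1
    return counts


def text_report(counts: Dict[str, int]) -> str:
    """Analytics block: a text line standing in for a BI dashboard."""
    return ", ".join(f"{svc}: {n}" for svc, n in sorted(counts.items()))


def run_platform(blocks: Dict[str, Callable]) -> object:
    """Run the blocks in insertion order; each sees only the previous output."""
    data = None
    for block in blocks.values():
        data = block(data)
    return data


platform = {
    "source": logs_source,
    "ingestion": copy_ingest,
    "storage": lake_storage,
    "transformation": error_counts,
    "analytics": text_report,
}
report = run_platform(platform)

# Swapping one tool for another only touches its own block.
platform["analytics"] = lambda counts: sum(counts.values())
total_errors = run_platform(platform)
```

The design choice here is loose coupling: because each block only sees its predecessor's output, the analytics block can be replaced without changing ingestion, storage, or transformation.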

What Problems Can It Face?

Although companies are actively adopting data management systems to track customer behavior and optimize business processes, the technology's development has hardly been problem-free. Despite the giant leap of the Cambrian Explosions that produced Data 3.0 as we know it, three major hurdles are worth keeping in mind when predicting the future of the big data technology stack.

Complexity

Complexity is the price the data stack pays for its flexibility, openness, and inclusiveness. For all its versatility, its components are hard to integrate quickly and easily because they differ in many respects:

- programming languages;

- protocols;

- rules and infrastructure.

There is a risk that the technology will simply come full circle. Remember Hadoop: the ecosystem had dozens of useful tools for various purposes, each of which needed regular updates, and customers disliked both the constant upgrades and the need to integrate the interfaces.

The Huge Size of Cloud Storage

As discussed, the modern data stack revolves around cloud data. The second problem is that cloud silos are hard to avoid when a company uses several providers. In other words, engineers will move away from organizational data warehouses toward giant cloud silos, and they will have to deal with service-level agreements and make sure the system meets other critical requirements. On the other hand, this development will likely bring multi-cloud metadata and orchestration, which open up entirely new opportunities.

Far Behind in Data Science

The third difficulty is that the modern data stack focuses primarily on data engineering, while its data science tooling lags far behind. Data is now at the core of artificial intelligence and machine learning, yet capabilities for managing features, parameters, and models remain underdeveloped, and their importance should not be underestimated.

Because of the large gap between engineering and science, experts are unsure whether AI-specific storage can be sensibly combined with business intelligence data marts, or whether a unified catalog can make data, models, signals, and parameters easy to track.

AI development is already introducing data-centric technologies, so we will probably soon find out whether this problem can be solved effectively.

Key Prospects: the Future of the Modern Data Stack

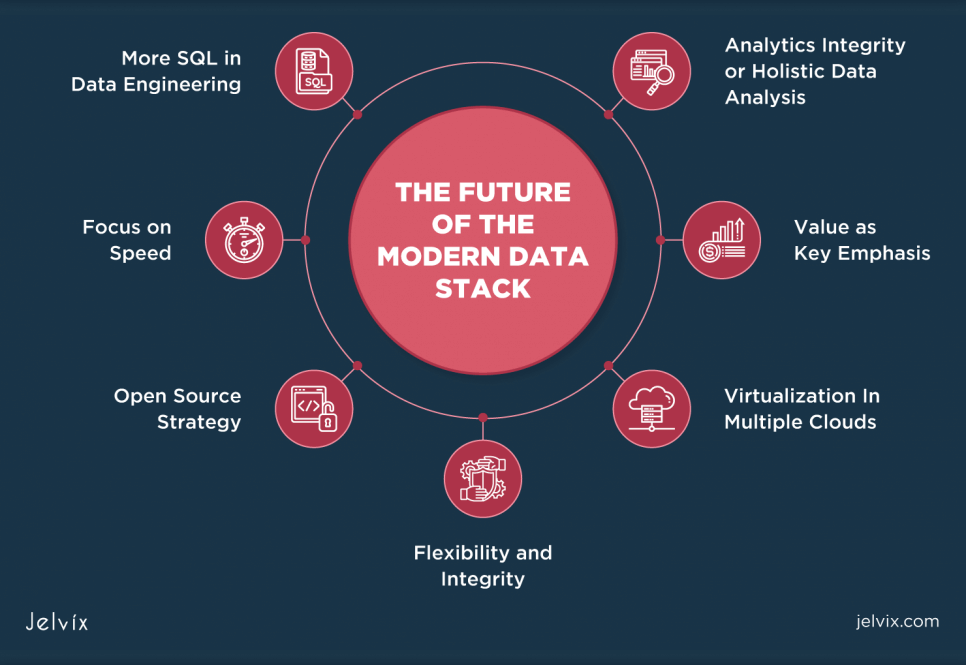

Having covered the main characteristics, the stages of evolution, and the possible obstacles, we can move on to the future of the data stack. Can we expect Data 4.0 or even Data 10.0? In general, yes. Several promising paths are emerging: integrated analytics, multi-cloud virtualization, open data platforms, and other directions.

Analytics Integrity or Holistic Data Analysis

Although the existing stack already offers plenty of options for data analysis, it still has room to grow, for example through advanced holistic data analytics. First, companies can combine data mining with business intelligence to achieve better results. Second, progress in this area will enable predictive and prescriptive analytics through better processing of historical and current data, which is a direct way to refine the data strategy.

Virtualization In Multiple Clouds

The modern data analytics stack owes its scalability and reliability to cloud technology. However, companies often work with several cloud providers, which causes two problems. First, the providers do not share secure tunnels or domain layers, so there are no direct connections between them. Second, some data is subject to strict local or regional storage requirements.

All this pushes many companies toward virtual multi-clouds, which let them combine clouds from their most trusted providers securely and efficiently. The virtual cloud sits on top of both private and public clouds.

In addition, double virtualization is also of interest. It can be implemented in two ways:

- Creating a consensus metasystem whose layers provide control, observability, and discovery capabilities.

- Virtualizing multi-cloud resources through a single orchestration layer.
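The second approach, a single orchestration over several clouds, can be sketched as one scheduler that resolves task dependencies regardless of which provider runs each task. The minimal sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+); the task names, cloud labels, and the `run_on` executor are hypothetical, and a real orchestrator such as Apache Airflow adds scheduling, retries, and monitoring on top of this idea.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks, each pinned to a hypothetical cloud backend.
TASKS = {
    "extract_crm":     {"cloud": "aws",   "deps": []},
    "extract_billing": {"cloud": "azure", "deps": []},
    "join_customers":  {"cloud": "gcp",   "deps": ["extract_crm", "extract_billing"]},
    "publish_report":  {"cloud": "aws",   "deps": ["join_customers"]},
}


def run_on(cloud: str, task: str) -> str:
    """Stand-in for a cloud-specific executor; a real one would call that provider."""
    return f"{task}@{cloud}"


def orchestrate(tasks: dict) -> list:
    """One orchestrator orders all tasks by dependency, across every cloud."""
    graph = {name: spec["deps"] for name, spec in tasks.items()}  # node -> predecessors
    execution_log = []
    for name in TopologicalSorter(graph).static_order():
        execution_log.append(run_on(tasks[name]["cloud"], name))
    return execution_log


execution_log = orchestrate(TASKS)
```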

Value as Key Emphasis

Companies rarely get 100% of the possible business value out of their data, so the technology can evolve toward closing that gap. For example, business processes typically involve many specialists and teams, which means each group needs its own data management tools.

Another way to add value is to build low-code or no-code tools. Such products are easy to manage and deploy, which makes the technology pleasant to use for companies and their employees. Integrating and unifying data stacks would also help improve productivity and reduce costs.

Flexibility and Integrity

For future technology to be truly useful, specialists should keep simplifying integration and increasing the reliability and flexibility of the stack. First, the data flow should be closed into a loop: data enters the warehouse through ETL and ELT and flows back out to operational tools through reverse ETL. This can improve engineering productivity and data quality. After that, working with data lineage, semantics, statistics, and metrics will become much more efficient.

Open Source Strategy

Most modern data companies start with open source and eventually move to the cloud, which suggests that the future of the technology lies in working closely with both. This approach maximizes user engagement and makes the stack more convenient for businesses. And since venture capital investors are paying more attention to startups with open-source strategies, this trend becomes even more important.

Focus on Speed

The importance of speed is easy to see from the history of the data stack. First, experts abandoned Hadoop for Spark because of its better performance. The same happened when Redshift lost ground to Snowflake. And Firebolt is already drawing a lot of attention for its speed potential. The evolution of the data stack will therefore clearly move toward speed optimization.

More SQL in Data Engineering

SQL is indispensable in data management: it is simple, understandable, and based on widely adopted standards, which is why it is the most common language in the data stack. More systems and platforms will likely support it in the future. Today SQL is used mostly for queries, but it will increasingly be applied to predictive analytics as well.
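As a small illustration of SQL reaching into predictive territory, the sketch below fits a least-squares trend line using only standard SQL aggregates and then uses it for a one-step forecast. It runs in SQLite through Python's standard `sqlite3` module; the `monthly_sales` table and its values are hypothetical, and this is a simple linear trend rather than the machine learning features some warehouses provide.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE monthly_sales (month INTEGER, revenue REAL)")
# Hypothetical data: revenue grows by 10 per month.
conn.executemany(
    "INSERT INTO monthly_sales VALUES (?, ?)",
    [(1, 100.0), (2, 110.0), (3, 120.0), (4, 130.0)],
)

# Ordinary least squares from plain aggregates:
#   slope     = (n*sum(xy) - sum(x)*sum(y)) / (n*sum(x^2) - sum(x)^2)
#   intercept = (sum(y) - slope*sum(x)) / n
FIT_SQL = """
WITH s AS (
    SELECT COUNT(*) AS n, SUM(month) AS sx, SUM(revenue) AS sy,
           SUM(month * revenue) AS sxy, SUM(month * month) AS sxx
    FROM monthly_sales
),
fit AS (
    SELECT (n * sxy - sx * sy) * 1.0 / (n * sxx - sx * sx) AS slope, n, sx, sy
    FROM s
)
SELECT slope, (sy - slope * sx) / n AS intercept FROM fit
"""
slope, intercept = conn.execute(FIT_SQL).fetchone()
forecast_month_5 = intercept + slope * 5
```

With this data the fitted line is revenue = 90 + 10 × month, so the forecast for month 5 is 140.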

Final Words

To sum up, the technology has come a long way and shows no sign of stopping. After Snowflake's enormous commercial success, the stack kept growing rapidly. Its path holds some difficulties and many prospects, and its evolution is well worth following!

And if your project needs such technology, be sure to ask Jelvix for a consultation: our specialists will quickly offer options for tackling the task and bringing the desired result to life. Our clients value the team's professionalism and its sensible implementation of analytical systems that fully meet the requirements of each business.

In addition, our specialists can not only build an analytical platform with well-designed dashboards and the right business metrics but also extend it with additional functionality. Your staff will be able to manage datasets through web applications, improve data quality, and verify and test data integrity.

Contact Jelvix now — let’s find a great solution for your business together!
