Today, data lies at the core of most business processes. Sites and applications process users’ information to provide better services, give personalized insights, form predictions, and make responsible decisions.

According to Gartner, poor data quality costs organizations between $9 million and $14 million a year. Even pre-seed startups waste about $18,000 per year – and many of them don’t even use that much data. No matter what level your business is at, poor data quality will quickly create problems if left unaddressed.

In this guide, we’ll define data quality, go over the challenges of data quality management, and show how to solve them. You’ll learn which data can be considered high-quality and how to eliminate poor-quality records.

Data Quality Management Definition

Data quality management is a process that involves sorting through data, determining the criteria for high-quality information, and developing strategies and tools for eliminating data that fails to meet these requirements.

Data quality criteria always derive from specific business objectives. Therefore, data quality specialists should be directly involved in conversations about business challenges and priorities. Your data team should be aware of business priorities, needs of end customers, and current problems.

Why is Data Quality Important?

The results of proper data quality management are obvious both to the team and end-users. Investments in this aspect of software development bring immediate results. Here are just a couple of benefits.

Increased Data Accuracy Leads to Better Insight

Your system can’t be reliable if it’s powered by faulty data. Predictions, insights, and personalization will contain mistakes – in fact, you would be better off with no data at all than with wrong data.

- Accurate data minimizes misunderstanding: you avoid conflicts, technical issues, and even lawsuits. In some fields, a mistake only produces a wrong prediction (like a navigation app telling a user to take a wrong turn), while in others, data flaws can have disastrous consequences. In healthcare, data mistakes can lead to failed transplants and, ultimately, a patient’s death.

- Quality data provides more information. It should correspond to specific criteria – so the business doesn’t collect information just for the sake of it, but knows how to put each byte to use. If customers don’t fill forms completely, the system should have ways to motivate users to provide complete information.

- You can make relevant business decisions. Even good statistical and demographic data is useless if it’s too old. Society and its trends are constantly changing, so insights from 2015 won’t do much good in 2023. With a good data quality management system in place, you’ll be able to update data on a regular basis and easily review it at any moment.

- A company can collect only valid data. A data quality management system helps teams set the criteria for considering information valid. You can set requirements (like a minimum password length), define formats (for instance, supporting both 24- and 12-hour time), and set rules for the content. Information that doesn’t meet these criteria will not be stored.

- You can operate with a unified data framework. Data versions, formats, and structures should be consistent across all databases and storage systems. If that’s the case, you’ll be able to synchronize data, compile automated reports, and share insights across departments. Keeping track of formats and data structure is hard if you don’t have a consistent data quality management system in place.

Data quality management is essential – there are no exceptions. It doesn’t matter which field you are working in, or if you’ve adopted big data or not – you are still using tons of data. Even simple registration information or CRM reports should be monitored and classified – otherwise, you are setting the company up for costly mistakes.

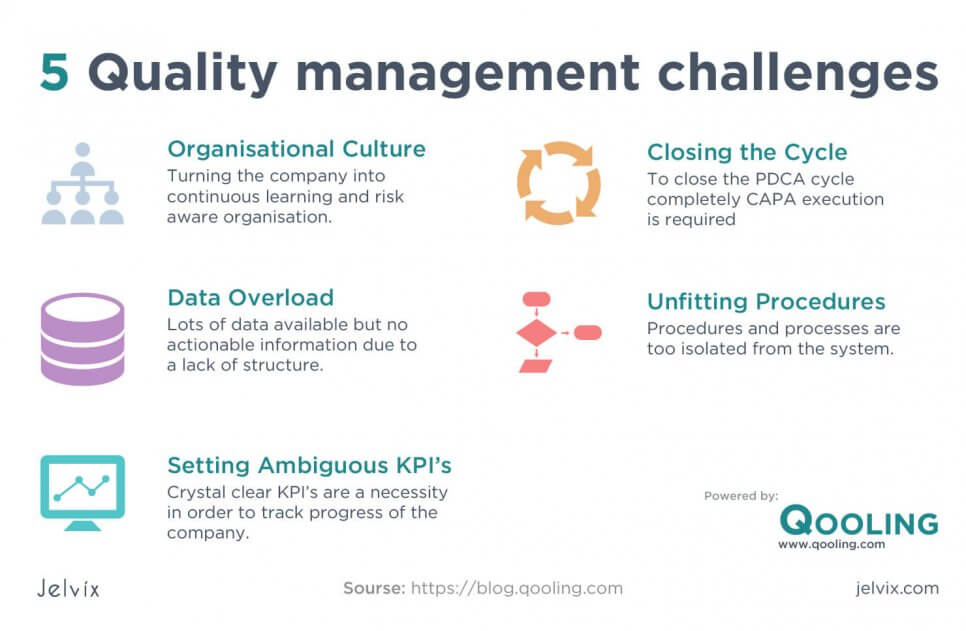

Challenges of Data Quality Management

Data quality management presents two main challenges. The first one is dealing with the lack of enthusiasm – many teams don’t acknowledge just how much they actually rely on the information.

For many, focusing on data quality will not seem like a priority – until a crisis arrives. Communicating the importance of data quality to your team and stakeholders is the first challenge.

The second issue is the lack of established practices. Although there are guides and best practices available online, the execution differs depending on the business structure, its software system, and the industry.

The thing is, data quality management requires preparation. Here’s a list of just a few practical challenges that we usually see teams going through.

Dividing Responsibilities

Data quality management processes fall into three sections – operational, tactical, and strategic activities. These pillars require different competencies, and constant cooperation – because one stage depends on another.

First, you need to determine who is responsible for strategic activities – this person will define the objectives of the data quality management. Such an expert should have a deep understanding of business processes, industry, and the company’s challenges. Technical expertise is important, but it’s less relevant.

The next step is dividing tactical activities. Once the strategy is built, you need to plan how data quality management will be executed. You need people who will set smaller objectives, articulate tech challenges, and choose data analytics tools. Here, technical expertise is more important than strictly business-related knowledge, although ideally, you need a mix of the two.

Finally, you need a technical team that will organize and manage operations. A team of engineers and architects should set up the information architecture, go through record management practices, and update database security. You will need to build new tools and features in your product and create tools for internal use – or at least integrate existing ones. For operational processes, technical expertise is essential.

Managing Cross-Functional Teams

As you can see, data quality management deals with many competencies. Business and management experts work closely with the technical team. In a tech team itself, there is quite a variety of skills as well. You need data architects, solution architects, engineers, and testers – all of them should communicate well and understand each other’s progress.

When teams manage such a cross-functional process, they need a lot of documentation. Requirements, specifications, quality metrics examples, and reporting processes – all this information should be created at the beginning of the process.

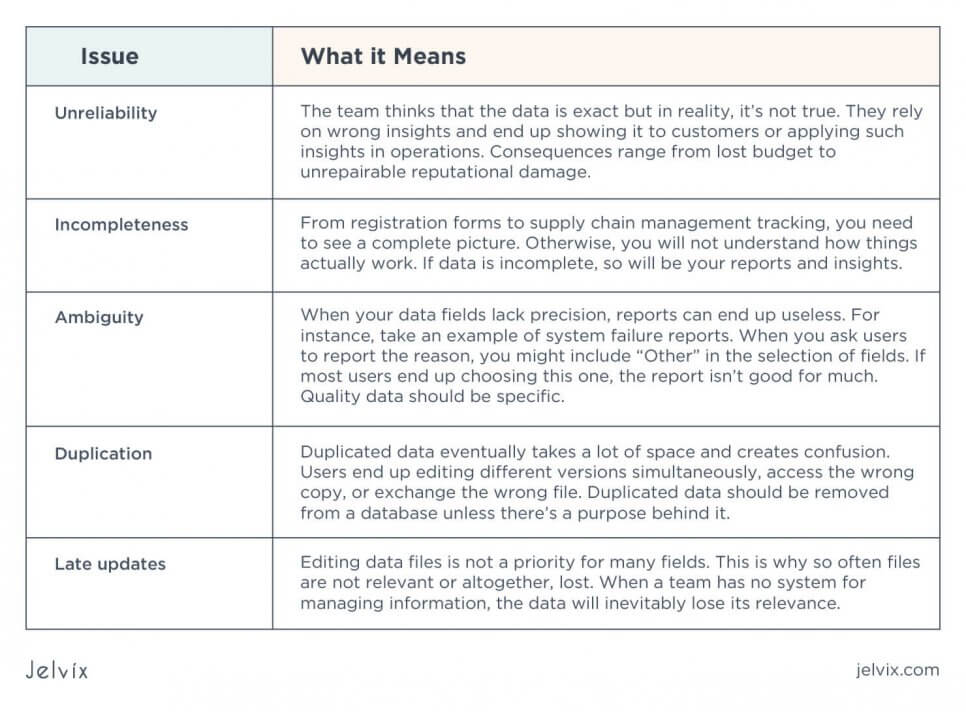

Recognizing Data Quality Issues

Experience is a key criterion in choosing a data analytics team. The theory may seem simple at first, but there are many nuances underneath. For one, how does a team determine if the data is good or not? Setting unrealistically strict criteria will just deprive the company of data altogether. On the other hand, having no standards whatsoever is even worse – you are setting yourself up for data errors.

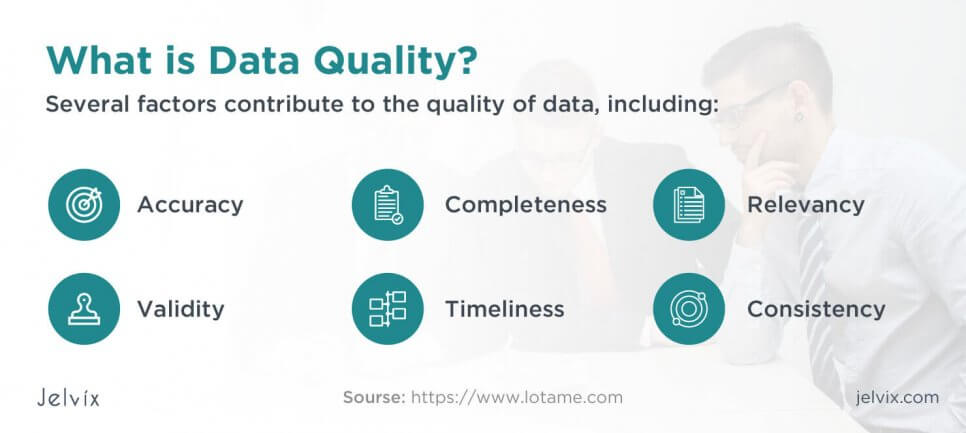

What is Data Quality?

Data quality issues vary widely, but they can still be grouped into a few main categories. Here’s a short rundown of problems that you should watch out for.

To deal with these and other challenges, you need an experienced team who knows the fine line between valid and irrelevant data. Using “the more, the better” as a guiding principle can lead to a crisis, but deleting everything will just deprive you of insights altogether. With experience, data engineers know when it’s still possible to “squeeze” utility out of files and when it’s best to delete them.

Maintaining Organization and Discipline

Another problem with data quality management is that there’s really no end in sight. It might feel like you are constantly jumping through hoops without getting anywhere. The team gets discouraged, and members are no longer enthusiastic about applying best practices. The company circles the drain, using the same outdated data management practices.

This is why, along with experienced tech experts, you also need managers and visionaries on your side. Make sure you have bigger-picture team members who can zoom out of smaller tasks and make significant changes. All progress and challenges should be clearly communicated to the team so that everyone stays motivated and can keep up.

Monitoring Investments and Efforts

If your data quality management team doesn’t regularly review its own work, you will struggle to measure the results of your activities. Since data quality management KPIs aren’t immediately apparent, some teams ignore reporting altogether.

Experienced teams already know what variables matter in data quality management the most. They know which ones should be tracked as the first priority and which belong in the additional categories. Once again, data quality management is all about balance.

The Process of Data Quality Management

Now that you understand theoretical concepts and challenges, it’s time to discuss the actual “How-to” part. Where do you start data quality management and what stages should you follow to monitor information effectively? What is good data quality? Let’s see.

Analysis of Poor Data Characteristics and Performance

To understand where to start the data management process, the first thing you need to do is to define the consequences of using poor data. It will help you understand your priorities. So now, you will define the areas where the presence of low-quality data will do the most damage.

- A data quality analyst starts by analyzing issues reported by users and testers. The goal is to understand which of these issues were caused by inaccurate, incomplete, or duplicate files.

- After an expert creates a list of issues, the team takes a second look and defines the gravest problems. All issues are graded by priority and distributed across the data quality management schedule.

- The team creates data quality requirements and data quality dimensions. You define what good data is supposed to look like – fields, formats, relations between objects.

Data Profiling

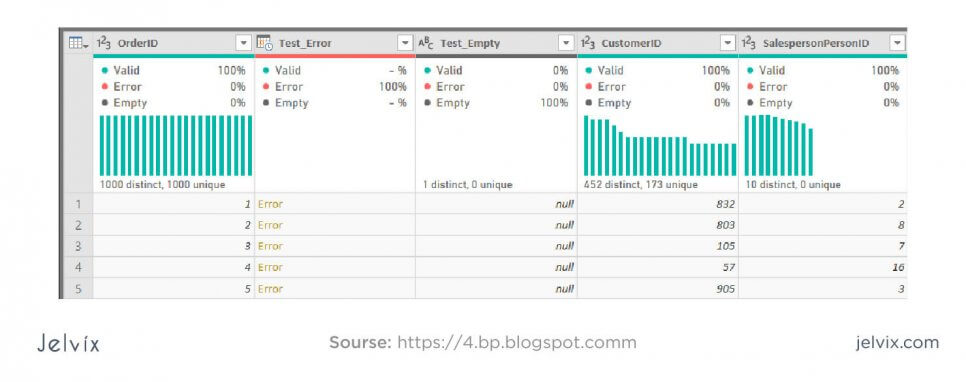

Before the team starts sorting high- and low-quality data, it helps to understand overall what information the company is dealing with. There are three types of discoveries that are useful here.

- Structure discovery. The team detects the patterns that systems currently use to store and maintain data. They determine the medians, ranges, and minimum and maximum values used thus far. An important benefit of this quantitative approach is that the results are easy to analyze.

- Content discovery. At this point, you’re no longer looking at general settings but evaluating the content itself. You run quantitative and qualitative analyses to detect wrong or empty values.

- Relationship discovery. The team investigates connections between folders, databases, and fields. You need to understand where your dependencies are, define essential fields, and reduce the number of cells, if possible. The goal is to store data that is truly necessary for business processes, not simply for the sake of it.

The aim of data profiling is to evaluate the current state of your data. Combined with the issue reports collected in the previous stage, it gives you an idea of how data flaws translate into system issues.
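Structure and content discovery can be illustrated with a minimal Python sketch. The records and field names below are hypothetical, and a real profiling run would read from your own storage and usually rely on a dedicated tool; the point is only to show what "minimum, maximum, median" and "empty values" look like in practice.

```python
from statistics import median

# Hypothetical sample records; the field names are made up for illustration.
records = [
    {"id": 1, "age": 34, "email": "ann@example.com"},
    {"id": 2, "age": None, "email": "bob@example.com"},
    {"id": 3, "age": 29, "email": ""},
    {"id": 4, "age": 51, "email": "dana@example.com"},
]

def profile_numeric(rows, field):
    """Structure discovery: min, max, and median of the non-empty values."""
    values = [r[field] for r in rows if r.get(field) is not None]
    return {"min": min(values), "max": max(values), "median": median(values)}

def count_empty(rows, field):
    """Content discovery: how many rows have a missing or blank value."""
    return sum(1 for r in rows if r.get(field) in (None, ""))

age_profile = profile_numeric(records, "age")
empty_emails = count_empty(records, "email")
empty_ages = count_empty(records, "age")
print(age_profile, empty_emails, empty_ages)
```

Note that `profile_numeric` assumes at least one non-empty value per field; an entirely empty column would need separate handling.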

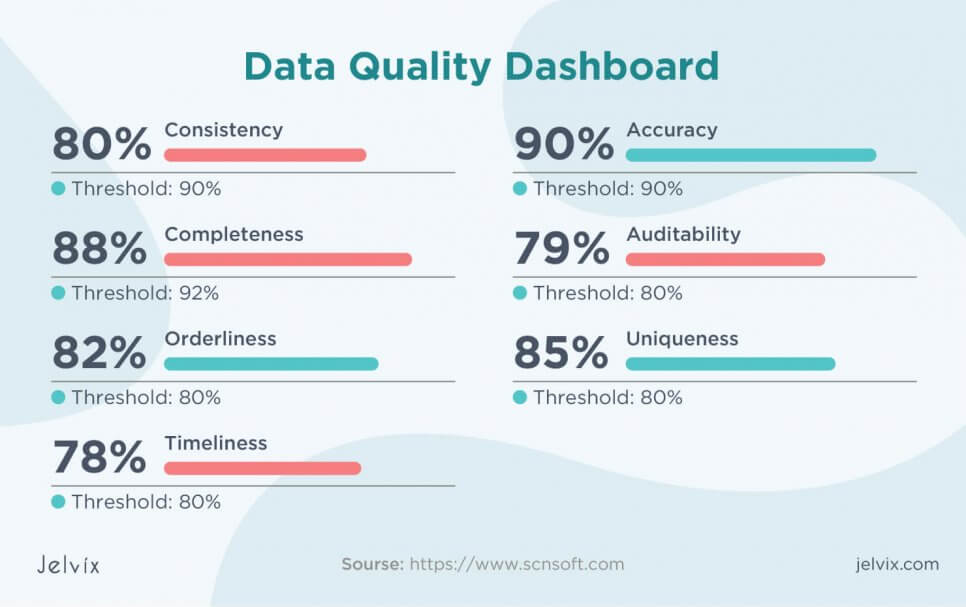

Understanding Data Metrics and Quality Criteria

The first two steps were targeting your current data situation. To actually start building a new framework, you need a deep understanding of the end result. Ideally, it’s an empirical analysis that’s easily quantified and reported.

To achieve this goal, analysts should come up with a framework for measuring data quality.

- Creating acceptability thresholds: data length, format, accuracy, and values should have specific thresholds. If an input falls outside the accepted range, it’s not stored in the database.

- Using measuring tools: the amount and quality of stored data can be measured automatically with available tools. Software like Integrate, Logstash, and Trifacta calculates the key metrics for databases and storage.

- Evaluating business impact. Analysts should predict how exactly storing data that corresponds to metrics will translate into business improvements. It will be just an estimate at this point, but it will provide the team and stakeholders with a clear understanding of data quality purposes.
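As a rough illustration of acceptability thresholds, here is a short Python sketch. The field names and limits in `THRESHOLDS` are assumptions made up for this example; real values would come from your own business requirements.

```python
# Hypothetical thresholds; real values come from your business requirements.
THRESHOLDS = {
    "username": {"min_len": 3, "max_len": 20},
    "age": {"min_value": 13, "max_value": 120},
}

def within_thresholds(field, value):
    """Return True if the value falls inside the accepted range for the field."""
    rule = THRESHOLDS[field]
    if "min_len" in rule:
        return rule["min_len"] <= len(value) <= rule["max_len"]
    return rule["min_value"] <= value <= rule["max_value"]

def acceptance_rate(field, values):
    """Share of values that pass the check, a simple metric to track over time."""
    passed = sum(1 for v in values if within_thresholds(field, v))
    return passed / len(values)

print(within_thresholds("username", "ab"))        # too short, so rejected
print(acceptance_rate("age", [10, 30, 50, 130]))  # two of four values pass
```

Tracking the acceptance rate per field gives the team a number to report on, which ties the thresholds back to the measuring step.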

Setting Up Data Standards and Management Rules

At this point, the team has a deep enough understanding to come up with data standards. They should provide detailed data management plans and estimates, and document new best practices.

- Define standards on data formatting, entry, exchange, and representation.

- Create policies on updating and storing meta tags. Determine which fields and terms will be used to characterize data. They should cover business, operational, and technical purposes. For a more precise description, we even recommend separating tags for data in internal use from tags for data that’s visible to the end user.

- Determine the rules for data validity. You need to know which formats and data entries are considered valid. The crucial part of this step is defining essential fields – the ones that, if left empty, will block the storage of the entire form.

Finally, the team should describe practices for data tracking, from tools to log attributes. By the end of this stage, you should already have a full wiki of the best data management practices.
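The validity rules described in this stage could be sketched in Python as follows. The required fields, the password length, and the `meeting_time` field are hypothetical and only serve as examples; the accepted time formats mirror the 24- and 12-hour case mentioned earlier.

```python
from datetime import datetime

REQUIRED_FIELDS = ("email", "password")   # hypothetical essential fields
MIN_PASSWORD_LENGTH = 8                   # hypothetical requirement
TIME_FORMATS = ("%H:%M", "%I:%M %p")      # 24-hour and 12-hour notation

def parse_time(text):
    """Try each accepted time format; return None if none of them match."""
    for fmt in TIME_FORMATS:
        try:
            return datetime.strptime(text, fmt).time()
        except ValueError:
            continue
    return None

def validate_form(form):
    """Return a list of problems; an empty list means the form can be stored."""
    errors = []
    for field in REQUIRED_FIELDS:
        if not form.get(field):
            errors.append(f"missing required field: {field}")
    password = form.get("password") or ""
    if password and len(password) < MIN_PASSWORD_LENGTH:
        errors.append("password too short")
    meeting = form.get("meeting_time")
    if meeting and parse_time(meeting) is None:
        errors.append("unrecognized time format")
    return errors
```

Returning a list of problems instead of a single yes/no answer makes it easier to tell users which fields to fix, and to log which rules fail most often.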

Practical Execution

Laying out the theory is perhaps the most important and the most difficult part of data quality management. Once you crystallize your understanding of what your data quality checks should achieve, getting to that point is much easier.

Here is a brief list of technical measures that are taken during data quality implementation:

- Database restructuring and editing: the audit may show errors in your database design and architecture. A database developer can restructure your data schema, change the relations between the data cells, eliminate some fields altogether, etc.

- Changes in information architecture: the team can change how the software handles data by updating the ways in which outputs are uploaded to the system, the search algorithms, and the input functionality.

- Updates of records management. A team can come up with an automated system that evaluates records quality. Also, you might have to reconsider the quantity and quality of stored records.

The goal of making these changes is to adapt your database and information architectures to business goals. The results should be tangible – fewer data flaws and system crashes, better personalization insights, and a higher percentage of completed forms.

Data Monitoring and Continuous Updates

The process of analysis and clean-up never stops. For as long as the company operates, new information will accumulate. It’s important to ensure that all new inputs and outputs conform to the standards. To make this continuous maintenance easier, there are several paths that companies could take.

- Automation: using scripts and tools to analyze data, track performance, and detect issues saves time and allows focusing on other data challenges.

- Merging similar datasets: to decrease the workload, the team should review datasets and identify similar ones. Grouping them by purpose and content will make updates and maintenance much faster and easier.

- Artificial enhancement: the team can send extra data from publicly available services to the database to enrich the range of insights.
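To give a sense of what an automated check might look like, here is a minimal Python sketch that summarizes a batch of new records. The function names, the `email` key, and the sample batch are assumptions for illustration only; a production setup would typically run a check like this on a schedule and send the report to a dashboard or alerting tool.

```python
def find_duplicates(rows, key):
    """Group rows by a normalized key and return the keys that occur more than once."""
    seen = {}
    for row in rows:
        normalized = str(row[key]).strip().lower()
        seen.setdefault(normalized, []).append(row)
    return {k: v for k, v in seen.items() if len(v) > 1}

def quality_report(rows, key, required):
    """Summarize a batch: size, duplicate keys, and share of complete rows."""
    complete = sum(
        1 for r in rows if all(r.get(f) not in (None, "") for f in required)
    )
    return {
        "rows": len(rows),
        "duplicate_keys": sorted(find_duplicates(rows, key)),
        "complete_share": complete / len(rows) if rows else 1.0,
    }

# Hypothetical batch of newly collected records.
batch = [
    {"email": "Ann@example.com", "name": "Ann"},
    {"email": "ann@example.com ", "name": "Ann B."},
    {"email": "bob@example.com", "name": ""},
]
report = quality_report(batch, key="email", required=("email", "name"))
print(report)
```

Normalizing the key (trimming whitespace, lowercasing) before comparing is a deliberate choice here: it catches records that are the same in practice but differ in formatting.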

The key to long-term success is experience and rich documentation. If the team knows how to establish and record long-term practices, you will have no issues with maintaining these practices for years after their establishment.

Conclusion

The complexity of data quality management lies not so much in the technical execution as in the conceptual work. Product owners, managers, stakeholders, and the team should be on the same page to get long-term results. The goals of the data quality team should be synchronized with the business objectives.

The process unites the competencies of many cross-functional teams. Without experience in planning, analysis, and execution, it’s easy to get confused, measure the wrong deliverables, and end up not getting results. Our team has worked with data quality management projects in different industries and with businesses of different scales, and each project had different specifics.

If you’d like our data quality management team to take a look at your project, just drop us a line. We will gladly share our analytical and technical expertise to create the data quality management framework for your project.
